An engineer’s guide to the CFD hardware galaxy

A tower, it says, is about the most massively useful thing an engineer can have.

Clark F. Douglas

In the last decade, computing hardware for simulation software has greatly diversified. Engineers, IT specialists and companies struggle to find the devices best suited to their use cases. So, what is the right choice in an ever-expanding hardware universe? Although there is no single answer to such a generic question, I tried to find one myself on my journey through the CFD hardware universe…

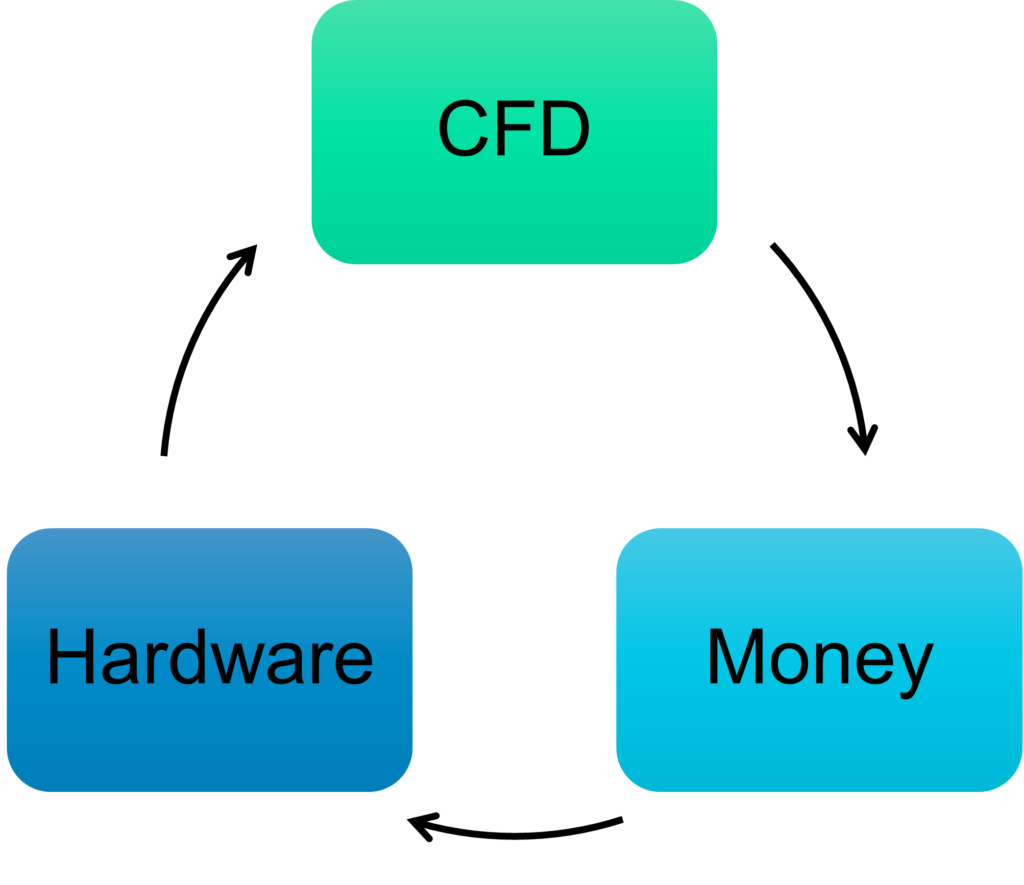

The Purpose of Salary from a CFD Engineer’s Perspective

When the sun sets, the CFD work is done. Taking a last, pleased glance at the (currently) falling residuals of my still-running simulation, I leave my workplace. But what does the CFD engineer do at the end of the working day?

Well, on some occasions, I fire up my private workstation. As a tech enthusiast, I spend some of the money I earn with CFD on my private hardware… so that I can do some CFD!

Simply buying any CFD hardware and running whatever comes along is not my style. It risks ending up with a setup that is either ineffective or cost-inefficient. For me personally, this might mean losing some time and some money, but for companies such a failure can be of a totally different order of magnitude.

I carefully weigh the following criteria before I decide on CFD hardware:

- Absolute computing power. Will the component of my choice make my setup faster?

- Efficiency. I want my actions to have a limited impact on the environment, and a limited impact on my electricity bill. Besides that, lower efficiency leads to higher cooling demands, most likely with additional costs and more noise.

- Budget. Well, there is still a budget limit. I still ask myself whether a certain performance gain is worth the investment from my point of view. (“Honey, the computer does NOT work without this!”)

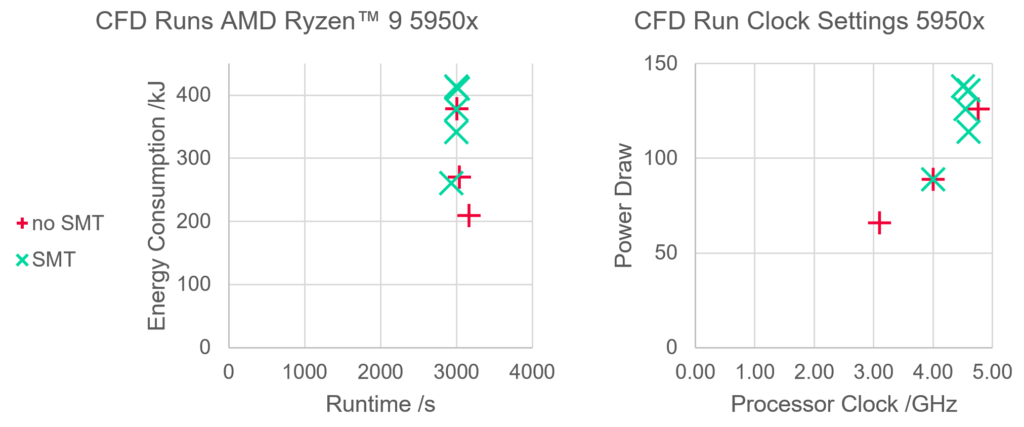

My hobby does not stop after buying and assembling CFD hardware. Settings are as important as the setup itself. After spending a micro-fortune, I invest hours in fine-tuning the clean, cool and capable computer. To verify my build, I run the same CFD simulation over and over again, changing the processor clock speed and monitoring runtime and power draw. I will not bother the gentle reader with trifles like PBO, SMT, PPT, FCLK… in this blog, but the essence was:

With free benchmark software, the score difference between high-power and low-power settings is huge: 13k / 17k / 19k in Geekbench 5 in Eco / Standard / Overclocked mode. But not for CFD. Apart from extreme power-saving settings, I could not achieve any change in my runtime by changing the processor clock. The impact on energy consumption, however, is enormous: idle, in benchmark software and in CFD alike. And energy consumption not only harms the environment but also your budget.
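The essence of this experiment is that the energy of a run equals its average power draw multiplied by its runtime. The following Python sketch illustrates that arithmetic; the power and runtime values are hypothetical placeholders chosen to mimic the observation (runtime nearly flat, power draw rising), not the author’s measured data.

```python
from dataclasses import dataclass


@dataclass
class Run:
    mode: str
    avg_power_w: float  # average system power draw during the run, in watts
    runtime_s: float    # wall-clock runtime of the same CFD case, in seconds

    def energy_kwh(self) -> float:
        # Energy = power x time; 1 kWh = 3.6e6 W*s
        return self.avg_power_w * self.runtime_s / 3.6e6


# Hypothetical measurements: runtime barely changes, power draw does.
runs = [
    Run("Eco", avg_power_w=150.0, runtime_s=3600.0),
    Run("Standard", avg_power_w=220.0, runtime_s=3540.0),
    Run("Overclocked", avg_power_w=300.0, runtime_s=3510.0),
]

for r in runs:
    print(f"{r.mode:<12} {r.runtime_s / 60:5.1f} min  {r.energy_kwh():.3f} kWh")
```

Because the runtime hardly moves, the energy per run follows the power draw almost one-to-one.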

Go big or go budget with a new CPU?

Well, with a single simulation as a test case, I cannot be sure that my methodology is beyond all doubt.

And again, my tiny little CFD hardware galaxy is no different from the industrial CFD engineer’s universe, just on a different absolute scale. If I make a wrong decision, it costs a few thousand bucks; if you make a wrong decision in a professional industrial CFD environment, it costs roughly V_Galaxy/V_SolarSystem × 1000 bucks.

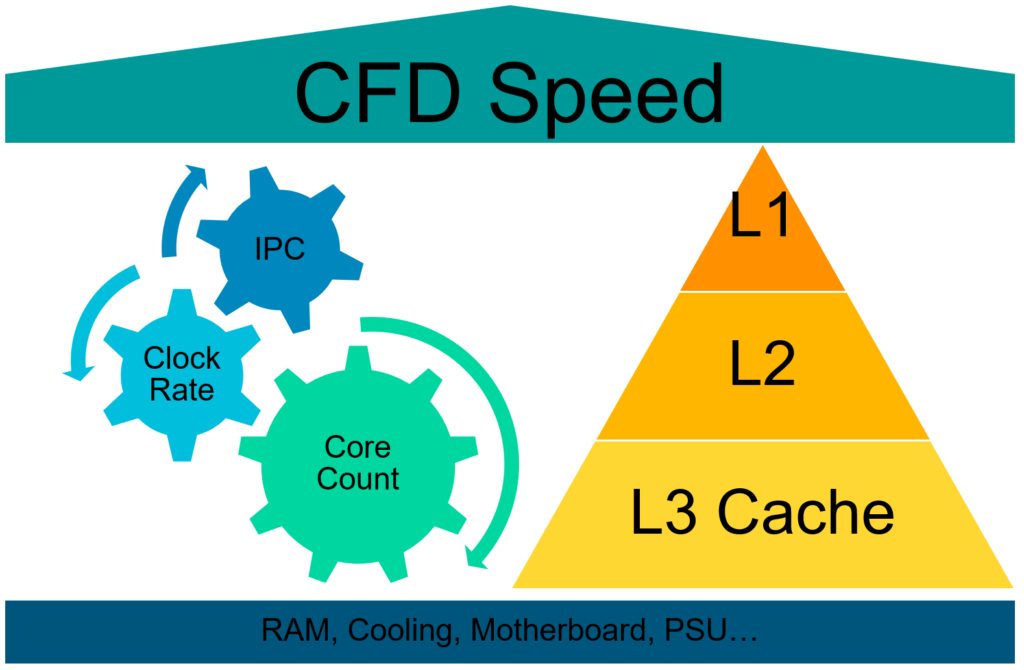

Hence, professional CFD hardware benchmarks run several standardized cases that place different demands on every hardware feature. A wide variety of mesh sizes and physics ensures that all characteristics are explored. Fast CFD codes like Simcenter STAR-CCM+ rely on two pillars of computing, each with its own influence on the duration of the simulation:

- the raw computing speed. For CPUs, this is determined by core count, clock rate and instructions per cycle (IPC).

- memory size, memory speed, memory bandwidth.
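One common way to see how these two pillars interact, and why raising the clock in the experiment above barely changed the CFD runtime, is the roofline model (a general performance model, not something taken from this post): attainable throughput is capped either by peak compute or by memory bandwidth times arithmetic intensity (operations per byte moved). In this minimal Python sketch, “FLOPs per cycle per core” stands in for IPC, and all hardware figures and the intensity value are hypothetical assumptions.

```python
def peak_gflops(cores: int, clock_ghz: float, flops_per_cycle: float) -> float:
    # Raw computing speed: core count x clock rate x work per cycle per core
    return cores * clock_ghz * flops_per_cycle


def attainable_gflops(peak: float, bandwidth_gbs: float, intensity: float) -> float:
    # Roofline: performance is limited by compute OR by memory traffic,
    # whichever is lower. intensity = floating-point ops per byte moved.
    return min(peak, bandwidth_gbs * intensity)


# Hypothetical desktop CPU and solver kernel (assumed values, not measurements)
BANDWIDTH_GBS = 80.0  # memory bandwidth in GB/s
INTENSITY = 0.25      # FLOPs per byte; memory-heavy kernels tend to be low

for clock in (3.0, 4.0, 5.0):
    peak = peak_gflops(cores=12, clock_ghz=clock, flops_per_cycle=16)
    got = attainable_gflops(peak, BANDWIDTH_GBS, INTENSITY)
    print(f"{clock:.1f} GHz: peak {peak:.0f} GFLOP/s, attainable {got:.0f} GFLOP/s")
```

With these assumptions every clock setting lands on the same memory-bound ceiling, which is consistent with the observation that higher clocks mainly raise power draw, not CFD speed.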

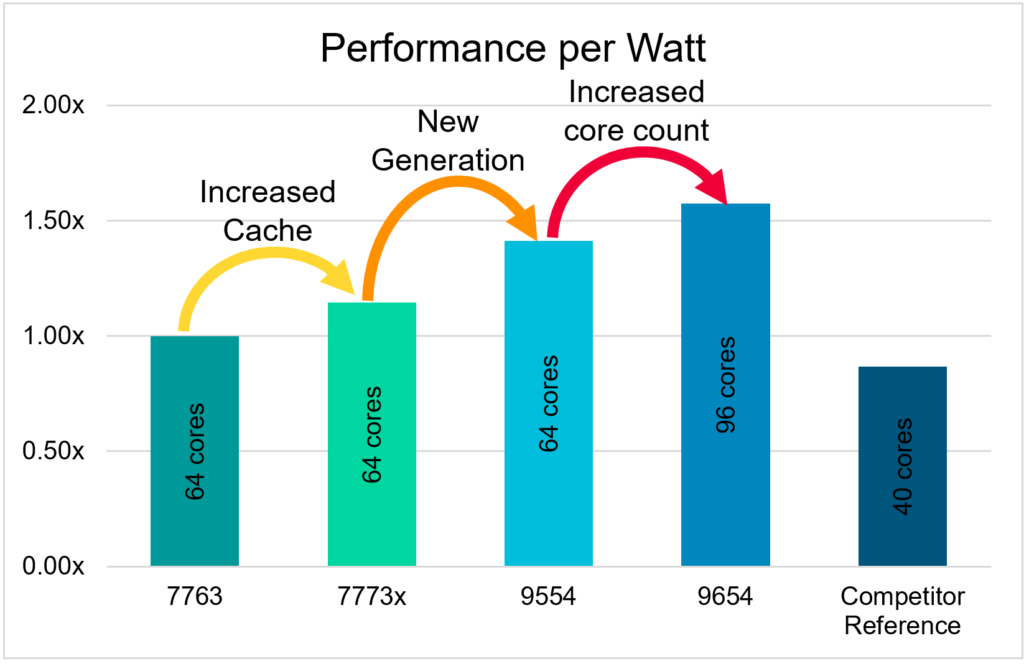

The greatest relative speedups can be realized by maximizing the cache memory. Cache is memory located within the processor: the closer it is to the actual computing cores, the faster it is. But the closer you get, the less space there is, so large caches are hard to fit there. Cache typically comes in three levels, L1 to L3: close to far, fast to slow.

Processors with larger caches can more than compensate for lower core counts or lower clock rates in CFD. This trend can be seen not only in laptop and desktop CPUs but also in server processors like AMD EPYC®. But raw speed is not the only criterion these days. As I can tell from my bill, the cost of energy contributes greatly to the total cost of a simulation. New processor generations can cut that cost, as more cores and more cache do not necessarily mean more energy consumption. Or, to put it the CFD way: less energy for the same simulation, no matter whether you invest the same power for a shorter period or less power for the same duration as before.

Clearly, although CPUs are the oldest star in the CFD hardware universe, CPU-based HPC remains a crucial powerhouse for CFD simulation, with innovative technology that even leads to superlinear scaling.
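Superlinear scaling means that N times the hardware gives more than N times the speed, i.e. a parallel efficiency above 1. A common explanation is that splitting the mesh across more nodes shrinks each partition until more of its working set fits into cache. The Python sketch below shows how speedup and efficiency are computed; the timings are hypothetical, not measured Simcenter STAR-CCM+ results.

```python
def speedup(t_ref: float, t_n: float) -> float:
    # How many times faster the run is compared to the reference run
    return t_ref / t_n


def parallel_efficiency(t_ref: float, n_ref: int, t_n: float, n: int) -> float:
    # Speedup divided by the hardware ratio: 1.0 is ideal linear scaling,
    # values above 1.0 indicate superlinear scaling
    return speedup(t_ref, t_n) / (n / n_ref)


# Hypothetical wall-clock times (s) for the same case on 1, 2 and 4 nodes
timings = {1: 1000.0, 2: 480.0, 4: 230.0}

for nodes, t in timings.items():
    eff = parallel_efficiency(timings[1], 1, t, nodes)
    label = "superlinear" if eff > 1.0 else "linear or sublinear"
    print(f"{nodes} node(s): speedup {speedup(timings[1], t):.2f}, efficiency {eff:.2f} ({label})")
```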

Is CFD just a game? Are GPUs up to it?

At some point, upgrading your CFD hardware may hit a “wall of compatibility”. I already upgraded from the previous CPU generation, but I cannot find an excuse for replacing motherboard, CPU and RAM at once, so there is only one way out!

When I started with CFD, nobody thought about solving the Navier-Stokes equations on a graphics card. Graphics cards are for gaming! To compute images very fast, graphics cards (GPUs) have always used a vast number of calculation units: very fast, but too specialized. For some years now, they have been generalized for GPGPU, General-Purpose computing on GPUs. Every manufacturer still has its own nomenclature for these computing cores.

Even nowadays, consumer graphics cards are hardly suitable for industrial-size CFD simulations. But why?

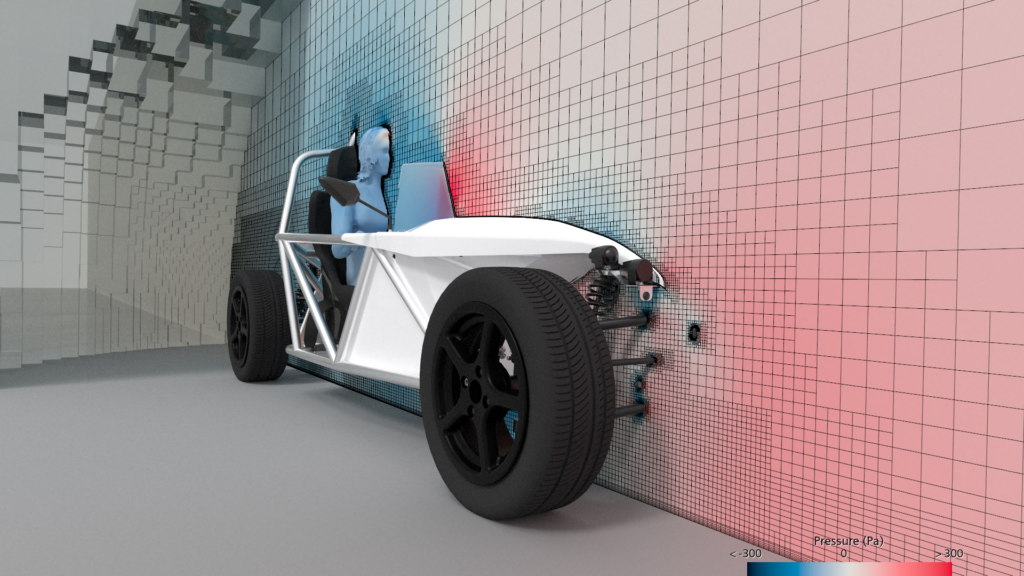

The mesh discretization of the flow field directly determines the amount of data that must be solved iteratively. This discretization, with all its fluid quantities like pressure, velocities in three directions, temperature and more, is a lot of data. As a rule of thumb, I like to say that every million cells in my mesh need 2 gigabytes of memory. Just imagine the following case:

- Mesh size is 30 million cells, so 60 GB of memory is required.

- One iteration takes 60 seconds.

- One simulation takes 1000 iterations.

Memory consumption: the major bottleneck for GPGPU-accelerated CFD

If there is insufficient memory, the simulation has to move data from the fast memory to another device. Shuffling these 60 GB back and forth in every iteration takes a lot of additional time, even on the fastest systems. And this is a small hydrocyclone case, not an external automotive aerodynamics case.

Why are consumer graphics cards hardly any good for CFD nowadays? Well, point me towards a consumer graphics card with 60 GB of memory! Meanwhile, you can “easily” fit 128 GB of RAM into a consumer desktop, or 64 GB into a laptop…

But in an industrial CFD environment you want to go big anyway. As CFD hardware advances, multi-GPU workstations and servers have reached a few hundred GB of GPU memory. That is still an order of magnitude below the memory capacity of CPU-based CFD hardware, but suitable for CFD. As GPUs do not work the same way CPUs do, the Simcenter STAR-CCM+ code is being ported to GPUs step by step, with an enormous speedup! With every new release, more physical models become GPU-executable. The result: several times less energy and less time for the same simulation, as one GPU replaces hundreds of CPU cores. Overnight Large Eddy Simulations on a single blade server become possible!
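The arithmetic behind this example can be sketched in a few lines of Python, using the “2 GB per million cells” rule of thumb from the text. The 32 GB/s link bandwidth is a hypothetical assumption (on the order of a PCIe 4.0 x16 link), and the swap estimate is only a lower bound because it ignores latency and the speed of whatever device holds the swapped data.

```python
GB_PER_MILLION_CELLS = 2.0  # rule of thumb from the text


def memory_gb(cells_million: float, gb_per_mcell: float = GB_PER_MILLION_CELLS) -> float:
    # Memory needed to hold the discretized flow field
    return cells_million * gb_per_mcell


def total_runtime_h(iterations: int, seconds_per_iteration: float) -> float:
    # Pure solver time without any data swapping
    return iterations * seconds_per_iteration / 3600.0


def swap_overhead_h(data_gb: float, bandwidth_gbs: float, iterations: int) -> float:
    # Moving data_gb out and back in every iteration: 2 * data / bandwidth per iteration
    return iterations * 2.0 * data_gb / bandwidth_gbs / 3600.0


mem = memory_gb(30)                      # 30 million cells
base = total_runtime_h(1000, 60.0)       # 1000 iterations x 60 s
extra = swap_overhead_h(mem, 32.0, 1000) # assumed 32 GB/s link (hypothetical)
print(f"memory {mem:.0f} GB, runtime {base:.1f} h, swap overhead >= {extra:.1f} h")
```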

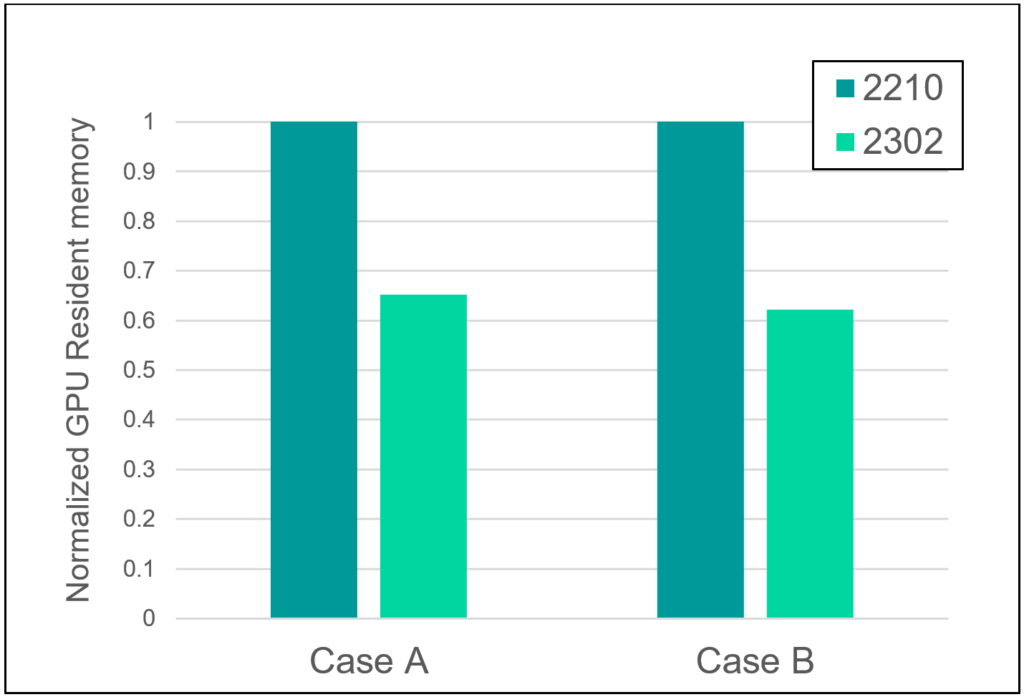

With the upcoming 2302 release of Simcenter STAR-CCM+, the computation requires up to 40% less memory. This makes more use cases executable on smaller GPUs and increases usability, changing the rule of thumb from “2 gigs per million cells” to 1.25 GB per million cells. An additional benefit: the runtime is also reduced by up to 10%.
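What the new rule of thumb means in practice can be sketched as the largest mesh that fits into a given amount of GPU memory. The GPU memory sizes below are hypothetical examples, and the sketch ignores any memory the GPU needs for other purposes.

```python
OLD_GB_PER_MCELL = 2.0   # previous rule of thumb from the text
NEW_GB_PER_MCELL = 1.25  # rule of thumb for release 2302 from the text


def max_cells_million(gpu_memory_gb: float, gb_per_mcell: float) -> float:
    # Largest mesh (in million cells) whose data fits into the GPU memory
    return gpu_memory_gb / gb_per_mcell


# Hypothetical GPU memory sizes in GB
for mem in (24.0, 48.0, 80.0):
    old = max_cells_million(mem, OLD_GB_PER_MCELL)
    new = max_cells_million(mem, NEW_GB_PER_MCELL)
    print(f"{mem:.0f} GB GPU: {old:.1f} M cells -> {new:.1f} M cells")

reduction = 1 - NEW_GB_PER_MCELL / OLD_GB_PER_MCELL
print(f"memory reduction per cell: {reduction:.1%}")
```

The ratio of the two rules of thumb corresponds to a 37.5% reduction, in line with the “up to 40%” figure.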

Internet of Things? CFD on Things! ARM processors take over!

Desktop CPUs and GPUs are not the most common computing devices. So many “intelligent” devices surround us daily; one may even be in your hand right now.

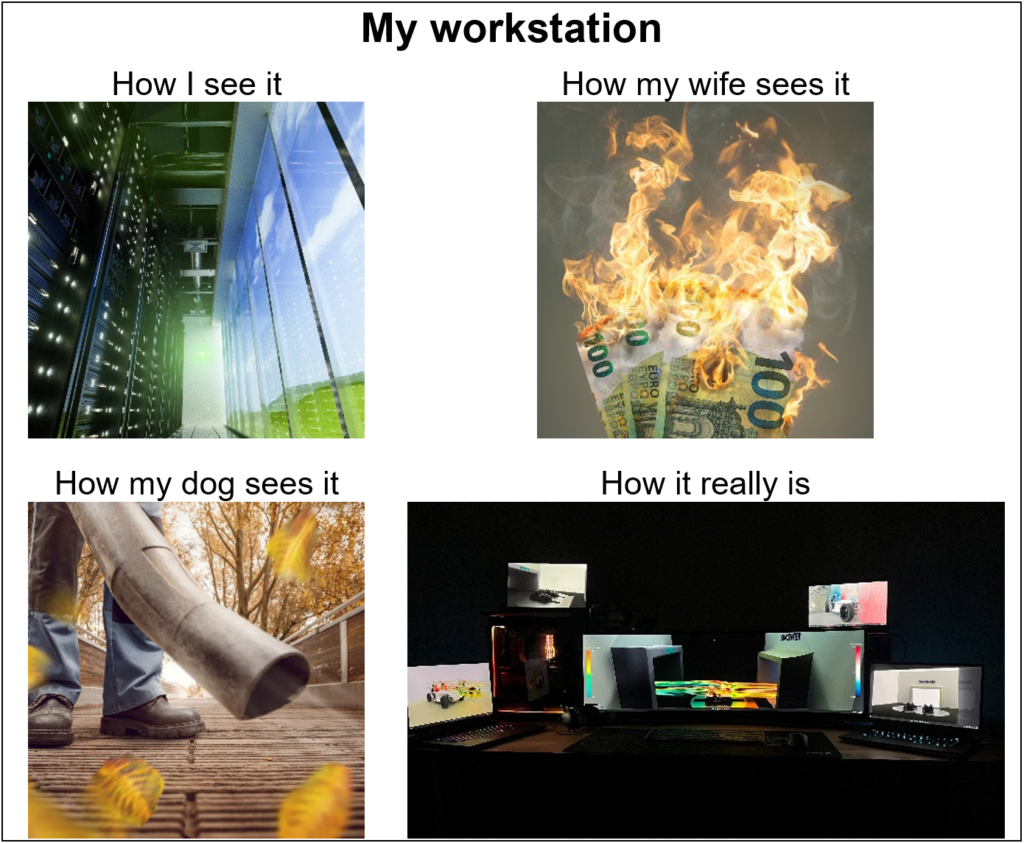

My wife does not understand why I change computer parts so often. And I don’t understand why her mobile phone was more expensive than her computer, whereas mine is more like a steam-powered telephone with an extendable antenna. I am not sure which has the bigger financial impact: my CFD hardware or her annual phone upgrades.

But this might instantly change on the very day CFD code becomes executable on mobile phones!

Just like mobile phones, “clever” microwaves, fridges and some servers use a different kind of processor called ARM. Whatever this abbreviation stands for by the time you read this blog, these processors differ from our laptop and desktop processors. Most laptop and desktop CPUs use an architecture called x86, which relies on so-called “CISC”, Complex Instruction Set Computer. Each instruction to the CPU can be seen as detailed information on what to do. Complex instructions can need several cycles to execute, which is reflected in the “Instructions per Cycle” (IPC) metric that, together with the clock rate, determines processor speed.

At the opposite end, there is the R in ARM, which stands for RISC, Reduced Instruction Set Computer. The idea is quite simple: make instructions so simple that one cycle completes one instruction, so IPC = 1. This approach leads to fewer and simpler instructions. Overall, there are a few more differences from a common CPU:

- Instructions are less complex.

- The number of different instructions – the library so to say – is smaller.

- The clock rate is typically lower.

- The core count is often higher! My phone has eight cores, my laptop only six.

- Simultaneous multithreading (two or more threads per core) is not available on most of these processors. Actually, CFD does not benefit from multithreading on any CPU, so this is okay!
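The interplay of instruction count, IPC, clock rate and core count can be summarized by the classic processor performance equation, time = instructions / (IPC × clock rate), here extended with an idealized core count. This Python sketch uses hypothetical numbers only; real instruction counts and IPC depend heavily on the compiler, the microarchitecture and the code, and perfect scaling across cores is an idealization.

```python
def runtime_s(instructions: float, ipc: float, clock_hz: float, cores: int = 1) -> float:
    # cycles = instructions / IPC; time = cycles / clock rate.
    # Dividing by cores assumes perfect parallel scaling (an idealization).
    return instructions / (ipc * clock_hz * cores)


# Hypothetical CISC-style core: fewer, more complex instructions, IPC below 1,
# high clock, six cores
cisc = runtime_s(instructions=1.0e12, ipc=0.8, clock_hz=4.5e9, cores=6)

# Hypothetical RISC-style core: more, simpler instructions, IPC = 1,
# lower clock, eight cores
risc = runtime_s(instructions=1.3e12, ipc=1.0, clock_hz=3.0e9, cores=8)

print(f"CISC-style: {cisc:.1f} s, RISC-style: {risc:.1f} s")
```

With these assumed values the RISC-style chip ends up in the same ballpark despite its lower clock, because its higher core count compensates; where it really pulls ahead is energy per operation, as discussed next.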

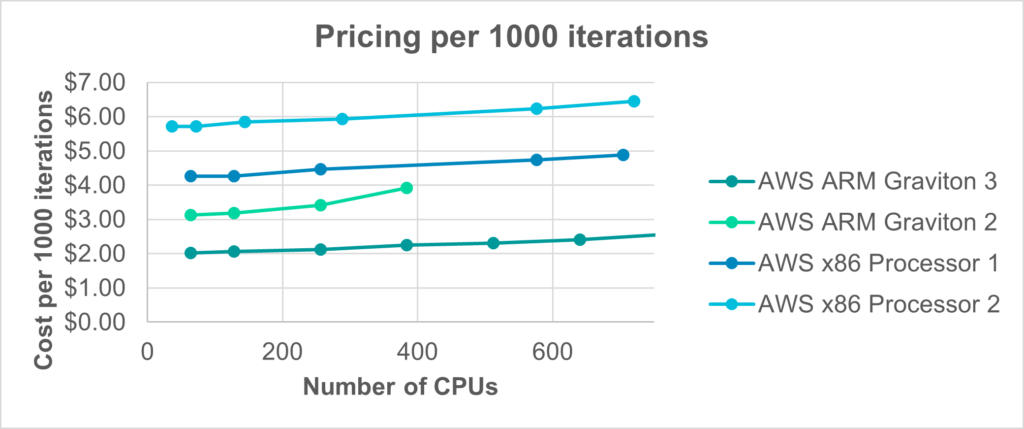

A side effect: executing these instructions is very energy-efficient compared to standard x86 CPUs. This energy efficiency is the reason why mobile phones and many IoT devices rely on this technology. The drawback: applications built for x86 desktop CPUs will not run natively (only emulated, which is inefficient). This does not matter for newly designed, specialized software, so there are servers, such as ARM-based AWS EC2 instances, that use this processor design. They are more energy-efficient than other CPUs and thus more cost-efficient. Although they lag behind in clock rate, they are more than competitive in performance per watt and thus performance per buck!

With the newest release, available on February 22, Simcenter STAR-CCM+ 2302 has been adapted to run on these ARM servers as well, more cost-efficiently than on x86 CPUs. So, you will be able to run your CFD greener and more cost-efficiently than ever, while I am just an ARM GUI away from deploying Simcenter STAR-CCM+ on my mobile phone!
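Performance per watt and performance per buck can be compared with two simple ratios. The Python sketch below does exactly that; the node names and all figures are hypothetical placeholders, not AWS pricing or measured Simcenter STAR-CCM+ throughput.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    sims_per_hour: float   # throughput on a reference CFD case (hypothetical)
    power_w: float         # average power draw while solving, in watts (hypothetical)
    price_per_hour: float  # hourly cost, e.g. a cloud rate, in dollars (hypothetical)

    def perf_per_watt(self) -> float:
        # Simulations per hour delivered per watt of power draw
        return self.sims_per_hour / self.power_w

    def cost_per_sim(self) -> float:
        # Dollars spent per completed simulation
        return self.price_per_hour / self.sims_per_hour


# Hypothetical nodes: the ARM node is slower, but draws less power and costs less.
x86 = Node("x86", sims_per_hour=2.0, power_w=400.0, price_per_hour=3.0)
arm = Node("ARM", sims_per_hour=1.6, power_w=250.0, price_per_hour=2.0)

for n in (x86, arm):
    print(f"{n.name}: {n.perf_per_watt() * 1000:.2f} sims/h per kW, "
          f"${n.cost_per_sim():.2f} per simulation")
```

The point of the ratios is that a slower node can still win on both metrics when its power draw and price drop faster than its throughput.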

Watch residuals locally, run simulations globally in the Cloud!

I guess I don’t want to wait for mobile phones to become suitable CFD hardware. For some time now, mobile devices have already been able to access and monitor running simulations. The key is to stop simulating on your own device. In some businesses, the demand for simulation is not constant. Self-owned CFD hardware tends to be either idling around (as my private workstation does for most of the year) or so heavily overloaded that you start playing sneak-into-the-queue games with your colleagues. And computing capacity is always scarce exactly when you desperately need results for an upcoming project. As for what it would cost if I had to pay for every maintenance hour and every online search because I just sudo-purged some vital parts of my operating system… again… I leave that question as an exercise for the well-disposed reader.

Simcenter STAR-CCM+ offers a built-in solution for cloud simulation: Simcenter Cloud HPC.
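The idle-hardware argument can be put into numbers: the effective cost per hour of actual use for owned hardware grows as utilization drops. In this back-of-the-envelope Python sketch, all numbers, the straight-line amortization and the treatment of running costs as fixed are hypothetical assumptions; the cloud rate is a placeholder, not Simcenter Cloud HPC pricing.

```python
HOURS_PER_YEAR = 24 * 365


def yearly_cost(purchase: float, years: float, yearly_running: float) -> float:
    # Straight-line amortization plus yearly running cost (treated as fixed here)
    return purchase / years + yearly_running


def owned_cost_per_used_hour(purchase: float, years: float,
                             yearly_running: float, utilization: float) -> float:
    # Spread the yearly cost only over the hours the machine actually computes
    return yearly_cost(purchase, years, yearly_running) / (HOURS_PER_YEAR * utilization)


def break_even_utilization(purchase: float, years: float,
                           yearly_running: float, cloud_rate: float) -> float:
    # Utilization above which owning is cheaper than renting at cloud_rate per hour
    return yearly_cost(purchase, years, yearly_running) / (cloud_rate * HOURS_PER_YEAR)


# Hypothetical workstation: $12,000 over 4 years, $1,380 per year to run
for u in (0.1, 0.5, 0.9):
    cost = owned_cost_per_used_hour(12000, 4, 1380, u)
    print(f"utilization {u:.0%}: ${cost:.2f} per used hour")

print(break_even_utilization(12000, 4, 1380, cloud_rate=2.0))  # 0.25
```

Below the break-even utilization, paying only for the hours you actually compute is the cheaper option.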

- No need to choose CFD hardware.

- No need to program servers.

- No maintenance.

- No reinvesting in newer CFD hardware every year.

Simulation results are just two clicks away; the only question is: how fast do you want them?

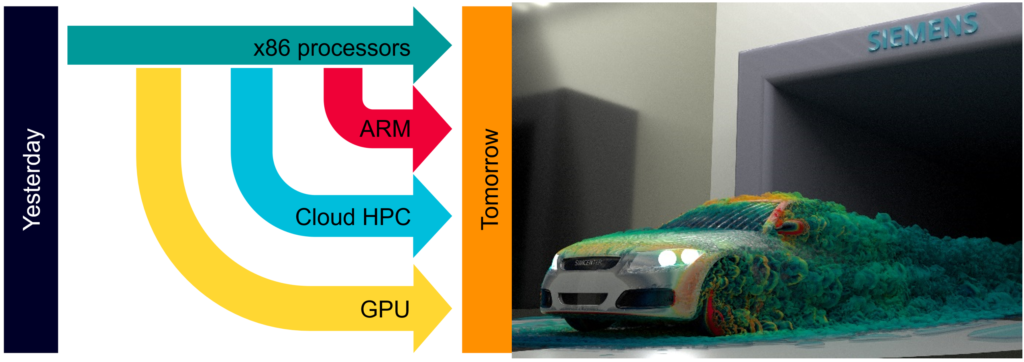

The best directions on the CFD hardware map

No matter which option suits you best, Simcenter STAR-CCM+ keeps advancing to exploit promising CFD hardware trends for increased energy efficiency, cost reduction, reduced IT overhead and simulation speedup. All options offer great multi-node scalability:

- Flexible x86 processors as found in many servers, workstations, and laptops.

- GPU-computing on workstations and servers for outstanding energy efficiency.

- ARM processors provide a sustainable route with reduced cost per simulation.

- Simcenter Cloud HPC is a built-in, scalable and maintenance-free solution.

The answer to the question “Which CFD hardware is the best?” is 42.

It really depends on your project, budget and current priorities, yet I hope the insights above will help you find your way through the CFD hardware galaxy. As a rule of thumb, let me summarize:

- x86 CPUs have run simulations for ages now. Every solver was initially developed and verified for this platform. Look for CFD hardware with maximum cache: servers, workstations and laptops alike. Simcenter STAR-CCM+ shows superlinear speedup on such clusters.

- Most solvers now run on GPUs, and the software will adapt to this even further. This solution is very energy-efficient. Pay close attention that the solvers you require are supported (we are extending the range of ported solvers at high pace) and that your GPUs meet the memory requirements of your use case. With the upcoming Simcenter STAR-CCM+ Release 2302, the memory demand decreases by nearly 40%, and you will also see up to 10% extra speedup compared to the last release. This way you can get the most out of multi-GPU workstations and GPU clusters.

- On ARM processors, Simcenter STAR-CCM+ supports everything but the graphical user interface. This is an approach for cost-efficient computing, especially on cloud services. Simcenter STAR-CCM+ Release 2302 supports this CFD hardware for your cost reduction.

- Simcenter Cloud HPC is a very easy solution. No investment in expensive compute hardware, no idle cost and scalable on demand!

On February 22, jump aboard the flagship Simcenter STAR-CCM+ 2302, ready to explore the CFD hardware galaxy as it keeps expanding.