More with LES on GPUs – 3 high-fidelity CFD simulations that now run while you sleep

Long before there was LES on GPUs, a colleague of mine used to have a sticker on his desk that said

“Watching residuals doesn’t count as working”.

As truthful as this statement is, it is not to say that no work is happening when you set a simulation off to run. Indeed, one of the many advantages of simulation is that when your case is running you can continue to work on the analysis of previous simulations, go to meetings or finish that report you have been putting off for weeks.

Naturally, this also means there is a great desire to run simulations overnight, so that upon the start of the next working day, there are simulation results, hot off the press, to look at.

GPU acceleration for high-fidelity CFD

GPU acceleration is redefining traditional CFD design practices, and as GPU architecture continues to improve at a rapid pace, the boundaries of what is considered a practical level of fidelity for CFD simulations will expand with it. In other words: higher-fidelity CFD while you sleep!

In Simcenter STAR-CCM+ 2210, we continue to expand our GPU-enabled physics, unlocking overnight high-fidelity CFD. Specifically, you can leverage GPU acceleration for simulations involving:

- Large Eddy Simulation (LES) for turbulence

- Segregated Energy

- Ideal Gas equation of state

- Two acoustic wave models (Perturbed Convective Wave and Lighthill Wave)

All of this comes alongside our initial offering of steady and unsteady flows with constant density, plus all standard turbulence models, including RANS, DDES and Reynolds Stress Models. Quite the selection!

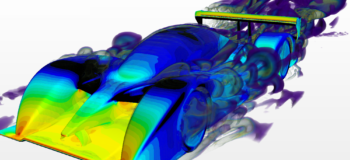

Faster LES on GPUs

TUM School of Engineering and Design, Technical University of Munich

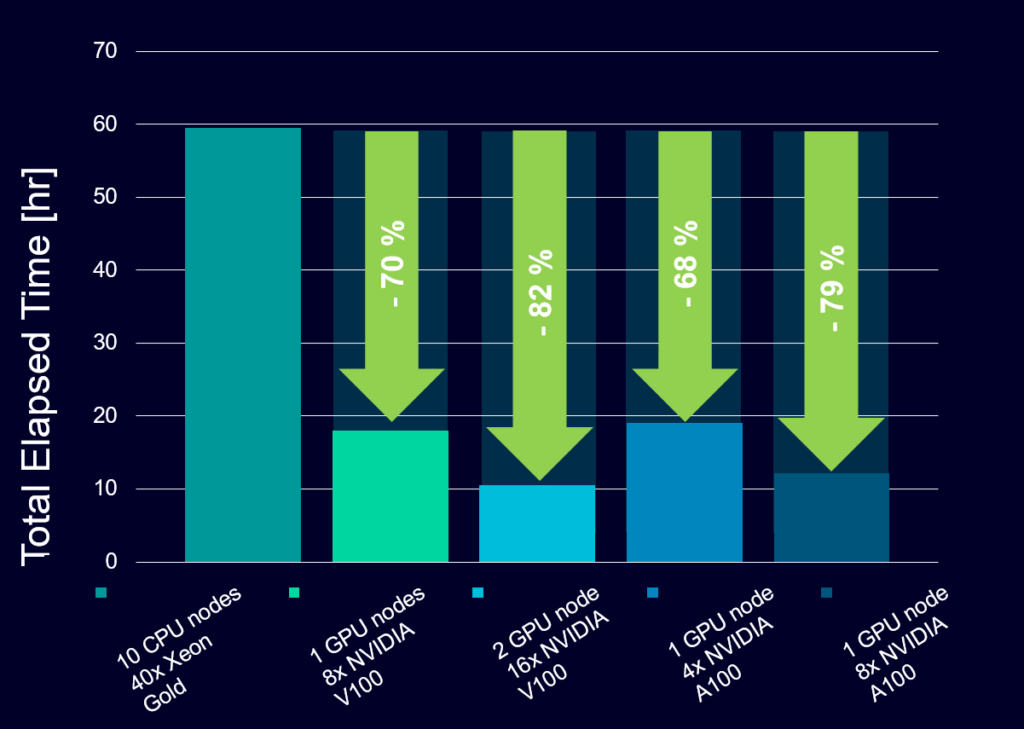

Large Eddy Simulation (LES) offers high-fidelity resolution of the largest turbulent scales and typically gives much more detailed insight into the flow field. Here we have an example of the DrivAer notchback model, run according to the specifications of the 3rd Automotive CFD Prediction Workshop (AutoCFD 3). The mesh contains 130M trimmed cells and the case is run for 4 s of physical time (16,000 time steps). Running this LES on 4 NVIDIA A100 GPUs gives a run time of around 20 hours, and extending this to 8 A100 GPUs would allow the same high-fidelity LES to be run in around 12 hours! Matching this on CPUs would take close to 2000 Xeon Gold cores.
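To put those figures in perspective, here is a minimal back-of-the-envelope sketch in Python. It uses only the rounded numbers quoted above (4 s of physical time, 16,000 time steps, roughly 20 hours on 4 GPUs and roughly 12 hours on 8 GPUs). The function names are hypothetical helpers for illustration, not part of Simcenter STAR-CCM+, and the results are only as precise as the approximate run times they are based on.

```python
def run_summary(physical_time_s, n_steps, wall_hours):
    """Return (time step size in s, wall-clock seconds per time step)."""
    dt = physical_time_s / n_steps
    sec_per_step = wall_hours * 3600.0 / n_steps
    return dt, sec_per_step


def strong_scaling(hours_base, gpus_base, hours_scaled, gpus_scaled):
    """Return (speedup, parallel efficiency) when adding GPUs to a fixed-size case."""
    speedup = hours_base / hours_scaled
    efficiency = speedup / (gpus_scaled / gpus_base)
    return speedup, efficiency


# Rounded figures quoted in the text (approximate, so treat results as estimates).
dt, sec_per_step_4gpu = run_summary(4.0, 16_000, 20.0)
_, sec_per_step_8gpu = run_summary(4.0, 16_000, 12.0)
speedup, efficiency = strong_scaling(20.0, 4, 12.0, 8)

print(f"time step size:        {dt * 1e3:.2f} ms")
print(f"wall time/step, 4 GPU: {sec_per_step_4gpu:.1f} s")
print(f"wall time/step, 8 GPU: {sec_per_step_8gpu:.1f} s")
print(f"speedup 4 -> 8 GPUs:   {speedup:.2f}x")
print(f"parallel efficiency:   {efficiency:.0%}")
```

With these inputs, doubling the GPU count cuts the run time by a factor of about 1.7 rather than 2, which is the usual pattern for strong scaling on a fixed mesh.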

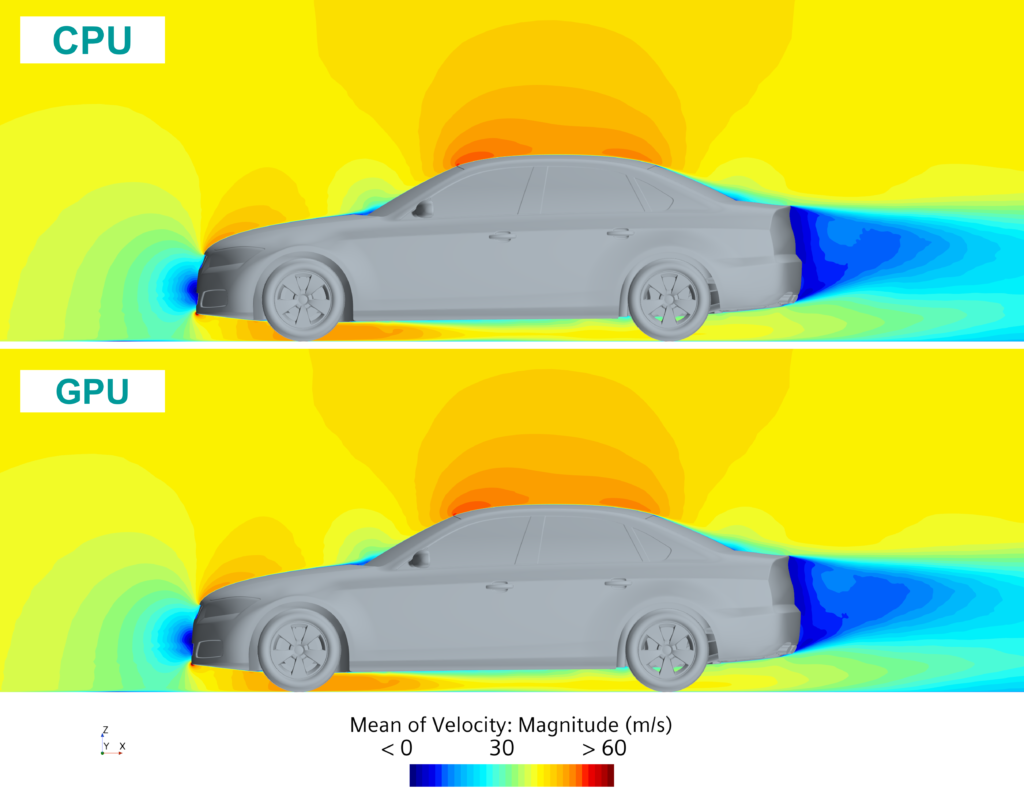

Now, all the benefits of LES on GPUs aside, CPU solver technology will continue to play a relevant role in CFD, thanks to its continuous development and the introduction of new hardware technology. There will always be situations where CPUs are the solution of choice and others where GPUs are the right way forward. Hence, CFD engineers will always want assurance that they get consistent results whether they run on CPUs or GPUs. Because Simcenter STAR-CCM+ does not use a siloed GPU solver, but instead ports existing solver technology from CPUs to GPUs within one framework, a high level of consistency is ensured:

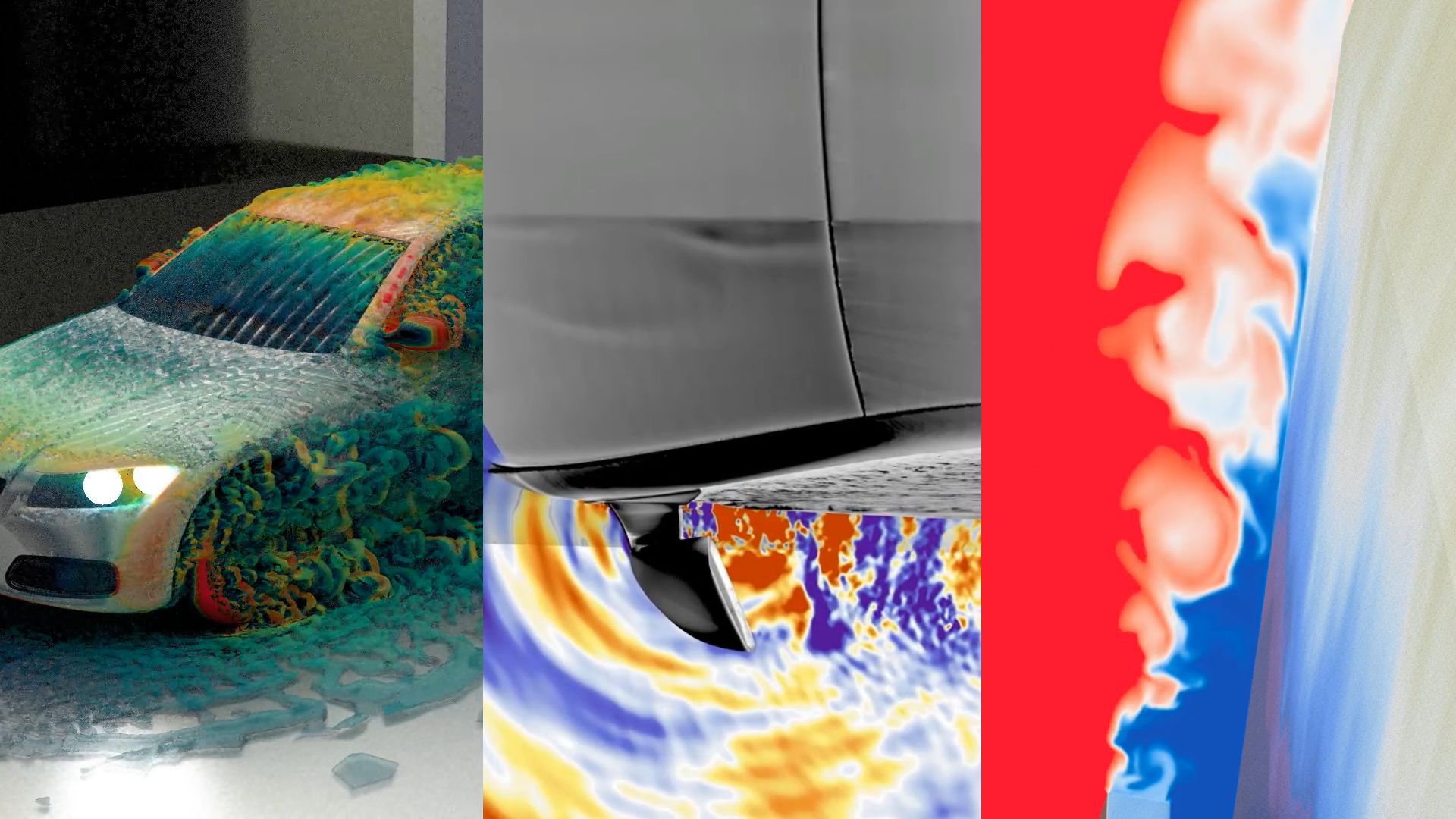

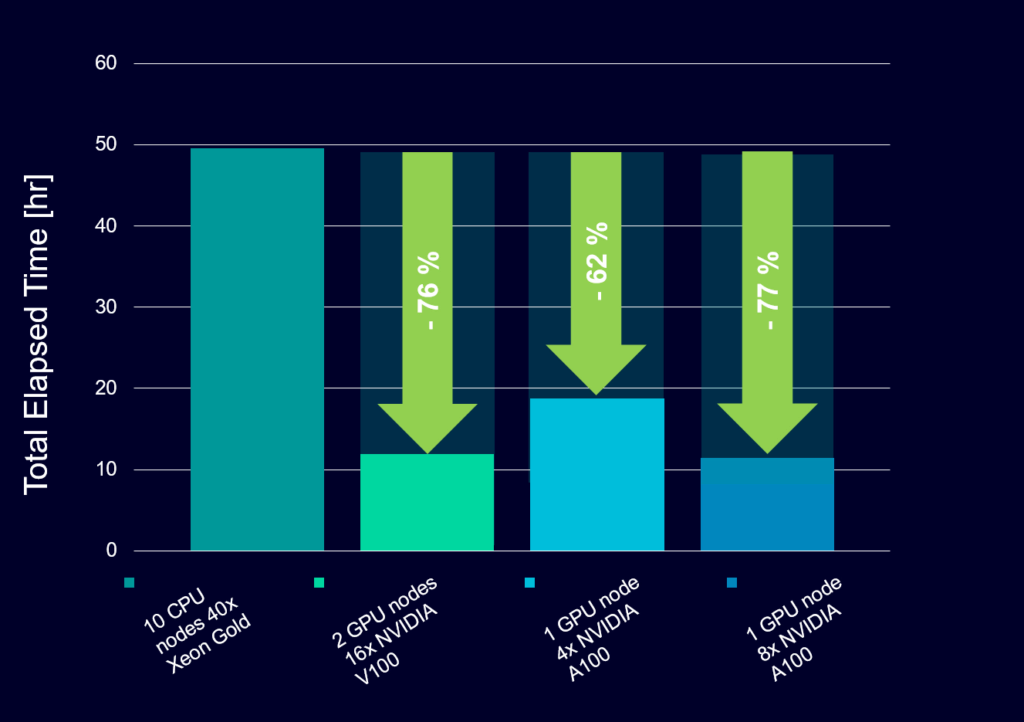

Faster Aeroacoustics CFD on GPUs

Another key component to a good night’s sleep (aside from the knowledge that your CFD simulation is converging nicely) is silence. And the sound of silence is also a key consideration for many engineers. Automotive engineers trying to make their vehicles quieter will often target the side mirrors – one of the major noise sources of a car. In Lucie Sanchez’s blog you can read about when noise cancellation goes too far, but with GPU acceleration of the Perturbed Convective Wave and Lighthill Wave acoustics models, it can go even further!

As we have seen with other examples of high-fidelity CFD, the performance of this case leveraging GPU architecture is excellent. 8 A100s mean that such a simulation could be turned around in 12 hours – one high-fidelity simulation delivered as you log on for the day with coffee in hand.

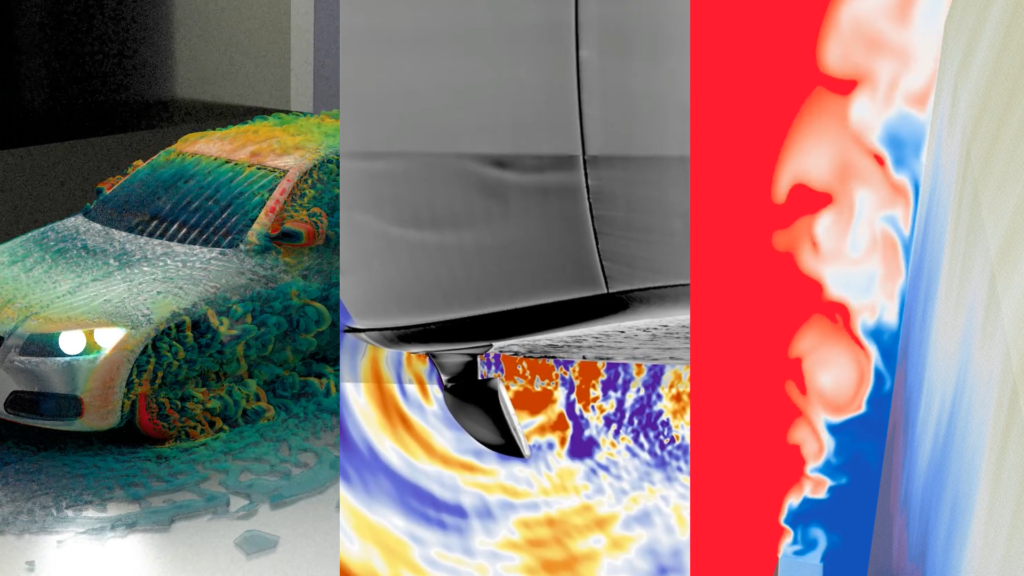

Segregated Energy Solver on GPU

Aside from high-fidelity aeroacoustics and LES on GPUs, in Simcenter STAR-CCM+ 2210 GPU hardware can also be used to tackle thermal problems, thanks to the inclusion of the Segregated Energy model and the Ideal Gas equation of state.

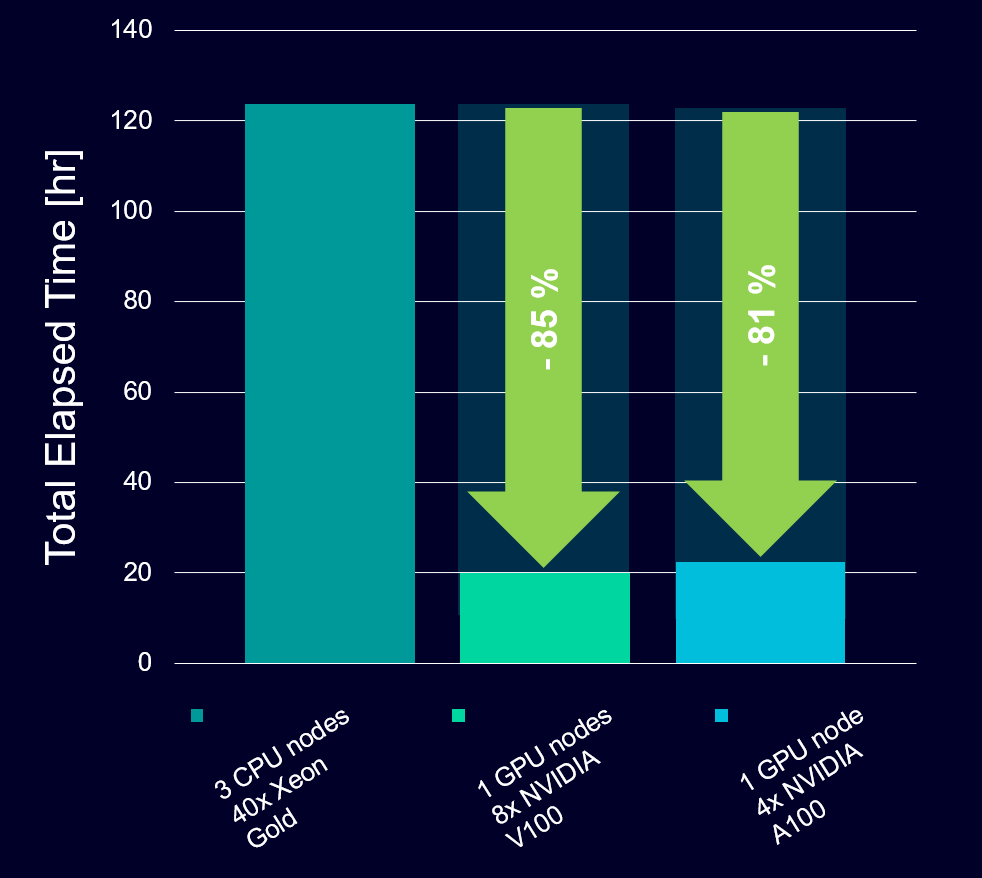

One such example of the need to leverage high-fidelity thermal simulations is the design of trailing edges in gas turbine blades. These blades must withstand incredibly high temperatures and rely on advanced engineering to ensure the integrity of the blades is not compromised – something that keeps designers up at night!

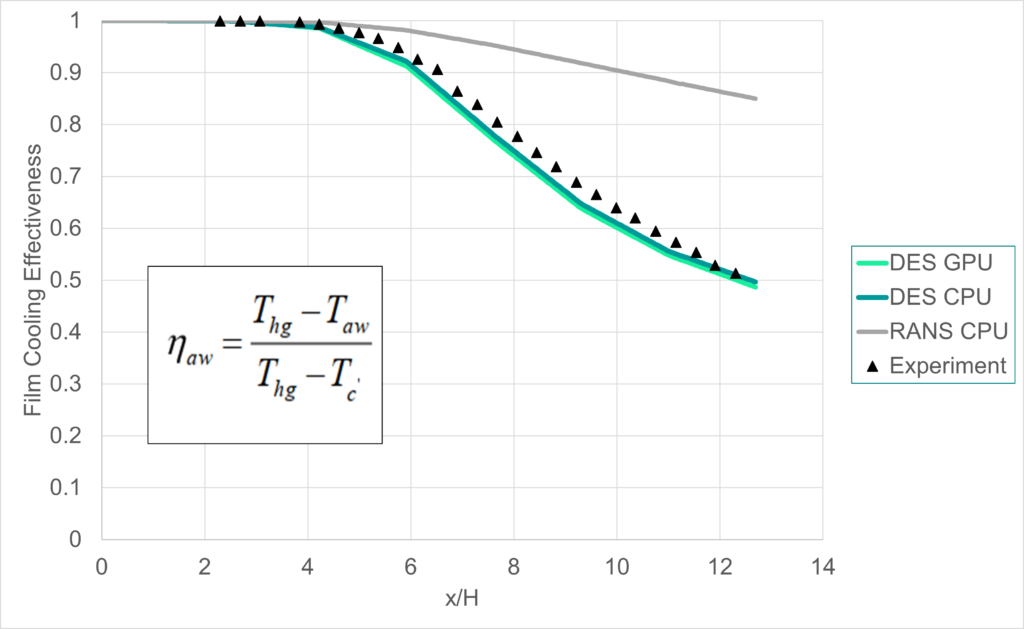

At the trailing edge, hot gas from the external gas path of the blade interacts with the cooler internal blade flow, which often exits through a slot at the trailing edge. The two flows then mix and impinge on the trailing edge of the blade. Capturing this mixing and impingement correctly typically requires higher fidelity than a standard RANS calculation. Here we model a representation of such a scenario, based on the experimental rig of Martini [1], entirely on GPU hardware.

Once again, the performance difference between CPU and GPU is considerable. On a typical number of nodes, in this instance 3 Xeon Gold nodes (120 CPU cores), this problem would take close to a week to deliver meaningful results. With GPU architecture, design iterations can realistically be turned around in less than a day.

And yet again, the GPU results are consistent with the CPU results, with excellent accuracy on both thanks to DES.

Burning the midnight oil (a.k.a. heating up your server room)

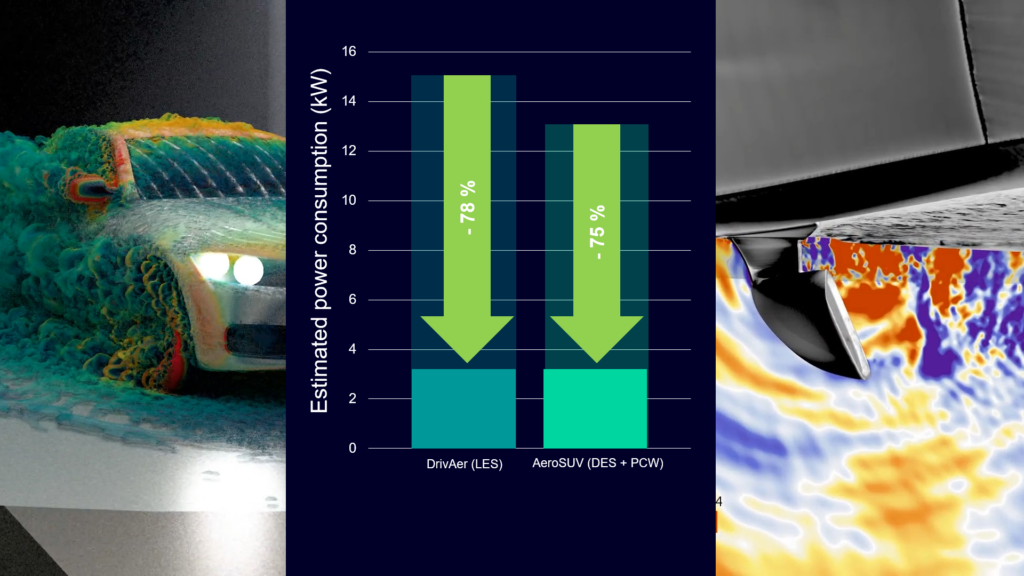

Of course, overnight high-fidelity CFD can be achieved with traditional CPU usage if you throw enough cores at the problem. However, more cores mean more energy consumption and ultimately higher bills. On top of this, minimizing power consumption is often a high priority from a sustainability perspective. Whether it’s LES or aeroacoustics, using GPU hardware allows you to run your high-fidelity CFD simulations at a fraction of the power consumption of more traditional CPU architecture.

Using our simulations of the DrivAer notchback and AeroSUV as two examples, we can clearly see the drastic reduction in required power. Running on Xeon Gold CPUs would require around 15 kW of power to match the turnaround of 8 A100s, which draw 3.2 kW, a whopping 78% less. You can sleep more soundly at night in the knowledge that your simulation is saving both the environment and your wallet.
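The saving can be checked with a short Python sketch using the rounded power figures above (around 15 kW for the CPUs, 3.2 kW for 8 A100s). The helper names are hypothetical, and the 12-hour run length is an assumption borrowed from the overnight turnaround quoted earlier, applied to both machines because the CPU setup is described as equivalent. With these rounded inputs the reduction comes out at just under 79%, in line with the quoted 78% given that the CPU figure is approximate.

```python
def power_saving(cpu_kw, gpu_kw):
    """Fractional reduction in power draw when moving from CPU to GPU."""
    return 1.0 - gpu_kw / cpu_kw


def energy_kwh(power_kw, hours):
    """Energy used by a run at constant power draw."""
    return power_kw * hours


CPU_KW = 15.0     # approximate figure quoted for the Xeon Gold setup
GPU_KW = 3.2      # figure quoted for 8 A100 GPUs
RUN_HOURS = 12.0  # assumption: same overnight turnaround on both machines

saving = power_saving(CPU_KW, GPU_KW)
cpu_energy = energy_kwh(CPU_KW, RUN_HOURS)
gpu_energy = energy_kwh(GPU_KW, RUN_HOURS)

print(f"power reduction: {saving:.1%}")
print(f"energy per run:  CPU {cpu_energy:.1f} kWh vs GPU {gpu_energy:.1f} kWh")
```

Because both runs are assumed to take the same time, the energy saving per simulation equals the power saving. If the CPU run were slower, the gap in energy would widen further.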

So, there you have it: Simcenter STAR-CCM+ 2210 allows engineers to pursue high-fidelity simulations more readily with GPU hardware. From aeroacoustics, through segregated energy, to LES on GPUs, we continue to enable you to run your simulation on the hardware that best fits your project, all while ensuring consistent results between CPUs and GPUs.

And no, this is not a dream; it really is that fast!