Recent Advances in Simulation Hardware: The Sky is the Limit?

“When I was your age, boy, we did CFD on our steam-powered abacus! And we programmed it with punched paper cards!”

(Random senior engineer)

Well, apart from the fact that this was the last sustainable storage medium, really no one wants old hardware back. We’ve all heard the factoid that we flew to the moon with the calculation power of a 1990s cell phone. Looking back decades, we always wonder how we could possibly have gotten along with that hardware.

Over fifty years later, rocket CFD using Simcenter STAR-CCM+ made it possible to create a digital twin of the historic Apollo 11 NASA broadcast. [1]

Games, hardware, and engineering processes have evolved a lot over the decades since we first walked on the moon. The physics behind CFD has not. This gives us a great opportunity to compare “apples to apples.”

Comparing the Saturn V moon rocket to your first cell phone does not really give you an idea of how far we have come. But what if you take the same use cases and the same physics – to see how new hardware deals with unchanged scenarios?

Show ‘em what you’ve got!

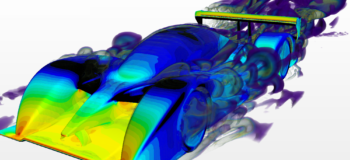

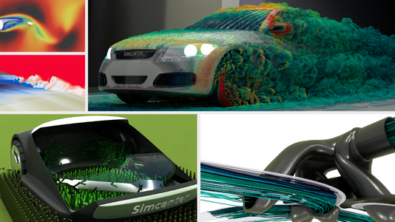

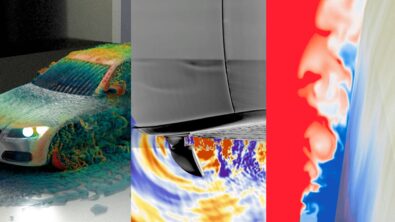

Over the past few years, Simcenter STAR-CCM+ has been used not only to develop innumerable products but also to test new hardware – so engineers know what to expect when opting for this or that processor.

Nowadays, one of the fastest and most scalable ways to solve your simulation is to deploy a whole cluster. Siemens and AMD ran several benchmarks to highlight the advantages of the new AMD EPYC™ 9004 series processors with multiple CFD use cases:

- Business Jet (20 million cells)

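- Hydrocyclone (30 million cells)

- Spacecraft (6 million cells)

- Ship Hull (3 million cells)

- LeMans (100 million cells), coupled

- LeMans (100 million cells), segregated

- LeMans (17 million cells)

- Reactor (9 million cells)

- Turbocharger (7 million cells)

- Vehicle Thermal Management (178 million cells)

- Vehicle Thermal Management (64 million cells)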

These CFD benchmarks are run in Simcenter STAR-CCM+ on various machines under constant boundary conditions, as they have been for many years. Not really back to the sixties, but we do have some history.

The all-new processors show a significant speed-up when compared to the previous generation with the above use cases.

As expected, the AMD EPYC 9004 series processors (in blue shades above) perform significantly faster than their predecessors on all benchmark cases, be it heat transfer, hypersonic external aerodynamics, or multiphase oil-water flow. The 32-core AMD EPYC 9374F processor is 30% to 68% faster than its predecessor, the AMD EPYC 75F3, which has the same number of cores – so you are getting more from every core and thus making better use of the Simcenter STAR-CCM+ license.

The AMD EPYC 7763 processor is one of the highest-density AMD EPYC processors of its generation, with 64 cores. Now, it is not only outperformed by the newer AMD EPYC 9554 (64 cores) by 82% on average across all benchmark cases – it also hands over the crown for core count: the AMD EPYC 9654 processor sets a new high-water mark for x86 processors with 96 cores, supporting further performance improvements.
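To get a feel for what these percentages mean for time on the clock, here is a minimal sketch. It assumes that "X% faster" means the throughput ratio is 1 + X/100, which is an interpretation on our side and not stated in the benchmark. The function name `runtime_fraction` is purely illustrative.

```python
def runtime_fraction(percent_faster: float) -> float:
    """Fraction of the old runtime that remains after the upgrade.

    Assumption: 'X% faster' means new throughput = (1 + X/100) * old throughput.
    """
    return 1.0 / (1.0 + percent_faster / 100.0)


# Percentages quoted in the text above; labels are only for readability.
quoted = [
    ("EPYC 9374F vs 75F3, low end", 30),
    ("EPYC 9374F vs 75F3, high end", 68),
    ("EPYC 9554 vs 7763, average", 82),
]

for label, pct in quoted:
    frac = runtime_fraction(pct)
    saved = 100.0 * (1.0 - frac)
    print(f"{label}: {pct}% faster -> {frac:.2f}x the old runtime ({saved:.0f}% less waiting)")
```

Under this reading, "82% faster" does not mean the job takes 82% less time; it means the job needs a bit more than half of the old runtime.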

More super-linear speedup? How is that even possible?

Previous tests on multi-node servers showed that the previous generation more than doubled the overall speed when doubling the number of nodes working on a single simulation. This totally contradicts common sense! How can two boosters combined be more than twice as fast?

Well, the answer is hidden within the chip. Fast CFD codes like Simcenter STAR-CCM+ rely on two pillars in computing:

- the raw processor speed – determined by core count, clock speed, instructions per cycle…

- memory speed. But which memory?

Although some nerds might know something about RAM speed, maybe even timings, relatively few know how the cache works. The cache is a “small” (rumored to exceed 1 GB soon…) memory located directly in the CPU chip. It is a fantastillion times faster than RAM. And this is the reason why clusters are suited for those tasks: Unlike a consumer desktop CPU, AMD EPYC processors are not bottlenecked by their cache size.

And if you have two nodes working on a single simulation – you also have twice the cache! So, you are multiplying raw processor speed and adding extra ultra-fast cache. This is even more likely to happen with the new 4th generation AMD EPYC processors. The further increased processor cache size makes it more likely that the necessary data for the next calculation is already stored in that ultra-fast memory.
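Whether a scaling run is super-linear can be checked with a few lines of arithmetic. The sketch below computes speedup and parallel efficiency relative to the smallest node count; an efficiency above 1.0 is the super-linear case described above. The runtimes are hypothetical placeholders, not measured benchmark data, and `scaling_report` is an illustrative helper name.

```python
def scaling_report(runtimes):
    """Return a list of (nodes, speedup, parallel_efficiency) tuples.

    `runtimes` maps node count -> wall-clock seconds. Everything is measured
    relative to the smallest node count in the mapping.
    """
    base_nodes = min(runtimes)
    base_time = runtimes[base_nodes]
    report = []
    for nodes in sorted(runtimes):
        speedup = base_time / runtimes[nodes]
        ideal = nodes / base_nodes  # linear scaling would give exactly this speedup
        report.append((nodes, speedup, speedup / ideal))
    return report


# Hypothetical wall-clock times in seconds (NOT taken from the benchmark).
example_runtimes = {1: 1000.0, 2: 480.0, 4: 235.0}

for nodes, speedup, efficiency in scaling_report(example_runtimes):
    verdict = "super-linear" if efficiency > 1.0 else "linear or below"
    print(f"{nodes} node(s): speedup {speedup:.2f}, efficiency {efficiency:.2f} ({verdict})")
```

In this made-up example, two nodes finish in less than half the single-node time, so the efficiency exceeds 1.0. With real data, that is the signature of the working set per node starting to fit into cache.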

If the data is not there, the new AMD EPYC 9004 series processors can fetch it even faster, as they now support new memory features:

- DDR5 RAM. This new memory is faster than its predecessor, which is vital for simulation. Whereas the 3rd generation had 8 memory channels, the 4th generation AMD EPYC processor has 12 channels, and it supports up to 6 TB of RAM. For CFD on smaller devices, RAM used to be the “GO/NO GO” decision, as you need to fit the whole simulation with its huge mesh into memory. But 6 TB RAM roughly equals three BILLION cells – one to two orders of magnitude above standard industrial use cases!

- Saving your simulation gets faster as well – as you can address your drives with 128 (single-socket mainboards) or up to 160 (dual-socket mainboards) PCIe 5.0 lanes. Other applications, such as future graphics cards, network adapters and many more, will benefit from these interfaces too.

- Talking about future applications: the plan is for the SP5 socket to remain in use for several years to come, so upgrading to newer processor generations should come with limited hardware costs.
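The memory figures in the list above can be turned into a quick back-of-the-envelope check. The sketch below derives the bytes-per-cell budget implied by "6 TB roughly equals three billion cells" and applies it to a smaller machine. It assumes decimal terabytes, and the 512 GB workstation is a hypothetical example. Real memory use per cell depends heavily on mesh type and physics models, so treat the result as a rough guide only.

```python
TB = 1e12  # decimal terabyte (assumption; binary TiB would shift results by about 10%)
GB = 1e9


def bytes_per_cell(ram_bytes: float, cells: float) -> float:
    """Memory budget per cell implied by a RAM size and a cell count."""
    return ram_bytes / cells


def max_cells(ram_bytes: float, per_cell_bytes: float) -> float:
    """Rough upper bound on mesh size that fits into the given RAM."""
    return ram_bytes / per_cell_bytes


# Figures from the text: 6 TB of RAM for roughly three billion cells.
implied = bytes_per_cell(6 * TB, 3e9)
print(f"Implied budget: about {implied:.0f} bytes per cell")

# Hypothetical 512 GB workstation, using the same per-cell budget.
print(f"512 GB fits roughly {max_cells(512 * GB, implied) / 1e6:.0f} million cells")

# Channel count from the text: 12 channels versus 8 in the previous generation.
print(f"Memory channels grow by a factor of {12 / 8:.1f}, before any per-channel DDR5 gain")
```

At roughly 2 kB per cell, the largest benchmark case above (178 million cells) would need on the order of a few hundred gigabytes, which shows why RAM capacity used to be the deciding factor on smaller machines.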

The heat is on! But cooler than before

Every single bit of electricity pumped into the CPU is converted into heat, I am afraid. Not even AMD can change that. But they can change how much of it is needed for a certain computation.

If your CPU uses the same power but finishes the CFD job earlier, you save energy over the whole run. The AMD EPYC 9004 Series processors have a higher thermal design power (TDP) than their predecessors, which gives systems built on them more thermal headroom. Investing a few percentage points more power yields a disproportionately larger reduction in runtime, so the computation gets up to 41% more energy efficient after all. The mighty AMD EPYC 9654, unmatched in core count, again takes the crown for the best efficiency: 58% better than last generation’s 64-core king when running Simcenter STAR-CCM+, as shown in the table below. Compare that efficiency gain to your next-gen car!

Calculations are based on benchmark results and CPU TDP values provided pre-launch. For details see [2].
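The efficiency metric defined in footnote [2] is simple enough to reproduce yourself. The sketch below implements that definition: relative speedup divided by relative TDP change. The input numbers are hypothetical round values chosen for easy arithmetic; they are not the benchmark runtimes or the processors' actual TDPs, and the function name is illustrative.

```python
def efficiency_gain(runtime_old_s: float, runtime_new_s: float,
                    tdp_old_w: float, tdp_new_w: float) -> float:
    """Efficiency ratio as defined in footnote [2].

    relative speedup    = old runtime / new runtime
    relative TDP change = new TDP / old TDP
    efficiency          = relative speedup / relative TDP change
    """
    relative_speedup = runtime_old_s / runtime_new_s
    relative_tdp = tdp_new_w / tdp_old_w
    return relative_speedup / relative_tdp


# Hypothetical inputs (NOT benchmark data): the new CPU halves the runtime
# while drawing 25% more power on paper.
gain = efficiency_gain(runtime_old_s=1000.0, runtime_new_s=500.0,
                       tdp_old_w=200.0, tdp_new_w=250.0)
print(f"Efficiency ratio: {gain:.2f} ({(gain - 1.0) * 100:.0f}% better)")

# The same ratio falls out of comparing TDP x runtime, a proxy for energy per run.
energy_old = 200.0 * 1000.0
energy_new = 250.0 * 500.0
print(f"Energy-per-run ratio: {energy_old / energy_new:.2f}")
```

Both lines give a ratio of 1.60 for these inputs. As the footnote notes, TDP is only a stand-in for actual power draw, so measured energy per simulation can differ.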

Conclusions

It seems that AMD is a great choice for dedicated CFD systems. While the fourth stage of this AMD EPYC rocket takes CFD performance to new heights, the energy consumption remains on the ground. This way engineers can help reduce the carbon footprint of their products – optimizing it in CFD runs that use less energy than before.

In some ways, processor options seem like configurable rockets. You can choose how many boosters you want to add, depending on your payload – or directly go for an additional stage. In all cases, you may well benefit from reduced energy consumption per simulation, as this contributes greatly to lower operating costs for processors in 24/7 CFD use. This way, your budget does not keep you down on earth. So, how do you configure your next rocket? Maybe a CFD simulation can support you in that decision.

AMD, the AMD arrow logo, EPYC and combinations thereof are trademarks of Advanced Micro Devices, Inc.

[1] Source of the real twin video material: NASA/KSC, via the Apollo Image Archive; close-up slow-motion footage: sonicbomb.com.

[2] Efficiency is calculated as relative speedup divided by relative TDP change. Relative speedup is the simulation runtime on the 3rd generation divided by the simulation runtime on the 4th generation. Relative TDP change is the 4th generation TDP divided by the 3rd generation TDP. Actual CPU power consumption during CFD runs can differ from TDP.

Comments

Any particular reason why the comparison is drawn with regular old Milan CPUs instead of Milan-X? It is acknowledged that Milan-X provides a significant performance increase over Milan. Yet none of the charts use them as a reference.