Part 6: The 2022 Wilson Research Group Functional Verification Study

FPGA Language and Library Trends

This blog continues a series related to the 2022 Wilson Research Group Functional Verification Study. In my previous blog, I discussed adoption trends for FPGA verification techniques and technologies, as identified by the study. In this blog, I’ll present FPGA design and verification language adoption trends.

It is not uncommon for FPGA projects to use multiple languages when constructing their RTL and testbenches. This practice is often due to legacy code as well as purchased IP. Hence, you might note that the adoption percentages I present for some of the languages sum to more than one hundred percent.
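To see why multi-language projects push the totals past one hundred percent, consider a minimal sketch. The four projects and their language sets below are hypothetical, invented purely for illustration; they are not data from the study.

```python
from collections import Counter

# Hypothetical survey responses: each project lists every language it uses.
# These values are illustrative only and are not taken from the study.
projects = [
    {"VHDL"},
    {"VHDL", "Verilog"},
    {"VHDL", "SystemVerilog"},
    {"Verilog"},
]


def adoption_percentages(responses):
    """Return the percentage of projects that use each language."""
    counts = Counter(lang for langs in responses for lang in langs)
    return {lang: 100 * n / len(responses) for lang, n in counts.items()}


adoption = adoption_percentages(projects)
total = sum(adoption.values())

for lang, pct in sorted(adoption.items()):
    print(f"{lang}: {pct:.0f}%")
print(f"Sum of adoption percentages: {total:.0f}%")
```

Because each project is counted once for every language it uses, the sum exceeds one hundred percent as soon as any project uses more than one language.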

FPGA RTL Design Language Adoption Trends

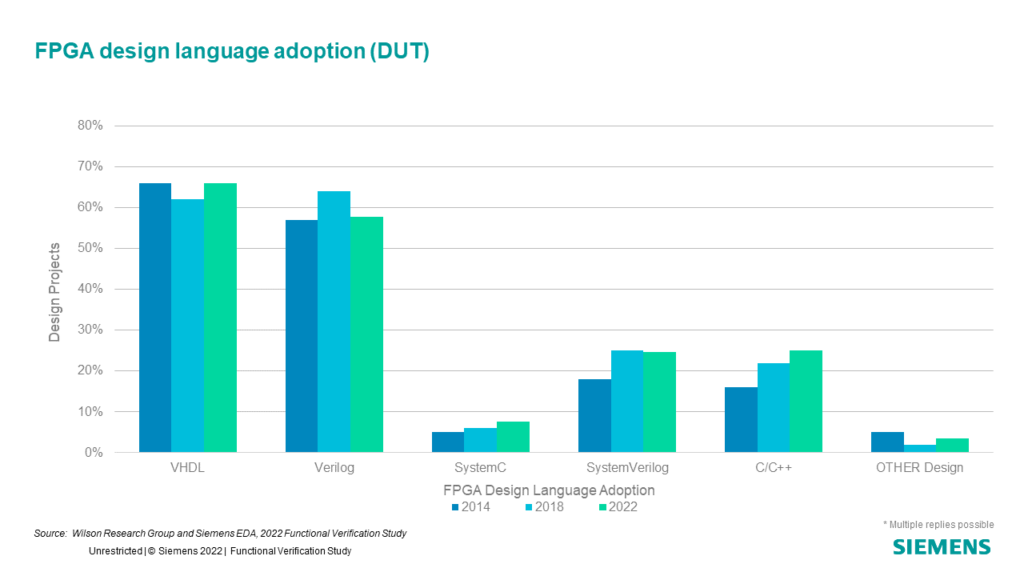

Let’s begin by examining the languages used to create FPGA RTL designs. Figure 6-1 shows the design language trends by comparing the 2014, 2018, and 2022 Wilson Research Group studies.

It is important to note that the language adoption trends shown in fig. 6-1 are aggregated across all market segments and all regions of the world. When we filter the participants down to a specific region (e.g., Europe) or a specific market segment (e.g., Mil/Aero), the adoption percentages differ. Still, it is useful to examine worldwide trends to get a sense of where the overall industry is moving in terms of design ecosystems.

FPGA RTL Verification Language Adoption Trends

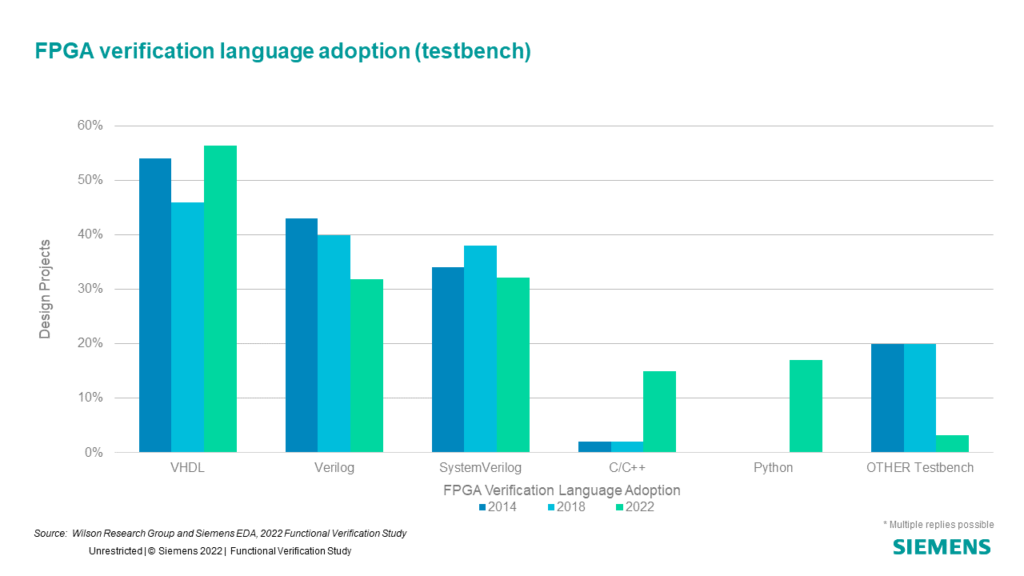

In fig. 6-2, we show the adoption trends for languages used to build testbenches.

Historically, VHDL has been the predominant language used for FPGA testbench development. However, we are starting to see some interest in C/C++ and Python. Today, it’s not unusual to find that the RTL design was created using VHDL, and the testbench was created using a different language.

Recently we started tracking Python for testbench development. In our 2022 study, we found that 17% of FPGA projects are using Python. Of the projects using Python, 81% were using it for runtime management, 50% for stimulus generation, and 27% in conjunction with cocotb or some other methodology-based environment.
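Note that the Python usage figures are conditional on the 17% of projects that use Python at all. As a rough sketch, assuming each share applies to that same 17% base, they can be converted into approximate shares of all FPGA projects. The names below are illustrative, and the derived values are simple arithmetic rather than figures reported by the study.

```python
# Figures quoted in the text above (2022 study).
PYTHON_SHARE_PCT = 17  # % of FPGA projects using Python

# % of Python-using projects, by how they use Python.
USAGE_AMONG_PYTHON_PCT = {
    "runtime management": 81,
    "stimulus generation": 50,
    "cocotb or other methodology-based environment": 27,
}


def share_of_all_projects(base_pct, conditional_pct):
    """Convert a share of Python users into an approximate share of all projects."""
    return round(base_pct * conditional_pct / 100, 1)


overall = {
    use: share_of_all_projects(PYTHON_SHARE_PCT, pct)
    for use, pct in USAGE_AMONG_PYTHON_PCT.items()
}

for use, pct in overall.items():
    print(f"{use}: about {pct}% of all FPGA projects")
```

The three usage categories overlap, since a project can use Python for more than one purpose, which is why the conditional shares themselves add up to more than one hundred percent.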

FPGA Testbench Methodology Adoption Trends

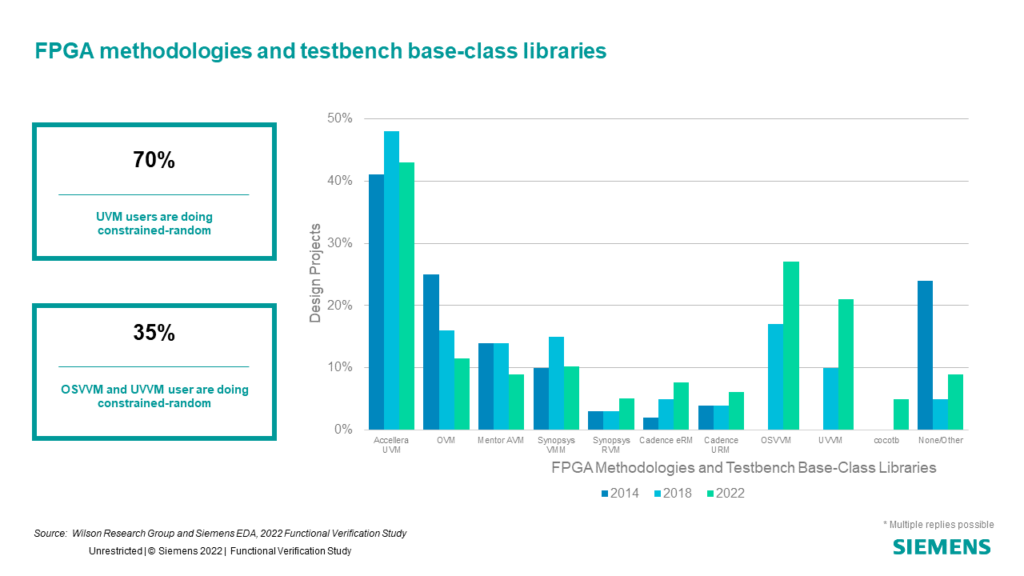

The adoption trends for various testbench methodology standards are shown in fig. 6-3. We found that the Accellera UVM is currently the predominant standard adopted to create FPGA testbenches worldwide.

Since 2018, we have seen healthy growth in both the Open Source VHDL Verification Methodology™ (OSVVM) and the Universal VHDL Verification Methodology (UVVM), which is encouraging. We were curious about the type of testbenches being developed using these various methodologies, so we calculated the percentage of projects using UVM, OSVVM, and UVVM that create constrained-random testbenches. We found that 70% of projects using UVM were creating constrained-random testbenches, while only 35% of projects using OSVVM and UVVM were doing so. I suspect this will increase over time as OSVVM and UVVM mature in their adoption.
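For readers less familiar with the term, a constrained-random testbench draws stimulus at random from within a set of legal-value constraints instead of relying only on hand-written directed tests. The sketch below illustrates the idea in plain Python. It does not use UVM, OSVVM, or UVVM, and the transaction fields, constraints, and weights are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class BusTransaction:
    """Hypothetical bus transaction used only for illustration."""
    address: int
    burst_len: int
    is_write: bool


def random_transaction(rng):
    """Draw one transaction that satisfies the constraints below."""
    # Constraint: word-aligned address inside a 64 KiB window.
    address = rng.randrange(0, 0x10000, 4)
    # Constraint: burst length from a legal set, weighted toward short bursts.
    burst_len = rng.choices([1, 2, 4, 8, 16], weights=[40, 25, 20, 10, 5])[0]
    # Constraint: roughly 70% writes, 30% reads.
    is_write = rng.random() < 0.7
    return BusTransaction(address, burst_len, is_write)


def generate_stimulus(seed, count):
    """Return a reproducible list of constrained-random transactions."""
    rng = random.Random(seed)  # a fixed seed makes a failing run repeatable
    return [random_transaction(rng) for _ in range(count)]


stimulus = generate_stimulus(seed=2022, count=5)
for txn in stimulus:
    print(txn)
```

Seeding the generator is the key design choice here: the stimulus is random across seeds, yet any single run can be replayed exactly when a failure needs to be debugged.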

FPGA Assertion Language and Library Adoption Trends

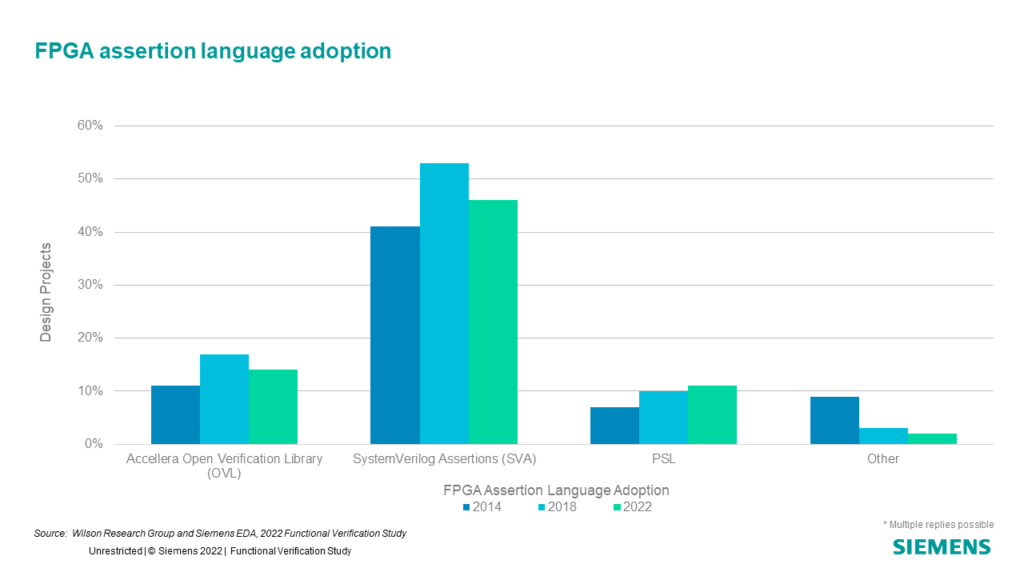

Finally, FPGA project adoption trends for various assertion language and library standards are shown in fig. 6-4. Although there is some lumpiness in the data due to shifting regional participation, SystemVerilog Assertions (SVA) is the predominant assertion language in use today. As with the languages used to build testbenches, it is not unusual to find that FPGA projects create their RTL in VHDL and then create their assertions using SVA.

In my next blog, I will shift the focus from FPGA trends and start to present the IC/ASIC findings from the 2022 Wilson Research Group Functional Verification Study.

Quick links to the 2022 Wilson Research Group Study results

- Prologue: The 2022 Wilson Research Group Functional Verification Study

- Part 1 – FPGA Design Trends

- Part 2 – FPGA Verification Effectiveness Trends

- Part 3 – FPGA Verification Effort Trends

- Part 4 – FPGA Verification Effort Trends (Continued)

- Part 5 – FPGA Verification Technology Adoption Trends

- Part 6 – FPGA Verification Language and Library Adoption Trends

- Part 7 – IC/ASIC Design Trends

- Part 8 – IC/ASIC Resource Trends

- Part 9 – IC/ASIC Verification Technology Adoption Trends

- Part 10 – IC/ASIC Language and Library Adoption Trends

- Part 11 – IC/ASIC Power Management Trends

- Part 12 – IC/ASIC Verification Results Trends

- Conclusion: The 2022 Wilson Research Group Functional Verification Study

- Epilogue: The 2022 Wilson Research Group Functional Verification Study

Comments

Hi, Harry – do you have regional visibility for Figure 6-1? I would guess that Europe is stronger on VHDL FPGA design and the rest of the world might be stronger on SystemVerilog FPGA design, but that is only my guess. Do you have better data? Great industry information as always! Regards – Cliff Cummings