Unleashing the Power of Verification Data with Machine Learning

The gap

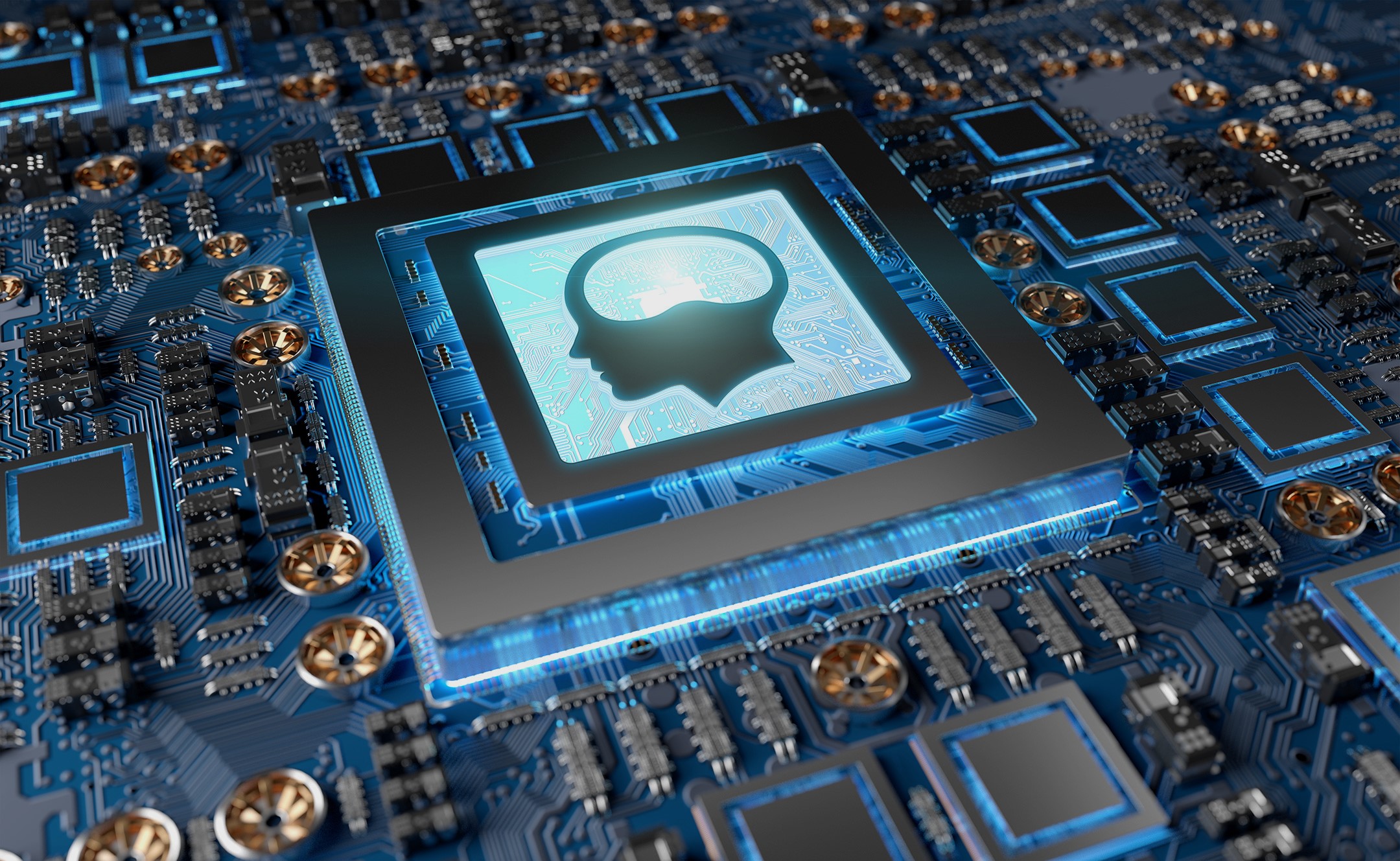

Recent chip developments have started showing signs of outpacing Moore’s law. The largest chip ever produced is an AI chip with 2.6 trillion transistors. The figure above shows the Cerebras WSE2 on Moore’s curve, along with other prominent chips, their debut years, and their transistor counts. Note that the vertical axis uses a logarithmic scale: WSE2 has 22x more transistors than the closest CPU/GPU, Apple’s M1 Ultra. It shows how fast these new chips are supercharging Moore’s law.

The gap between IC design complexity and verification productivity is widening. People closely tracking the industry have noticed this worrying trend, which is documented in the latest Wilson Research Group Functional Verification Study. According to the survey, despite the industry’s effort to close the gap by increasing investment in verification, the first-spin silicon success rate is steadily dropping.

How can we make verification more effective and efficient? If history has taught us anything, it is that wisdom can be gained by looking at seemingly unrelated problems. People working on other complex systems started using data to improve their designs more than a century ago.

The century-old methodology

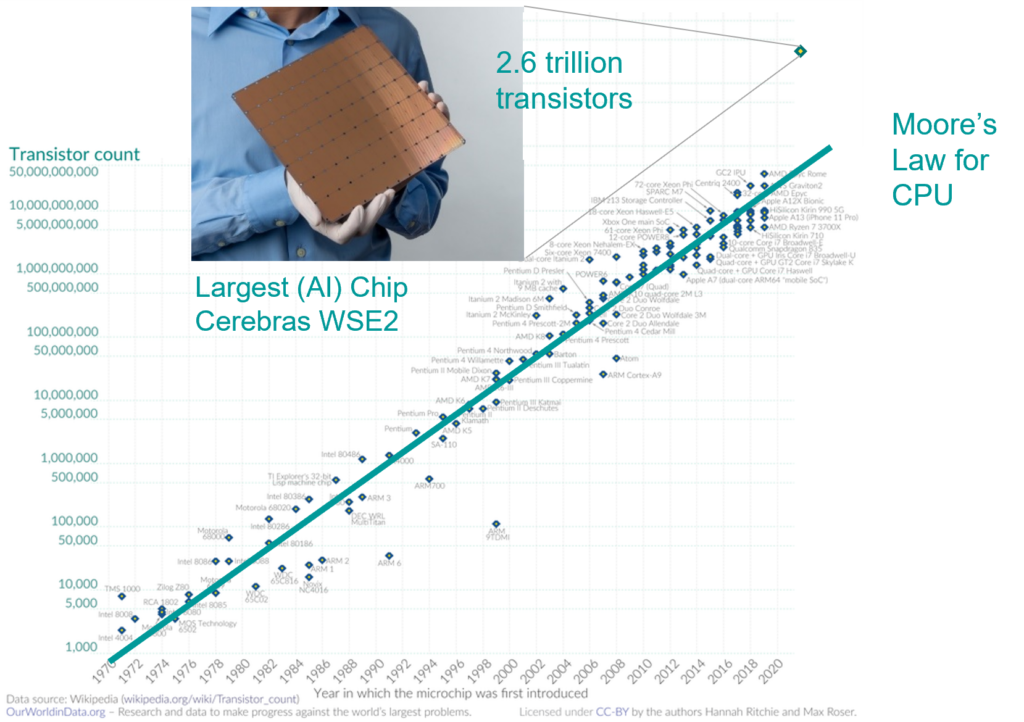

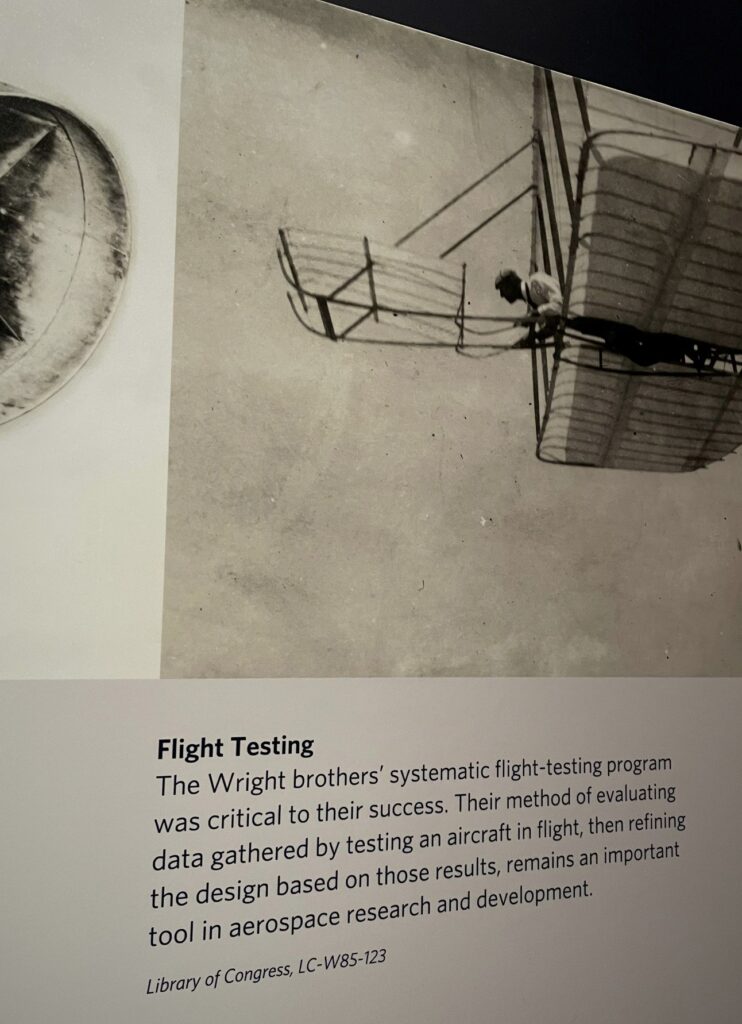

Many would attribute the Wright brothers’ success in flying powered, controlled airplanes to their innovative minds, knowledge, ingenuity, persistence, and even resources. While visiting the National Air and Space Museum last winter, I was drawn to the exhibit text describing their use of data.

The quality of my cellphone photo leaves much to be desired. However, the still-legible text accompanying this Library of Congress photo (call number LC-W85-123) makes it clear that the role of data in the Wright brothers’ success should never be discounted. If you replace “flight/aerospace” with familiar words from IC design and verification, the text still makes perfect sense today, even though this data-driven verification methodology was adopted more than 120 years ago.

Systematic verification is critical to an IC design’s success. The method of evaluating data gathered by testing an IC design, then refining the design based on the results, remains an important tool in IC design and development.

The Wright brothers were meticulous in their testing and data collection, using wind tunnels and balances to measure the lift, drag, and thrust of various wing shapes and airfoil designs. Using data to guide their design and testing process, they made rapid progress in their work. Of course, they did not have access to the more advanced data analytic theories and tools developed in the past 120 years. If they had, it’s safe to say that they would have iterated even faster with less trial and error in their design verification.

Verification must be data-centric

Complete verification of a chip is an NP-complete problem, and enormous amounts of data can be generated during the entire verification cycle of even a simple chip design. Given the complexity of modern chips, are we in a hopeless situation?

We at Siemens have been meeting with innovative customers and academic researchers eager to try advanced data analytics, especially machine learning (ML) algorithms, to improve verification speed, accuracy, and/or automation. Many have already experimented with AI/ML techniques on a range of verification problems and shared encouraging results. An overview of these results is given in a whitepaper titled Verification Data Analytics with Machine Learning.

Siemens shares this vision and believes ML is one of the most effective technologies for handling the enormous amounts of data involved in NP-complete problems and for delivering improvements to users of our verification tools, much as ML has been used to predict protein folding, another famous NP-complete problem.

Despite the promise of using ML to address verification problems, the verification community still needs to pay more attention to data. Proper implementation of AI/ML requires a change of mindset: recognizing the value of data and pivoting from the existing workflow to a data-centric one. ML can only be effective with sufficient, appropriate data.

However, most existing verification workflows are not data-centric. Many do not value the data generated during the verification cycle: the data are typically used for a single analysis, then shelved or discarded. Even worse, in these workflows data are scattered across different stages, owned by different users, stored on different computers, and represented in various formats, all of which makes effective data analytics almost impossible.

These verification data are precious assets for people in the know. Unlike other assets, data are of limited value in isolation but of tremendous value when connected. Data can be connected in multiple ways.

Horizontal data connection correlates different kinds of data with each other. For example, a list of phone numbers or the addresses of all houses in a city is barely interesting on its own. However, once each phone number is associated with a specific house address, the combined data would certainly get advertising companies’ attention. Similarly, vertical data connection correlates the same kind of data over time. A person’s current heart rate would barely interest anyone, but knowing how that heart rate varies over time may help a doctor gain insight into the person’s health problems.
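To make the two kinds of connection concrete in verification terms, here is a minimal Python sketch. It assumes hypothetical records (test names, pass/fail status, seeds, and functional-coverage percentages per test and per nightly regression run); none of these field names come from any Siemens tool. Joining test results with coverage on the test name is a horizontal connection, while grouping coverage by test across successive runs is a vertical one.

```python
from collections import defaultdict

# Hypothetical verification records; names and values are illustrative,
# not taken from any real tool or project.
test_results = [
    {"test": "t_axi_burst", "status": "FAIL", "seed": 17},
    {"test": "t_uart_loop", "status": "PASS", "seed": 42},
]
coverage = [
    {"test": "t_axi_burst", "cov_pct": 61.5},
    {"test": "t_uart_loop", "cov_pct": 88.0},
]


def connect_horizontal(results, cov):
    """Horizontal connection: join two different data sets on a shared key.

    Each test result is enriched with the coverage of the same test;
    tests with no coverage record get None.
    """
    cov_by_test = {row["test"]: row["cov_pct"] for row in cov}
    return [{**r, "cov_pct": cov_by_test.get(r["test"])} for r in results]


def connect_vertical(runs):
    """Vertical connection: line up the same metric over time.

    `runs` holds (run_id, test, cov_pct) tuples from successive regressions;
    the result maps each test to its coverage history ordered by run_id.
    """
    history = defaultdict(list)
    for run_id, test, cov_pct in sorted(runs):
        history[test].append((run_id, cov_pct))
    return dict(history)


if __name__ == "__main__":
    for row in connect_horizontal(test_results, coverage):
        print(row)
    nightly = [(2, "t_axi_burst", 63.0), (1, "t_axi_burst", 61.5), (1, "t_uart_loop", 88.0)]
    print(connect_vertical(nightly))
```

A real flow would pull such records from simulator logs, coverage databases, and regression reports rather than inline lists; the sketch only illustrates that the joined view and the time-ordered view each answer questions that no single isolated data source can.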

High-quality, well-connected mass data are crucial to the success of applying ML to verification. The success of ML on vision and language data can be directly attributed to the availability of such mass training data. With the data made available by ImageNet, researchers were able to develop many ML models for image classification that were previously impractical, and only 4 years after ImageNet, ML models surpassed human performance on the task. Fei-Fei Li, the founder of ImageNet, once described the importance of data:

“The paradigm shift of the ImageNet thinking is that while a lot of people are paying attention to models, let’s pay attention to data. Data will redefine how we think about models.”

For a verification team that wants to apply advanced ML techniques to bring more value to verification, focusing on building data assets is the path to ML success. With well-prepared verification data assets, ML can extract value by improving verification productivity, making verification faster, more accurate, and more automated.

Conclusions

Big data can significantly improve verification predictability and efficiency. The solution to closing the design and verification gap lies in the same methodology the Wright brothers adopted: data-driven verification of complex systems. A new whitepaper, Improving Verification Predictability and Efficiency using Big Data, describes Questa Verification IQ, a verification management solution intended to help our users gather and connect data throughout their verification workflow and reap the value of these incredibly important data assets.