Part 5: The 2010 Wilson Research Group Functional Verification Study

Effort Spent On Verification (Continued)

This blog continues a series of blogs that present the highlights from the 2010 Wilson Research Group Functional Verification Study.

In my previous blog, I focused on the controversial topic of effort spent in verification. This blog continues this discussion.

I stated in my previous blog that I don’t believe there is a simple answer to the question, “How much effort was spent on verification in your last project?” I believe that it is necessary to look at multiple data points to truly get a sense of the real effort involved in verification today. So, let’s look at a few additional findings from the study.

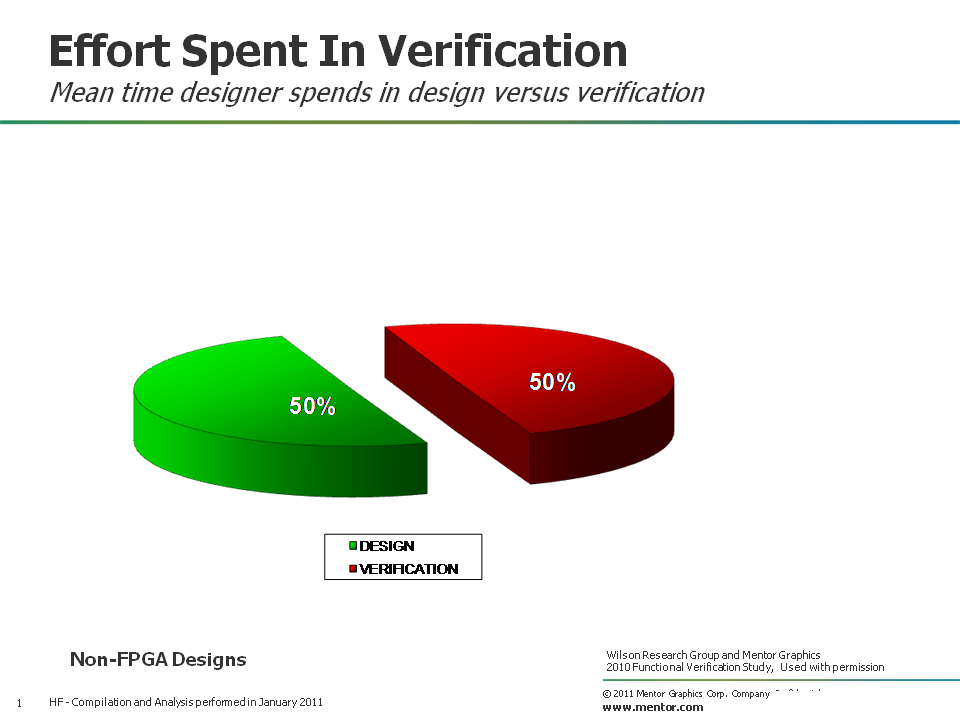

Time designers spend in verification

It’s important to note that verification engineers are not the only project members involved in functional verification. Design engineers spend a significant amount of their time in verification too, as shown in Figure 1.

Figure 1. Mean time a designer spends in design vs. verification

In fact, one finding from our study is that the mean time a design engineer spends in verification has increased from 46 percent in 2007 to 50 percent in 2010. The involvement of designers in verification covers a range of activities:

- Small sandbox testing to explore various aspects of the implementation

- Full functional testing of IP blocks and SoC integration

- Debugging verification problems identified by a separate verification team

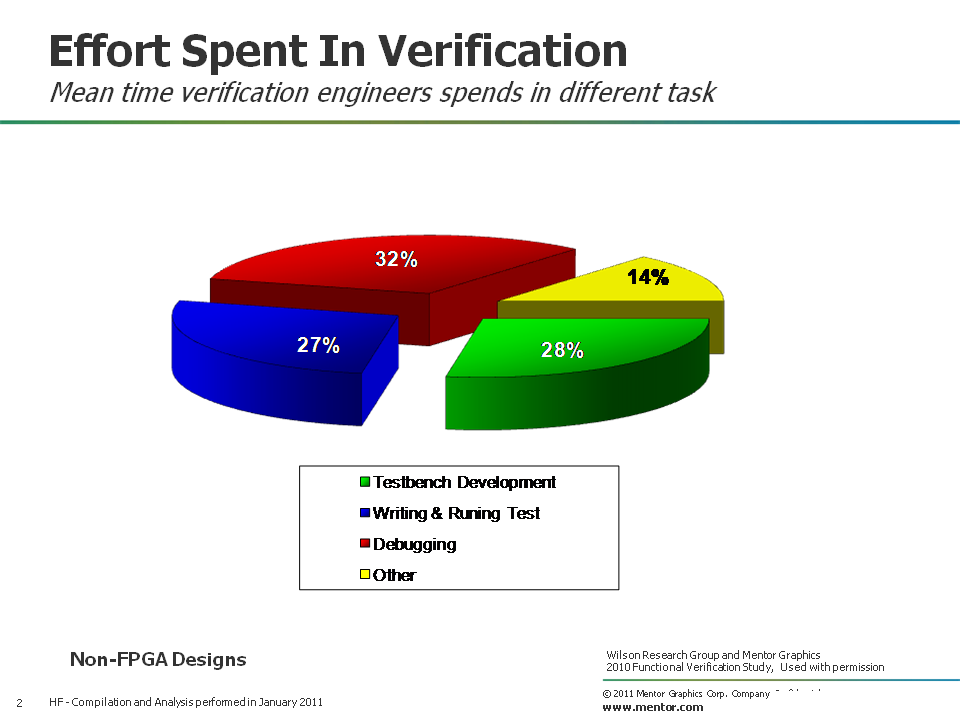

Percentage of time verification engineers spend on various tasks

Next, let’s look at the mean time verification engineers spend on various tasks related to their specific project. You might note that verification engineers spend most of their time in debugging. Ideally, if all the tasks were optimized, then you would expect this. Yet, unfortunately, the time spent in debugging can vary significantly from project to project, which presents scheduling challenges for managers during a project’s verification planning process.

Figure 2. Mean time verification engineers spend in different tasks

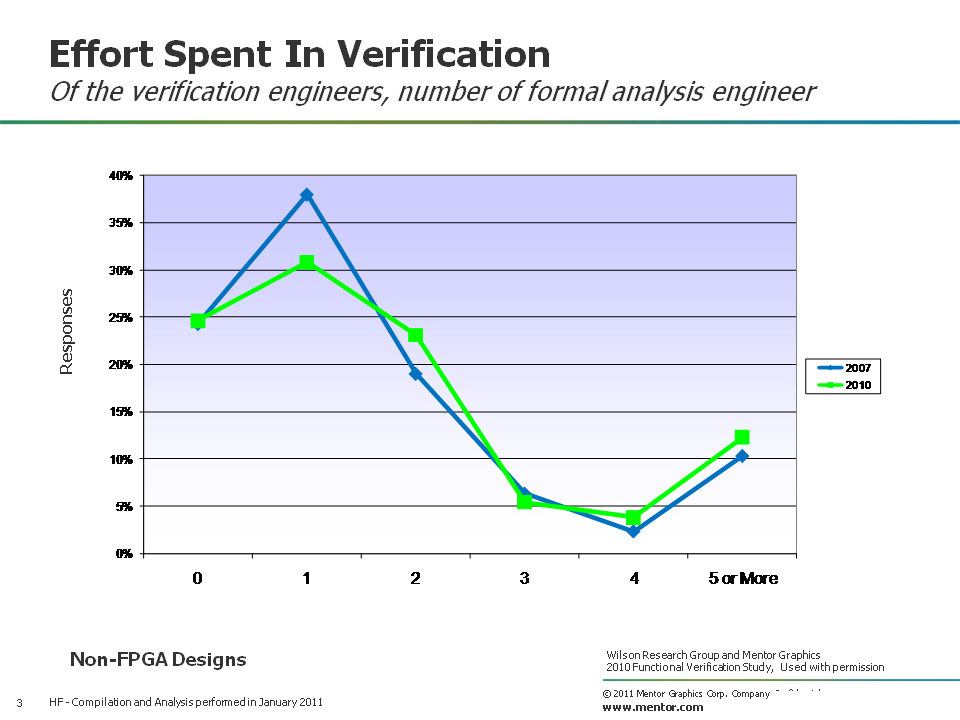

Number of formal analysis, FPGA prototyping, and emulation engineers

Functional verification is not limited to simulation-based techniques. Hence, it’s important to gather data related to other functional verification techniques, such as the number of verification engineers involved in formal analysis, FPGA prototyping, and emulation.

Figure 3 presents the trend in the number of verification engineers focused on formal analysis. In 2007, the median number of verification engineers focused on formal analysis on a project was 1.68, while in 2010 the median number increased to 1.84.

Figure 3. Median number of verification engineers focused on formal analysis

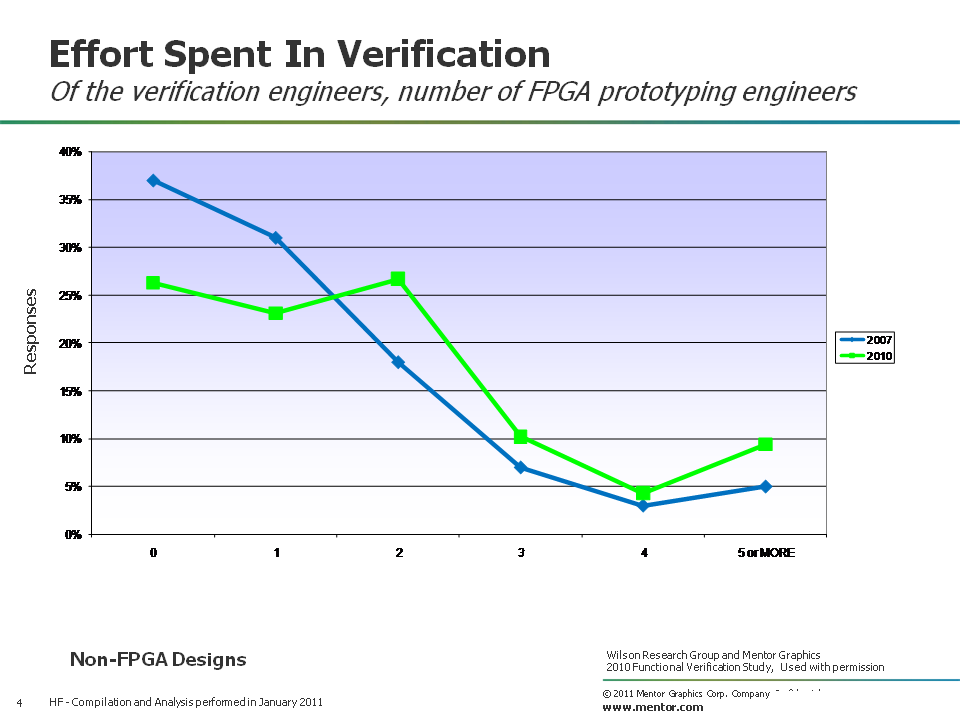

Figure 4 presents the trend in the number of verification engineers focused on FPGA prototyping. In 2007, the median number of verification engineers focused on FPGA prototyping on a project was 1.42, while in 2010 the median number increased to 2.04. Although FPGA prototyping is a common technique used to create platforms for software development, it can also be used for SoC integration verification and system validation.

Figure 4. Number of verification engineers focused on FPGA prototyping

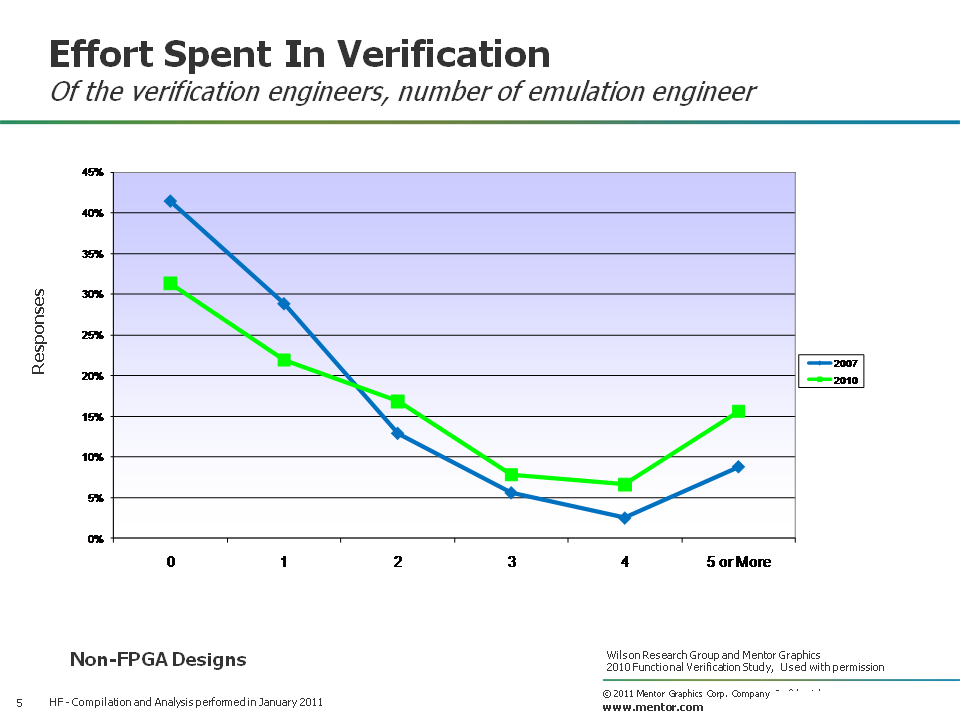

Figure 5 presents the trend in the number of verification engineers focused on hardware-assisted acceleration and emulation. In 2007, the median number of verification engineers focused on hardware-assisted acceleration and emulation on a project was 1.31, while in 2010 the median number increased to 1.86.

Figure 5. Number of verification engineers focused on emulation
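
To put the numbers quoted in this post side by side, here is a minimal Python sketch that computes the relative change between the 2007 and 2010 values. It uses only the figures stated in the text above; the dictionary layout and the `relative_change` helper are illustrative assumptions and are not part of the study itself.

```python
# Values quoted in this post as (2007, 2010) pairs.
# The grouping and labels are illustrative, not taken from the study.
reported = {
    "designer time in verification (%)": (46.0, 50.0),
    "formal analysis engineers": (1.68, 1.84),
    "FPGA prototyping engineers": (1.42, 2.04),
    "emulation/acceleration engineers": (1.31, 1.86),
}


def relative_change(old: float, new: float) -> float:
    """Return the percentage change going from old to new."""
    return (new - old) / old * 100.0


# Relative change for each reported metric.
changes = {name: relative_change(a, b) for name, (a, b) in reported.items()}

for name, pct in changes.items():
    print(f"{name}: {pct:+.1f}%")
```

Note that the designer figure is already a share of time, so the move from 46 to 50 percent is four percentage points, which is a smaller relative change than the growth in FPGA prototyping and emulation headcount. A comparison like this only describes the reported values; it does not by itself quantify total verification effort.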

A few more thoughts on verification effort

So, can I conclusively state that 70 percent of a project’s effort is spent in verification today? No. In fact, even after reviewing the data on different aspects of today’s verification process, I would still find it difficult to state quantitatively what the effort is. Yet, the data that I’ve presented so far seems to indicate that the effort (whatever it is) is increasing. And there is still additional data relevant to the verification effort discussion that I plan to present in upcoming blogs. However, in my next blog I will shift the discussion away from verification effort and focus on some of the 2010 Wilson Research Group findings related to testbench characteristics and simulation strategies.

Comments

Hi, Harry – are we going to see any verification language usage trends in future posts? Verilog, VHDL, eSpecman, Vera, SystemVerilog, eRM, VMM, AVM, URM, OVM, UVM?

Hi Cliff–yes I am still planning on presenting the language trends in a future post. Before I do that I need to discuss the trends in simulation and testbench strategies. I meant to get to it this past week, but work got in the way 😉 My plan is to release a new blog Monday morning. Thanks for your patience!