Supercharge your CFD simulations with GPUs – more hardware and more physics

The more things change, the more they stay the same

As an unintended consequence of trotting many miles of the Thames Path during marathon training, I discovered a piece of Siemens history right on my doorstep. The Siemens Brothers Co. Ltd. of Woolwich, London had a large site in the Woolwich Dockyard area of Southeast London. Even more interesting to me was that, in the 1920s, amongst many other things, this factory manufactured dry cell batteries! These batteries were predominantly used for radios and in cars.

Why do I find this so interesting? Well, if we fast forward 100 years, batteries in the automotive sector are a hot topic. Of course, not to power a radio or spark plug like those old Siemens Brothers’ batteries, but to propel the vehicle itself. And there is still at least one Siemens representative in the Royal Borough of Greenwich (and hundreds more worldwide) who wants to help!

Battery Design with Simcenter STAR-CCM+

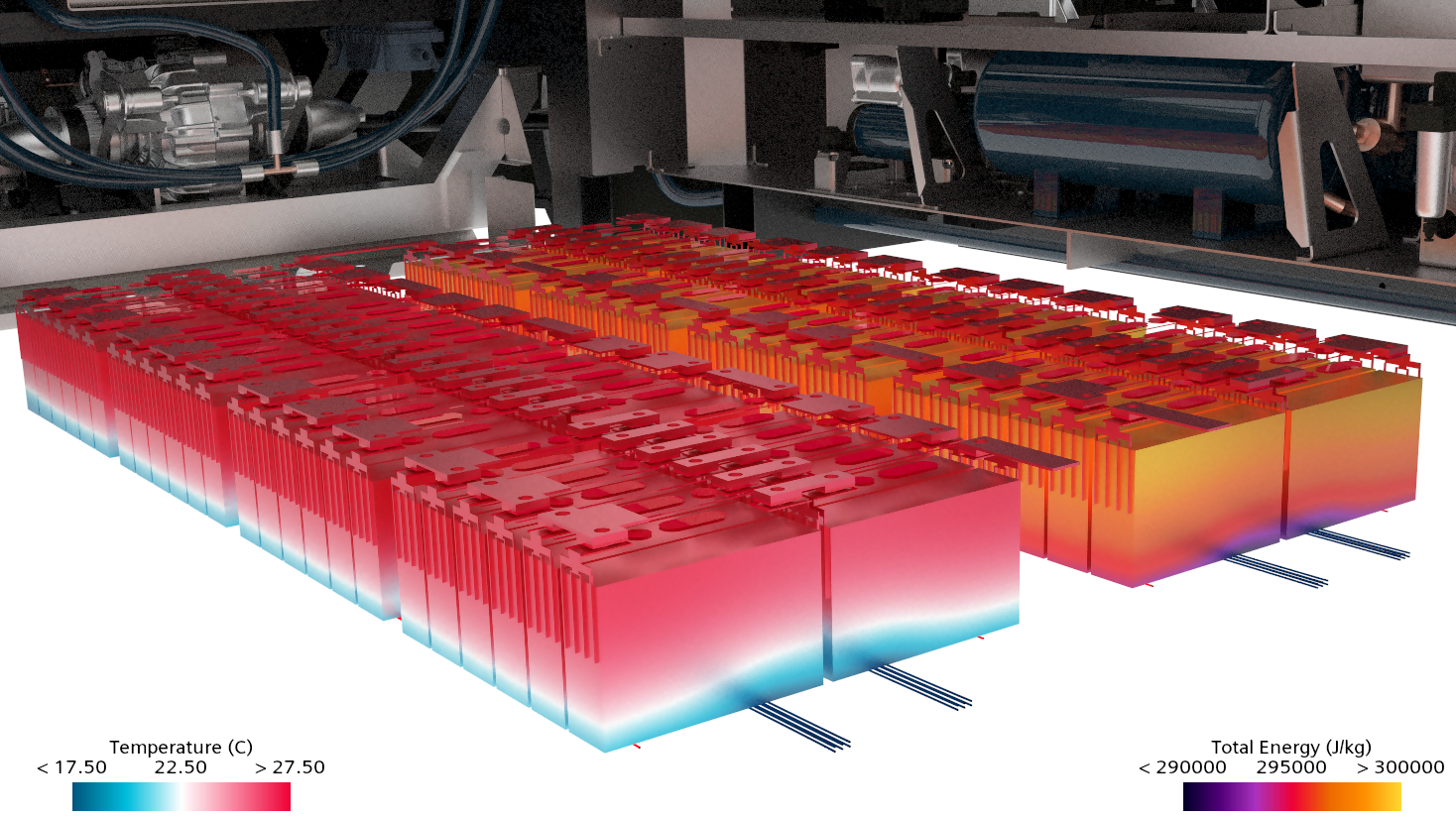

With Simcenter STAR-CCM+, designers of E-Powertrains can delve into detailed Cell Design, Thermal Runaway and Thermal Management of battery packs with a single tool, accelerating all aspects of electric vehicle powertrain design.

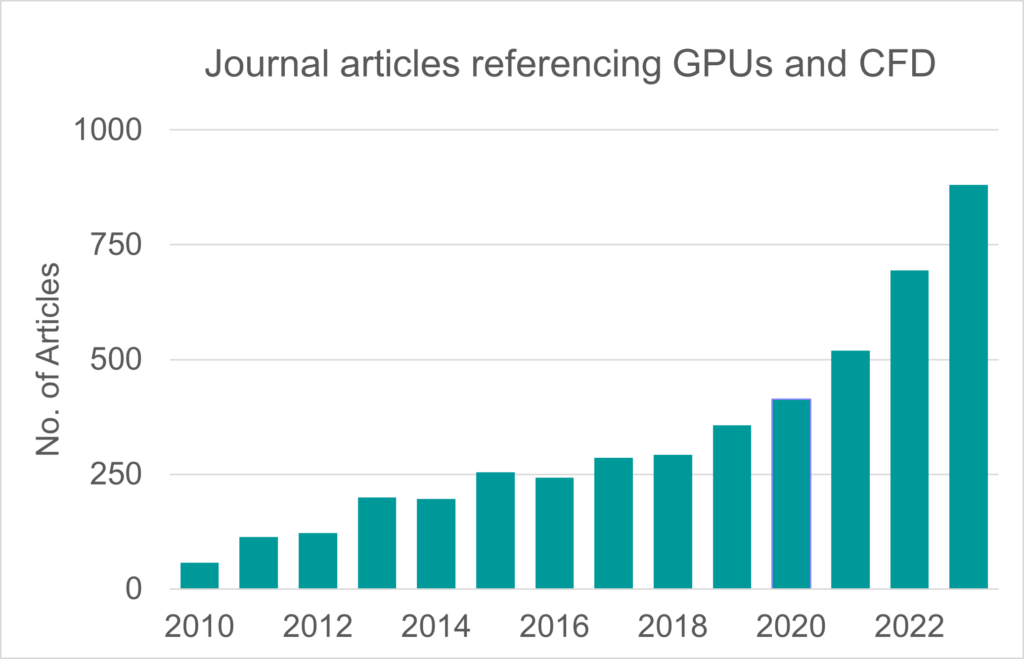

At the same time, the demands on engineers continue to rise, with the need to increase simulation throughput within tight timescales. GPU hardware is an increasingly important piece of the puzzle in achieving this goal. Out of interest, I looked at the number of publications referencing “GPU accelerated CFD” on ScienceDirect. Perhaps not a truly scientific approach, but the results indicate that interest in CFD simulations with GPUs is growing at an accelerating pace.

So, what’s new?

GPU and CFD – More hardware and more physics

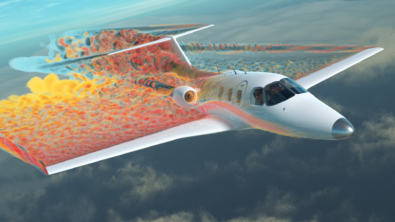

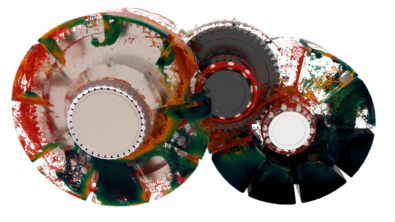

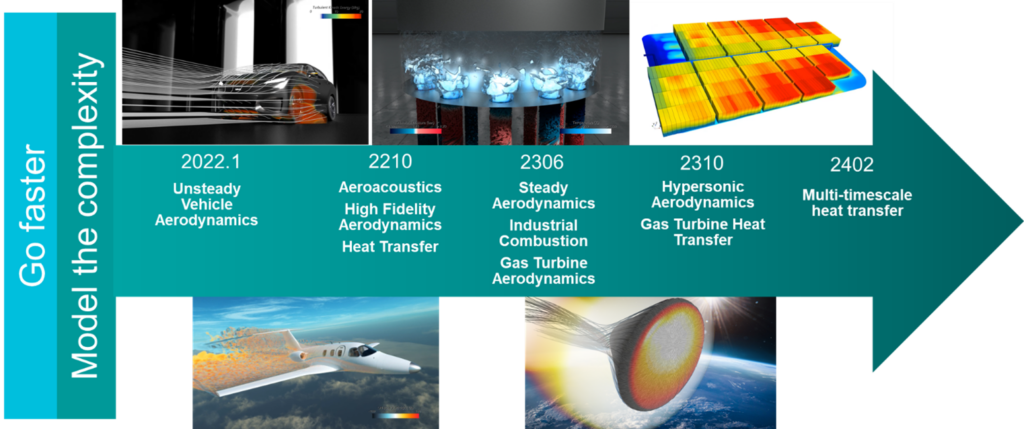

In Simcenter STAR-CCM+ 2402, we have taken further strides in both the range of GPU hardware that can be used and the physics that can be modelled natively on GPUs, extending into detailed thermal management! Here’s a refresher on some of the applications that can be run natively on GPUs with Simcenter STAR-CCM+:

Introducing AMD GPU functionality

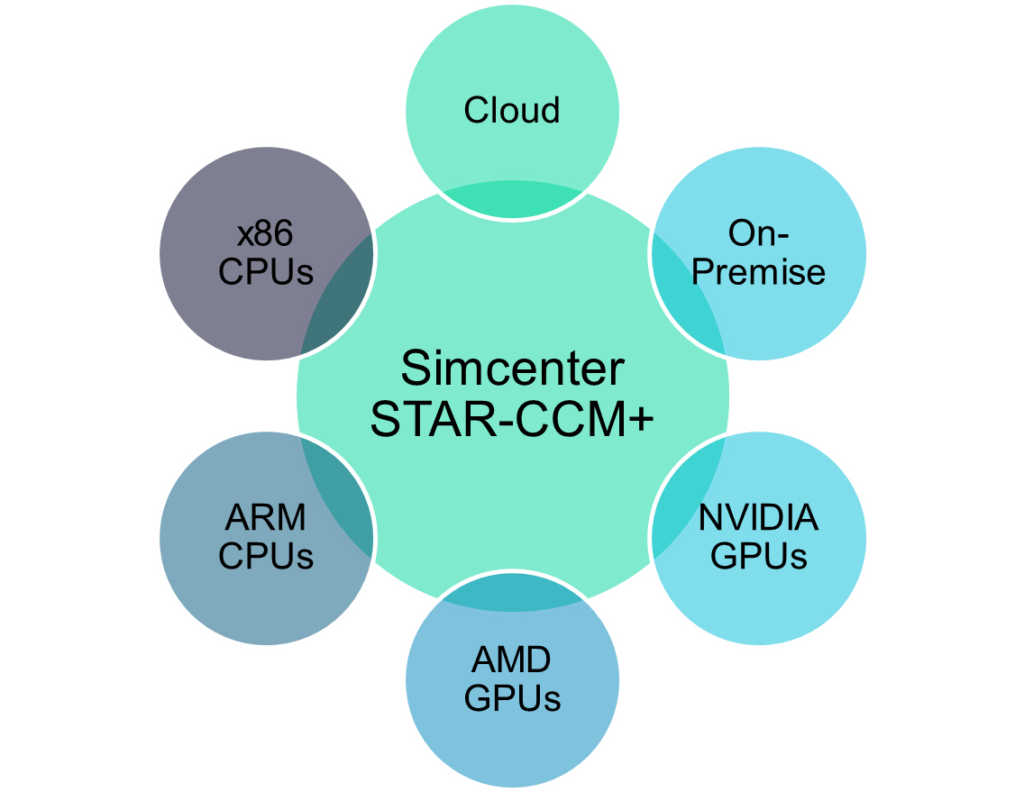

Let’s start with the hardware and head from Southeast London to Silicon Valley and the AMD headquarters. Why? Because Simcenter STAR-CCM+ 2402 now supports AMD GPUs for GPU-native computation! This represents a step change in broadening the range of GPU hardware that can be leveraged. In particular, we recommend the AMD Instinct™ MI200 series (MI210, MI250 and MI250X). The Instinct MI100, Radeon™ PRO W6800 and Radeon PRO V620 are also supported. With this introduction, Simcenter STAR-CCM+ is in a unique position as the only multi-purpose CFD code that can run on a full suite of CPUs and GPUs. I’ve spoken before about how GPU architecture is redefining traditional CFD design practices, and this is another step on that journey. We are excited to continue working with AMD to expand the hardware options even further in future releases (such as the Instinct MI300 series) and to keep optimizing the performance of CFD simulations on GPUs.

Thermal management CFD simulations with GPUs

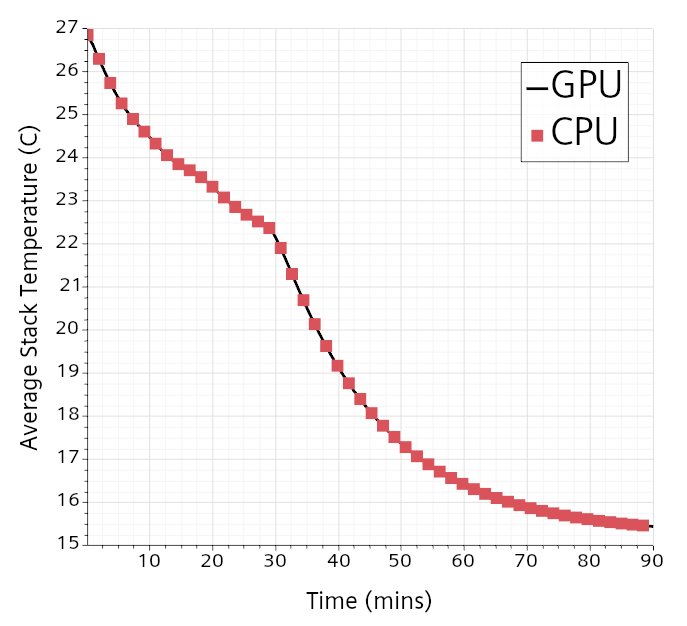

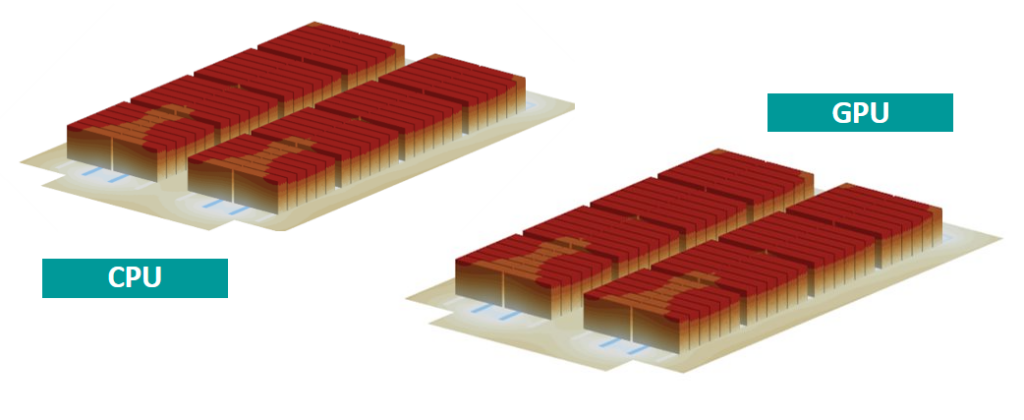

Armed with some AMD MI210 GPUs and my nugget of local Siemens history, I decided to simulate the thermal behavior of a battery pack using a Conjugate Heat Transfer (CHT) methodology. Luckily for me, a set of newly added GPU-native capabilities made this possible in Simcenter STAR-CCM+ 2402.

Multi-Component Solid model for CHT on GPUs

Firstly, the Multi-Component Solid model. This allows differing material properties to be easily applied to complex assemblies of different parts. In this example, I can easily define different materials for the positive and negative terminal connectors as well as the busbars under one physics continuum. Although we are only using two materials (Aluminium and Copper) in this battery example, full vehicle thermal simulations can have many more materials to specify, and having the multi-component solid capability native to GPUs is another step towards leveraging the speed, cost and performance benefits of GPUs compared to traditional CPU architectures.
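To make the idea of "many materials, one continuum" concrete, here is a minimal Python sketch of a part-to-material lookup. It is not the Simcenter STAR-CCM+ API: the part names and the aluminium/copper assignment are hypothetical, and the property values are approximate room-temperature figures for the pure metals, used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Material:
    name: str
    density: float        # kg/m^3
    specific_heat: float  # J/(kg K)
    conductivity: float   # W/(m K)


# Approximate room-temperature values for the pure metals (illustrative only)
ALUMINIUM = Material("Aluminium", density=2700.0, specific_heat=897.0, conductivity=237.0)
COPPER = Material("Copper", density=8960.0, specific_heat=385.0, conductivity=401.0)

# Hypothetical part names and assignments, all inside ONE solid continuum
PART_MATERIALS = {
    "positive_terminal": ALUMINIUM,
    "negative_terminal": COPPER,
    "busbar": COPPER,
}


def heat_capacity(part: str, volume_m3: float) -> float:
    """Lumped heat capacity rho * V * cp (J/K), using the part's own material."""
    m = PART_MATERIALS[part]
    return m.density * volume_m3 * m.specific_heat


def materials_in_continuum() -> list[str]:
    """Distinct materials the single continuum has to handle."""
    return sorted({m.name for m in PART_MATERIALS.values()})
```

In this sketch, adding another component with its own material is one more dictionary entry rather than a new continuum, and each part still responds thermally according to its own properties.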

Mapped Contact Interfaces for CHT on GPUs

The second GPU-native functionality added in Simcenter STAR-CCM+ 2402 is the ability to leverage Mapped Contact Interfaces (both Implicit and Explicit) in CFD simulations with GPUs. Again, this is a key enabler for modelling thermal management, especially when dealing with different timescales.

[As a reminder, multi-timescale refers to thermal analysis where different components or domains have significantly different response times. For modelling the thermal behaviour of the battery pack, it specifically refers to the difference in response times between the fluid / coolant channel (which reaches a steady state within milliseconds) and the solid components / battery stacks (which reach a steady state on the order of seconds).]
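One order-of-magnitude way to see this split uses standard lumped-capacitance reasoning (an illustration, not a formula taken from Simcenter STAR-CCM+). The solid's thermal time constant scales with its heat capacity divided by the convective conductance to the coolant, while the coolant's thermal field settles roughly within a flow-through time of the channel. Here $\rho_s$, $c_{p,s}$ and $V_s$ are the solid density, specific heat and volume, $h$ and $A_s$ the heat transfer coefficient and contact area, $L_c$ the channel length and $U$ the mean coolant velocity:

$$
\tau_{\text{solid}} \sim \frac{\rho_s\, c_{p,s}\, V_s}{h\, A_s},
\qquad
\tau_{\text{fluid}} \sim \frac{L_c}{U},
\qquad
\frac{\tau_{\text{solid}}}{\tau_{\text{fluid}}} \gg 1
$$

When this ratio is large, advancing both domains with the same small time step spends most of the effort on a fluid that has already settled.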

Using an Explicit Mapped Contact Interface, the heat transfer from the coolant channel is mapped onto the solid domains, while the heat generated by charging/discharging the battery is modelled as a volumetric heat source. By mapping back and forth between fluid and solid at fixed intervals, we can model how the coolant affects the temperature of the battery pack in a computationally efficient manner.
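The explicit exchange described above can be sketched with a toy lumped model in Python. This is a minimal sketch under stated assumptions, not how Simcenter STAR-CCM+ implements mapped contact interfaces: the whole pack is one lumped solid, the "fluid solve" is a steady coolant energy balance, and every parameter in `PackModel` is hypothetical. The solid is marched in time with a volumetric heat source, and the fluid is re-solved with the solid temperature frozen only every `steps_per_exchange` steps; in between, the solid sees a frozen coolant temperature.

```python
from dataclasses import dataclass


@dataclass
class PackModel:
    """Hypothetical lumped parameters, chosen for illustration only."""
    solid_heat_capacity_j_per_k: float = 20_000.0  # m * cp of cells and busbars
    heat_gen_w: float = 500.0                      # volumetric source integrated over the pack
    h_area_w_per_k: float = 50.0                   # h * A at the coolant/solid contact
    coolant_capacity_w_per_k: float = 175.0        # coolant mass flow * cp
    t_inlet_c: float = 25.0                        # coolant inlet temperature


def fluid_steady_state(model: PackModel, t_solid: float) -> float:
    """Steady coolant energy balance with the solid temperature held frozen.

    Returns the bulk (mean of inlet and outlet) coolant temperature that is
    mapped back onto the solid.
    """
    ha = model.h_area_w_per_k
    cap = model.coolant_capacity_w_per_k
    # Solve q = hA * (T_s - T_bulk) with T_bulk = T_in + q / (2 * cap)
    q = ha * (t_solid - model.t_inlet_c) / (1.0 + ha / (2.0 * cap))
    return model.t_inlet_c + q / (2.0 * cap)


def run_explicit_coupling(model: PackModel, t_end_s: float, dt_s: float,
                          steps_per_exchange: int) -> tuple[float, float]:
    """March the slow solid in time; refresh the fast fluid only every N steps."""
    t_solid = model.t_inlet_c
    t_fluid = model.t_inlet_c
    for step in range(int(round(t_end_s / dt_s))):
        if step % steps_per_exchange == 0:
            # Exchange point: the fluid sees the current (frozen) solid temperature
            t_fluid = fluid_steady_state(model, t_solid)
        # Between exchanges the solid sees a frozen coolant temperature
        q_cool = model.h_area_w_per_k * (t_solid - t_fluid)
        t_solid += dt_s * (model.heat_gen_w - q_cool) / model.solid_heat_capacity_j_per_k
    return t_solid, t_fluid


def steady_state_solid(model: PackModel) -> float:
    """Analytical fixed point of the coupled model, for checking the march."""
    t_bulk = model.t_inlet_c + model.heat_gen_w / (2.0 * model.coolant_capacity_w_per_k)
    return t_bulk + model.heat_gen_w / model.h_area_w_per_k


if __name__ == "__main__":
    pack = PackModel()
    t_s, t_f = run_explicit_coupling(pack, t_end_s=4000.0, dt_s=1.0, steps_per_exchange=10)
    print(f"solid {t_s:.2f} C, coolant {t_f:.2f} C, steady {steady_state_solid(pack):.2f} C")
```

Because the exchange only lags the coupling, the march settles to the same fixed point as a fully coupled solve; the interval trades accuracy during fast transients for fewer fluid solves.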

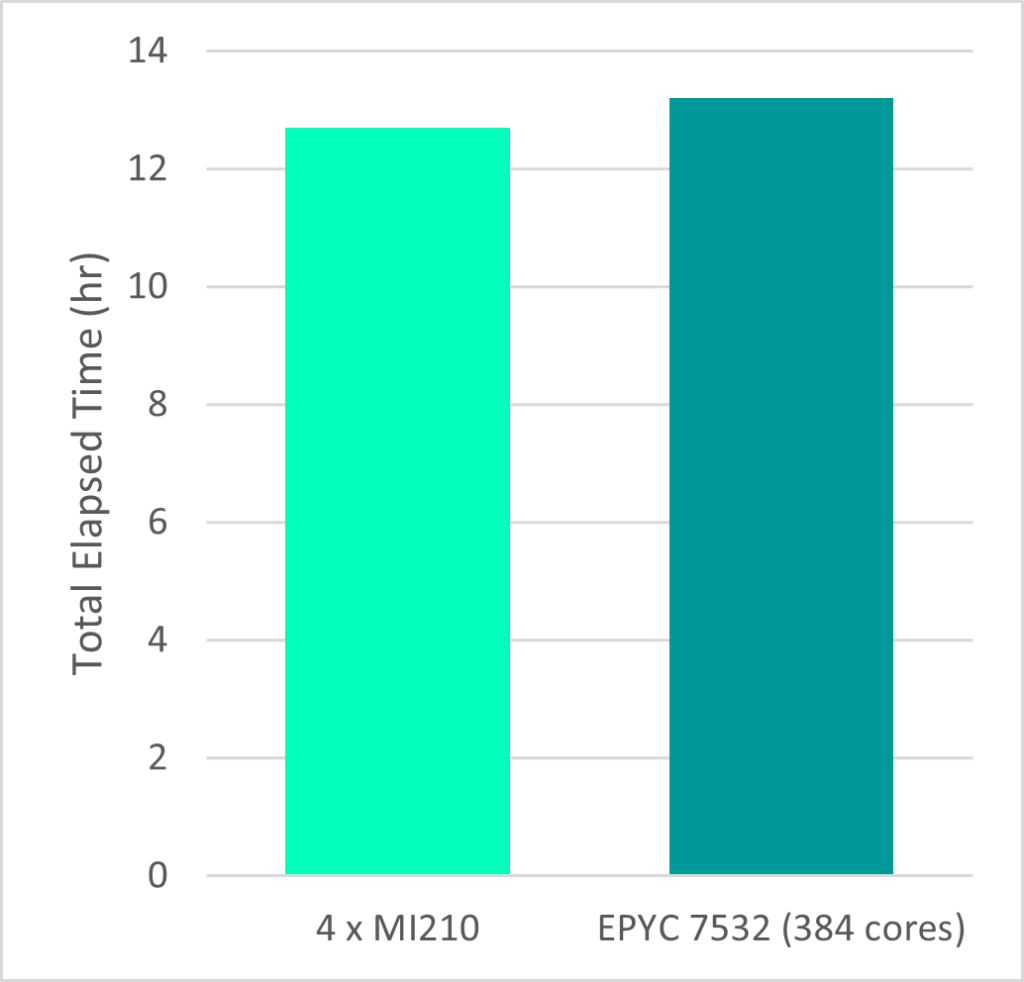

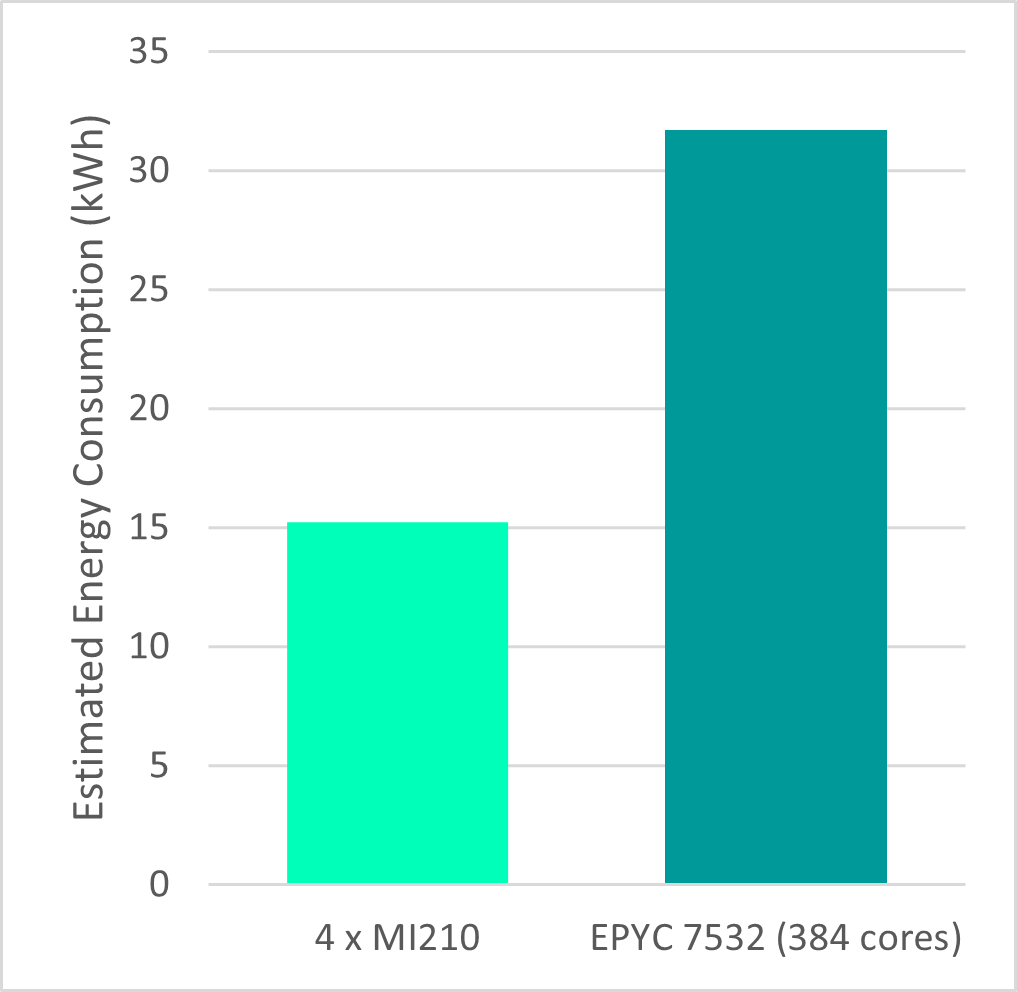

Performance assessment AMD GPU vs AMD CPU

Comparing AMD MI210 GPUs against AMD EPYC 7532 CPUs, we can see that the GPUs deliver performance equivalent to hundreds of CPU cores. Additionally, estimating the energy consumption of each configuration (using listed TDP values) shows that GPU hardware can significantly reduce the energy overhead of running these simulations.
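Both comparisons reduce to simple arithmetic, sketched below in Python under stated assumptions. The "core equivalent" assumes ideal linear CPU scaling, and the energy estimate is device count × listed TDP × wall time, which ignores host CPUs, memory, interconnect and cooling. All inputs in the usage section are placeholders, not the benchmark data behind the article.

```python
def core_equivalent(cpu_cores: int, cpu_wall_s: float, gpu_wall_s: float) -> float:
    """CPU cores needed to match the GPU wall time, assuming ideal linear CPU scaling."""
    return cpu_cores * cpu_wall_s / gpu_wall_s


def energy_kwh(n_devices: int, tdp_w: float, wall_s: float) -> float:
    """Rough energy estimate: device count x listed TDP x wall time, in kWh."""
    return n_devices * tdp_w * wall_s / 3.6e6  # 1 kWh = 3.6e6 J


if __name__ == "__main__":
    # Placeholder inputs for illustration only, NOT the benchmark behind the article.
    # Substitute your own core counts, wall times and the listed TDPs of your hardware.
    cpu_cores, cpu_sockets, cpu_tdp_w, cpu_wall_s = 128, 4, 200.0, 36_000.0
    n_gpus, gpu_tdp_w, gpu_wall_s = 4, 300.0, 7_200.0

    print(f"GPU run ~ {core_equivalent(cpu_cores, cpu_wall_s, gpu_wall_s):.0f} CPU cores")
    e_cpu = energy_kwh(cpu_sockets, cpu_tdp_w, cpu_wall_s)
    e_gpu = energy_kwh(n_gpus, gpu_tdp_w, gpu_wall_s)
    print(f"CPU {e_cpu:.1f} kWh, GPU {e_gpu:.1f} kWh, saved {e_cpu - e_gpu:.1f} kWh")
```

Since real CPU scaling is rarely perfectly linear, the core-equivalent figure from this formula is a conservative reading of the GPU advantage rather than an exact one.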

As a reference, the 16 kWh saved here could propel a battery electric vehicle (BEV) 80 km on average, and the energy savings after a year would be enough to power your BEV around the globe with energy to spare [1]!
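The comparison can be checked with a few lines of back-of-envelope arithmetic. The only figure not taken from the article is Earth's approximate equatorial circumference of 40,075 km. The quoted numbers imply an average consumption of about 0.2 kWh/km (20 kWh per 100 km), and a lap of the equator corresponds to roughly 500 runs with this saving over the year.

```python
import math

# Figures quoted in the article
SAVED_KWH = 16.0   # energy saved in the comparison
RANGE_KM = 80.0    # average BEV distance on that energy

# Earth's equatorial circumference (approximate)
EQUATOR_KM = 40_075.0

# Implied average consumption: 16 kWh / 80 km = 0.2 kWh/km
consumption_kwh_per_km = SAVED_KWH / RANGE_KM

# Number of runs with this saving needed to cover one lap of the equator
runs_per_lap = math.ceil(EQUATOR_KM / RANGE_KM)

print(f"{consumption_kwh_per_km:.2f} kWh/km, {runs_per_lap} runs per lap")
```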

While these numbers indicate the opportunities that running CFD simulations on GPUs offers, we recognize that there is no silver bullet for running your CFD simulations. That being said, HPC clusters with capable CPUs will continue to have their place and will remain an important computational powerhouse. Our strategy is therefore to support a wide range of compute hardware while ensuring a seamless transition between them. This includes the assurance of consistent results regardless of whether you run your job on GPUs or CPUs.

Supercharge your CFD simulations with GPUs

Just as the post-industrial landscape of Southeast London has changed over the years, so too has the landscape of HPC for Computer-Aided Engineering applications. Applying new hardware architectures to CFD offers the opportunity to truly supercharge your simulations: reducing turnaround time, running costs and hardware costs. Ultimately, this means more simulation throughput, more designs and better products. Now to ponder what engineering challenges Siemens will be working on 100 years from now… either way, I’m confident simulation will be firmly to the fore!

For the near future, all you need to do is keep your CFD-hungry (AMD) GPUs waiting a little longer… until the big feast begins when Simcenter STAR-CCM+ 2402 arrives on February 28.