Accelerate multiphase CFD with GPU-native Volume of Fluid (VOF) and Mixture Multiphase (MMP) solvers

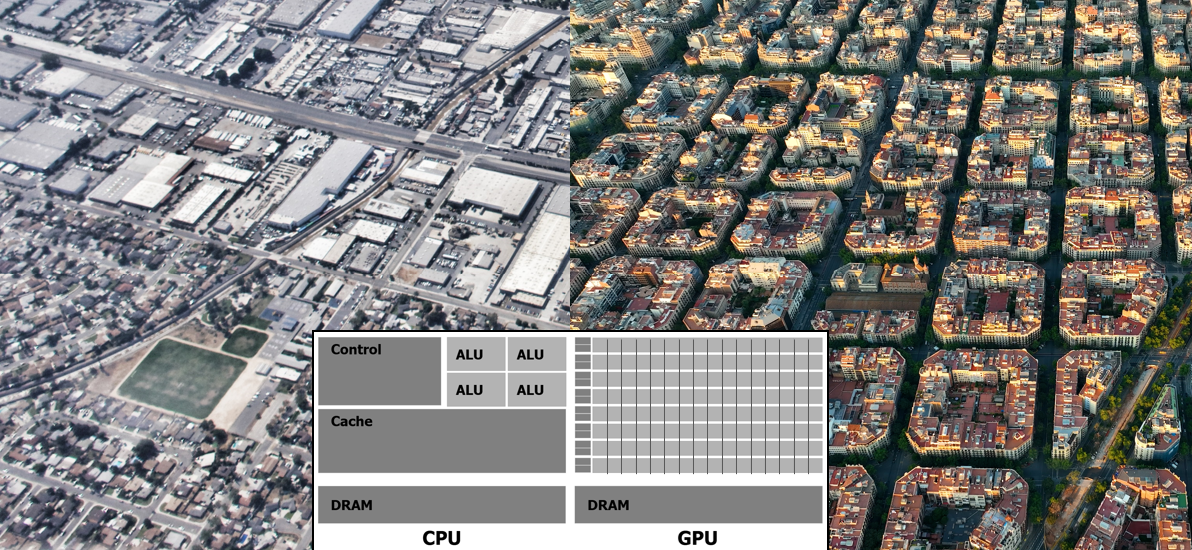

Last summer I paid a brief visit to Barcelona. It was my first time there since 2004; somehow I had forgotten about the place for more than 20 years. The first thing you notice as you come in to land is the way the streets are laid out: most of the old city is a dense grid of repeating square blocks. At street level you see the advantage of this layout. Each city block is almost identical but beautiful, with apartments, cafes, restaurants and shops, and is separated from its neighbors by tree-lined streets. Each block is so well catered for that there is little need to travel beyond its confines. Only when you need something out of the ordinary, such as the Sagrada Familia or the airport, do you need to go further.

The city planning of Barcelona may date back to the mid-nineteenth century, but it has a lot in common with modern Graphics Processing Units (GPUs). A GPU consists of thousands of identical cores, each designed to work in isolation on massively parallel tasks that can be subdivided so that every core works independently. This design differs from that of the traditional Central Processing Unit (CPU), which is laid out more like a US city: designed for flexibility, but with the assumption that you are going to need a car and some time to get where you are going! GPUs are designed to keep everything local to minimize the electron miles and latency.

Computational Fluid Dynamics (CFD) is well suited to GPU architecture because most of the work is local: each computational cell is updated using data from itself and its immediate neighbors, so there is little need to communicate with distant cells. Reducing the electron miles brings three main benefits: simulations run faster, the energy consumed per simulation is much lower, and the hardware footprint is much smaller.
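To make the idea of locality concrete, here is a minimal Python sketch of one explicit first-order upwind step for advecting a volume fraction on a periodic 1D grid. It is our own illustration, not the scheme used in Simcenter STAR-CCM+, and the function name is hypothetical. Each cell's new value depends only on itself and its upwind neighbor, which is exactly the kind of independent, per-cell work a GPU core can do without reaching across the mesh.

```python
import numpy as np

def upwind_advect(alpha, cfl):
    """One explicit first-order upwind step of d(alpha)/dt + u d(alpha)/dx = 0
    with u > 0 on a periodic 1D grid. Each cell reads only its own value and
    that of its left (upwind) neighbor."""
    if not 0.0 <= cfl <= 1.0:
        raise ValueError("explicit upwind is stable only for 0 <= CFL <= 1")
    upwind = np.roll(alpha, 1)  # upwind[i] == alpha[i - 1], wrapping periodically
    # New value is a convex blend of the cell and its upwind neighbor
    return alpha - cfl * (alpha - upwind)

alpha = np.array([0.0, 0.0, 1.0, 1.0, 0.0, 0.0])
print(upwind_advect(alpha, 0.5))
```

Because no cell needs data from beyond its neighbor, every cell could be handed to a different GPU thread and updated at the same time. First-order upwind smears the interface, so production VOF solvers use sharper interface-capturing schemes, but the locality argument is the same.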

Being able to run Simcenter STAR-CCM+ simulations on GPUs is nothing new, but in Simcenter STAR-CCM+ 2602 we take a giant leap forward by adding Volume of Fluid (VOF) and Mixture Multiphase (MMP) to the list of GPU-native solvers. The multiphase capability supported on GPU in this release is extensive, including phase change models such as evaporation, boiling and cavitation, acceleration techniques such as implicit multi-step, and multiple-regime support with MMP-LSI. Let’s look at some examples of the benefits that running on GPU can bring to a range of applications.

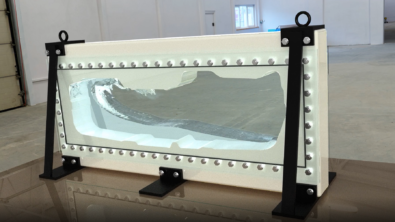

Run faster tank sloshing simulations

Solver: VOF

Mesh: 5.6M-cell uniform static trimmed mesh (Adaptive Mesh Refinement (AMR) is not yet compatible with GPU)

Timestep: 5e-4 s with Dynamic Sub-Stepping (target CFL 0.5)

Motion: Sinusoidal lateral motion

CPUs used: 192 CPU cores (AMD EPYC 7532)

GPU used: 1 NVIDIA RTX 6000 Ada
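Dynamic sub-stepping splits each time step into smaller volume fraction sub-steps when the Courant number (CFL) would otherwise exceed its target. The sketch below shows one generic way to choose the sub-step count; the function names and the example speed and cell size are hypothetical, and this is not the actual Simcenter STAR-CCM+ algorithm.

```python
import math

def courant_number(speed, dt, dx):
    """Cell Courant number CFL = |u| * dt / dx."""
    return abs(speed) * dt / dx

def substeps_for_target(max_cfl, target_cfl=0.5):
    """Smallest integer number of sub-steps n with max_cfl / n <= target_cfl
    (always at least one sub-step)."""
    return max(1, math.ceil(max_cfl / target_cfl))

# Hypothetical numbers: 2 m/s peak interface speed on 1 mm cells, dt = 5e-4 s
dt = 5e-4
cfl = courant_number(2.0, dt, 1e-3)
n = substeps_for_target(cfl, target_cfl=0.5)
print(f"CFL = {cfl:.2f}, sub-steps = {n}, sub-step size = {dt / n:.2e} s")
```

With these hypothetical values, a Courant number of 1.0 against a target of 0.5 gives two sub-steps of 2.5e-4 s each.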

Our first example is a tank sloshing case based on an automotive fuel tank. As engineers, we want to know how the center of gravity moves as the tank sloshes, because of the loads it transfers to the vehicle and their effect on vehicle stability and dynamics. Tank sloshing is also a concern in cryogenic applications, where boiling commonly occurs; boiling is also supported on GPU in this release.
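Tracking the liquid center of gravity from a VOF solution amounts to a volume-fraction-weighted average of cell centroids. Here is a minimal NumPy sketch; the function name is hypothetical, and it assumes constant liquid density and neglects the mass of the gas phase (our simplifications):

```python
import numpy as np

def liquid_center_of_gravity(alpha, volume, centroid):
    """Liquid center of gravity from VOF cell data.

    alpha:    (N,) liquid volume fraction per cell, in [0, 1]
    volume:   (N,) cell volumes
    centroid: (N, 3) cell-centroid coordinates
    Assumes a constant liquid density, so cell mass is proportional to alpha * volume.
    """
    weights = np.asarray(alpha) * np.asarray(volume)
    return (weights[:, None] * np.asarray(centroid)).sum(axis=0) / weights.sum()

# Four unit cells along x; the third is half full and the fourth is empty
alpha = np.array([1.0, 1.0, 0.5, 0.0])
volume = np.ones(4)
centroid = np.array([[0.0, 0, 0], [1.0, 0, 0], [2.0, 0, 0], [3.0, 0, 0]])
print(liquid_center_of_gravity(alpha, volume, centroid))
```

Evaluated at each time step, a calculation like this yields a center-of-gravity history that can be compared with measurements.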

In Simcenter STAR-CCM+ we use the same solver for the CPU and GPU versions, which means that identical results can be expected if cases are well converged. That is exactly what we see in our tank sloshing example: the free surface motion over time is almost identical between the two runs (as with real experiments, transient VOF cases are stochastic in nature, so no two runs will ever be identical down to every droplet). The center of gravity movement compares well with experiment in both the CPU and GPU runs.

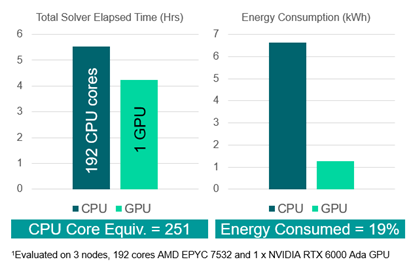

The benefit of running on GPU is seen most clearly when we compare runtimes. The single GPU was significantly faster than the 192 CPU cores. In fact, it would have taken 251 CPU cores to match the speed of the GPU, a metric known as CPU core equivalence.
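One way to read the CPU core equivalence metric, assuming the CPU run scales perfectly linearly with core count (an idealization we are introducing here), is the number of cores multiplied by the ratio of CPU to GPU runtime. The hypothetical helpers below compute it and invert it to recover the implied runtime ratio; normalizing per GPU for multi-GPU runs is also our assumption.

```python
def cpu_core_equivalence(cpu_cores, cpu_runtime, gpu_runtime, n_gpus=1):
    """CPU cores needed to match the GPU run, assuming ideal linear CPU scaling.
    Dividing by n_gpus expresses the result per GPU (an assumption)."""
    return cpu_cores * (cpu_runtime / gpu_runtime) / n_gpus

def implied_gpu_runtime_fraction(cpu_cores, equivalence, n_gpus=1):
    """GPU runtime as a fraction of CPU runtime implied by a core equivalence."""
    return cpu_cores / (equivalence * n_gpus)

# Tank sloshing: 192 cores, equivalence 251 -> GPU took about 76% of the CPU time
print(f"{implied_gpu_runtime_fraction(192, 251):.2f}")
# Propeller: GPU took about 70% of the CPU time on 160 cores -> about 229 cores
print(f"{cpu_core_equivalence(160, 1.0, 0.70):.0f}")
```

Under this idealization the propeller estimate of about 229 sits close to the reported 231, a gap consistent with that runtime being quoted as "about" 70%.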

When we compare energy usage, the benefit of the GPU is just as clear: it used only 19% of the energy of the CPU equivalent, reducing both the cost of running and the carbon footprint.
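Energy per simulation is average power draw multiplied by wall-clock time, so a GPU can come out ahead on energy even in cases where it is not faster. The sketch below shows the arithmetic; the function names are hypothetical and the power and runtime inputs are made-up placeholders, not measurements from the runs in this article.

```python
def energy_kwh(mean_power_w, runtime_s):
    """Energy of a run in kWh from average power draw (W) and wall-clock time (s)."""
    return mean_power_w * runtime_s / 3.6e6

def energy_ratio(gpu_power_w, gpu_runtime_s, cpu_power_w, cpu_runtime_s):
    """GPU-run energy as a fraction of CPU-run energy."""
    return energy_kwh(gpu_power_w, gpu_runtime_s) / energy_kwh(cpu_power_w, cpu_runtime_s)

# Hypothetical inputs only, not measured values
ratio = energy_ratio(gpu_power_w=500.0, gpu_runtime_s=3600.0,
                     cpu_power_w=2000.0, cpu_runtime_s=6000.0)
print(f"GPU energy = {ratio:.0%} of CPU energy")
```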

Speed up propeller cavitation simulations

Solver: VOF plus Schnerr-Sauer cavitation model

Mesh: 4.4M-cell static trimmed mesh (refined in the near-propeller region)

Timestep: 5e-6 s with 3 volume fraction sub-steps

Motion: MRF (Moving Reference Frame)

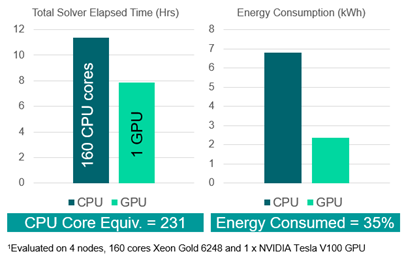

CPUs used: 160 CPU cores (Intel Xeon Gold 6248)

GPU used: 1 NVIDIA Tesla V100

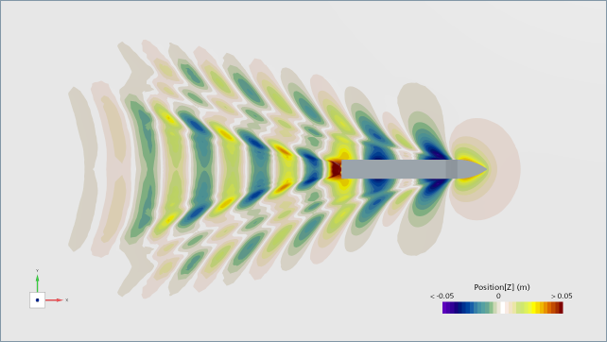

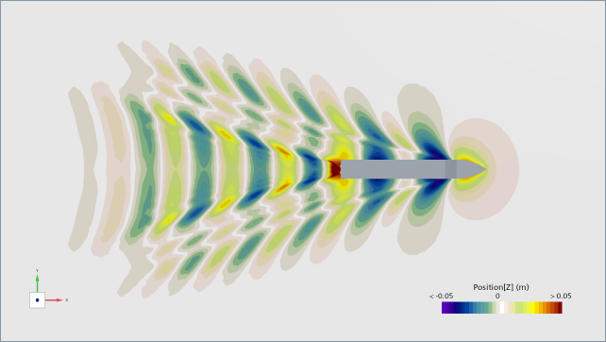

Our next example is a marine propeller operating at a condition where cavitation is expected. This gives us a chance to try out some of the comprehensive physics included alongside VOF on GPU in this release. In this case, we used the Schnerr-Sauer cavitation model, which predicts the growth and collapse of vapor bubbles caused by low pressure on the propeller surface. These bubbles coalesce into larger vapor pockets that fill the tip vortex and convect downstream, forming the classic helical pattern. As expected, the CPU and GPU results are essentially identical.
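For readers curious about what the Schnerr-Sauer model computes, here is a minimal sketch of its commonly published form, written from the textbook equations rather than from Simcenter STAR-CCM+ source code. The bubble radius R_B follows from the vapor volume fraction and an assumed bubble number density n0, and the net mass transfer rate follows from a simplified Rayleigh equation. The water-like properties and n0 in the example are illustrative assumptions, and production implementations typically also include nucleation seeds so that vapor can form in pure liquid, which this sketch omits.

```python
import math

def schnerr_sauer_rate(p, alpha_v, p_v, rho_l, rho_v, n0):
    """Net vapor mass transfer rate per unit volume [kg/(m^3 s)] from the
    Schnerr-Sauer model. Positive means evaporation (p < p_v), negative means
    condensation (p > p_v). alpha_v is the vapor volume fraction."""
    if alpha_v <= 0.0 or alpha_v >= 1.0:
        return 0.0  # no seeds in this simplified sketch, so no transfer in pure phases
    # Bubble radius from alpha_v = n0*(4/3)*pi*R^3 / (1 + n0*(4/3)*pi*R^3)
    r_b = (3.0 * alpha_v / (4.0 * math.pi * n0 * (1.0 - alpha_v))) ** (1.0 / 3.0)
    rho_mix = alpha_v * rho_v + (1.0 - alpha_v) * rho_l
    dp = p_v - p
    # Simplified Rayleigh bubble-wall velocity, carrying the sign of (p_v - p)
    bubble_velocity = math.copysign(math.sqrt(2.0 / 3.0 * abs(dp) / rho_l), dp)
    return (rho_v * rho_l / rho_mix) * alpha_v * (1.0 - alpha_v) * (3.0 / r_b) * bubble_velocity

# Illustrative water-like properties at about 20 C and an assumed n0
water = dict(p_v=2340.0, rho_l=998.0, rho_v=0.0173, n0=1e13)
print(schnerr_sauer_rate(1000.0, 0.1, **water))  # low pressure: evaporation, positive
print(schnerr_sauer_rate(1.0e5, 0.1, **water))   # high pressure: condensation, negative
```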

The single GPU completed the run in about 70% of the time taken by the 160 CPU cores, giving a CPU core equivalence of 231. As in the previous example, the energy consumed to complete the run is also much lower, with the GPU using just 35% of the energy used by the CPUs.

Accelerate marine resistance predictions: Kriso Container Ship (KCS)

Solver: VOF plus VOF waves

Mesh: 28M static trimmed mesh

Timestep: 0.02 s

Motion: None

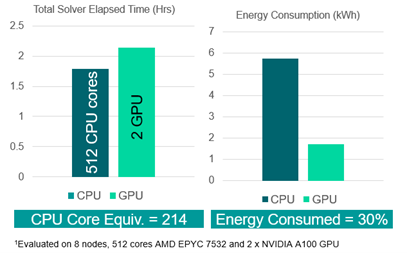

CPUs used: 512 CPU cores (AMD EPYC 7532)

GPU used: 2 NVIDIA RTX 6000 Ada

Staying with marine applications, our next simulation is a resistance calculation for the Kriso Container Ship (KCS) test case. Correctly predicting the drag in this case requires accurately capturing the free surface waves both around the vessel and downstream. The support for VOF waves in this release makes this simulation possible on GPU.
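Why are these waves demanding to resolve? The transverse waves in a ship's Kelvin wake travel at the ship speed U, so in deep water their wavelength is λ = 2πU²/g, or λ/L = 2πFr² in terms of the length-based Froude number Fr = U/√(gL). The sketch below turns that into a streamwise cell size for a chosen number of cells per wavelength. The function names, hull length, Froude number and the 80 cells per wavelength are hypothetical inputs, not the settings of the KCS case.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]

def froude_number(speed, length, g=G):
    """Length-based Froude number Fr = U / sqrt(g L)."""
    return speed / math.sqrt(g * length)

def transverse_wavelength(speed, g=G):
    """Wavelength of the deep-water wave travelling at the ship speed: 2*pi*U^2/g."""
    return 2.0 * math.pi * speed ** 2 / g

def free_surface_cell_size(wavelength, cells_per_wavelength):
    """Streamwise cell size that resolves the wave with the chosen cell count."""
    return wavelength / cells_per_wavelength

# Hypothetical hull: 100 m long at Fr = 0.25 (illustrative, not the KCS setup)
length = 100.0
speed = 0.25 * math.sqrt(G * length)
lam = transverse_wavelength(speed)
print(f"U = {speed:.2f} m/s, wavelength = {lam:.1f} m, "
      f"cell size = {free_surface_cell_size(lam, 80):.2f} m")
```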

Once again, the results from CPU and GPU are indistinguishable. In terms of runtime, the 2 GPUs were slightly slower than the 512 CPU cores, giving a CPU core equivalence of 214 per GPU. The energy consumption of the GPUs was only 30% of that of the CPU cluster.

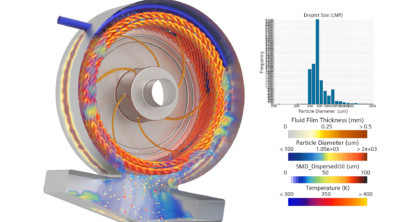

Run E-Motor cooling studies faster

Solver: MMP-LSI

Mesh: 4.16M static polyhedral mesh

Timestep: Adaptive timestep targeting max CFL of 2 with 10 sub-steps

Motion: Rigid Body Motion (with Metrics Based Intersector and PDE Wall Distance)

CPUs used: 160 CPU cores (Intel Xeon Gold 6248)

GPU used: 1 NVIDIA Tesla V100
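The e-motor case lets the time step adapt to a target maximum Courant number. Because the Courant number scales linearly with the time step, a simple controller rescales Δt by the ratio of target to current maximum CFL, usually with a cap on how quickly Δt may grow. The sketch below is a generic controller of that kind; the function name, growth limit and Δt bounds are hypothetical parameters, not Simcenter STAR-CCM+ settings.

```python
def adapt_time_step(dt, max_cfl, target_cfl=2.0, max_growth=1.2,
                    dt_min=1e-8, dt_max=1e-2):
    """Rescale dt so the maximum Courant number moves to target_cfl.

    Increases are limited to a factor of max_growth per step, decreases are
    applied immediately, and the result is clipped to [dt_min, dt_max]."""
    if max_cfl <= 0.0:
        # No flow information: grow cautiously
        return min(dt * max_growth, dt_max)
    factor = min(target_cfl / max_cfl, max_growth)
    return min(max(dt * factor, dt_min), dt_max)

print(adapt_time_step(1e-4, 4.0))  # CFL too high: dt is halved
print(adapt_time_step(1e-4, 1.0))  # CFL too low: growth capped at 1.2x
```

If the 10 sub-steps divide each time step evenly (an assumption about how the sub-steps are distributed), a time step running at CFL 2 corresponds to roughly CFL 0.2 per sub-step.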

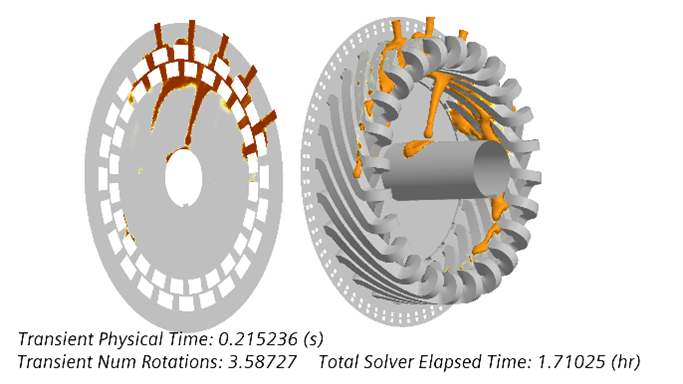

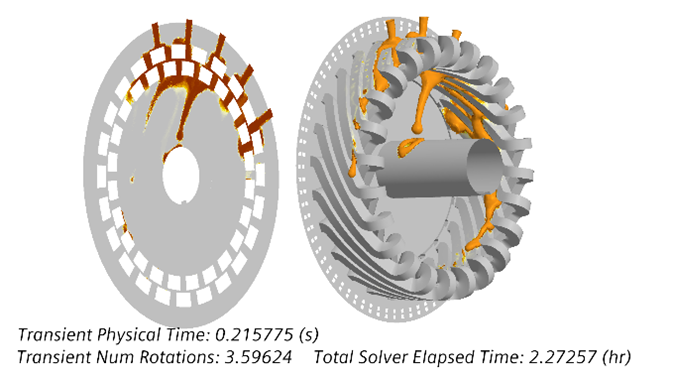

Our last example is an electric motor similar to those found in electric vehicles. These motors require cooling with a dielectric fluid (oil), which in this motor is injected from stationary inlets at the top of the machine onto the copper end-windings. Optimizing the cooling of an e-motor is key to maximizing its performance and efficiency. This simulation uses Mixture Multiphase (MMP) with Large Scale Interface (LSI) modeling, which allows resolved jets and dispersed mixtures of sub-grid droplets to coexist. The simulation also includes relative motion (Rigid Body Motion with sliding interfaces).

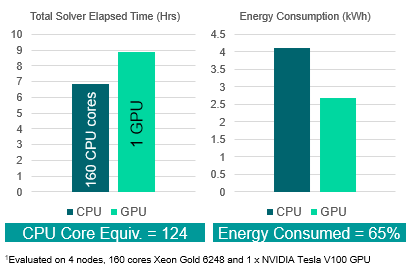

The results again show very good agreement between the CPU and GPU runs. In this example the single GPU was a bit slower than the 160 CPU cores, giving a CPU core equivalence of 124, and it used 65% of the energy consumed by the CPUs. These figures are not as good as in the other examples because the sliding mesh must be re-intersected every timestep, a non-local operation that is less well suited to GPU. Nevertheless, a single GPU doing the work of 124 CPU cores for less energy is still a very good result.

Multiphase CFD with GPU: a new world of fast, efficient multiphase simulation

These four examples are just the tip of the iceberg of what is possible for GPU-accelerated multiphase simulation in Simcenter STAR-CCM+ 2602, available from this February. Barcelona-style high-performance multiphase computing on hardware that would fit on your desk awaits! Vamos, amigos! (Let's go, friends!)