Part 11: The 2018 Wilson Research Group Functional Verification Study

ASIC/IC Low Power Trends

This blog post continues a series of posts on the 2018 Wilson Research Group Functional Verification Study. In my previous post, I presented our study findings on verification language and library adoption trends. In this post, I focus on low power trends.

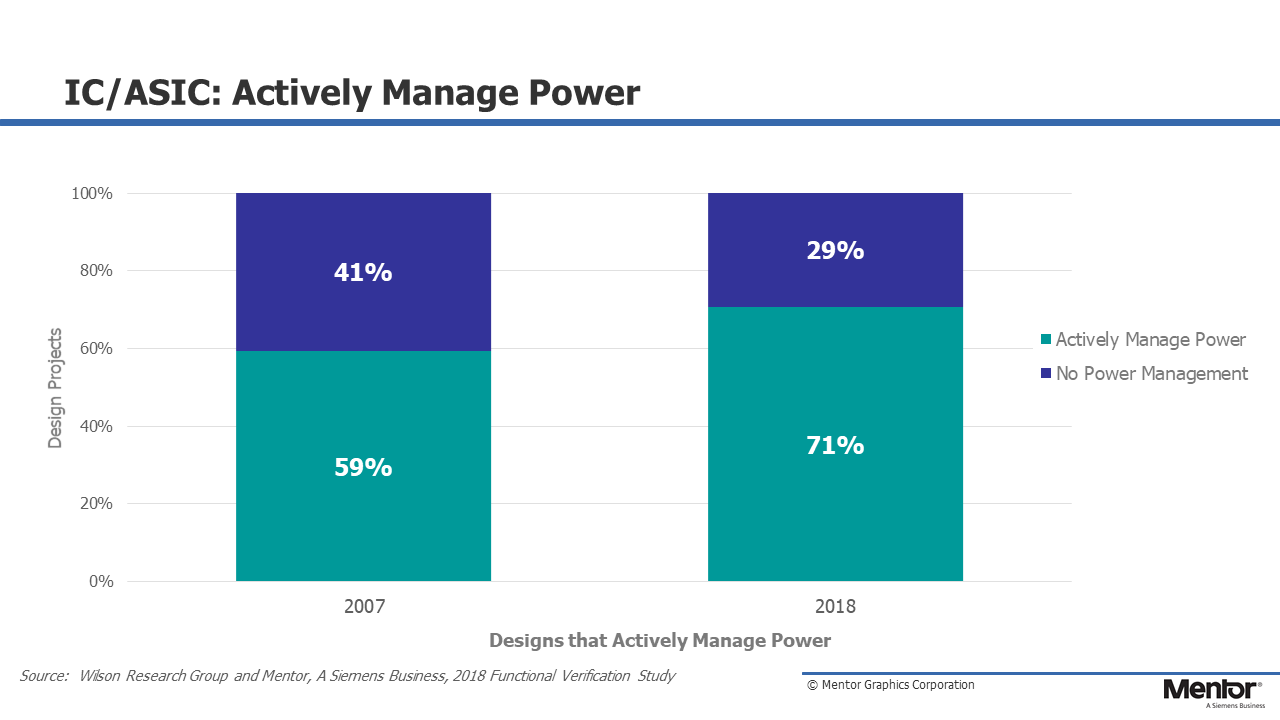

Figure 11-1 shows that about 71 percent of today's design projects actively manage power, using a wide variety of techniques that range from simple clock gating to complex hypervisor/OS-controlled power management schemes. This is essentially unchanged from our 2014 study.

Figure 11-1. ASIC/IC projects working on designs that actively manage power

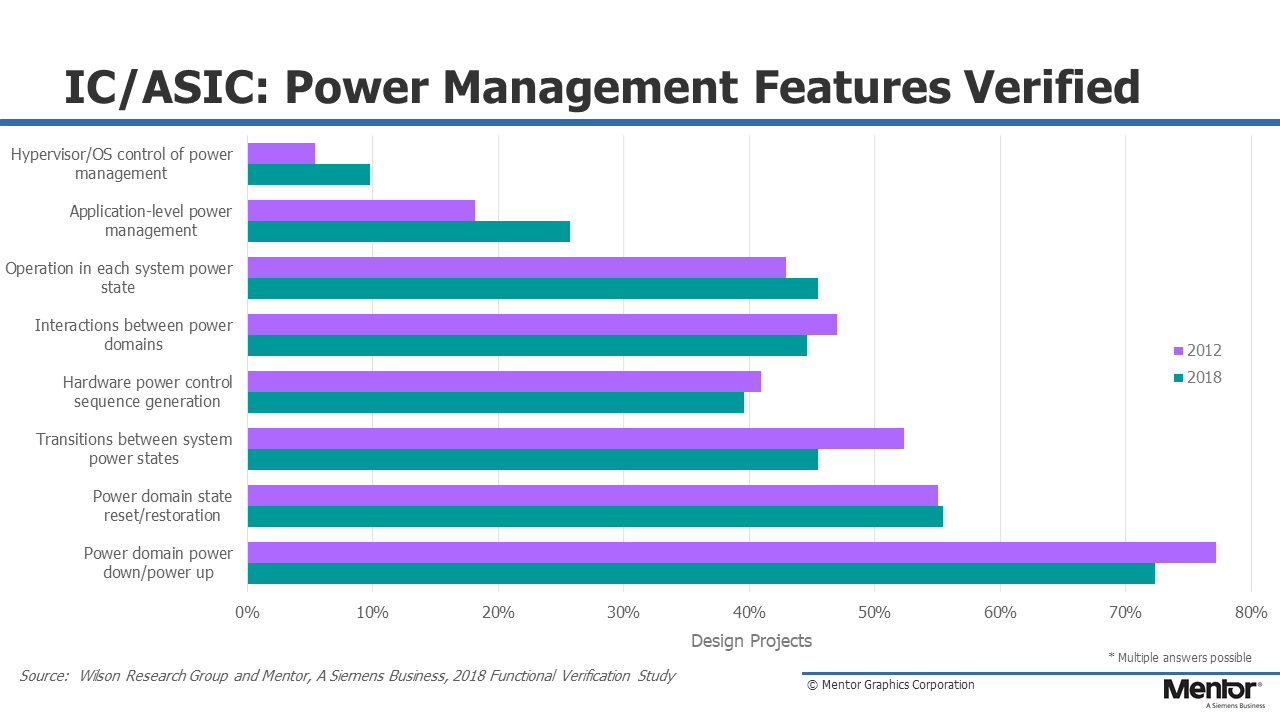

Figure 11-2 shows the aspects of power management that design projects must verify (for the 71 percent of projects that actively manage power). The data suggest that, since 2012, many projects have moved to more complex power management schemes that involve software control. This adds a new layer of complexity to a project's verification challenges, since these software-controlled schemes often require emulation to verify fully.

Figure 11-2. Aspects of power-managed design that are verified

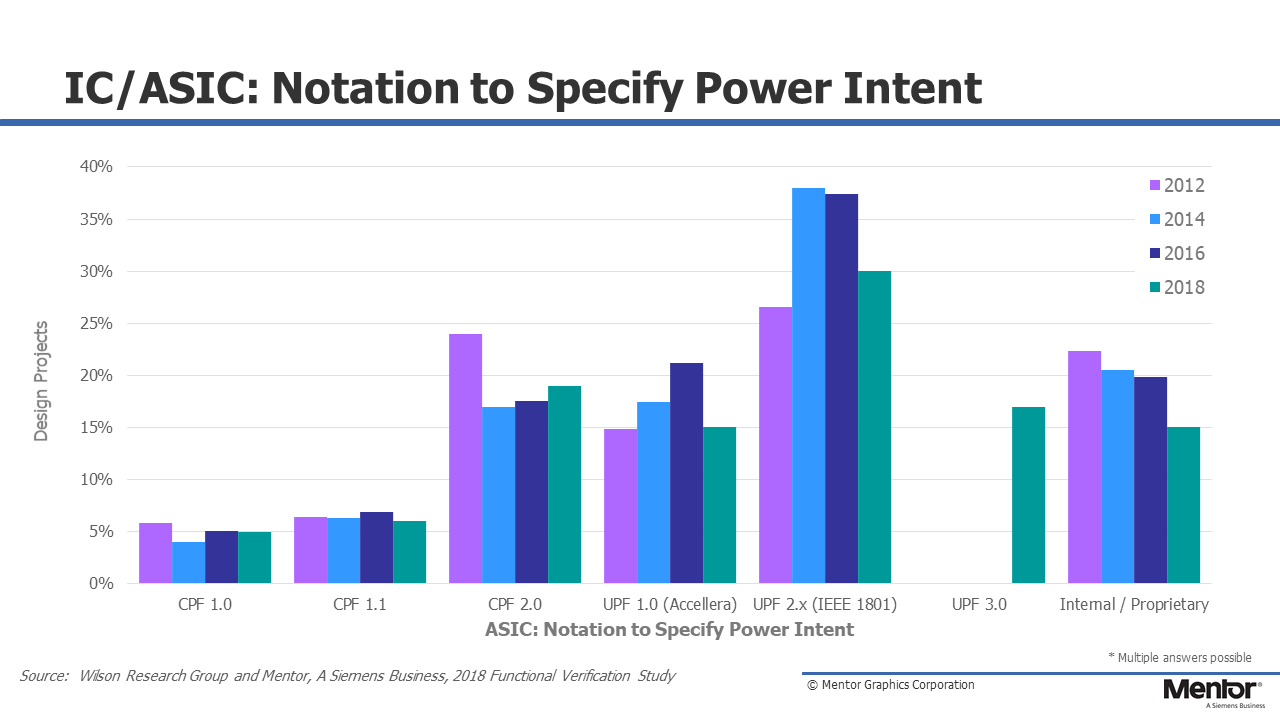

Because power intent cannot be described directly in an RTL model, alternative supporting notations have emerged to capture it. In the 2018 study, we wanted to gauge where the industry stands in adopting these notations. For projects that actively manage power, Figure 11-3 shows the adoption of the various standards used to describe power intent. Note that the 2018 study was the first time we tracked the new UPF 3.0 standard. Also, some projects actively use multiple standards (such as different versions of UPF, or a combination of CPF and UPF), which is why the adoption results do not sum to 100 percent.

Figure 11-3. Notation used to describe power intent

In an earlier post in this series, I provided data suggesting that a significant amount of effort is being applied to IC/ASIC functional verification. An important question these studies have tried to answer is whether this increasing effort is paying off. In my next post, I present our findings on verification results in terms of schedules, number of required spins, and classification of functional bugs.

Quick links to the 2018 Wilson Research Group Study results

- Prologue: The 2018 Wilson Research Group Functional Verification Study

- Understanding and Minimizing Study Bias (2018 Study)

- Part 1 – FPGA Design Trends

- Part 2 – FPGA Verification Effectiveness Trends

- Part 3 – FPGA Verification Effort Trends

- Part 4 – FPGA Verification Effort Trends (Continued)

- Part 5 – FPGA Verification Technology Adoption Trends

- Part 6 – FPGA Verification Language and Library Adoption Trends

- Part 7 – IC/ASIC Design Trends

- Part 8 – IC/ASIC Resource Trends

- Part 9 – IC/ASIC Verification Technology Adoption Trends

- Part 10 – IC/ASIC Language and Library Adoption Trends

- Part 11 – IC/ASIC Power Management Trends

- Part 12 – IC/ASIC Verification Results Trends

- Conclusion: The 2018 Wilson Research Group Functional Verification Study