What DFT history teaches us

By Stephen Pateras, Mentor Graphics

Two DFT-related rules for success are as true today as they were 30 years ago

Reading Dan Strassberg’s 1988 article “Pioneering engineers begin to adopt board-level automatic test generation” (PDF) made me realize that there are two DFT-related rules for success that are as true today as they were 30 years ago.

First, it’s important to implement the right amount of DFT. If you implement too little, your test cost will likely grow and your test coverage will fall. If you implement too much, you risk incurring unnecessary area overhead and a larger hit on the design schedule. As described in the article, it was difficult back then to get designers to implement full scan design or any other DFT, as the average ASIC size was only around 10,000 gates. The savings in test generation time and the improvement in test coverage over sequential test generation were not yet significant enough to justify the extra 10% area overhead needed for scan. This trade-off quickly changed thanks to Moore’s law. By the early ’90s, ASIC sizes had already grown by an order of magnitude, making scan design a necessity.

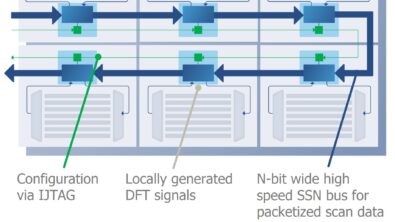

The next test paradigm came a decade later, when scan-based ATPG alone could no longer keep up with growing design sizes. ATPG compression was introduced in the early 2000s and originally provided around a 10× reduction in test pattern volume and test time. These dramatic results more than justified the decompressor and compactor logic that had to be added to the design.
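To see where a reduction of roughly 10× can come from, here is a back-of-the-envelope sketch. It assumes a simplified model in which test time is dominated by scan shifting and compression lets the same tester channels feed ten times as many, ten times shorter, internal chains. The `ScanSetup` class and all of the numbers are hypothetical, chosen only for illustration. The model also holds the pattern count constant and ignores capture cycles, which a real flow would not.

```python
from dataclasses import dataclass
from math import ceil


@dataclass(frozen=True)
class ScanSetup:
    """Hypothetical, simplified scan configuration (illustrative numbers only)."""
    scan_cells: int        # total scannable flip-flops in the design
    tester_channels: int   # scan-in channels available on the tester
    internal_chains: int   # scan chains inside the design
    patterns: int          # number of test patterns applied

    @property
    def chain_length(self) -> int:
        # Longest chain, assuming the cells are balanced across all chains.
        return ceil(self.scan_cells / self.internal_chains)

    @property
    def shift_cycles(self) -> int:
        # Each pattern needs one full load of the longest chain.
        return self.patterns * self.chain_length

    @property
    def stimulus_bits(self) -> int:
        # The tester stores one bit per channel per shift cycle.
        return self.shift_cycles * self.tester_channels


# Plain scan: every internal chain is wired directly to a tester channel.
plain = ScanSetup(scan_cells=100_000, tester_channels=8,
                  internal_chains=8, patterns=2_000)

# Compression: a decompressor fans 8 channels out to 80 short chains.
compressed = ScanSetup(scan_cells=100_000, tester_channels=8,
                       internal_chains=80, patterns=2_000)

time_ratio = plain.shift_cycles / compressed.shift_cycles
volume_ratio = plain.stimulus_bits / compressed.stimulus_bits

print(f"shift cycles:  {plain.shift_cycles:,} vs {compressed.shift_cycles:,}")
print(f"stimulus bits: {plain.stimulus_bits:,} vs {compressed.stimulus_bits:,}")
print(f"reduction:     {time_ratio:.0f}x time, {volume_ratio:.0f}x volume")
```

In this model the reduction simply equals the ratio of internal chains to tester channels. Actual results depend on how well the ATPG tool can encode each pattern through the decompressor.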

With today’s designs containing 100 million gates or more, a new test-generation paradigm is emerging. The relatively new hierarchical ATPG compression approach breaks the test generation problem into smaller, manageable pieces. Going hierarchical typically yields an order-of-magnitude reduction in both test generation time and the compute memory required.

The second DFT rule for success is that you should choose the right DFT solution for each application. For example, Strassberg explains in his article that there was a strong reluctance to apply scan testing to boards, because many non-scannable components would have to be replaced or additional scannable components added.

A more recent example of the second DFT rule is the long battle between ATPG and logic BIST. Each solution offered its own advantages and disadvantages. In particular, logic BIST had the significant advantages of requiring no test generation time and no pattern storage on the tester. However, for logic BIST to work, the design could not have any X-states, and you typically had to add a large number of test points to ensure high fault coverage. These requirements resulted in large area overheads and a possible impact on functional performance. For these reasons, logic BIST was long relegated to a niche set of designs.
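The following sketch illustrates, in a deliberately simplified form, why logic BIST needs no stored patterns and why X-states are a problem. It assumes a 16-bit LFSR as the on-chip pattern generator and models the MISR as the same shift register with the response word XORed in each cycle. The `toy_logic` function, the seed, and the pattern count are hypothetical stand-ins, not taken from any real design or tool.

```python
MASK = 0xFFFF  # 16-bit registers throughout


def lfsr_step(state):
    """Advance a 16-bit Fibonacci LFSR (taps 16, 14, 13, 11) by one cycle."""
    bit = (state ^ (state >> 2) ^ (state >> 3) ^ (state >> 5)) & 1
    return (state >> 1) | (bit << 15)


def generate_patterns(seed, count):
    """Pseudo-random stimuli produced on chip; nothing is stored on a tester."""
    patterns, state = [], seed
    for _ in range(count):
        patterns.append(state)
        state = lfsr_step(state)
    return patterns


def misr_signature(responses, seed=0):
    """Compact all responses into one 16-bit signature (simplified MISR)."""
    signature = seed
    for response in responses:
        signature = lfsr_step(signature) ^ (response & MASK)
    return signature


def toy_logic(pattern):
    """Hypothetical circuit under test; stands in for the real logic."""
    return ((pattern & (pattern >> 3)) ^ (pattern >> 7)) & MASK


stimuli = generate_patterns(seed=0xACE1, count=256)
good_responses = [toy_logic(p) for p in stimuli]
golden = misr_signature(good_responses)

# A defect that flips one response bit changes the final signature.
faulty_responses = list(good_responses)
faulty_responses[100] ^= 0x0004
faulty_signature = misr_signature(faulty_responses)

# An X-state is a bit whose value is unknown. Either value is legal, so a
# fault-free chip can produce two different signatures: no single golden
# signature exists to compare against.
x_as_0 = list(good_responses)
x_as_0[100] &= ~0x0004
x_as_1 = list(good_responses)
x_as_1[100] |= 0x0004
possible_signatures = {misr_signature(x_as_0), misr_signature(x_as_1)}

print(f"golden signature:  {golden:#06x}")
print(f"faulty signature:  {faulty_signature:#06x}")
print(f"signatures with X: {sorted(hex(s) for s in possible_signatures)}")
```

Because the compaction is linear, a single unknown bit is enough to make the signature unpredictable, which is why X-sources have to be eliminated or blocked before the responses reach the MISR.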

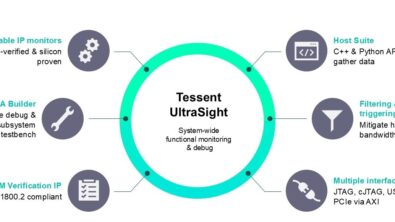

This all started changing about a year ago with the explosive growth of automotive designs. The reliability-driven in-system test requirements specified within the automotive ISO 26262 standard are driving rapid and widespread adoption of logic BIST. Although the constraints of using logic BIST have not substantially changed, the automotive requirements have changed the playing field and made this DFT solution a must-have capability.

As Alphonse Karr famously wrote in 1849, “The more things change, the more they stay the same.” Like most technologies, DFT continues to evolve. Choosing which DFT solutions to use will, however, always require careful consideration.

Author

Stephen Pateras is product marketing director for Mentor Graphics silicon test products.

This article was originally published on www.edn.com

Comments

Great observation, Steve. My only concern is that your discussion covers component-level test and testability. Since you mention the needs of the automobile industry, we need to widen your two DFT-related rules to boards and systems. At the IC level the repair is straightforward: if you find a chip to be bad, you replace it. For boards and systems, diagnostic resolution also enters the picture, and isolating the root cause of the problem is the greater challenge. Yet, while much has been devoted to IC-level DFT and BIST, at the board and system levels it is still difficult to convince designers (and, even more so, their managers) to invest in boundary-scan devices. Fortunately, with IC vendors making many (if not most) chips with boundary scan, we often get DFT without asking for it. Despite this, our effort at DFT is still an uphill battle, as you know, since you and I have been fighting it together for decades.