Infinite problems, finite solutions: Adjoint optimization at full speed

Achilles and the Tortoise – a timeless paradox

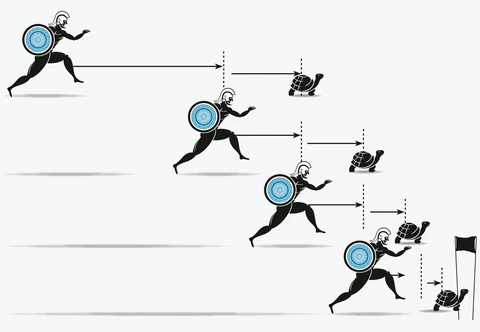

Long before adjoint optimization was a thing… Achilles and the Tortoise stand on a dusty runway under the hot sun, locked in a curious footrace towards a distant flag waving in the breeze. As they debate their purpose and what lies ahead, Zeno, the very creator of their fate, steps forward. With a knowing smile, he begins to describe how he envisions the racecourse.

Zeno: Since the Tortoise is slower, he gets a head start of ten rods. The race begins. In a few bounds, Achilles reaches the spot where the Tortoise started.

Achilles: Hah!

Zeno: Now the Tortoise is just a rod ahead. Achilles catches up again.

Achilles: Ho ho!

Zeno: But in that instant, the Tortoise had advanced slightly. Achilles covers that too.

Achilles: Hee hee hee!

Zeno: Yet the Tortoise inches forward once more. This game must be played infinitely, meaning Achilles can never catch the Tortoise.

Tortoise: Heh heh heh heh!

From: Zeno, Achilles and the Tortoise (almost) in Gödel, Escher, Bach – Douglas R. Hofstadter

During my last holiday break, I was reading this lively exchange between the three characters, and my mind immediately went to the adjoint optimization I was trying to perform. The solver, like Achilles chasing the Tortoise, was stuck in an endless loop: stalling residuals, slow progress, and the promise of convergence just out of reach. The process felt like it could go on forever, each step bringing the solver only marginally closer, as if convergence were always just beyond the horizon.

But what if Achilles didn’t have to take every step to reach the flag? What if, instead of inching forward, he could leap over the inefficiencies of thought, collapsing the infinite into the finite? Can we break the infinite loop?

Chasing convergence

The breakthrough for adjoint optimization

Yes, we can!

Driven by our vision to deliver efficient and reliable solvers that push the boundaries of flow simulation, we have tackled some of the challenges limiting adjoint workflow performance, leaping over the inefficiencies of convergence. Simcenter STAR-CCM+ 2502 introduces significant algorithmic improvements to the second-order adjoint solver, enabling it to handle complex flows with much greater robustness.

A small detour for Achilles: adjoint and adjoint optimization explained simply

To understand how adjoint-based optimization works, imagine you would like to become a Six Star Medal winner, i.e. successfully finish all six of the world's major marathons.

In a nutshell, adjoint optimization for CFD (and six-star medaling) is an algorithm that works like this:

- For a given object, we simulate the internal or external flow using CFD.

(You run any marathon.)

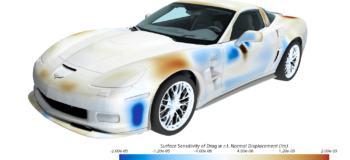

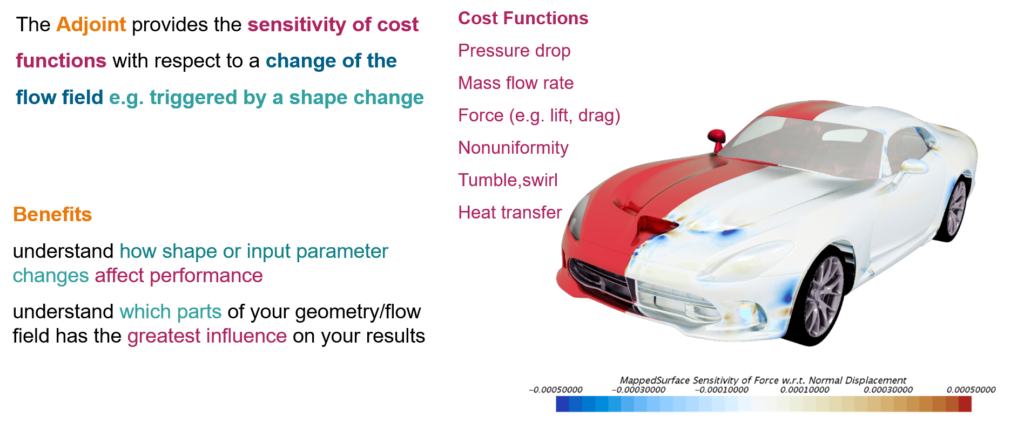

- Then we compute the adjoint: a mathematical quantity that tells us how strongly each local surface area influences the cost function, e.g. lift or drag, pressure loss or flow rate. This requires a deeply converged solution with really low residuals, which is at the heart of what this blog is all about.

(For the marathon the adjoint goes like this:

- Next comes the actual geometric optimization step: we let the CFD tool and mesher morph the areas that have the largest impact on the cost function, moving surfaces of the geometry inward or outward in accordance with the adjoint sensitivities.

(You start training the parts of your body that showed the biggest impact on your run time, identified as the parts that hurt the most. Yes, pain can be a good teacher!)

- In the simulation, we now run the CFD again with the newly morphed, slightly modified design. So you are back at step 1, but with an updated domain. This time, the cost function, when reassessed, should show an improved value.

(You run the next marathon after training the most relevant parts of your body. This time your finishing time should have improved.)

- We repeat steps 1 to 4 in a loop until the geometric limits of morphing are reached and/or the cost function no longer changes significantly.
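The loop above can be sketched on a toy problem. This is purely illustrative and assumes a tiny linear "flow" model A(x) u = b in place of a CFD solver; the matrices, the names `primal`, `adjoint_sensitivity` and `optimize`, and the per-cycle cap `max_disp` (a stand-in for a morphing limit) are all hypothetical, not Simcenter STAR-CCM+ functionality. What it does show is the key property of the adjoint: one extra linear solve with the transposed operator yields the sensitivity of the cost J with respect to every design variable at once.

```python
import numpy as np

# Hypothetical toy problem standing in for the CFD solve (not STAR-CCM+):
# the "flow state" u solves A(x) u = b, and the design variables x
# (think: local surface displacements) enter on the diagonal of A.
K = np.array([[4.0, -1.0, 0.0],
              [-1.0, 4.0, -1.0],
              [0.0, -1.0, 4.0]])
b = np.array([1.0, 2.0, 1.0])
c = np.array([1.0, 1.0, 1.0])  # cost J(u) = c @ u, a stand-in for drag or pressure loss


def primal(x):
    """Steps 1 and 4: solve the state equation A(x) u = b."""
    return np.linalg.solve(K + np.diag(x), b)


def cost(u):
    return float(c @ u)


def adjoint_sensitivity(x, u):
    """Step 2: one adjoint solve A(x)^T lam = dJ/du gives dJ/dx for all x at once.
    Here dA/dx_i = e_i e_i^T, so dJ/dx_i = -lam^T (dA/dx_i) u = -lam_i * u_i."""
    lam = np.linalg.solve((K + np.diag(x)).T, c)
    return -lam * u


def optimize(x0, step=1.0, max_disp=0.1, lower=0.0, upper=2.0, tol=1e-8, max_cycles=200):
    """Minimize J by repeating steps 1-4 until J stalls or the bounds stop progress."""
    x = np.asarray(x0, dtype=float).copy()
    history = []
    for _ in range(max_cycles):
        u = primal(x)                                    # steps 1/4: primal solve
        history.append(cost(u))
        if len(history) > 1 and abs(history[-2] - history[-1]) < tol:
            break                                        # cost no longer changes
        grad = adjoint_sensitivity(x, u)                 # step 2: sensitivities
        dx = np.clip(-step * grad, -max_disp, max_disp)  # step 3: capped "morph"
        x = np.clip(x + dx, lower, upper)                # geometric limits
    return x, history


x_opt, history = optimize([0.0, 0.0, 0.0])
print(x_opt, history[0], history[-1])
```

Comparing `adjoint_sensitivity` against central finite differences is a quick way to check a sketch like this; in a real CFD setting each finite difference would cost a full flow solve per design variable, which is exactly what the adjoint avoids.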

Achieving convergence with the second-order adjoint has always been a tough challenge, particularly for simulations involving intricate, turbulent flow dynamics. Slow progress or even stalling residuals were common hurdles, often demanding user intervention to fine-tune setups and stretching optimization studies to the edge of feasibility.

The solver now overcomes these obstacles with ease. Cases that previously struggled to converge now reach results faster and more reliably, even in the most demanding scenarios, and all without user intervention. The example below features the computation of adjoint surface sensitivities of the total pressure ratio (with respect to normal displacement) for the NASA High-Efficiency Centrifugal Compressor (HECC).

The improvement is clearly visible in the residuals plot. In earlier versions of the code, achieving second-order convergence was a struggle, with residuals stalling and progress halting altogether. In contrast, the new solver delivers smooth and efficient convergence, dropping residuals by 10 orders of magnitude in just 80 iterations… and breaking the infinite loop!
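As a quick sanity check on those numbers, the average (geometric-mean) reduction of the residual r per iteration follows directly from the quoted 10 orders of magnitude over 80 iterations; the actual per-iteration behavior in the plot will of course vary around this mean:

$$
\bar{\rho} = \left(\frac{r_{80}}{r_0}\right)^{1/80} = \left(10^{-10}\right)^{1/80} = 10^{-0.125} \approx 0.75
$$

In other words, each iteration removes roughly a quarter of the remaining residual on average, whereas a stalled solver has $\bar{\rho} \approx 1$.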

Speed and efficiency

This leap in convergence rate brings game-changing value to users: faster turnaround times.

Since most adjoint simulations rely on convergence-based stopping criteria, the ability to reach these thresholds more quickly significantly shortens the overall computing time. What once needed hours of computation can now be achieved in a fraction of the time, enabling more optimization iterations and quicker delivery of results.

As the examples below show, the improvement can deliver speedup factors of up to 3x, depending on the complexity of the problem. For particularly challenging scenarios that previously did not converge at all, the speedup is (theoretically) infinite 😊
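Why a convergence-based stopping criterion turns a better convergence rate into a shorter run, and why a stalled solver gives an "infinite" speedup, can be shown with a small sketch. It assumes, for simplicity, a constant per-iteration residual reduction factor `rho` and an assumed per-iteration cost ratio; the function names and the example `rho` values are hypothetical and not taken from the cases in this post.

```python
import math


def iterations_to_target(rho, orders):
    """Iterations needed to drop a residual by `orders` decades when each
    iteration multiplies it by a constant factor rho (simplifying assumption)."""
    if rho <= 0.0:
        raise ValueError("rho must be positive")
    if rho >= 1.0:
        return math.inf  # stalled residuals: the stopping criterion is never met
    return math.ceil(orders / -math.log10(rho))


def speedup(rho_old, rho_new, orders, cost_ratio=1.0):
    """Wall-clock speedup of the new solver; cost_ratio = (new cost per
    iteration) / (old cost per iteration), assumed 1.0 by default."""
    n_old = iterations_to_target(rho_old, orders)
    n_new = iterations_to_target(rho_new, orders)
    return n_old / (n_new * cost_ratio)


# Hypothetical rates: a slow-but-converging old solver vs. rho = 0.75,
# and a stalled old solver (rho = 1.0) for the "infinite" case.
print(speedup(0.9, 0.75, orders=10))
print(speedup(1.0, 0.75, orders=10))
```

Dividing by a finite iteration count is what makes the stalled case evaluate to `inf`: no finite number of old-solver iterations ever meets the stopping criterion.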

Preserving accuracy

While the concept of an “infinite speedup” is, of course, more thought-provoking than practical, it highlights a crucial added value: the ability to achieve robust convergence without sacrificing precision. In the past, the first-order adjoint was often the fallback when second-order methods struggled to converge. Although reliable, this came at a cost: reduced accuracy in the sensitivity calculations, ultimately leading to suboptimal optimization outcomes.
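What "first-order" versus "second-order" accuracy means can be illustrated, as a generic analogy only, with upwind face-value reconstruction on a smooth 1D profile: the first-order error shrinks proportionally to the cell size h, the second-order error proportionally to h². This is a textbook scheme comparison, not the discretization used inside Simcenter STAR-CCM+; the function names, the profile `math.sin`, and the assumed left-to-right flow direction are all hypothetical choices for the sketch.

```python
import math


def face_value_first_order(phi, x_i, h):
    """First-order upwind: the face value is simply the upstream cell value."""
    return phi(x_i)


def face_value_second_order(phi, x_i, h):
    """Second-order (linear) upwind: extrapolate from the two upstream cells."""
    return 1.5 * phi(x_i) - 0.5 * phi(x_i - h)


def face_errors(phi, x_i, h):
    """Errors at the face between cells i and i+1 (flow assumed left to right)."""
    exact = phi(x_i + 0.5 * h)
    e1 = abs(exact - face_value_first_order(phi, x_i, h))
    e2 = abs(exact - face_value_second_order(phi, x_i, h))
    return e1, e2


for h in (0.1, 0.05, 0.025):
    e1, e2 = face_errors(math.sin, 0.3, h)
    print(f"h={h:<6} first-order error={e1:.2e}  second-order error={e2:.2e}")
```

Halving h roughly halves the first-order error but quarters the second-order one, which is why falling back to first order on a given mesh costs accuracy in whatever is computed from those values, sensitivities included.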

The new advancements remove this compromise. By enhancing the performance of the second-order adjoint solver, users can now achieve convergence with both reliability and precision. Sensitivity analyses keep their full second-order accuracy, delivering results that are not only robust but also high-fidelity. This ensures that even the most challenging flow scenarios, where reliability and accuracy are paramount, can be tackled effectively, unlocking the true potential of adjoint-based optimization. This is shown in the plots below, which compare the first- and second-order adjoint residual behavior for the Eclipse business jet and the NASA HECC test cases.

The real-world impact – from philosophy to engineering with adjoint optimization

To illustrate the benefits of these improvements for real-world engineering applications, an adjoint optimization study was performed on the aerodynamics of a business jet in cruise conditions.

The goal of the adjoint optimization is to increase lift by reshaping the jet’s horizontal stabilizers. In a shape optimization workflow, the surface morphing is driven by the adjoint sensitivities and constrained to a maximum displacement of 1 mm per step. This keeps the modifications realistic while preserving the aerodynamic integrity of the entire airplane. The process consists of four adjoint optimization steps, progressively morphing the surface mesh of the stabilizers towards the final shape.
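One simple way to turn normal-displacement sensitivities into a capped surface deformation is to move each vertex along its unit normal in proportion to its sensitivity, scaled so that the largest move equals the cap (here 1 mm). This is a minimal sketch under stated assumptions, not the STAR-CCM+ morphing algorithm: the function name `morph_displacements`, the proportional scaling, and the sign convention (positive sensitivity means an outward move increases the cost, e.g. lift, which we want to maximize) are hypothetical.

```python
import numpy as np


def morph_displacements(normals, sensitivity, max_disp=1e-3):
    """Map dJ/d(normal displacement) at each surface vertex to a displacement
    vector along the unit normal, scaled so the largest move is max_disp (m).
    Assumed sign convention: step uphill to maximize J (e.g. lift)."""
    n = normals / np.linalg.norm(normals, axis=1, keepdims=True)  # unit normals
    s_max = np.max(np.abs(sensitivity))
    if s_max == 0.0:
        return np.zeros_like(n)  # no sensitivity, no morphing
    alpha = max_disp / s_max     # one global scale factor honours the 1 mm cap
    return (alpha * sensitivity)[:, None] * n


# Three hypothetical vertices with non-normalized normals and sensitivities.
normals = np.array([[0.0, 0.0, 2.0], [1.0, 0.0, 0.0], [0.0, 3.0, 0.0]])
sens = np.array([0.5, -2.0, 1.0])
print(morph_displacements(normals, sens))
```

A single global scale factor preserves the shape of the sensitivity field while enforcing the per-step limit; in practice the surface displacement would also need to be propagated smoothly into the volume mesh, which this sketch does not attempt.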

The results highlight the power of this enhancement for adjoint optimization: a 5% increase in lift, with a 30% saving in computing time for the whole adjoint optimization process (4 cycles of volume meshing, primal and adjoint solving), compared to earlier versions of Simcenter STAR-CCM+.
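A 30% saving corresponds to an overall speedup of about 1.43x. Assuming only the adjoint solve became faster (meshing and the primal solve unchanged), Amdahl's law relates this overall figure to the fraction f of the original runtime spent in the adjoint solve and the adjoint speedup s, which explains why the whole-process gain is smaller than the per-solve factors quoted earlier:

$$
S_{\text{total}} = \frac{1}{1 - 0.30} \approx 1.43, \qquad S_{\text{total}} = \frac{1}{(1 - f) + f/s}
$$

Purely as an illustration (the actual time split for this case is not reported): s = 3 and f = 0.45 give $1/(0.55 + 0.15) = 1/0.70 \approx 1.43$.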

Breaking free from the infinite adjoint residuals loop

Just as Achilles breaks free from the infinite loop to win the race against the Tortoise, the adjoint solver no longer struggles with endless stalling residuals. The latest advancements in Simcenter STAR-CCM+ 2502 bring speed, robustness, and accuracy together in the adjoint solver.

Whether it’s shaving hours off an adjoint optimization loop or ensuring the highest fidelity in sensitivity results, we are committed to delivering products that ensure a smooth and efficient path to engineering success.