Beyond traditional engineering simulations: How Machine Learning is pioneering change in engineering

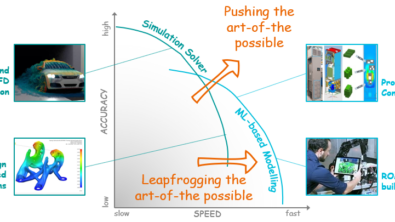

In our previous post on Speedy Simulations, we underscored the transformative effect of algorithmic advances on Computer Aided Engineering (CAE) tools. Research and innovation have continued to deliver rapid growth in predictive capability, particularly in Machine Learning (ML) over the last 2-3 years. ML technologies are not just incremental enhancements; they hold the promise to revolutionize our understanding and application of simulation in engineering. This ML revolution comes at a good time: it helps enable ambitious industry targets to rapidly shorten time to market and to significantly improve sustainability.

Today, generative Artificial Intelligence (AI) is making waves across numerous sectors, influencing the way we create pictures (cf. Figure 1), draft blog posts, or access complex information, among many other things. The underlying ML models can process very different data structures: images, as pixels and channels, with convolutional neural networks; natural language, as sequences of words or phrases, with language models; or networks of interconnected nodes, as graphs, with graph neural networks, to name a few examples. Without a doubt, these innovations (and others) will cross-pollinate into FEA/CFD applications, where ML models will be adapted to our typical data structures.

Today, the synergy between ML and modern design processes is still in its embryonic stages, but early results are promising. As technology continues to evolve and we discover innovative ways to harness data, the future of design, powered by ML, looks bright and boundless. While we narrow our focus to the simulation itself in this blog post, it’s worth noting that the broader area of generative engineering offers its own plethora of innovations, which will be covered in future blog posts. For example, Large Language Models (LLMs) in Computer Aided Engineering tools will reimagine the user experience.

Industrial Adoption and Challenges of ML in CAE Today

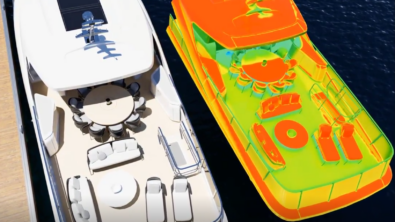

In CAE applications, ML is today most often used for classification and regression problems, which involve predicting a target variable based on one or more input features. Models take past data, learn patterns in this data, and predict future outcomes. This is particularly attractive when one has collected a large set of simulation data from historic designs, or when the simulation models do not require heavy computations. The adaptability and precision of this approach have found applications across a spectrum of industries, from combustion engines (cf. Figure 2) to simulation-supported operations. Corresponding solutions can be found in today's CAE tools, e.g., Simcenter ROM builder.
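As a minimal sketch of such a regression workflow, the snippet below fits a power-law surrogate to a handful of previously "simulated" designs and then predicts a new design without calling the solver again. Everything here is an illustrative assumption rather than part of any Simcenter tool: `run_simulation` is a hypothetical stand-in for an expensive solver (it simply evaluates the textbook cantilever tip deflection), and the material and load values are made up.

```python
import math

def run_simulation(length_m, force_n=1000.0, e_pa=210e9, i_m4=8.0e-6):
    """Hypothetical stand-in for an expensive solver: tip deflection of a
    cantilever beam under an end load, delta = F * L^3 / (3 * E * I)."""
    return force_n * length_m**3 / (3.0 * e_pa * i_m4)

def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

# "Historic" designs: beam lengths that were already simulated.
train_lengths = [0.5, 1.0, 1.5, 2.0, 2.5]
train_deflections = [run_simulation(length) for length in train_lengths]

# Regress log(deflection) on log(length), i.e. fit a power law.
intercept, slope = fit_line([math.log(length) for length in train_lengths],
                            [math.log(d) for d in train_deflections])

def surrogate(length_m):
    """Cheap prediction for a new design, without calling the solver."""
    return math.exp(intercept + slope * math.log(length_m))

new_length = 1.8
prediction = surrogate(new_length)
reference = run_simulation(new_length)
relative_error = abs(prediction - reference) / reference
```

Because the toy data is noise-free and follows an exact power law, the fitted exponent `slope` recovers the cubic dependence on length almost perfectly. Real simulation data is rarely this well behaved, which is where the data requirements discussed next come into play.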

However, the broader the scope of predictions one wishes to explore, the more data is needed. This is rooted in the basic challenge of building accurate ML models that generalize well, i.e., that avoid overfitting. To illustrate this, let’s look at the Midjourney system, which refined its abilities using over 100 million images, a staggering figure that emphasizes the hunger these ML models have for data. This hunger for big data is one of the primary reasons why the footprint of ML in engineering remains comparatively small. Engineering data, often proprietary in nature, is seldom shared beyond organizational boundaries. This poses a challenge for most ML models, which require vast datasets to learn from. Accessing such extensive data, e.g., to realize foundational engineering models, seems almost impossible today. Novel technologies are required to overcome this challenge.

The Opportunity: Merging ML with Engineering Simulation

Merging ML with engineering simulations (and vice versa) offers a unique opportunity: to avoid the enormous data hunger of most ML technologies. The ambition in doing so is nothing less than to revolutionize our understanding and application of ML in engineering, with the goal of repeating the success seen in other fields. Compared to the broader domain of ML, however, this is a relatively young field that is still quite research-centric. In the following, let us review the most prominent emerging concepts that drive the fusion of ML and engineering simulations and pioneer change in CAE tooling.

Infusing Knowledge into ML Models

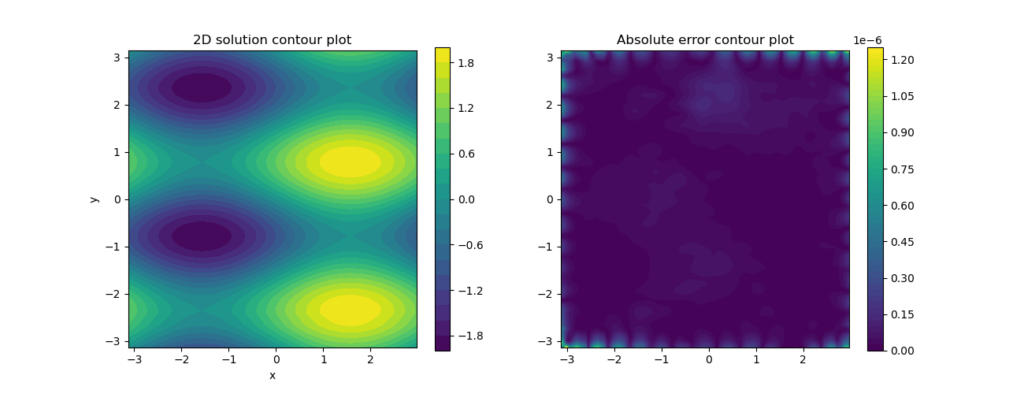

A first attempt to overcome data constraints was the inception of Physics-informed Neural Networks, put forward by George Karniadakis in 2017. This concept builds upon the preliminary ideas presented by Isaac Lagaris in 1998, contextualizing them within the capabilities of modern ML frameworks. At its core, the idea advocates integrating physics knowledge in the form of equations during the learning phase, such as the Navier-Stokes equations for fluid dynamics predictions. Essentially, this physics insight serves as a regularizer (an ingredient used in most ML techniques to improve training), facilitating one-shot learning that mirrors the functionality of traditional simulation solvers.
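To make the "physics as regularizer" idea concrete, here is a deliberately simplified sketch. It is not a neural network: a degree-4 polynomial stands in for the network so that the combined loss can be minimized by plain linear least squares, and the toy physics is the ODE u'(t) + u(t) = 0 instead of the Navier-Stokes equations. The two data points, the collocation grid, and `PHYSICS_WEIGHT` are all assumptions chosen for illustration.

```python
import math

DEGREE = 4              # polynomial model u(t) = sum_k c[k] * t**k
PHYSICS_WEIGHT = 1.0    # weight of the physics term in the loss (assumed)

def basis(t):
    """Values of t**k for k = 0..DEGREE."""
    return [t**k for k in range(DEGREE + 1)]

def residual_basis(t):
    """Coefficients of the ODE residual u'(t) + u(t) with respect to c."""
    return [(k * t**(k - 1) if k > 0 else 0.0) + t**k for k in range(DEGREE + 1)]

def solve_least_squares(rows, rhs):
    """Minimize sum_i (rows[i] . c - rhs[i])**2 via the normal equations."""
    n = len(rows[0])
    # Augmented normal-equation matrix [A^T A | A^T b].
    m = [[sum(r[i] * r[j] for r in rows) for j in range(n)]
         + [sum(r[i] * b for r, b in zip(rows, rhs))] for i in range(n)]
    for col in range(n):  # Gaussian elimination with partial pivoting
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    c = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        c[i] = (m[i][n] - sum(m[i][j] * c[j] for j in range(i + 1, n))) / m[i][i]
    return c

# Scarce "measurements": only two samples of the true solution exp(-t).
data_t = [0.0, 1.0]
data_u = [math.exp(-t) for t in data_t]

# Collocation points where the physics residual u' + u = 0 is penalized.
colloc_t = [i / 10 for i in range(11)]

# Loss = data misfit + PHYSICS_WEIGHT * squared ODE residual, stacked as rows.
w = math.sqrt(PHYSICS_WEIGHT)
rows = [basis(t) for t in data_t] + [[w * v for v in residual_basis(t)] for t in colloc_t]
rhs = data_u + [0.0] * len(colloc_t)
coeffs = solve_least_squares(rows, rhs)

def model(t):
    return sum(c * v for c, v in zip(coeffs, basis(t)))

max_error = max(abs(model(i / 100) - math.exp(-i / 100)) for i in range(101))
```

Two data points alone cannot pin down five polynomial coefficients; it is the physics residual that makes the fit well posed. In an actual physics-informed neural network the same kind of residual term is added to the training loss and minimized with gradient-based optimizers.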

Implementing such solutions might be straightforward; however, their efficacy in comparison to conventional simulation solvers remains an area of contention (keep in mind that today’s solvers are highly optimized). Understanding this in granular detail would entail diving deep into mathematical intricacies. But with the overarching trend to harness not just physics knowledge but also mathematical and algorithmic insights from conventional simulations, the picture might change. For example, recent approaches suggest enhancing ML models and architectures with such insights, e.g., by using domain decomposition. Similarly, cutting-edge methodologies like the Random Feature Method proposed by Weinan E present novel ways to enrich physics-informed ML technologies with more classical solver technologies (cf. Figure 3).

While this remains an area of contention today, it is still too early to conclude whether physics-informed ML methods offer a significant benefit in computational efficiency. Supported by our own research, we believe that future innovations in infusing engineering knowledge into ML models will have a significant impact.

Augmenting Computer-Aided Engineering with ML

Instead of enriching ML solutions with additional physics or engineering knowledge as sketched above, another way to combine the two is to augment CAE tools with ML.

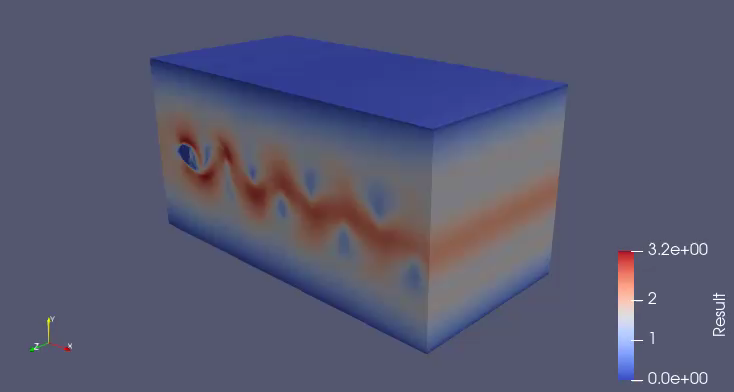

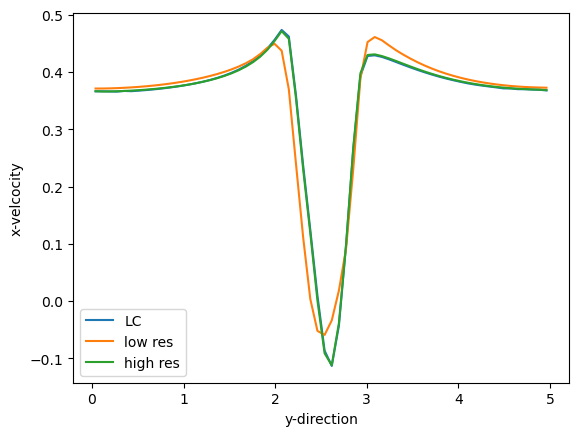

Although it might seem avant-garde, this approach has historical precedent. Consider the turbulence models used in RANS (Reynolds-averaged Navier-Stokes) simulations. Due to the computational demands of resolving turbulent structures, heuristic models, based on human ingenuity and informed by experimental data, were introduced decades ago. They enabled computational fluid dynamics to predict turbulent flows without having to resolve the turbulent structures in direct simulations, which require extremely long computational times. Now, with the capabilities of ML, similar paradigms are being explored. One of the pioneering papers is Machine Learning Accelerated Computational Fluid Dynamics, which is very much inspired by turbulence modelling concepts but allows improved predictions beyond turbulence modelling. Introducing a learned correction into a low-resolution model raises its accuracy, so the same accuracy can be reached faster, since low-resolution models are cheaper to compute (cf. Figure 4).
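The learned-correction idea can be illustrated on a toy problem. The sketch below is an assumption-laden stand-in, not the method of the cited paper: the "flow" is the scalar decay equation du/dt = -K u, the "low-resolution model" is a single large explicit Euler step, and the "learned correction" is one scalar `theta` fitted by least squares to a high-resolution reference trajectory (the paper uses neural networks inside a CFD solver).

```python
K = 2.0            # decay rate in du/dt = -K * u (toy problem, assumed)
DT_COARSE = 0.1    # step size of the low-resolution model
SUBSTEPS = 100     # fine steps per coarse step in the reference solver

def fine_step(u):
    """Reference: many small explicit Euler steps spanning one coarse step."""
    dt = DT_COARSE / SUBSTEPS
    for _ in range(SUBSTEPS):
        u = u + dt * (-K * u)
    return u

def coarse_step(u, theta=0.0):
    """One large Euler step plus a learned linear correction theta * u."""
    return u + DT_COARSE * (-K * u) + theta * u

def rollout(step, u0, n_steps):
    """Apply a one-step map repeatedly and return the whole trajectory."""
    traj = [u0]
    for _ in range(n_steps):
        traj.append(step(traj[-1]))
    return traj

# Training data: one high-resolution trajectory sampled at the coarse times.
train = rollout(fine_step, 1.0, 10)

# Fit theta by least squares on the one-step defect of the coarse model.
num = sum(u * (u_next - coarse_step(u)) for u, u_next in zip(train, train[1:]))
den = sum(u * u for u in train[:-1])
theta = num / den

# Evaluate on an initial condition that was not in the training data.
reference = rollout(fine_step, 3.0, 10)[-1]
coarse_only = rollout(coarse_step, 3.0, 10)[-1]
corrected = rollout(lambda u: coarse_step(u, theta), 3.0, 10)[-1]
coarse_error = abs(coarse_only - reference)
corrected_error = abs(corrected - reference)
```

The corrected coarse model takes 10 steps where the reference takes 1000, yet it matches the reference far more closely than the uncorrected coarse model. The problem is linear, so a single scalar suffices here; for nonlinear flows the correction has to be a much richer function of the local state.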

However, these concepts are not without controversy, as evidenced by discussions like An Old-fashioned Framework for ML in Turbulence Modeling by Philippe Spalart. But despite the controversies, there is palpable momentum towards the integration of these ML-driven solutions into mainstream simulation tools.

And it does not stop on the modelling side. The very same concepts can be used to augment and speed up the underlying mathematical solvers, as we have shown in a recent study, A Neural Network Multigrid Solver for the Navier-Stokes equations (cf. Figure 5).

Differentiable Solvers: Bridging ML and Simulation

Beyond the two opposing views on how to fuse ML and simulation technologies sketched above, a revolutionary facet in this journey is the concept of differentiable solvers, sometimes referred to as Solver-in-the-Loop training or Neural Ordinary Differential Equations. The key idea of these approaches is to include the simulation solver explicitly in the training loop rather than just considering data produced by the simulation. That is, the loss function of the ML model explicitly includes an implementation of a solver. Although rooted in longstanding mathematical principles of constrained optimization (the simulation solver being the “constraint”), the approach has been groundbreaking. It can greatly improve training, enhancing robustness and data efficiency. Our recent work, Operator inference with roll outs for learning reduced models from scarce and low-quality data, is a testament to its potential in the realm of reduced order modeling. Some commercial platforms, like Simcenter STAR-CCM+, have already integrated differentiability (via adjoint capabilities), signaling the impending widespread adoption of such techniques.
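A minimal sketch of what "the solver inside the loss function" can look like is given below. It makes several simplifying assumptions: the solver is an explicit Euler scheme for du/dt = -k u, the only trainable quantity is the scalar `k`, the three observations are synthetic, and the derivative of the solver output with respect to `k` is propagated by hand through every time step. In practice that derivative would typically come from automatic differentiation or an adjoint solver.

```python
DT = 0.1
N_STEPS = 20
OBSERVED_STEPS = (5, 10, 20)   # scarce data: only three snapshots
TRUE_K = 1.5                   # used only to generate synthetic observations

def solve(k, u0=1.0):
    """Explicit Euler solver for du/dt = -k*u that also propagates du/dk."""
    u, du_dk = u0, 0.0
    states, sensitivities = [u], [du_dk]
    for _ in range(N_STEPS):
        # Derivative of the update u_next = u + DT * (-k * u) with respect to k.
        du_dk = du_dk * (1.0 - k * DT) - DT * u
        u = u + DT * (-k * u)
        states.append(u)
        sensitivities.append(du_dk)
    return states, sensitivities

observations = {n: solve(TRUE_K)[0][n] for n in OBSERVED_STEPS}

def loss_and_grad(k):
    """Squared mismatch of the full solver rollout against the observations."""
    states, sens = solve(k)
    loss = sum((states[n] - obs) ** 2 for n, obs in observations.items())
    grad = sum(2.0 * (states[n] - obs) * sens[n] for n, obs in observations.items())
    return loss, grad

k = 0.5                        # initial guess for the unknown parameter
LEARNING_RATE = 0.5
for _ in range(500):
    loss, grad = loss_and_grad(k)
    k -= LEARNING_RATE * grad  # gradient descent through the solver

final_loss, _ = loss_and_grad(k)
```

Because every evaluation of the loss runs the solver over the whole time horizon, three snapshots are enough to recover the parameter: the solver itself supplies the structure that would otherwise have to be learned from data.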

Concluding Thoughts

This post merely skims the surface of the recent advancements championed by the ML community as well as by innovation teams at Simcenter. The interplay between ML and CAE is unveiling a multitude of possibilities. While many of these innovations await rigorous validation, their emerging influence on products like Simcenter’s ROM builder is undeniable. The fusion of ML and simulation is not just a transient phase; it’s a journey that promises a future of accelerated discovery and enhanced precision. Keep an eye out for our upcoming posts on how ML will reshape modeling in the near future.

P.S. Embracing ML isn’t confined to our tools. Our organizational transformation is a testament to our commitment to ML, evidenced by this article crafted with the assistance of ChatGPT.

Disclaimer

This is a research exploration by the Simcenter Technology Innovation team. Our mission: to explore new technologies, to seek out new applications for simulation, and boldly demonstrate the art of the possible where no one has gone before. Therefore, this blog represents only potential product innovations and does not constitute a commitment for delivery. Questions? Contact us at Simcenter_ti.sisw@siemens.com.