HLS verifies artificial intelligence for ADAS in autonomous cars

Much of today's artificial intelligence (AI) relies on neural networks, and you may have come across terms such as CNNs, DCNs, GANs and SNNs and wondered what they mean. Fundamentally, these networks are sets of algorithms designed to recognize patterns. They help cluster and classify huge volumes of data.

Here are a few popular neural networks in the AI space; each has at least some relevance to work on ADAS and automated driving applications:

Recurrent Neural Networks (RNNs): Connections between nodes form a directed graph along a temporal sequence – for pattern recognition tasks such as handwriting or speech recognition

Deep Convolutional Networks (DCNs): Connectivity pattern analogous to that of neurons in the human brain, specifically inspired by the visual cortex – for pattern recognition in image, video and natural language processing

Generative Adversarial Networks (GANs): Two neural networks contesting with each other in a game-theoretic exercise – used, for example, to generate photorealistic images

Spiking Neural Networks (SNNs): Mimic natural neural networks by incorporating the concept of time along with neuronal and synaptic states – for information processing and modeling of the central nervous system

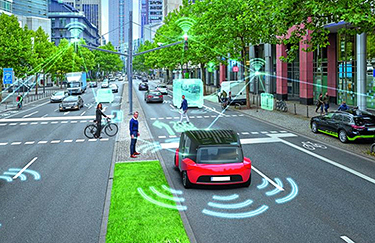

Beyond AI, driving without human intervention requires a sophisticated array of sensors, including LiDAR, radar and cameras, which together continuously generate a high volume of real-time data about the car's surroundings. Neural networks help the car extract meaningful information from this data and react in real time.

Implementing machine learning (ML) algorithms in hardware is challenging. Beyond delivering accurate results, an inference chip for autonomous vehicles must address the following challenges:

- Performance: A single high-definition camera can capture a 1920×1080 image at 60 frames per second, and a car can have 10 or more such cameras

- Power: AI inference can be a massively power-intensive operation, especially because of the high volume of accesses to off-chip memory

- Functional Safety: Safety is the overarching requirement, and detecting functional safety issues caused by hardware faults can itself consume significant compute resources

The biggest challenge in creating such a chip is the turnaround time of the traditional ASIC design flow. Implementing new ASIC hardware can take anywhere from several months to a year.

My colleague, David Fritz, talks through the basic steps in creating an inferencing engine in this interview with Semiconductor Engineering editor Ed Sperling.

Initially, an autonomous system architect or designer relies on tools such as TensorFlow, Caffe, MATLAB and Theano to capture, collect, categorize and verify data in a high-level, abstract environment. These high-level frameworks let the designer explore a multitude of parameters, analyze the results and select the optimal solution for the algorithm.

Once the algorithm is settled, the designer captures it in C++ or SystemC. The next step is to design the actual hardware block that implements the algorithm for autonomous applications. The most efficient way to do this is to use High-Level Synthesis (HLS) to generate RTL from the C++ or SystemC description.

HLS has in fact been around for decades, with various input languages used to specify a given problem at a high level, decoupled from clock-level timing. The basic idea of HLS, which has found new life with the rise of AI, is to separate functionality from implementation, so that a design can be retargeted and re-implemented at any time. Because it works at a higher level of abstraction, HLS accelerates algorithmic design and can require 50x less code than RTL. That means smaller design teams, shorter development time and faster verification.

The next step is verification, which in this flow includes formal property checking and linting to ensure that the source code is "clean" for both synthesis and simulation. Tools that measure code coverage, including line, branch and expression coverage, are also required. The goal is RTL that is correct by construction, with representation and simulation results that match precisely between the C++ algorithm and the synthesized RTL.

The Catapult® HLS Platform from Siemens, together with the PowerPro® solution, is the industry's leading HLS platform. Catapult empowers designers to use industry-standard ANSI C++ and SystemC to describe functional intent and to move up to a more productive abstraction level. The Catapult Platform combines high-level synthesis with PowerPro for measuring, exploring, analyzing and optimizing RTL power, along with a verification infrastructure for seamless verification of C++ and RTL. Indeed, HLS is ultimately part of a much larger verification story, one that requires simulation of various automated vehicle systems, from the chip level up through vehicle subsystems and even beyond the vehicle to simulated urban environments.

Our PAVE360 program, announced in May 2019, is one of our most prominent recent examples of this vision, extending digital twin simulation far beyond processors and allowing secure, multi-supplier collaboration in a way that's both unique in the market and essential in advancing AV technology. For more on PAVE360, see this TIRIAS Research whitepaper, written by Jim McGregor. (Jim and David also appeared as guests on this episode of Autonocast to talk about PAVE360; Catapult comes up briefly, too.)

And to learn more about HLS, please download our whitepaper High-Level Synthesis for Autonomous Drive. Find out why algorithm-intensive designs for autonomous vehicles are a perfect fit for HLS, and how the methodology has been successfully adopted by major semiconductor suppliers such as Bosch, STMicroelectronics and Chips&Media in the automotive space. Nothing abstract about names like that.

About the author

Andrew Macleod is the director of automotive marketing at Siemens, focusing on the Mentor product suite. He has more than 15 years of experience in the automotive software and semiconductor industry, with expertise in new product development and introduction, automotive integrated circuit product management and global strategy, including a focus on the Chinese auto industry. He earned a 1st class honors engineering degree from the University of Paisley in the UK and lives in Austin, Texas. Follow him on Twitter @AndyMacleod_MG.