Part 2: How Digital Twins Help Scale Up Industrial Robotics AI

Training a robotic task with Reinforcement Learning

Artificial Intelligence (AI) and Machine Learning (ML) are technologies that enable robots to perform many tasks they could not perform before. However, applying, validating and deploying these technologies in industrial robotic systems remains a major challenge. This is the second in a series of posts about how digital twins of factory equipment and products can make it much easier and faster to apply Artificial Intelligence and Machine Learning methods to industrial robotics applications. If you haven’t read Part 1, we recommend starting there before continuing with this post.

The promise of reinforcement learning for industrial robotics

Industrial robots deployed today across various industries mostly perform repetitive tasks, executing programs with a finite, predetermined set of logical conditions that are usually written by professional robot programmers. Overall task performance depends on the accuracy of the specific robot controller, and the robots’ ability to handle complex, unstructured environments is limited. Picking previously unseen objects and inserting novel parts in assembly tasks are examples of tasks in such environments.

Endowing robots with human-like abilities to perform motor skills smoothly and naturally is one of the important goals of robotics, with huge potential to boost industrial automation. A promising way to achieve this is to equip robots with the ability to learn new skills by themselves, as humans do. However, acquiring new motor skills is not simple. For robots, the continuous exploration space is large: a robot can be at any position at any given time and can interact with its environment in countless ways. As a result, learning requires large amounts of data and long training times.

Reinforcement learning (RL) holds promise for solving such challenges because it enables robots to learn behaviors through interaction with their surrounding environment and ideally generalize to new unseen scenarios.

What is Reinforcement Learning?

In the previous post, we mentioned a Machine Learning method called Supervised Learning, which is known to work very well for certain tasks such as detecting objects in images. However, when the environment is less structured or the task is very complex, preparing the training data can be too hard for a human, or the best strategy simply cannot be determined in advance.

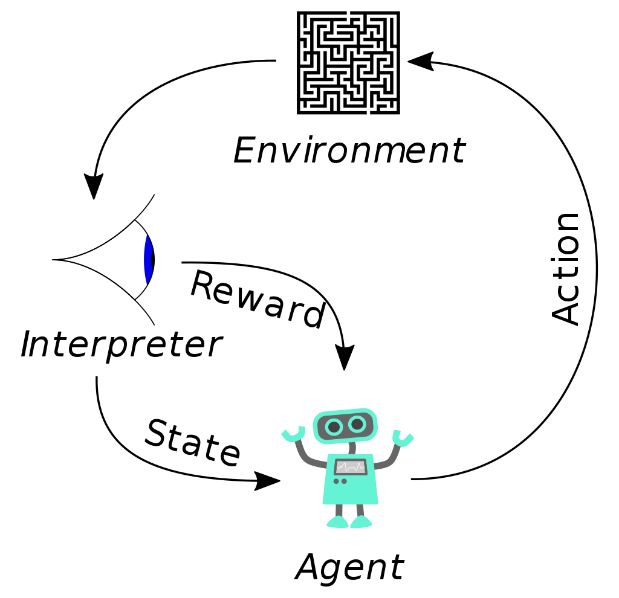

Reinforcement learning (RL) differs from supervised learning in that it does not require labeled input/output pairs. Instead, reinforcement learning is a process of learning by trial and error, analogous to the way babies learn. The robot (or, more generally, the agent) explores its environment, trying different actions that lead to different outcomes. The goal in RL is specified by a reward function, which rewards or penalizes the agent depending on how well it performs with respect to the desired goal.

source: https://en.wikipedia.org/wiki/Reinforcement_learning
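To make the trial-and-error loop concrete, here is a minimal sketch of tabular Q-learning, one classic RL algorithm chosen purely for illustration (it is not the method used in this series). The toy task is hypothetical: an agent in a five-cell corridor starts at the left end, earns a reward for reaching the right end, and pays a small penalty for every wasted move. Names such as `N_STATES`, `step` and `train` are our own.

```python
import random

N_STATES = 5          # cells 0..4 of a hypothetical corridor
ACTIONS = (-1, +1)    # move left or move right
GOAL = N_STATES - 1   # reaching the right end ends the episode


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    if next_state == GOAL:
        return next_state, 1.0, True     # positive reinforcement at the goal
    return next_state, -0.01, False      # small penalty for every other move


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # q[s][a] estimates the long-term reward of taking action a in state s
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon, otherwise exploit current estimates
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Temporal-difference update toward reward + discounted future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best-known action in every cell."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[s][i])] for s in range(N_STATES)]


q_table = train()
print(greedy_policy(q_table)[:GOAL])   # learned action in each non-goal cell
```

Nobody tells the agent which way to go; the preference for moving right emerges only from the rewards it collects while exploring.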

The challenges of scaling up reinforcement learning

Over the past few years, RL has become increasingly popular due to its success in addressing challenging sequential decision-making problems. Several of these achievements are due to the combination of RL with deep learning techniques. However, deep RL algorithms often require millions of attempts before learning to solve a task. If we tried to apply the same methods to train our robot in the real world, it would take an unrealistic amount of time, and likely cause damage to the robot, equipment and product. For example, Google has shown that deep RL can train a robot for bin picking simply through trial and error. However, several months of training on a robot farm where multiple robots attempted the task were required to achieve a desirable result.

Digital twins and simulation to the rescue

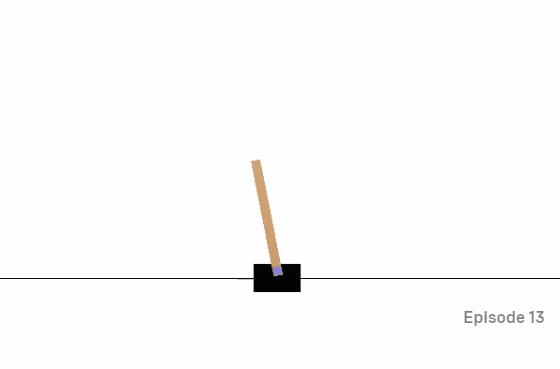

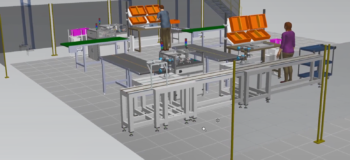

Training the robot’s digital twin in a virtual simulation environment is an emerging approach to shortening robot training time and reducing the cost of training with real hardware and products. For example, OpenAI Gym is a software library for setting up various simulation environments in which to train and compare RL algorithms. It provides an open-source interface to reinforcement learning tasks.

The goal is to prevent the pole from falling over.

source: https://gym.openai.com/envs/CartPole-v1/
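Gym-style environments expose a small interface: `reset()` returns an initial observation, and `step(action)` advances the simulation and returns the next observation together with a reward and end-of-episode flags. The sketch below imitates that convention, in the five-value form used by the newer Gymnasium API, with a self-contained, simplified cart-pole model so it runs without installing anything. The class name `MiniCartPole` and the constants are our own illustrative choices; for real experiments you would create the environment with `gym.make("CartPole-v1")` instead.

```python
import math
import random


class MiniCartPole:
    """Simplified cart-pole dynamics behind a Gym-style reset/step interface."""

    GRAVITY = 9.8
    CART_MASS = 1.0
    POLE_MASS = 0.1
    HALF_POLE_LENGTH = 0.5
    FORCE = 10.0
    DT = 0.02                          # seconds per simulation step
    ANGLE_LIMIT = math.radians(12)     # episode ends if the pole tilts past 12 degrees
    POSITION_LIMIT = 2.4               # or if the cart leaves the track

    def __init__(self, seed=None):
        self.rng = random.Random(seed)
        self.state = None

    def reset(self):
        # Small random perturbation of (x, x_dot, theta, theta_dot)
        self.state = [self.rng.uniform(-0.05, 0.05) for _ in range(4)]
        return tuple(self.state), {}

    def step(self, action):
        x, x_dot, theta, theta_dot = self.state
        force = self.FORCE if action == 1 else -self.FORCE
        total_mass = self.CART_MASS + self.POLE_MASS
        pole_moment = self.POLE_MASS * self.HALF_POLE_LENGTH
        cos_t, sin_t = math.cos(theta), math.sin(theta)

        # Equations of motion for a pole hinged on a moving cart
        temp = (force + pole_moment * theta_dot ** 2 * sin_t) / total_mass
        theta_acc = (self.GRAVITY * sin_t - cos_t * temp) / (
            self.HALF_POLE_LENGTH * (4.0 / 3.0 - self.POLE_MASS * cos_t ** 2 / total_mass)
        )
        x_acc = temp - pole_moment * theta_acc * cos_t / total_mass

        # Explicit Euler integration over one time step
        x += self.DT * x_dot
        x_dot += self.DT * x_acc
        theta += self.DT * theta_dot
        theta_dot += self.DT * theta_acc
        self.state = [x, x_dot, theta, theta_dot]

        terminated = abs(x) > self.POSITION_LIMIT or abs(theta) > self.ANGLE_LIMIT
        reward = 1.0                   # +1 for every step the pole is still up
        return tuple(self.state), reward, terminated, False, {}


env = MiniCartPole(seed=0)
obs, info = env.reset()
total_reward, terminated = 0.0, False
while not terminated and total_reward < 500:
    obs, reward, terminated, truncated, info = env.step(1)   # naive policy: always push right
    total_reward += reward
print(total_reward, terminated)
```

The naive policy drops the pole within a few dozen steps at most; an RL agent plugged into the same loop would learn to alternate pushes to keep it balanced.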

While industrial robotics simulation has been well established for a long time, recent advances have made significant progress in Sim2Real: transferring robot skills acquired in simulation to the real robotic system. Yet Sim2Real places high demands on robot simulators. Simulating a traditional robotic control system requires an understanding of the robot’s kinematics and dynamics, whereas a control policy learned through reinforcement learning also requires constant (and sometimes very accurate) multisensory feedback from its environment.

If a force-torque sensor mounted on the robot’s end-effector lets it “feel” and a camera lets it “see,” then all these sensory inputs must be properly represented and simulated within the virtual environment.
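One common way to present several sensors to a learning agent is to flatten them into a single observation vector. The sketch below assumes a six-axis force-torque reading and a small depth image in meters; the `SensorFrame` type, the `build_observation` helper and the scaling limits are hypothetical choices for illustration. It normalizes each modality and concatenates them. Real pipelines often keep images separate and feed them to a convolutional network instead.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SensorFrame:
    wrench: List[float]        # assumed layout: [Fx, Fy, Fz, Tx, Ty, Tz] at the end-effector
    depth: List[List[float]]   # assumed: small depth image from an RGBD camera, in meters


def build_observation(frame, max_force=50.0, max_torque=5.0, max_depth=2.0):
    """Hypothetical helper: scale each sensor modality and concatenate into one vector."""
    forces = [f / max_force for f in frame.wrench[:3]]
    torques = [t / max_torque for t in frame.wrench[3:]]
    # Cap depth at the camera's assumed maximum range, then flatten row by row
    pixels = [min(d, max_depth) / max_depth for row in frame.depth for d in row]
    return forces + torques + pixels


frame = SensorFrame(wrench=[10.0, 0.0, -25.0, 0.5, 0.0, 0.0],
                    depth=[[0.5, 1.0], [3.0, 2.0]])
obs = build_observation(frame)
print(len(obs), obs[:3], obs[6:])
```

Scaling matters because raw forces (tens of newtons) and depths (around a meter) would otherwise differ by orders of magnitude, which tends to slow down learning.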

To overcome these challenges, a robotics simulator should have the following key ingredients:

- Physics simulation capable of imitating the contact dynamics between the robot and its environment, and among other objects in the robot’s environment. Additional specialized physics models may be required depending on the materials handled (soft or deformable parts such as wires, rubber bands and cloth).

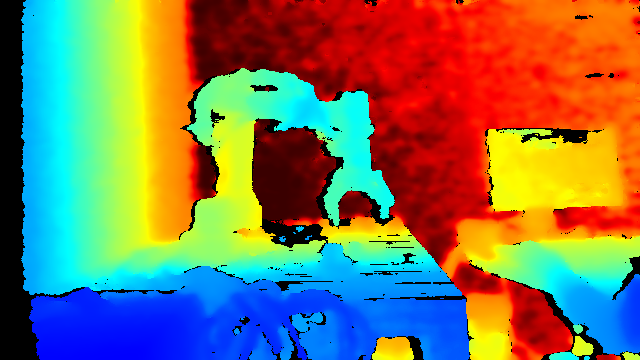

- A virtual camera (2D or 3D) modeled on the real camera’s specifications. The virtual camera should generate realistic images with proper material textures and lighting conditions. This includes simulating typical properties that follow from the camera specs, such as field of view and pixel noise.

- A framework for easy and intuitive scenario setup. This can be challenging, as most simulators and RL algorithms require considerable skill in programming, Machine Learning and physics modeling to operate, and are therefore used mainly by research groups. Our answer to this challenge is to encapsulate accumulated knowledge and algorithms, coupled with simulation models, into basic building blocks, or “skills”, that can be sequenced together to achieve complex, dexterous goals such as inserting novel parts in assembly tasks. More on this topic in our next posts.
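The field-of-view and pixel-noise properties of a virtual camera can be approximated with a pinhole model. The sketch below assumes an ideal pinhole camera with square pixels and zero-mean Gaussian depth noise, both simplifications. The `VirtualDepthCamera` class and its default values are placeholders, not any real camera’s specification. It derives the focal length in pixels from the horizontal field of view and projects a 3D point into a noisy depth pixel.

```python
import math
import random


def focal_length_px(image_width, horizontal_fov_deg):
    """Pinhole model: focal length in pixels from the horizontal field of view."""
    return (image_width / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)


class VirtualDepthCamera:
    def __init__(self, width=640, height=480, hfov_deg=69.0, noise_std_m=0.002, seed=0):
        # Placeholder specs; substitute the values from the real camera's datasheet
        self.width, self.height = width, height
        self.fx = self.fy = focal_length_px(width, hfov_deg)   # assumes square pixels
        self.cx, self.cy = width / 2, height / 2                # principal point at image center
        self.noise_std_m = noise_std_m
        self.rng = random.Random(seed)

    def project(self, x, y, z):
        """Map a point in the camera frame (meters, z forward) to (u, v, noisy depth)."""
        if z <= 0:
            return None                          # behind the camera
        u = self.fx * x / z + self.cx
        v = self.fy * y / z + self.cy
        if not (0 <= u < self.width and 0 <= v < self.height):
            return None                          # outside the field of view
        noisy_depth = z + self.rng.gauss(0.0, self.noise_std_m)
        return int(u), int(v), noisy_depth


cam = VirtualDepthCamera()
print(round(focal_length_px(640, 90.0), 3))   # tan(45 deg) = 1, so f = 320 px
print(cam.project(0.0, 0.0, 1.0))             # center pixel, depth close to 1.0 m
print(cam.project(5.0, 0.0, 1.0))             # far outside the horizontal FOV
```

A production-grade simulator would add lens distortion, depth-dependent noise and rendering of textures and lighting; this sketch covers only the geometric part.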

The reward function is based on the ability to achieve a stable hold on the part.

Accurate contact dynamics are needed for a successful Sim2Real transfer.

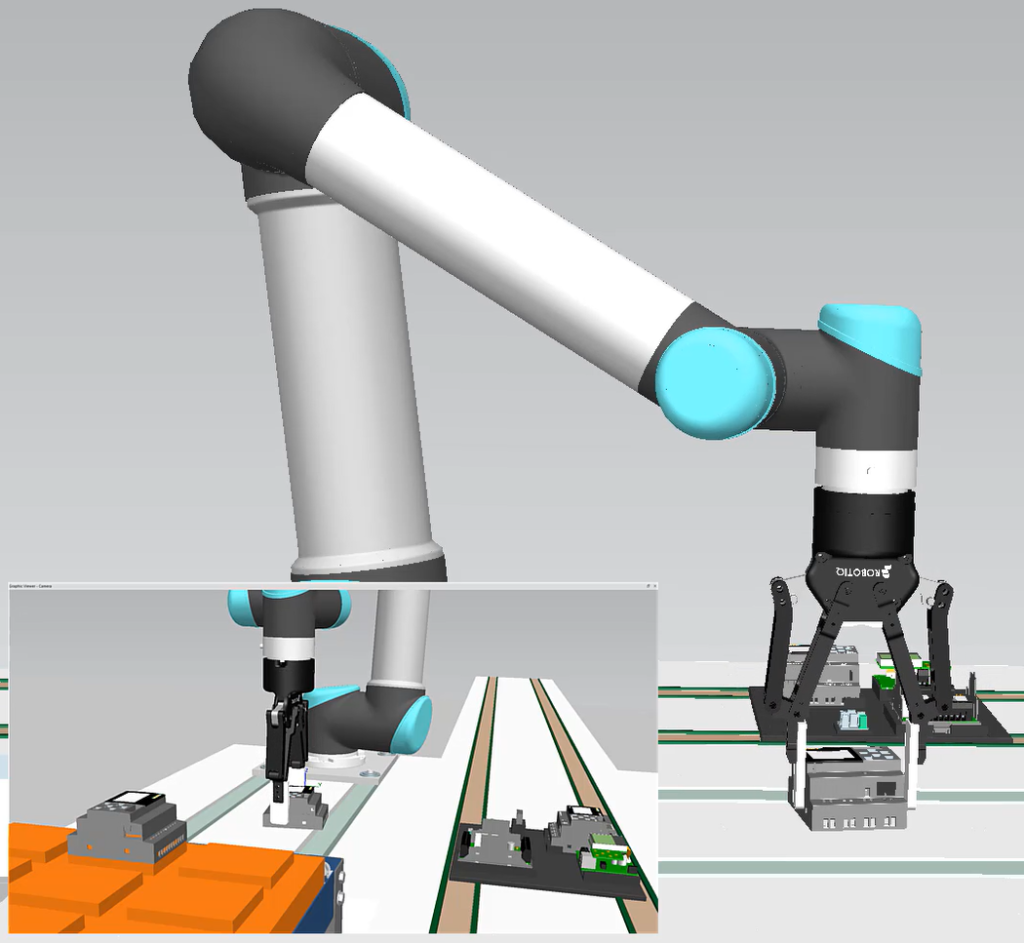

Tecnomatix Process Simulate Robotics RL Tools

Tecnomatix Process Simulate, the robotics and automation programming and simulation software from Siemens, has recently gained several cool features that help accelerate the research and deployment of RL-based robotic solutions. In the previous post, we shared details about how to set up a virtual camera and use it to automatically generate labeled images for training supervised learning algorithms. In the following video you can learn how to:

- Set up a virtual 3D (RGBD) camera

- Define a goal for a robotic part insertion assembly task

- Train a reinforcement learning algorithm in Python

Watch the video!

In the following video you can learn how to use the Process Simulate .NET API to:

- Define an environment for robotic part insertion tasks

- Create an observation message that will be sent to the agent

- Customize your own step and reward functions

- Set the epoch termination condition and collision handling

- Reset the epoch or the entire environment
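The video uses the Process Simulate .NET API, which we do not reproduce here. As an illustration of the same responsibilities, the Python sketch below implements a hypothetical, simplified peg-in-hole environment. Every name in it (`InsertionEnv`, the JSON message fields, the tolerances, the crude collision rule) is our own assumption and not part of the Tecnomatix API. It shows an observation message serialized for the agent, a step and reward function, epoch termination on success, collision or a step limit, and a reset.

```python
import json
import math


class InsertionEnv:
    """Hypothetical 3D peg-in-hole task; the peg tip moves in small Cartesian steps."""

    def __init__(self, hole=(0.0, 0.0, 0.0), start=(0.05, 0.03, 0.10),
                 step_size=0.01, tolerance=0.005, max_steps=200):
        self.hole = hole
        self.start = start
        self.step_size = step_size      # meters moved per unit action
        self.tolerance = tolerance      # success radius around the hole (meters)
        self.max_steps = max_steps
        self.reset()

    def reset(self):
        """Start a new epoch with the peg back at its start position."""
        self.tip = list(self.start)
        self.steps = 0
        return self._observation_message()

    def _distance(self):
        return math.dist(self.tip, self.hole)

    def _collides(self):
        # Crude stand-in for a simulator's collision check: the peg may only go
        # below the surface (z < 0) when it is centered over the hole.
        lateral = math.hypot(self.tip[0] - self.hole[0], self.tip[1] - self.hole[1])
        return self.tip[2] < 0.0 and lateral > self.tolerance

    def _observation_message(self):
        # Serialized observation that would be sent to the agent
        return json.dumps({"tip": self.tip, "distance": self._distance(), "step": self.steps})

    def step(self, action):
        """action: (dx, dy, dz), each component clipped to [-1, 1]."""
        self.tip = [p + self.step_size * max(-1.0, min(1.0, a))
                    for p, a in zip(self.tip, action)]
        self.steps += 1
        distance = self._distance()

        if self._collides():
            reward, done, info = -10.0, True, {"reason": "collision"}
        elif distance < self.tolerance:
            reward, done, info = 10.0, True, {"reason": "inserted"}
        elif self.steps >= self.max_steps:
            reward, done, info = -distance, True, {"reason": "timeout"}
        else:
            reward, done, info = -distance, False, {}   # dense shaping: closer is better
        return self._observation_message(), reward, done, info


env = InsertionEnv()
message = env.reset()
done = False
while not done:
    tip = json.loads(message)["tip"]
    # Scripted stand-in for a trained policy: head straight for the hole
    action = [(h - p) / env.step_size for h, p in zip(env.hole, tip)]
    message, reward, done, info = env.step(action)
print(info, reward)
```

Keeping the reward, termination and collision logic inside the environment means the learning algorithm on the agent side only ever sees messages, rewards and a done flag, so it can be swapped without touching the simulation.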

Watch the video!

In the next post we will dive deeper into Sim2Real and share some of the latest advancements that may help bridge the simulation-to-reality gap. Stay tuned!

Liked this post? Please comment and share!

Siemens – where today meets tomorrow!

