A new V&V co-simulation framework for autonomous UAVs

In May 2020, a commercial drone test flight was conducted near Riga International Airport in Latvia. During the flight, the company lost communication with the drone and lost track of its location.

The 26 kg drone carried enough fuel to stay in the air for 90 hours. Its loss had severe consequences, including the closure of airspace over an international airport.1 In a separate incident in Arizona, a military drone worth more than $1M was lost, with heavy financial repercussions.2

Unmanned aerial vehicles (UAVs) are expected to perform many different kinds of missions, and this versatility is one of their key strengths. Such a broad spectrum of applications requires drone manufacturers to develop a modular platform that can be adapted to mission needs. Both hardware and software components must therefore be customized, in particular the guidance, navigation and control (GNC) algorithm.

During verification and validation (V&V), one shall ensure that the software meets its specifications and requirements and fulfills its intended purpose. Testing GNC on a real drone can be time-consuming and expensive. One way to reduce the cost is to use a simulation tool to conduct a preliminary virtual test campaign.

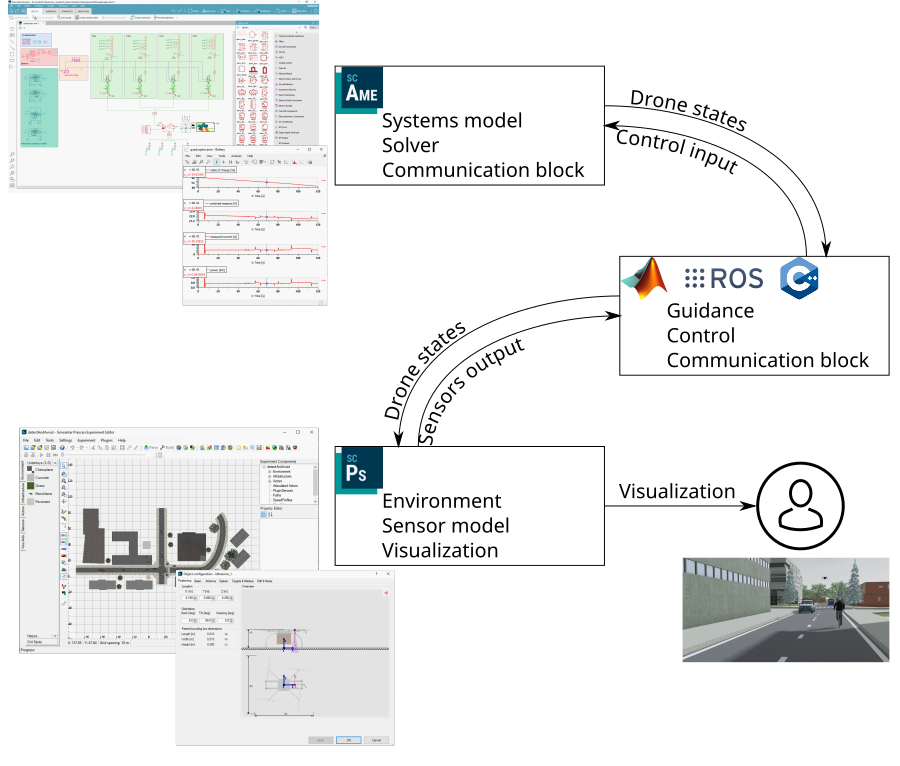

Co-simulation framework for V&V

To add value, simulation models must provide high-fidelity results while keeping CPU time low enough for real-time execution. Three elements shall be simulated: the plant model of the drone, its perception of the environment and the controller.

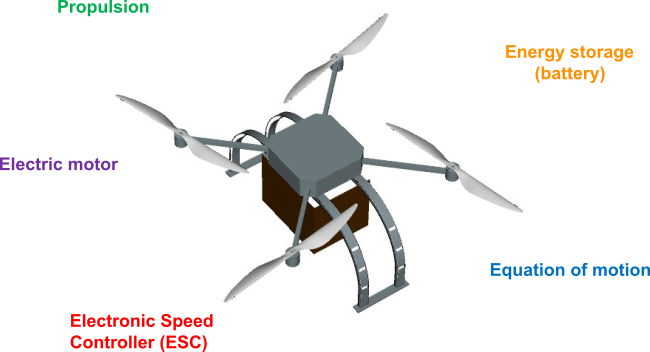

Let’s begin with the plant model. The drone spans several physical domains: mechanical (equations of motion, propulsion), electrical (motor, battery) and control. It is therefore a complex multi-physics system, and modeling its subsystems requires a tool that handles multiple physical domains.
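To make the coupling between domains concrete, here is a minimal sketch of a vertical-axis plant in which a motor, a propulsion law, a rigid body and a battery exchange variables at each time step. It is not a Simcenter Amesim model: the function name `step_plant`, the `PlantState` fields and every parameter value are illustrative assumptions.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class PlantState:
    altitude: float = 0.0     # m
    velocity: float = 0.0     # m/s, positive upward
    rotor_speed: float = 0.0  # rad/s, assumed identical for all rotors
    charge_used: float = 0.0  # A*s drawn from the battery


def step_plant(state, throttle, dt,
               mass=1.5, n_rotors=4, k_thrust=1.5e-5,
               motor_tau=0.05, max_rotor_speed=800.0,
               amps_per_rad_s=0.02):
    """Advance the toy plant by dt seconds with explicit Euler steps.

    All default parameter values are made-up placeholders.
    """
    throttle = min(max(throttle, 0.0), 1.0)

    # Motor: first-order lag between throttle command and rotor speed.
    target_speed = throttle * max_rotor_speed
    rotor_speed = state.rotor_speed + dt * (target_speed - state.rotor_speed) / motor_tau

    # Propulsion: thrust grows with the square of the rotor speed.
    thrust = n_rotors * k_thrust * rotor_speed ** 2

    # Mechanical: Newton's second law along the vertical axis.
    accel = thrust / mass - G
    velocity = state.velocity + dt * accel
    altitude = state.altitude + dt * velocity
    if altitude <= 0.0 and velocity < 0.0:
        altitude, velocity = 0.0, 0.0  # resting on the ground

    # Battery: crude assumption that current scales with rotor speed.
    current = n_rotors * amps_per_rad_s * rotor_speed
    charge_used = state.charge_used + current * dt

    return PlantState(altitude, velocity, rotor_speed, charge_used)
```

Calling `step_plant` in a loop with a constant throttle shows how a change in one domain (for example a slower motor time constant) propagates to the others, which is the kind of interaction a multi-physics tool resolves with far more detail.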

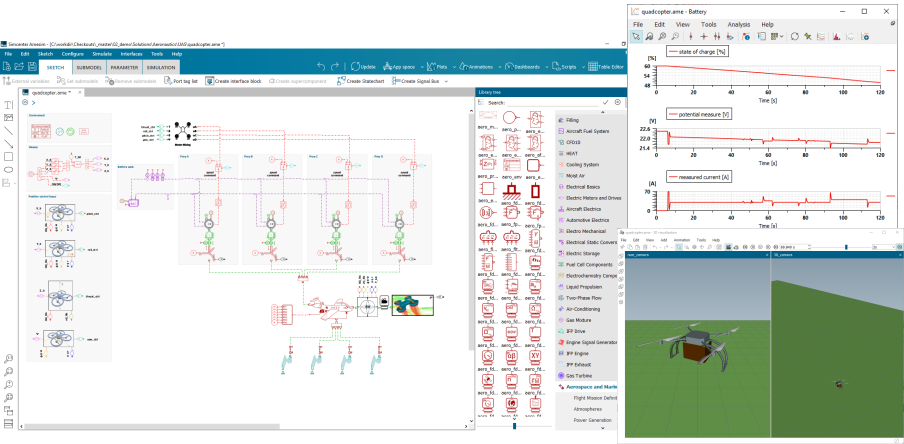

Simcenter Amesim is dedicated to the modeling and simulation of dynamic, multi-physics systems. The tool supports both performance analysis and virtual system integration. Another key benefit is its scalable modeling approach: model accuracy can increase as the design matures (for example, moving between a tabulated approach and analytical equations). Furthermore, Simcenter Amesim is an open mechatronic simulation platform with interfaces such as the Functional Mock-up Interface (FMI) and MATLAB/Simulink. It also supports real-time applications, so software-in-the-loop (SIL) and hardware-in-the-loop (HIL) simulations can be performed.

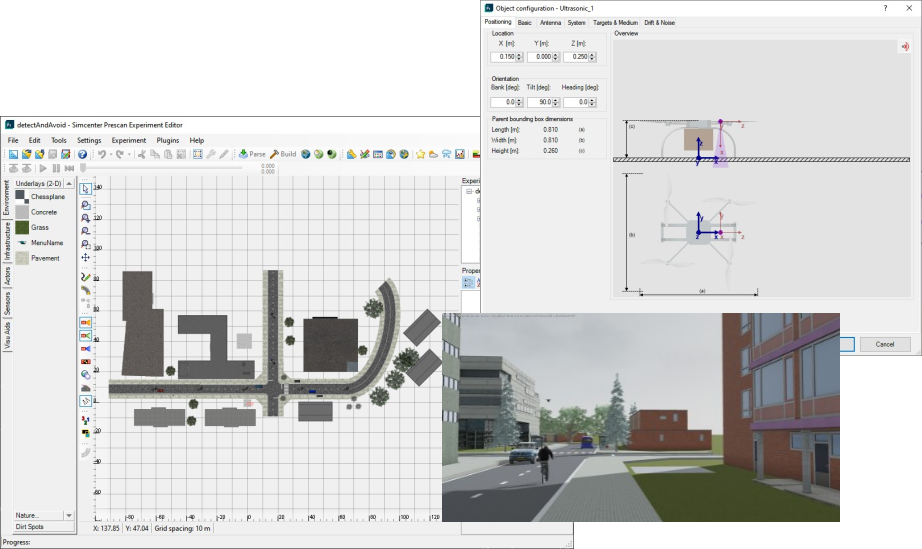

To perform autonomous missions, the drone must correctly perceive its environment. Both the 3D environment and the drone’s sensors are therefore modeled in Simcenter Prescan. This tool is dedicated to modeling a vehicle’s surroundings (roads, buildings, pedestrians, other vehicles) and to simulating the vehicle’s sensors at several levels of fidelity, from ideal to physics-based. Lighting and weather conditions (sun position, rain, snow, fog) can be changed with a single click.

The objective is now to couple the drone plant model (UAV subsystem performance), the environment perception model and the GNC algorithm. Three variants have been developed; they differ in how the GNC algorithm is connected:

- GNC in MATLAB: model-in-the-loop analysis (early design stage of development)

- GNC in C++: software-in-the-loop analysis (test the controller code)

- GNC as a ROS node: use of an off-the-shelf autopilot abstraction and HIL analysis
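The common idea behind the three variants, a fixed plant and perception model with an interchangeable controller, can be sketched as a small master loop. This is only an illustration under stated assumptions: the `Controller` protocol, `PDAltitudeHold`, `plant_step`, `ideal_sensor` and `run_cosim` are hypothetical names, the plant is a toy point mass, and none of this reflects the actual Simcenter interfaces.

```python
from typing import Protocol, Tuple

State = Tuple[float, float]  # (altitude in m, vertical speed in m/s)


class Controller(Protocol):
    """Anything exposing this method can be plugged into the loop."""

    def command(self, t: float, measurement: State) -> float: ...


class PDAltitudeHold:
    """Stand-in GNC: PD law returning a net vertical acceleration (m/s^2)."""

    def __init__(self, target: float, kp: float = 4.0, kd: float = 4.0):
        self.target, self.kp, self.kd = target, kp, kd

    def command(self, t: float, measurement: State) -> float:
        z, vz = measurement
        return self.kp * (self.target - z) - self.kd * vz


def plant_step(state: State, accel: float, dt: float) -> State:
    """Toy plant: point mass driven by a net vertical acceleration."""
    z, vz = state
    vz += accel * dt
    z += vz * dt
    return (z, vz)


def ideal_sensor(state: State) -> State:
    """Toy perception model: returns the true state without noise."""
    return state


def run_cosim(controller: Controller, state: State, dt: float, t_end: float):
    """Fixed-step master loop: sense, compute the command, advance the plant."""
    history = [(0.0, state)]
    for k in range(round(t_end / dt)):
        t = k * dt
        measurement = ideal_sensor(state)
        accel = controller.command(t, measurement)
        state = plant_step(state, accel, dt)
        history.append((t + dt, state))
    return history


history = run_cosim(PDAltitudeHold(target=5.0), state=(0.0, 0.0), dt=0.01, t_end=10.0)
final_time, (final_altitude, _) = history[-1]
print(f"altitude after {final_time:.1f} s: {final_altitude:.3f} m")
```

In such a design, a MATLAB model, compiled C++ code or a ROS node would each be wrapped behind the same `command` signature, so the plant and sensor models would not need to change between variants.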

Let’s use this co-simulation framework to test GNC algorithms on a virtual test bench. Two scenarios from the logistics use case of the COMP4DRONES European project are run. This Electronic Components and Systems for European Leadership Joint Undertaking (ECSEL JU) project aims to provide a framework of key enabling technologies for safe and autonomous drones.

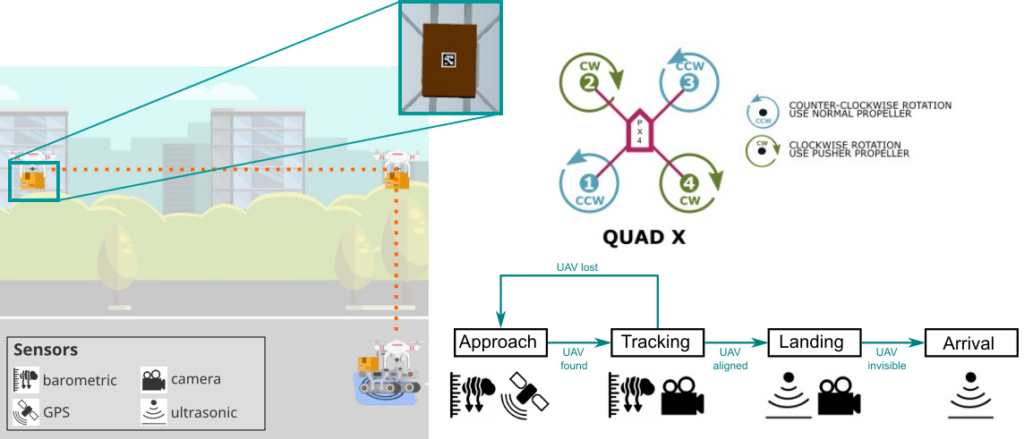

Precision landing use case

In the first scenario, the drone is used on a hospital campus to deliver a parcel from one building to another. The parcel contains a sample of spinal fluid that must be handled with care. The drone must land on a waiting droid equipped with an upward-pointing camera. So that the camera can detect the drone’s position accurately, a QR code marker is printed on the underside of the package. Using the camera image, the droid guides the drone to a precise landing.

The simulation focuses on the landing phase of the mission and shall ensure a landing position error of less than five centimeters.
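As an illustration of how the five-centimeter requirement could be checked from the camera image, the sketch below converts the marker’s pixel offset into a lateral offset in meters with a pinhole camera model. The function names, the camera intrinsics and the assumption that the height above the camera is known are all hypothetical; the actual detection pipeline is not described here.

```python
import math


def lateral_offset_m(marker_px, principal_point_px, focal_px, height_m):
    """Lateral offset of the marker from the camera axis, in meters.

    Pinhole model: offset = pixel offset * height / focal length (in pixels).
    Assumes an undistorted image and a camera pointing straight up.
    """
    u, v = marker_px
    cx, cy = principal_point_px
    fx, fy = focal_px
    dx = (u - cx) * height_m / fx
    dy = (v - cy) * height_m / fy
    return dx, dy


def within_tolerance(dx, dy, tol_m=0.05):
    """True when the horizontal error is below the five-centimeter target."""
    return math.hypot(dx, dy) < tol_m


# Hypothetical numbers: 1280x720 image, 800 px focal length, drone 1 m above the camera.
dx, dy = lateral_offset_m((660, 360), (640, 360), (800.0, 800.0), height_m=1.0)
print(f"offset: ({dx:.3f}, {dy:.3f}) m, within tolerance: {within_tolerance(dx, dy)}")
```

The same 20-pixel offset corresponds to a larger metric error when the drone is higher above the camera, so a check like this becomes more demanding on the detection as the height increases.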

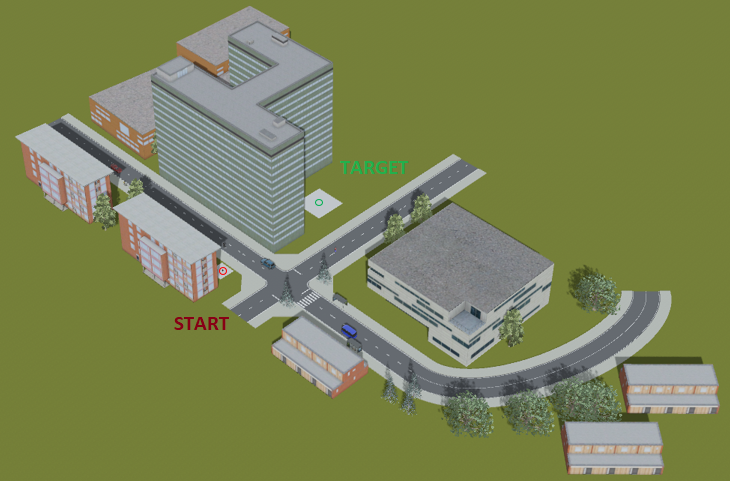

Detect and avoid use case

In the second scenario, the drone operates in an urban environment and shall fly between buildings. A laser imaging detection and ranging (LiDAR) sensor is used to sense the environment. The algorithm checks whether an obstacle is in front of the drone or beside it, then computes a waypoint toward which the drone must fly.
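The two steps described above, classifying an obstacle as in front of or beside the drone and then computing a waypoint, can be sketched as follows. This is not the project’s algorithm: the sector angle, safety range, step lengths and the sidestep rule are assumptions chosen only to make the logic concrete.

```python
import math


def classify(range_m, bearing_rad,
             front_half_angle=math.radians(30.0), safety_range=10.0):
    """Label one LiDAR return as 'clear', 'front' or 'side'.

    bearing_rad is measured in the body frame, positive to the left.
    """
    if range_m > safety_range:
        return "clear"
    return "front" if abs(bearing_rad) <= front_half_angle else "side"


def next_waypoint(position, heading_rad, returns, step=5.0, sidestep=3.0):
    """Compute the next waypoint in world coordinates.

    returns: list of (range_m, bearing_rad) tuples from the LiDAR.
    """
    front = [r for r in returns if classify(*r) == "front"]
    side = [r for r in returns if classify(*r) == "side"]

    forward, lateral = step, 0.0
    if front:
        rng, bearing = min(front, key=lambda r: r[0])
        # Move away from the nearest front obstacle; go left if it is dead ahead.
        lateral = -sidestep if bearing > 0.0 else sidestep
        forward = min(step, 0.5 * rng)
    elif side:
        rng, bearing = min(side, key=lambda r: r[0])
        # Keep flying forward while drifting away from the side obstacle.
        lateral = -0.5 * sidestep if bearing > 0.0 else 0.5 * sidestep

    # Rotate the body-frame displacement (x forward, y left) into the world frame.
    x, y = position
    c, s = math.cos(heading_rad), math.sin(heading_rad)
    return (x + forward * c - lateral * s, y + forward * s + lateral * c)


# Hypothetical return: obstacle 4 m away, slightly to the left of the flight direction.
print(next_waypoint((0.0, 0.0), 0.0, [(4.0, 0.1)]))
```

With no return inside the safety range, the waypoint simply lies one step ahead along the current heading.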

Conclusion

Simulation tools offer several benefits for verifying and validating GNC algorithms. Boundary conditions such as light, wind or air temperature can be changed easily, so the control algorithm can be tested virtually under numerous flight conditions. One can also identify the operating range beyond which the system reaches critical conditions. Finally, safety-critical conditions such as motor failure or wind disturbances can be assessed and mitigated. Using this novel co-simulation framework to test algorithms virtually leads to a more effective test campaign.
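The idea of sweeping a boundary condition to find the operating range can be illustrated with a toy example. The sketch below assumes a one-dimensional position-hold loop with a saturated PD controller and a constant wind acceleration; the model, gains, tolerance and wind values are made up for illustration and are not results from the framework.

```python
def max_position_error(wind_accel, a_max=3.0, kp=4.0, kd=4.0,
                       dt=0.01, t_end=20.0):
    """Largest position error (m) while holding position against a constant wind.

    Toy model: point mass, PD controller, actuator limited to +/- a_max (m/s^2).
    """
    x, v, worst = 0.0, 0.0, 0.0
    for _ in range(round(t_end / dt)):
        u = -kp * x - kd * v
        u = max(-a_max, min(a_max, u))  # actuator saturation
        v += (u + wind_accel) * dt
        x += v * dt
        worst = max(worst, abs(x))
    return worst


TOLERANCE_M = 0.6                      # assumed acceptance threshold
winds = [0.5 * i for i in range(9)]    # 0.0 to 4.0 m/s^2 of wind disturbance
operating_range = [w for w in winds if max_position_error(w) < TOLERANCE_M]
print("wind levels within tolerance:", operating_range)
```

In a real campaign each call would be a full co-simulation run, but the structure stays the same: vary one condition, evaluate a pass or fail criterion, and report where the system stops meeting it.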

References

1 BBC (2020) Latvian drone fueled for days goes missing, restricting airspace.

2 The Register (2017) US military drone goes AWOL, ends up crashing into tree 623 miles away.