A Simcenter personal consultant realized with Generative AI

You could do it all yourself, and you have probably been doing so for many years: trawling through user documentation, installation guides and knowledge base articles, all in an attempt to find the answer to a specific question you have in mind. A simple text search might take you to a relevant PDF document, but the rest is up to you. It is tiresome, manually intensive and rarely pleasurable. What if you could just call on a Personal Consultant, a Digital Helper, to answer your question directly? Just ask it what you want to know and it provides an appropriate summarized response. This was still fantasy even two years ago; today, with the advent of Generative AI LLMs (Large Language Models), it is becoming reality.

Good ideas, hampered by immature technology

It’s not as if humanity hasn’t attempted to automate otherwise menial tasks before. Take the wheel, or the horse and cart: much easier than walking. For success there has to be a balance between the desired automation and the capability of technology to deliver it. There are various examples of good ideas that were attempted well before the technology was mature enough to truly realize them. These were perhaps not ‘failures’ as such, but they often fell well short of user expectations.

In recent years Siri and Alexa got closer to what a user might expect from a Personal Consultant, but it wasn’t until the release of ChatGPT in November 2022 that everyone’s expectations changed. It marked a step change in the ability to learn from vast amounts of text data, understand complex questions, remember previous interactions within a session, and generate comprehensive, coherent and contextually appropriate responses.

What might be the job description of a personal consultant?

Whereas a Personal Assistant might act on your behalf, a Personal Consultant is there just to answer your questions. To do so it must have access to all relevant information resources. From a simulation software perspective this would be all the user documentation, installation instructions, knowledge base articles, community discussions, technical reference material and so on: a wealth of information that you would otherwise have to datamine yourself. The Personal Consultant would need to be available 24/7 (that’s OK, computers never sleep), understand any language, recognize equations and images when constructing its response, and guarantee that the answer provided is valid and trustworthy.

So in terms of job requirements for a Simcenter Personal Consultant, it would have to be proficient in all of the following:

- 24/7 Support

- Customized or Tailored for Simcenter users (filters)

- Trustworthy answers (no hallucination)

- Multilingual

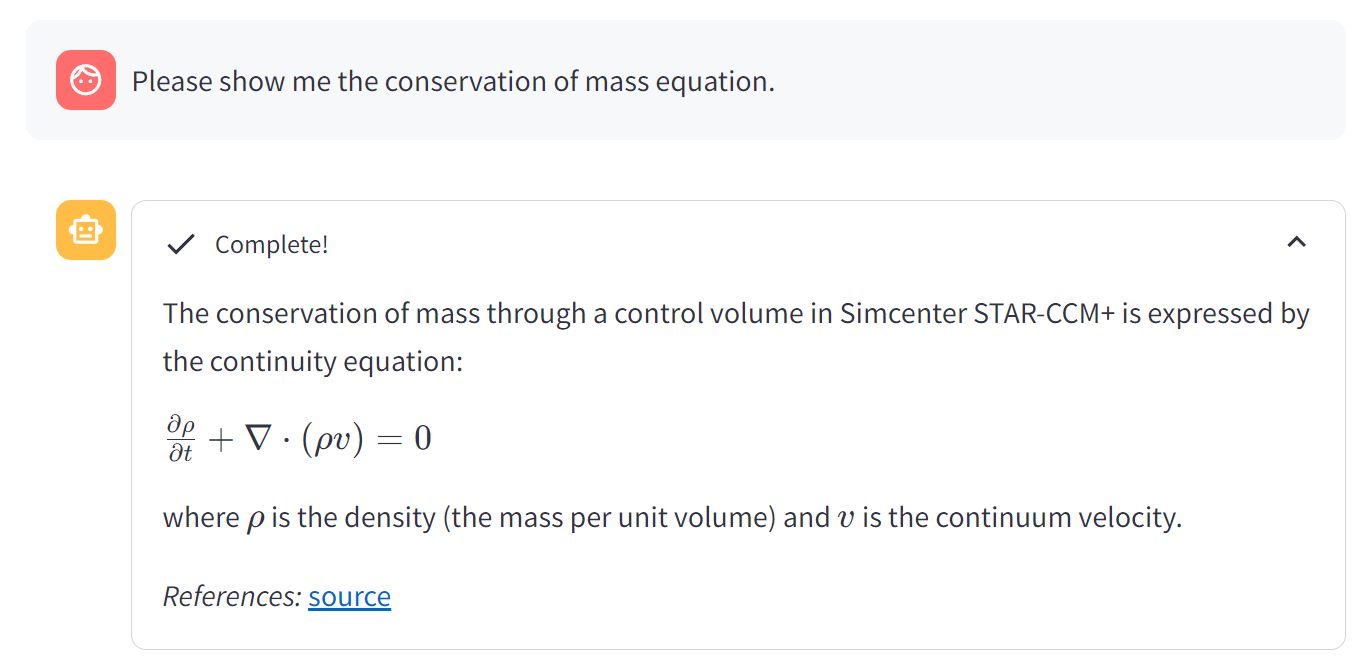

- Equation Recognition

- Image Support

Beyond simple text responses

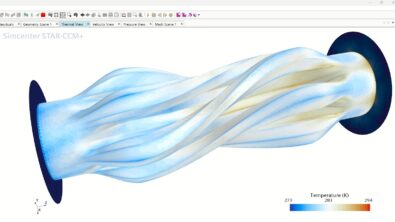

A standard LLM will just provide a summarized text response based on the text-based corpus of information available to it. That is fine until one appreciates that the corpus it can access also includes equations and images. As these are so often used in product documentation, a Personal Consultant has to act as a physics simulation expert: it must correctly interpret equations and images, and reproduce them where the request prompt calls for it.

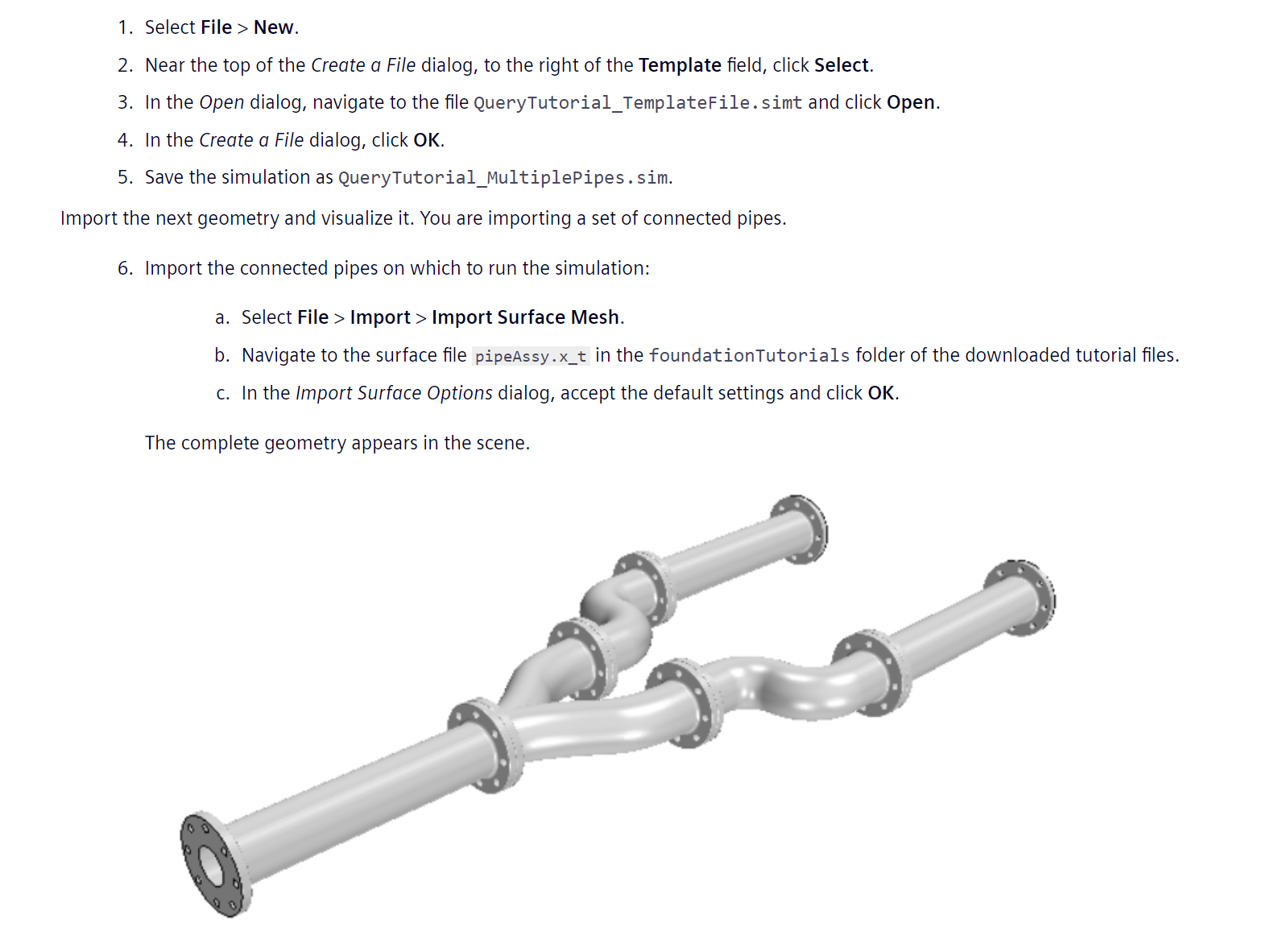

Another potential area for exploiting the capabilities of your consultant is image recognition. Some LLMs can extract quite a lot of information from an image. Whether it is a formula, a geometric object or a mathematical plot, an LLM-based consultant can explain the image to the user, and the image can also be tagged for inclusion in a search. As an example, if the user is searching for a template simulation file for ‘pipe systems’, the chatbot can draw on an entry in the available training data that contains a picture of a pipe alongside the text description ‘template simulation file’, even though the phrase ‘pipe system’ never appears in the text.
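The pipe example can be sketched in a few lines of Python. This is only an illustration of the idea, not how any Simcenter chatbot is built: the entries, their `image_tags` (assumed here to have been produced beforehand by an image-capable model) and the `search` function are all hypothetical.

```python
import re

def tokens(text):
    """Lower-case a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def search(query, entries, use_image_tags=True):
    """Return the ids of entries sharing at least one word with the query.

    When use_image_tags is True, words from the (hypothetical) image-derived
    tags are searched in addition to the entry's own text.
    """
    query_words = tokens(query)
    hits = []
    for entry in entries:
        searchable = tokens(entry["text"])
        if use_image_tags:
            for tag in entry["image_tags"]:
                searchable |= tokens(tag)
        if query_words & searchable:
            hits.append(entry["id"])
    return hits

# Made-up entries: the text never mentions pipes, only the image tags do.
ENTRIES = [
    {"id": "template-file", "text": "Template simulation file",
     "image_tags": ["pipe", "pipe network"]},
    {"id": "install", "text": "Installation instructions", "image_tags": []},
]

text_only = search("pipe systems", ENTRIES, use_image_tags=False)
with_tags = search("pipe systems", ENTRIES)
```

With text alone the query finds nothing; once the image-derived tags are included, the template entry is returned.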

How can you trust your personal consultant?

The ability of an LLM to take a prompt question and then provide a summarized response from a provided documentation set (including links to the original documents), using a RAG (retrieval-augmented generation) approach, is already quite standard, even in the context of the rapid change that LLM technologies are currently undergoing. However, LLMs can be notoriously non-deterministic: ask the same question twice and you might well get a different response each time. The variability of a response can be reduced, but at the expense of a less natural-sounding response. LLMs are also liable to ‘hallucinate’, responding with incorrect, misleading or entirely fabricated information. To better guarantee LLM responses, each response needs to be evaluated to judge, or even quantify, its validity. The next wave in the maturation of Personal Consultants that utilize LLM technologies will be focused on evaluating and indicating response validity and trustworthiness. Some level of certification will be demanded.
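To make the RAG idea a little more concrete, here is a minimal sketch in Python. It is not the implementation behind any Simcenter product. The document names, their one-line contents and the functions `retrieve` and `build_prompt` are made up for illustration, a simple bag-of-words cosine similarity stands in for whatever retrieval a real system would use, and the call to an actual LLM is left out entirely.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lower-case and split into words, keeping '+' and '-' (e.g. 'star-ccm+')."""
    return re.findall(r"[a-z0-9+\-]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, docs, k=2):
    """Return the names of the k documents most similar to the question."""
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(text))), name)
              for name, text in docs.items()]
    scored.sort(reverse=True)
    return [name for score, name in scored[:k] if score > 0]

def build_prompt(question, docs, k=2):
    """Assemble the text that would be sent to an LLM, plus the source names."""
    sources = retrieve(question, docs, k)
    context = "\n".join(f"[{name}] {docs[name]}" for name in sources)
    prompt = ("Answer using only the sources below and cite them.\n"
              f"{context}\nQuestion: {question}")
    return prompt, sources

# Hypothetical mini-corpus; a real one would hold the full documentation set.
DOCS = {
    "release_notes": "Simcenter STAR-CCM+ 2402 is the first version with "
                     "the SPH solver fully integrated.",
    "turbomachinery_notes": "STAR-CCM+ 2402 introduces support for blade "
                            "fillets in the Turbomachinery structured mesh capability.",
    "install_guide": "Placeholder text: run the installer and point it at "
                     "a license server.",
}
QUESTION = "In which version of STAR-CCM+ was the SPH solver fully integrated?"

prompt, sources = build_prompt(QUESTION, DOCS)
```

Because the retrieved source names travel with the prompt, the final answer can link back to the original documents, which is what lets a reader check the response for themselves.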

Trustworthiness metrics

In the development of LLM-augmented Personal Consultants, the notion of trustworthiness needs to be defined more precisely. Doing so allows the performance of a range of different LLMs to be compared and contrasted. So what is trust and how might it be quantified?

Two terms might be considered: ‘Faithfulness’ and ‘Relevance’.

- Faithfulness is taken to be a measure of how correct, or accurate, the answer is in the context of the corpus of information that is available to the LLM: basically, whether the answer is factually correct or not. Consider this prompt question: ‘In which version of STAR-CCM+ was the first version of the SPH solver fully integrated?’ (the correct answer is Simcenter STAR-CCM+ 2402):

  - High-faithfulness response: The first version of the SPH solver was fully integrated in STAR-CCM+ 2402.

  - Low-faithfulness response: The first version of the SPH solver was fully integrated in STAR-CCM+ 2306.

- Relevance is a measure of how well the question is answered, that is, how closely the response relates to the intention of the question. Consider this prompt question: ‘What is new in STAR-CCM+ 2402?’:

  - High-relevance answer: STAR-CCM+ 2402 introduces support for blade fillets in the Turbomachinery structured mesh capability, enhancing accuracy in simulations.

  - Low-relevance answer: STAR-CCM+ 2402 has some updates and improvements, including bug fixes and performance enhancements.
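As a rough illustration of how faithfulness might be turned into a number, the sketch below checks how many of an answer’s content words are supported by the retrieved context. This is a deliberately crude lexical proxy and not the metric used in our evaluation; the function names, the stop-word list and the one-line context are assumptions made for the example. Relevance is left out on purpose, since judging whether an answer addresses the intention of a question needs a semantic comparison that word matching cannot provide.

```python
import re

# Small, hand-picked stop-word list (an assumption for this example only).
STOP_WORDS = {"a", "in", "is", "of", "the", "was", "with"}

def content_words(text):
    """Lower-cased words of a text, minus stop words, keeping '+' and '-'."""
    words = re.findall(r"[a-z0-9+\-]+", text.lower())
    return {w for w in words if w not in STOP_WORDS}

def unsupported_words(answer, context):
    """Content words of the answer that do not occur in the context."""
    return content_words(answer) - content_words(context)

def faithfulness(answer, context):
    """Fraction of the answer's content words that occur in the context."""
    words = content_words(answer)
    if not words:
        return 0.0
    return 1.0 - len(unsupported_words(answer, context)) / len(words)

# One-line stand-in for the documentation retrieved for the SPH question.
CONTEXT = ("Simcenter STAR-CCM+ 2402 is the first version with the SPH "
           "solver fully integrated.")
HIGH = "The first version of the SPH solver was fully integrated in STAR-CCM+ 2402"
LOW = "The first version of the SPH solver was fully integrated in STAR-CCM+ 2306"

high_score = faithfulness(HIGH, CONTEXT)
low_score = faithfulness(LOW, CONTEXT)
```

The second response scores lower only because ‘2306’ does not appear in the context. A reworded but correct answer would also be penalized by this check, which is one reason practical evaluations tend to rely on something more semantic than word matching.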

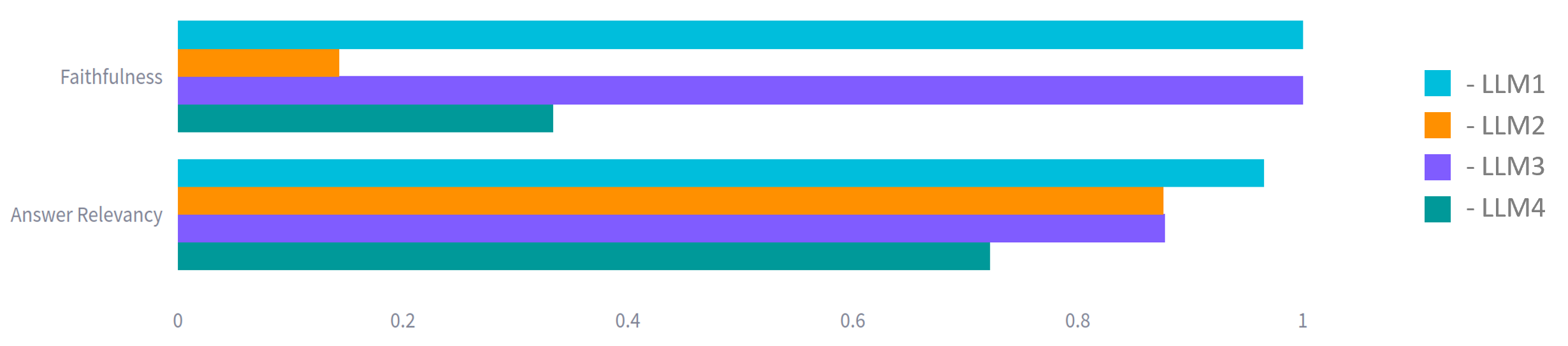

There is now a plethora of LLMs that can give some answer; the challenge is to determine which one is most appropriate to be considered for implementation. Not all LLMs are equal, as shown by the quantified comparison from our evaluation of four different LLMs for a given prompt.

Well-established methods, such as unit and regression testing, have evolved to support the development of classical deterministic software, which does (or should do) the same thing every time you execute it. LLMs don’t behave in the same way. Developing a testing approach that accommodates non-determinism is an emerging challenge.
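One way to think about testing under non-determinism is to ask the same question many times and report rates rather than a single pass or fail. The sketch below assumes a toy stand-in for an LLM: three candidate answers with made-up scores, sampled through a temperature-scaled softmax. None of the names or numbers come from a real model; they only illustrate how a lower temperature reduces variability.

```python
import math
import random
from collections import Counter

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_answers(candidates, scores, temperature, n, seed=0):
    """Draw n answers from the toy model; the seed makes a run repeatable."""
    rng = random.Random(seed)
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(candidates, weights=probs, k=n)

def pass_rate(answers, is_correct):
    """Fraction of sampled answers accepted by the checker."""
    return sum(1 for a in answers if is_correct(a)) / len(answers)

def consistency(answers):
    """Fraction of samples that agree with the most frequent answer."""
    return Counter(answers).most_common(1)[0][1] / len(answers)

# Made-up candidate answers and scores standing in for a real LLM.
CANDIDATES = ["STAR-CCM+ 2402", "STAR-CCM+ 2306", "I am not sure"]
SCORES = [3.0, 1.5, 0.5]

def mentions_2402(answer):
    return "2402" in answer

cold = sample_answers(CANDIDATES, SCORES, temperature=0.01, n=50)
warm = sample_answers(CANDIDATES, SCORES, temperature=2.0, n=50)
```

A test suite built this way would assert on thresholds, for example ‘at least 95% of 50 runs must pass’, instead of expecting identical output on every execution.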

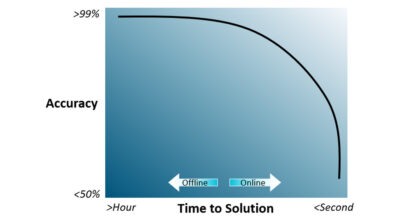

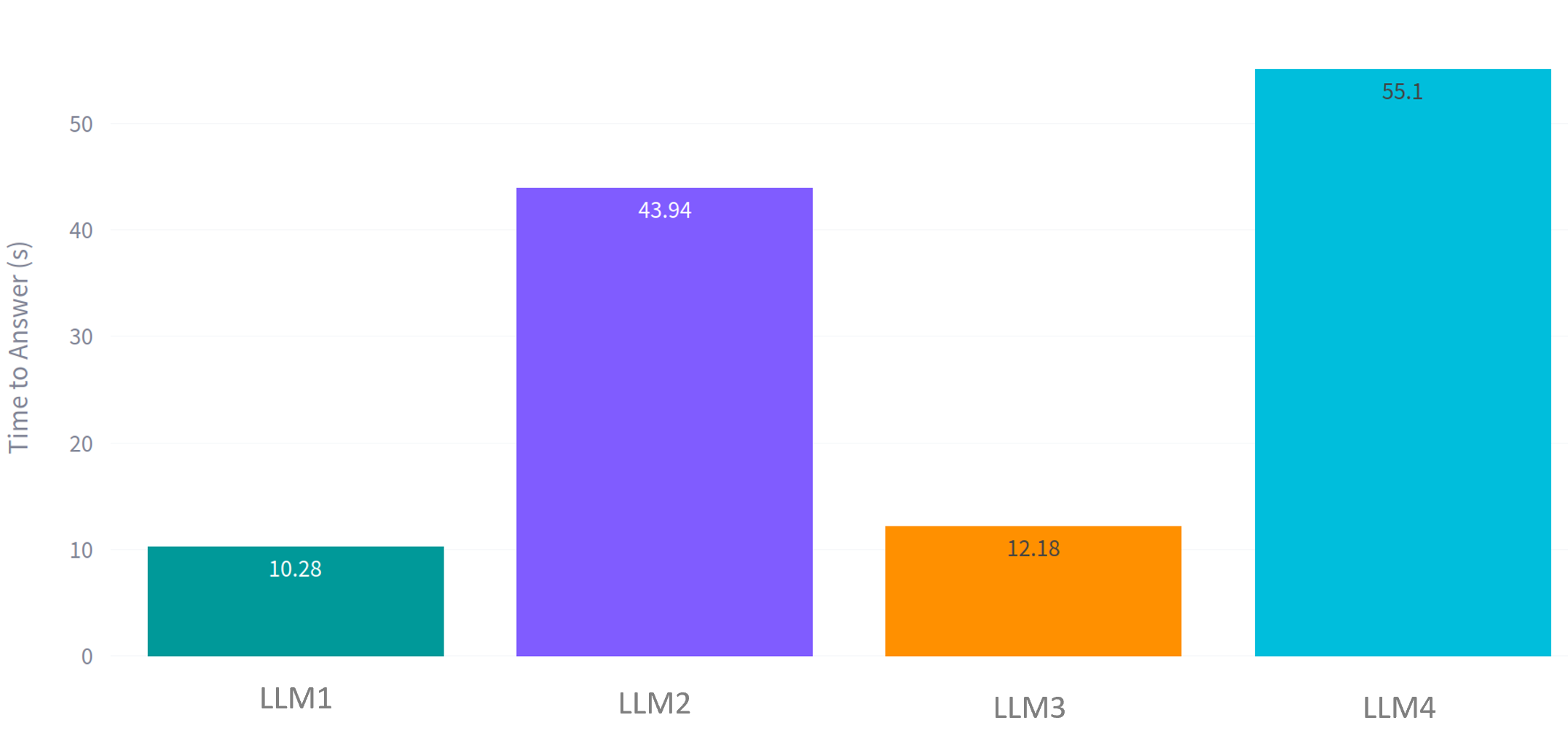

Response time

As with many things in life, ‘Good things come to those who wait.’ The varying capabilities of available LLMs, coupled with how they are invoked, what data is used to feed them and how their responses might be further refined, all come with a trade-off between the time taken to provide a response and the trustworthiness of that response. Is an answer provided in less than a second more attractive than a better one that takes 60 seconds? What time penalty would a user be willing to accept for not having to do the heavy lifting of datamining themselves? Maybe for a single question 30 seconds isn’t too long to wait, but if you’re having a conversation with your Personal Consultant such tardy responses would be difficult to bear. Suffice to say the response time can vary wildly depending on which LLM technology is being utilized, and how it is driven.
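The trade-off can be framed as a simple selection problem: given how long a user is prepared to wait, pick the most trustworthy option that fits. The sketch below assumes three hypothetical configurations; their names, response times and trust scores are placeholders chosen for illustration, not measurements.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

@dataclass(frozen=True)
class Option:
    name: str
    seconds: float  # placeholder response time
    trust: float    # placeholder trust score between 0 and 1

def best_within_budget(options: Sequence[Option],
                       budget_seconds: float) -> Optional[Option]:
    """Return the most trusted option that answers within the time budget."""
    affordable = [o for o in options if o.seconds <= budget_seconds]
    if not affordable:
        return None
    return max(affordable, key=lambda o: o.trust)

# Illustrative placeholders only.
OPTIONS = [
    Option("fast", seconds=0.8, trust=0.60),
    Option("balanced", seconds=8.0, trust=0.80),
    Option("thorough", seconds=60.0, trust=0.90),
]

single_question = best_within_budget(OPTIONS, budget_seconds=60.0)
conversation = best_within_budget(OPTIONS, budget_seconds=2.0)
```

With a generous budget the slow, thorough option wins; in a conversation, where only a couple of seconds are tolerable, the choice falls back to the fast one.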

As is common with the expectations of simulation technology, the holy grail is an acceptably accurate answer provided instantaneously. LLMs are themselves a form of simulation, predicting an answer based on the request made of them, and so suffer the same trade-off.

A Generative AI Simcenter consultant-to-assistant innovation roadmap

Instead of trawling through user manuals and product documentation yourself, having an LLM act as a Generative AI interface between your query and the available information is a massive step forward. In this blog we make a conscious distinction between a Personal Consultant and a Personal Assistant. A consultant will give you information, answer your questions and maybe make recommendations, and in doing so enables you to make better decisions, faster. A Personal Assistant does more. They are sanctioned to work on your behalf, to execute your intentions, to interpret your wishes and to make them a reality.

Before you might trust a Personal Assistant, you would at the very least need confidence in a Personal Consultant. Chatbots that provide a conversational and interactive interface into available documentation are the next step, but not the final one, in an ongoing automation roadmap.

Whereas a Personal Consultant will aid you in your knowledge and understanding, a Personal Assistant would in addition do the work for you, from simulation model manipulation and managing your solution processes through to interpreting the results on your behalf. The least that needs to happen first is the certification of Personal Consultant answers. Beyond that, the opportunities that a Generative AI Personal Assistant might provide will continue the revolution in how a user can extract as much value as possible, as quickly as possible, with little to no training, from our Simcenter portfolio.