Engineering Simulation Part 3 – Modelling

‘Everything should be made as simple as possible, but not simpler’

Albert Einstein (possibly apocryphal)

Whether or not Einstein said this, and whether it was originally said of music rather than physics, the sentiment certainly holds true for engineering simulation. Embellishing a simulation model, refining it unduly on the assumption that the value of the simulation will increase in proportion, is a trap that most of us fall into. It is one that always benefits from some circumspection.

Simple Models are Uselessly Inaccurate

…is a naive attitude towards simulation. Every simulation model is simple, or rather simplified, to some extent, because assumptions and abstractions have to be made. A solid object is assumed to have material properties that are constant throughout its volume. A flow boundary is assumed to have a constant pressure across its area. An object’s modelled dimensions are assumed to match those of the manufactured part. Simplification is inherent in abstracting reality into a simulation model; it always occurs. All models are simple to some extent. Too simple and they might indeed be uselessly inaccurate. Too complex and a) they will take an age to simulate and b) even then there’s no guarantee that they’re ‘correct’.

Getting a Precise Answer to the Wrong Model

As covered in Part 1 of this series, there are various ways in which a model might be inaccurate. Some are under your control, others are not. You’ll naturally gravitate towards minimising the errors that are under your control (e.g. mesh resolution and solution termination criteria), leaving you with the belief that a converged solution that satisfies conservation is ‘correct’. It might indeed be ‘precise’, but precision should not be conflated with ‘accuracy’. Although it’s easier to manage the sources of error that are under your control, an equivalent effort needs to be invested in ensuring that your model is a good enough representation of reality. Question your modelling assumptions with the same fervour with which you ensure your residuals reduce. Use physical measurements wherever you can. You likely do already, if indirectly, by assigning material properties from your supplied library, but where do you think those values come from, and what confidence do you have that they represent the materials your product is actually made from?

Good Enough is Quicker and Thus More Desirable

Time is indeed money, and reaching a ‘good enough’ solution quickly is worth its weight in gold. But what constitutes ‘good’? Sometimes it is accuracy-based; often it is time-based. Consider the following two scenarios:

- I have been asked to provide a physics-based simulation report on a late change to a product concept in time for tomorrow morning’s design review meeting.

- I have been tasked with shaving off 1.5% of our product’s primary KPI via the use of simulation.

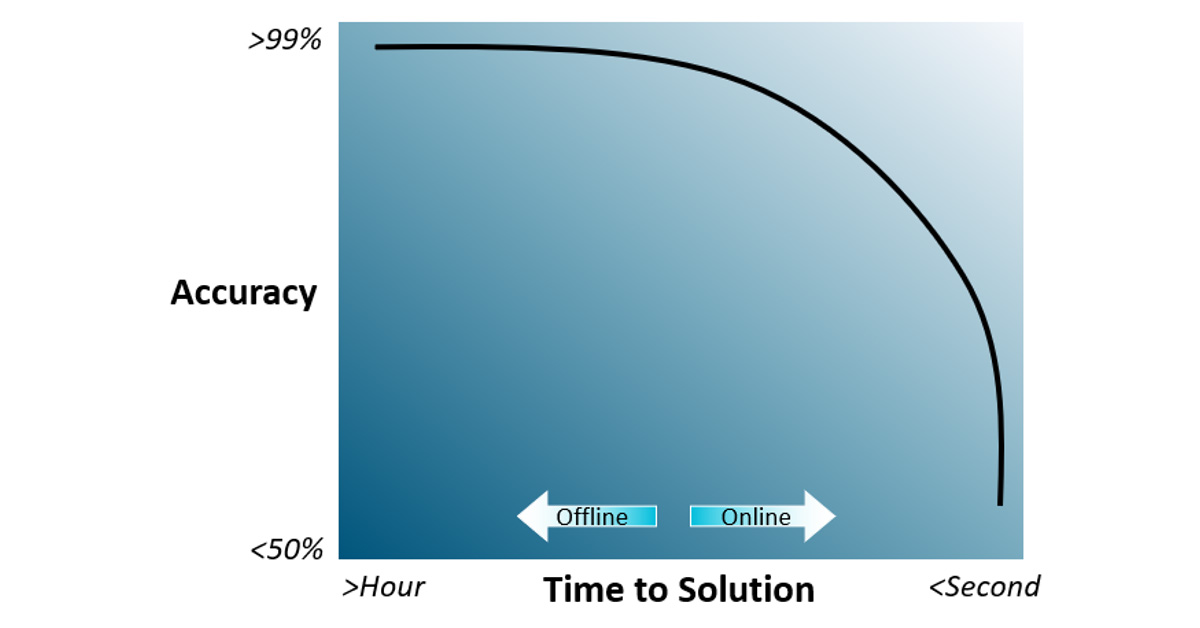

Both are valid industrial use cases, each with its own simulation requirements and constraints. In a perfect world you’d be able to achieve absolutely accurate simulations in real time. Our world, unfortunately, is far from perfect, so you are forced to balance the competing requirements of accuracy and time to solution.

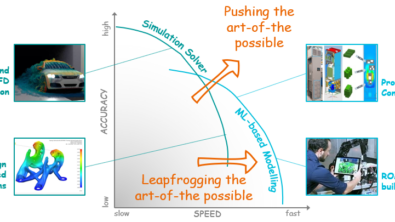

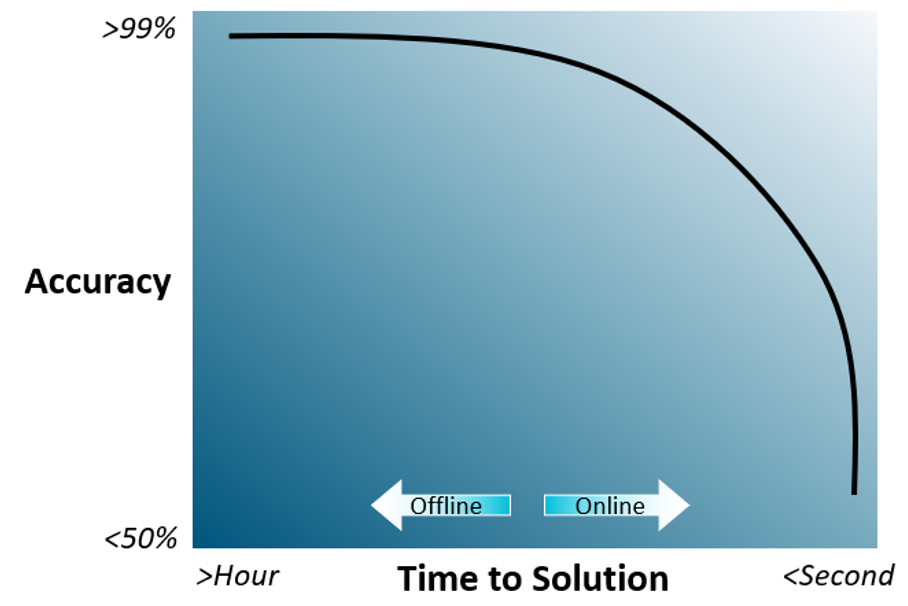

The chart above is intended as a qualitative representation of the Pareto front of simulation technologies available today. Markers could have been added along it, from classical 3D mesh-discretised solutions at the top left, through system simulation, to FLIP methods at the bottom right.

And yes, one might argue about the relative accuracy of each method, but suffice it to say that if you require accuracy, you must pay a time penalty. Productivity sees diminishing returns when you spend an inordinate amount of time chasing down the final 0.X% of your epistemic errors while your aleatoric errors completely swamp your results anyway. ‘Don’t sweat the small stuff’ in this instance doesn’t mean don’t worry, just that it’s better to appreciate where your simulation efforts might best be applied.

How Inaccurate Can You Be but Still Discover Useful Simulated Insights?

…is the million-dollar question. An academic approach to simulation often sacrifices every other non-functional aspect of modelling to achieve the highest possible accuracy; industry often begs to differ. Obtaining a good enough solution in minutes will often have more value than obtaining an accurate solution in days.

How inaccurate you can (quickly) be and still demonstrate utility is use-case dependent. Some simulation-enabled insight is better than none, especially when you have only 3 hours to prepare for tomorrow’s design review meeting.

If you have to determine which conceptual product architecture is preferable, then your simulation need only be accurate enough to resolve the relative performance of the concept designs, i.e. to tell which one is better than the others.
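To make ‘accurate enough to rank the concepts’ a little more concrete, here is a minimal Python sketch. It assumes, purely for illustration, that every concept’s KPI prediction carries the same relative uncertainty, and it treats the best concept as resolved only if its ± band does not overlap the runner-up’s. The function `best_concept` and the KPI values are hypothetical.

```python
def best_concept(kpis, rel_uncertainty, higher_is_better=True):
    """Pick the best concept and report whether its lead over the runner-up
    is larger than the combined uncertainty band of the two predictions.

    kpis: mapping of concept name -> predicted KPI value.
    rel_uncertainty: assumed relative uncertainty (e.g. 0.05 for +/-5%),
    applied identically to every prediction (a simplifying assumption).
    """
    if len(kpis) < 2:
        raise ValueError("need at least two concepts to compare")
    ranked = sorted(kpis.items(), key=lambda kv: kv[1], reverse=higher_is_better)
    (best, a), (_, b) = ranked[0], ranked[1]
    # The two +/- bands overlap unless the gap exceeds the sum of their half-widths.
    band = rel_uncertainty * (abs(a) + abs(b))
    return best, abs(a - b) > band


concepts = {"A": 102.0, "B": 96.0, "C": 90.0}  # illustrative KPI predictions
print(best_concept(concepts, rel_uncertainty=0.02))
print(best_concept(concepts, rel_uncertainty=0.05))
```

With ±2% on each prediction, concept A’s lead over B is resolvable; at ±5% the bands overlap, so you would need a more accurate (and slower) model before committing to a choice.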

If you do need to shave 1.5% off a KPI, then you’ll make the necessary adaptations to your model to ensure that its error is well below the design delta you are trying to achieve. And then you’ll have to suffer the resulting time-to-solution penalty.
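One common way to check whether discretisation error alone is well below a design delta is Roache’s Grid Convergence Index (GCI), computed from the same KPI on three systematically refined meshes. The minimal Python sketch below assumes a constant refinement ratio and monotonic convergence; the KPI values are illustrative, and the 25% margin used to define ‘well below’ is a hypothetical choice. GCI says nothing about the modelling assumptions themselves, only about mesh resolution.

```python
import math


def grid_convergence_index(f_fine, f_medium, f_coarse, r, safety_factor=1.25):
    """Roache's Grid Convergence Index on the finest grid.

    f_fine, f_medium, f_coarse: the same KPI on three systematically refined
    grids with a constant refinement ratio r > 1.
    Returns (observed order of accuracy p, GCI as a fraction of f_fine).
    """
    e21 = f_medium - f_fine    # change from medium to fine grid
    e32 = f_coarse - f_medium  # change from coarse to medium grid
    if e21 == 0 or e32 / e21 <= 0:
        # Stagnant or oscillatory KPI: the Richardson-based estimate breaks down.
        raise ValueError("KPI does not converge monotonically; GCI not applicable")
    p = math.log(e32 / e21) / math.log(r)
    if p <= 0:
        raise ValueError("KPI change does not shrink with refinement; GCI not applicable")
    rel_change = abs(e21 / f_fine)
    gci = safety_factor * rel_change / (r**p - 1.0)
    return p, gci


# Illustrative KPI values from coarse, medium and fine meshes (r = 2).
p, gci = grid_convergence_index(f_fine=1.000, f_medium=1.004, f_coarse=1.020, r=2.0)
design_delta = 0.015  # the 1.5% KPI improvement being sought
margin = 0.25         # hypothetical: 'well below' taken as under a quarter of the delta
print(f"observed order {p:.2f}, GCI {gci:.2%}")
print("mesh adequate" if gci < margin * design_delta else "refine further")
```

For these illustrative values the observed order is about 2 and the GCI about 0.17%, comfortably below the 1.5% delta. An oscillating KPI raises an error instead, since the estimate does not apply.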

‘Time to solution’ is a deliberately chosen term. Much attention is paid to ‘solution time’, the time the solver needs to reach its converged state, yet as much time, if not more, can be spent on model definition, meshing, post-processing and integrating any simulation-proposed design modifications back into the PLM workflow backbone.

Maybe ‘cost of solution’ would be a better metric, encompassing the prerequisite expertise a CAE user needs, the cost of the compute resources they require, the time taken to data-mine a solution and the effort required to integrate the resulting conclusions back into a project workflow.

So, ‘How Inaccurate Can You Be but Still Discover Useful Simulated Insights?’ It depends, but the point is that you should be as inaccurate as possible (but no more so). In doing so you’ll be more responsive. You’ll be able to simulate more design variants before committing to a concept. You’ll be able to achieve verification faster by focussing on a) critical KPIs and b) their sensitivity to your model settings and parameters.

Case in point: for your mesh sensitivity study, focus only on the grid independence of your KPIs. For your solution termination parameters, focus only on the iterative flatlining of your KPIs. You might be used to confirming convergence solely from the relative reduction in residuals, but don’t fall foul of accepting a precise solution as being ‘correct’. KPIs often converge faster than your globally integrated residuals.
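The ‘iterative flatlining’ check can be sketched in a few lines of Python. This is a minimal illustration, not a solver feature: the function `kpi_has_flatlined`, the 50-iteration window and the 0.1% relative tolerance are all hypothetical choices, and the KPI history is synthetic. It declares the KPI flat once its spread over the most recent window is small relative to its mean, independently of what the residuals are doing.

```python
import math


def kpi_has_flatlined(history, window=50, rel_tol=1e-3):
    """Return True once the KPI's spread over the last `window` iterations
    is within `rel_tol` of its mean magnitude (an iterative 'flatline' check)."""
    if len(history) < window:
        return False  # not enough iterations to judge yet
    recent = history[-window:]
    mean = sum(recent) / window
    # Fall back to an absolute tolerance if the KPI averages to zero.
    scale = abs(mean) if mean != 0 else 1.0
    return (max(recent) - min(recent)) / scale <= rel_tol


# Synthetic KPI history approaching 1.0 exponentially, as a stand-in for solver output.
kpi_history = [1.0 - 0.5 * math.exp(-i / 20) for i in range(400)]
first_flat = next(
    (n for n in range(1, len(kpi_history) + 1) if kpi_has_flatlined(kpi_history[:n])),
    None,
)
print(f"KPI flatlined after {first_flat} iterations")
```

In practice you would evaluate the check as the solver runs and pair it with a sanity check on the residuals, rather than replace them outright.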

There are CAE vendors who purport to provide real-time simulation capabilities. The fact that they don’t quantify the accuracy of such simulations is telling. Why wouldn’t you use a real-time simulation capability in lieu of more classical simulation approaches? The reason is accuracy, a precious commodity in the CAE field, or rather the relationship between accuracy and cost of solution. No vendor yet seems to have taken the bit between its teeth and committed to a quantified definition of what that trade-off entails.

Accuracy is better described in terms of uncertainty, especially when it comes to aleatoric errors. For a simulation tool to indicate the uncertainty of its predictions, the corresponding uncertainties of its input parameters must be defined. This is where Uncertainty Quantification (UQ) comes into play, though it doesn’t in most industrial simulations today, because it requires a) knowledge of the input uncertainties and b) the additional time needed by Monte Carlo sampling or a surrogate-model approach to propagate those uncertainties through the model. An application must have access to those uncertainties, and a legislated need to require them.
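A minimal sketch of the Monte Carlo route, in Python: input uncertainties are sampled and pushed through a model, giving an output distribution rather than a single number. The ‘model’ here is a hypothetical stand-in, the Euler–Bernoulli tip deflection of an end-loaded cantilever, δ = FL³/(3EI), with assumed normal scatter of 10% on load and 5% on Young’s modulus. In practice each sample would be a full simulation run, which is exactly the time penalty described above.

```python
import random
import statistics


def cantilever_tip_deflection(force_n, length_m, youngs_pa, second_moment_m4):
    """Euler-Bernoulli tip deflection of an end-loaded cantilever: F L^3 / (3 E I)."""
    return force_n * length_m**3 / (3.0 * youngs_pa * second_moment_m4)


def monte_carlo_uq(n_samples=20_000, seed=42):
    """Propagate assumed input scatter to the output by plain Monte Carlo sampling.

    All distributions and nominal values are hypothetical, for illustration only.
    Returns (mean, standard deviation, 95th percentile) of the tip deflection in metres.
    """
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    samples = []
    for _ in range(n_samples):
        force = rng.gauss(1_000.0, 0.10 * 1_000.0)  # assumed 10% scatter in load (N)
        youngs = rng.gauss(200e9, 0.05 * 200e9)     # assumed 5% scatter in modulus (Pa)
        samples.append(cantilever_tip_deflection(force, 1.0, youngs, 1e-6))
    samples.sort()
    mean = statistics.fmean(samples)
    std = statistics.stdev(samples)
    p95 = samples[int(0.95 * (n_samples - 1))]
    return mean, std, p95


mean, std, p95 = monte_carlo_uq()
print(f"tip deflection: mean {mean * 1e3:.3f} mm, std {std * 1e3:.3f} mm, "
      f"95th percentile {p95 * 1e3:.3f} mm")
```

Plain Monte Carlo is the simplest option but needs many samples; surrogate models trade some fidelity for far fewer expensive runs.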

It Takes Years

CAE simulation is not yet commoditised. Whether or not you subscribe to Malcolm Gladwell’s ‘10,000-hour’ rule for the practice time required to become expert in a discipline, a simulation engineer is valued for their ability to abstract a model and provide valuable simulation insights. The balance between accuracy and cost of solution is something a CAE engineer juggles daily. Beyond knowing which buttons to press in their favoured CAE tool, it is the CAE engineer who decides on the modelling abstractions for their application, which physics are relevant to it, and how best to represent those physical behaviours in the model.

Why can’t every designer who has to consider the physical behaviour of their product benefit from CAE simulation insight today? Is it a limitation of CAE tools, with their prerequisite of user experience and expertise? The total available market for physics-based simulation is at least an order of magnitude greater than that which is addressable today. Simulation need not, and should not, be the preserve of the CAE expert (with their 10,000 hours of training).

How might this change in the future? Large Language Models (LLMs) such as ChatGPT and Bard offer conversational AI interfaces that could be applied to automating model definition, meshing, solving and results extraction. Such automation, and the democratisation of simulation that would follow, is a question of when, not if. The Luddites rebelled against the automation of textile manufacture in England in the 19th century. Though I doubt any IT servers will be burned any time soon as simulation ultimately goes the same way, the opportunity for commercial CAE vendors to adopt novel AI/ML techniques is clear.

Physics-based simulation should be available to all who need it. It need not be the preserve of the expert, nor should it be.

Your Models are Probably Wrong

Today it’s relatively easy to get a CAE solution; it’s much more challenging to assure an accurate one. Although this blog series isn’t a playbook for achieving your required balance between accuracy and cost of solution, it is intended to instil a pragmatic attitude towards engineering simulation.

Yes, your models are probably wrong; in fact, I would say they all are to some extent. How wrong? Are they accurate enough? What can you do about it? Do you need to do anything about it? Do you have time to do anything about it?

It would be hubristic to assume CAE roadmaps are nearing their end. Quite the opposite. CAE is in its infancy, though already providing real value to industrial design practice. Despite the advances CAE tools have made in the last 50 years, especially since the late 1970s, the CAE industry has a long, long way to go. Advances in AI, ML, LLMs and compute hardware will enable a user experience unimaginable today, one that will put generative design into the hands of anyone who needs it.

Further Reading

- Engineering Simulation Part 1 – All Models are Wrong – “all models are wrong; the practical question is how wrong do they have to be to not be useful.”

- Engineering Simulation Part 2 – Productivity, Personas and Processes – “Make mistakes early and often, just don’t make the same mistake twice”