The Many Flavors of Equivalence Checking: Part 2, How SLEC Brings Automated, Exhaustive Formal Analysis to ECO/Bug Fix Verification

Perhaps the only Sequential Logic Equivalence Checking (SLEC) flow that is as common as the synthesis validation flow described in Part 1 of this series is the need to quickly verify whether an engineering change order (ECO) and/or a bug fix implements the desired correction in circuit behavior – and nothing more. Indeed, in the rush to “do something” while Management is breathing down your neck to get the project back on track, half the battle is to make sure a fix doesn’t introduce some unwanted side-effect.

Perhaps the only Sequential Logic Equivalence Checking (SLEC) flow that is as common as the synthesis validation flow described in Part 1 of this series is the need to quickly verify whether an engineering change order (ECO) and/or a bug fix implements the desired correction in circuit behavior – and nothing more. Indeed, in the rush to “do something” while Management is breathing down your neck to get the project back on track, half the battle is to make sure a fix doesn’t introduce some unwanted side-effect.

Granted, if a lot of bugs are cropping up late in the project, customers are typically willing to rerun their entire UVM testbench regression suite to make sure the all the fixes and new ECOs are effective and don’t break anything else. However, even for relatively small IPs – especially those with very complex logic – re-running the whole simulation regression suite can take days – even a full week or more. In the case of only a single bug fix or “small” ECO, the prospect of having to wait a full week for results can lead to the temptation of gambling that the bug/ECO is OK and tape out anyway. Fortunately, this is where an automated exhaustive formal approach can save the day.

Vive La Différence

In the logic synthesis validation case, by definition any differences detected between the “Specification” and “Implementation” code are clearly a bad thing. Conversely, imagine instances where it was ok if the Implementation was somehow different from the Specification – as long as the detected difference(s) were exactly what you wanted to see. For example, imagine that late in your project you needed to make a small modification to the DUT to add a new feature, or to fix a bug. Clearly the new Implementation will be deliberately different from the original, pre-ECO / buggy Specification code. Of course, to verify this new Implementation you could simply re-run your simulation testbench – which presumably would include a handful of new tests to cover the new functionality – and see if everything passes.

However, even if all the simulations were successful, the fact is that even the best constrained-random testbench cannot deliver an exhaustive analysis. Hence, you will be left wondering if the code change introduced some unwanted behavioral side-effect that you didn’t consider – i.e. in the process of fixing a bug, did you unmask another bug or introduce a whole new bug? This is where an exhaustive SLEC analysis comes to the rescue.

How SLEC Analysis Applies

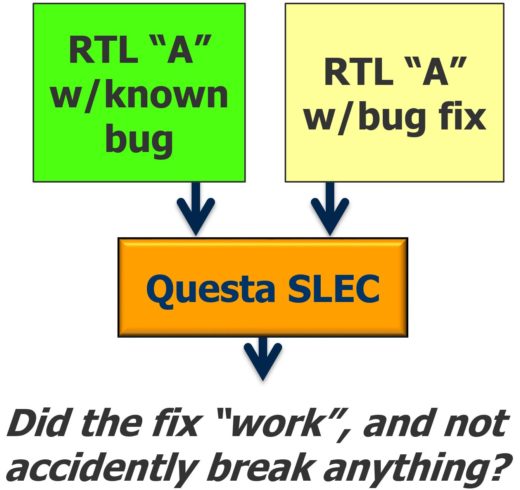

Figure 1: SLEC ECO/bug fix validation flow

As shown in Figure 1 above, the verification flow is really very straightforward: the SLEC tool is used to exhaustively compare the original RTL code with the known bug (or pre-ECO deficiency) with the new, edited / repaired code. As you would expect, the Questa SLEC app enables users to easily account for trivial mismatches of net or instance names and arbitrary levels of hierarchy before the analysis is launched; and all discrepancies are summarized in concise reports so users can quickly determine the severity of any issues that are identified. The automated, exhaustive formal algorithms will find all the behavioral differences appearing at the outputs of the two designs. Clearly, the results better be exactly what you were expecting, and nothing more.

Another significant advantage of this formal-based flow is that it typically runs much faster than the simulation approach – ranging anywhere from a few hours to a day or so depending on design size, etc. vs. re-running the whole simulation regression suite, which can take multiple days – even a full week or more.

In practice, customers still do both the SLEC and simulation flows in-tandem. They run the exhaustive Questa SLEC flow first, enabling quick iterations of the ECOs/fixes until they are satisfied the new DUT is properly fixed. Then for safety’s sake they re-run all their constrained-random tests anyway; but thanks to the formal-based SLEC analysis they are justifiably confident the full regression run will be a complete success.

But Wait, There’s More …

Now that we have a fast, effective, exhaustive RTL behavior comparison machine at our disposal, there are many other high-value verification challenges that can be completed in hours – even minutes – compared to other approaches. In Part 3, I’ll show how SLEC technology can be applied to another common challenge: low power design verification.

Until then, Vive La Différence!

Joe Hupcey III,

for the Questa Formal team

Reference Links:

Comments

Leave a Reply

You must be logged in to post a comment.

Regarding the statement:

“[Even] if all the simulations were successful, the fact is that even the best constrained-random testbench cannot deliver an exhaustive analysis. Hence, you will be left wondering if the code change introduced some unwanted behavioral side-effect that you didn’t consider.”

This doesn’t just apply to ECOs. It applies to fixes done pre-tapeout as well.

An interesting thought experiment is if one could use SLEC like this during the development phase to do “differential verification”.