AI Spectrum – Simplifying Simulation with AI Technology Part 2 – Summary

Recently, I continued my conversation on artificial intelligence (AI) and machine learning (ML) with Dr. Justin Hodges, an AI/ML Technical Specialist and Product Manager for Simcenter. Previously, we covered topics such as AI’s role in improving the user experience, reduced order models (ROMs), and automated part classification. You can find a summary of part 1 here. In this second part, Justin expanded on the many ways AI is reshaping simulation and testing, as well as the challenges of building user trust in AI solutions. Here are a few highlights of that talk, but I encourage you to check out the full podcast here, or read the transcript here.

Picking up where we left off in our previous discussion, Justin expanded on the predictive abilities of AI in simulation and design, especially in enlarging the design space. To start off, he proposed the example of designing an internal combustion engine – a complex process involving many teams working on different components while balancing competing requirements such as power output and fuel economy. Validating each individual step in the process requires complex simulation, and even more expensive simulations must be run once the different teams start merging their work. To address these challenges, the conventional approach uses low-fidelity models early in the design process to explore the major design decisions, then develops higher-fidelity models later in the process. This approach, however, leaves a wide portion of the design space unexplored, which is exactly where AI and ML can come into play. By training an AI model on data from previously completed simulations, or even real data from similar engine designs, it becomes possible for the AI to predict how a particular set of engine parameters will perform without having to run expensive simulations. This opens up a much wider design space exploration during the early stages of development, replacing traditional low-fidelity simulation with much faster-running, higher-fidelity AI predictions.
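To make the idea of predicting from previously completed simulations concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `expensive_simulation` is a made-up formula standing in for a real solver run, the two engine parameters (`bore_mm`, `compression_ratio`) are hypothetical, and the predictor is a simple inverse-distance-weighted nearest-neighbour average rather than whatever model Simcenter or Justin's team actually uses.

```python
import math


def expensive_simulation(bore_mm, compression_ratio):
    """Hypothetical stand-in for a costly solver run (made-up formula)."""
    return (0.5 * bore_mm + 4.0 * compression_ratio
            - 0.1 * (compression_ratio - 10.0) ** 2)


def build_surrogate(samples, k=3):
    """Build a predictor from ((bore_mm, compression_ratio), output) pairs.

    The predictor averages the k nearest stored designs, weighting each
    by the inverse of its distance to the query point.
    """
    def predict(bore_mm, compression_ratio):
        scored = []
        for (b, c), y in samples:
            # Divide bore by 10 so both inputs have a comparable scale
            # (an assumed, hand-picked normalization).
            d = math.hypot((bore_mm - b) / 10.0, compression_ratio - c)
            if d == 0.0:
                return y  # exact match with a stored simulation result
            scored.append((d, y))
        scored.sort(key=lambda pair: pair[0])
        nearest = scored[:k]
        total_weight = sum(1.0 / d for d, _ in nearest)
        return sum(y / d for d, y in nearest) / total_weight

    return predict


# "Previously completed simulations": a coarse grid of designs.
samples = [
    ((70.0 + 5.0 * i, 8.0 + 0.5 * j),
     expensive_simulation(70.0 + 5.0 * i, 8.0 + 0.5 * j))
    for i in range(7)
    for j in range(9)
]
surrogate = build_surrogate(samples)

# Query a design that was never simulated, without calling the solver.
estimate = surrogate(82.0, 10.2)
reference = expensive_simulation(82.0, 10.2)
print(f"surrogate estimate: {estimate:.2f}, solver value: {reference:.2f}")
```

The point of the sketch is the workflow, not the model: the costly function is called only to build the stored dataset, and every later query is answered from that data alone.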

Going beyond simulation and validation, Justin went on to discuss the benefits of AI and ML in the test space, particularly in spotting patterns too complex or nuanced for a human to identify. As products get smarter and more complex, so too does the data generated during their testing and later monitoring. Justin gave the example of the turbine on an airplane. This is a very complicated system composed of thousands of parts, and once you start looking at global metrics like temperature or pressure, it can be hard to understand the underlying mechanics driving them. Similarly, when analyzing the complex signals returned by analog sensors in an anomaly scenario, it can be difficult to tell whether the sensor hardware itself is breaking or whether something else needs to be fixed. By feeding this kind of data into an ML algorithm, it becomes possible to identify faults before they occur, sift through datasets far too large for a human to comprehend, and pick out patterns too subtle to register with even an expert.
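As a rough illustration of flagging unusual sensor readings automatically, the sketch below uses a trailing-window z-score. This is a simple statistical stand-in, not the ML approach discussed in the podcast, and the signal, the window size, and the threshold are all invented for the example.

```python
import math
import statistics


def flag_anomalies(readings, window=20, threshold=4.0):
    """Return indices of readings that deviate from the mean of the
    preceding `window` readings by more than `threshold` standard
    deviations of that same window."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mean = statistics.fmean(history)
        spread = statistics.pstdev(history)
        if spread > 0 and abs(readings[i] - mean) > threshold * spread:
            flagged.append(i)
    return flagged


# Synthetic temperature trace (made-up values): a slow oscillation
# around 600 with one injected spike at index 60.
readings = [600.0 + 2.0 * math.sin(i / 3.0) for i in range(100)]
readings[60] += 25.0

print(flag_anomalies(readings))
```

A rule like this only catches large, sudden deviations in a single channel. The subtle, multi-signal patterns described above are where a trained model would be expected to do better.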

Finally, we also discussed the issue of trust. When it comes to using AI systems in critical applications, potentially even life-or-death ones, it is vital that users, both experts and non-experts, are able to trust the answers those systems give. Building this level of trust is no easy task, however, and will require a variety of different tools. Justin highlighted a couple of key approaches to building trust in AI that he and his team use. Blind validation is a strong, industry-agnostic approach that segments the available data for training and testing, as is normally done, but also sets some aside for validation of the full AI-driven data pipeline after training is complete. Beyond that, there is also the option to add a level of transparency to the otherwise black box that is AI. The goal is a gray box, where not everything is known about how results are generated, but there are certain known, enforced boundaries on how the AI operates, guaranteeing a result within an expected range.
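Both ideas can be sketched in a few lines of Python. The code below is an assumption-laden illustration, not the team's actual tooling: `three_way_split` is a hypothetical helper that reserves a blind subset untouched during training and testing, and `bounded` is a hypothetical wrapper that clamps a model's output to a known range, which is only one simple way to enforce a boundary on a prediction.

```python
import random


def three_way_split(records, train_frac=0.7, test_frac=0.15, seed=0):
    """Shuffle records and split them into train, test, and blind sets.

    The blind set is whatever remains after the train and test
    fractions are taken; it is meant to stay unused until training
    is finished.
    """
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_test = int(len(shuffled) * test_frac)
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    blind = shuffled[n_train + n_test:]
    return train, test, blind


def bounded(predict, lower, upper):
    """Wrap a predictor so its output always lies in [lower, upper]."""
    def guarded(*args):
        return min(max(predict(*args), lower), upper)
    return guarded


# Hypothetical usage with placeholder record IDs and a toy model.
train, test, blind = three_way_split(range(100))
print(len(train), len(test), len(blind))


def raw_model(x):
    """Toy model that extrapolates without limit (illustrative only)."""
    return 3.0 * x


safe_model = bounded(raw_model, lower=0.0, upper=100.0)
print(safe_model(10.0), safe_model(1000.0), safe_model(-5.0))
```

Clamping guarantees the range but says nothing about whether the clamped value is right, so in practice a result that hits a bound would more likely be surfaced to the user as a warning than silently returned.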

The field of AI is constantly evolving, with new and exciting technology changing the way we do familiar tasks while also enabling new, never-before-seen approaches. It is exactly this type of innovation that drives the continued evolution of software packages like Simcenter, allowing them to become truly smart, assistive systems capable of aiding the next generation of designers in ways we could only dream of before.

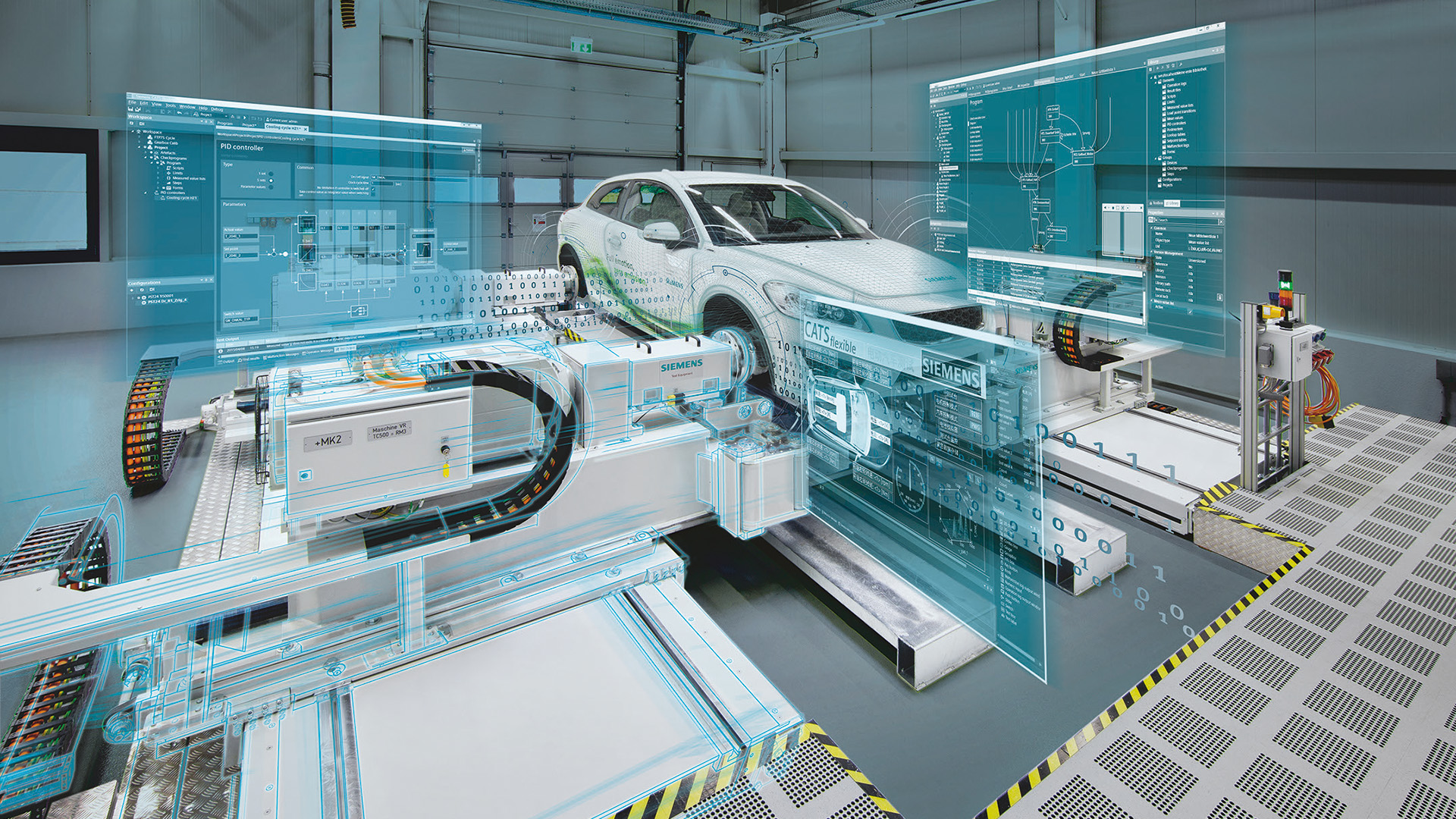

Siemens Digital Industries Software helps organizations of all sizes digitally transform using software, hardware and services from the Siemens Xcelerator business platform. Siemens’ software and the comprehensive digital twin enable companies to optimize their design, engineering and manufacturing processes to turn today’s ideas into the sustainable products of the future. From chips to entire systems, from product to process, across all industries. Siemens Digital Industries Software – Accelerating transformation.