Applying computer vision in automated factories

As a result of my interest in AI, I am naturally drawn to learning about the human brain and how the mind works. It is clear that we are far from figuring out how human intelligence works, but based on my reading, it seems that the best-understood process so far is vision. We know the science and mechanics of how an image travels through the eye and lights up places in the brain to detect and recognize an object that we see. How that recognition translates into action and how it maps to past memories is still a bit murky. But we at least know how the brain breaks images down for analysis in order to recognize them. That is why, as I confidently tell my colleagues, computer vision driven by machine learning is so successful. Computer vision and machine learning are key foundational technologies for automating factories today and tomorrow.

Siemens is well recognized as a leader in factory automation, and machine learning projects and tools are in abundance for the design, realization, and optimization of equipment that efficiently manufactures products around the world. We divide computer vision solutions into these common areas:

- Object recognition: the system analyzes and identifies one object at a time in order to perform a task. For example, a conveyor belt at a recycling factory brings an object into an observation zone. The system recognizes whether the object is paper, plastic, or metal and then pushes it into the appropriate bin.

- Object detection: the system analyzes and identifies multiple objects at a time. The machine learning model identifies all of the objects in view at once to trigger an action. For example, a factory system that assembles a kit of parts can confirm that all the required parts are within view before packaging them.

- Segmentation: the system divides the image into labeled regions (for example, shelves, objects, and open floor) and uses that set of information to make decisions. For example, a small autonomous robot within the factory uses computer vision to pick objects from shelves and then find a safe route to deposit the items in an assembly area.

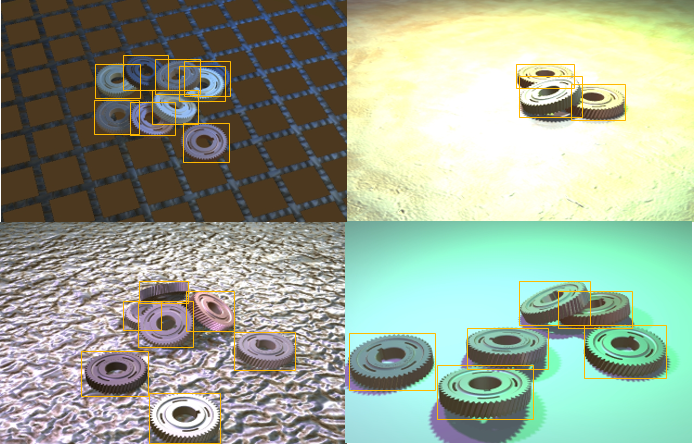
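The kit-assembly example under object detection can be sketched in a few lines. This is only an illustration, assuming a detection model that returns a list of `(label, confidence)` pairs; the function name `kit_is_complete`, the part names, and the 0.5 confidence threshold are all hypothetical and not taken from any Siemens tool.

```python
from collections import Counter


def kit_is_complete(detections, required, min_confidence=0.5):
    """Return True when every required part is seen often enough.

    detections: list of (label, confidence) pairs from a detector (assumed format).
    required: dict mapping part label to the count the kit needs.
    """
    # Count only detections the model is reasonably sure about.
    seen = Counter(
        label for label, confidence in detections if confidence >= min_confidence
    )
    # The kit is complete when each required part appears at least `count` times.
    return all(seen[label] >= count for label, count in required.items())


# Hypothetical kit: two bolts, one nut, one washer.
required_parts = {"bolt": 2, "nut": 1, "washer": 1}
frame = [("bolt", 0.91), ("bolt", 0.84), ("nut", 0.95), ("washer", 0.30)]
ready_to_package = kit_is_complete(frame, required_parts)
```

Here the washer is detected with low confidence, so `ready_to_package` is `False` and the system would hold the kit rather than package it.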

These three areas are in production today. But there is a fourth area, called 6D pose estimation, that is new and exciting. In this solution, the AI model estimates three dimensions for the rotation of the object and three dimensions for its position to determine the exact location and orientation of the object in relation to the camera. This technology is particularly interesting for solving autonomous robotic grasping problems. For example, a robot picks up various types of parts to transport them to another step on the production line. The problem is how to recognize the part and then apply the correct grasping pressure for each type of part without damaging it. The challenge here is that you need a lot of data to train the AI algorithms, but you can’t afford to stop the automated production line to collect images. And you need to gather thousands of images at different angles and under different lighting conditions to ensure that each part will be successfully recognized. This is where our ability to create synthetic data comes into play.
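To make the "6D" part concrete, here is a minimal sketch of how three rotation values and three position values can describe where an object sits relative to the camera. It assumes the rotation is expressed as roll, pitch, and yaw angles in radians and the position in meters; the `Pose6D` class and its method names are hypothetical, and real pose estimation models may use other representations, such as quaternions.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6D:
    # Position of the object's origin in the camera frame (meters, assumed unit).
    x: float
    y: float
    z: float
    # Rotation of the object relative to the camera (radians).
    roll: float
    pitch: float
    yaw: float

    def rotation_matrix(self):
        """Return the 3x3 matrix R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        return [
            [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr],
        ]

    def object_to_camera(self, point):
        """Map a point given in the object's own frame into the camera frame."""
        r = self.rotation_matrix()
        t = (self.x, self.y, self.z)
        # Rotate the point, then shift it by the object's position.
        return tuple(
            sum(r[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)
        )


# Hypothetical part: 0.5 m in front of the camera, turned 90 degrees about the z-axis.
pose = Pose6D(x=0.1, y=0.0, z=0.5, roll=0.0, pitch=0.0, yaw=math.pi / 2)
# A grasp point 2 cm along the part's own x-axis, expressed in camera coordinates.
grasp_in_camera = pose.object_to_camera((0.02, 0.0, 0.0))
```

With a pose like this, a grasp point defined once on the part's model can be converted into camera coordinates for whatever position and orientation the part happens to arrive in.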

I wanted to learn more about how we employ AI techniques within the tools that Siemens offers to design, realize and optimize factory automation equipment. So I sat down for a chat with Shahar Zuler, a data scientist and machine learning engineer at Siemens Digital Industries Software. She has a job that every engineer would envy: she spends her days connecting the worlds of advanced robotics simulation with AI. Listen to our conversation here.