Eleven top tips for energy efficient data center design and operation

There is a growing awareness of the carbon footprint of data centers. The energy costs associated with operating these facilities have prompted many energy-saving initiatives across many disciplines. Let’s consider data center HVAC design.

Cooling the IT equipment in a data center can often account for more than 40% of the total energy consumption. There has been considerable innovation on this problem, from evaporative cooling, such as the Munters Oasis™ Indirect Evaporative Cooling used for the Mentor Graphics data centers in Wilsonville and Shannon, to liquid cooling solutions from Iceotope.

Digital Twin during operation

Using a CFD-based thermal digital twin in the design of servers and data centers is now standard practice, but a digital twin can be just as critical during the operation of a data center, especially when planning any changes to the cooling system.

Like a building, a data center can be expected to have a life of at least 10-15 years. Over that time frame, the need for cooling innovation can be significant. To save energy, many data center operators undertake initiatives to refresh their operational data centers to take advantage of improving cooling technology. Without the ability to simulate and test the consequences of a change, some pitfalls can have catastrophic outcomes, especially in mission-critical data centers.

To show how important simulation is, let’s take a look at a couple of ideas that, on the face of it, look like “no-brainers” from an energy efficiency point of view.

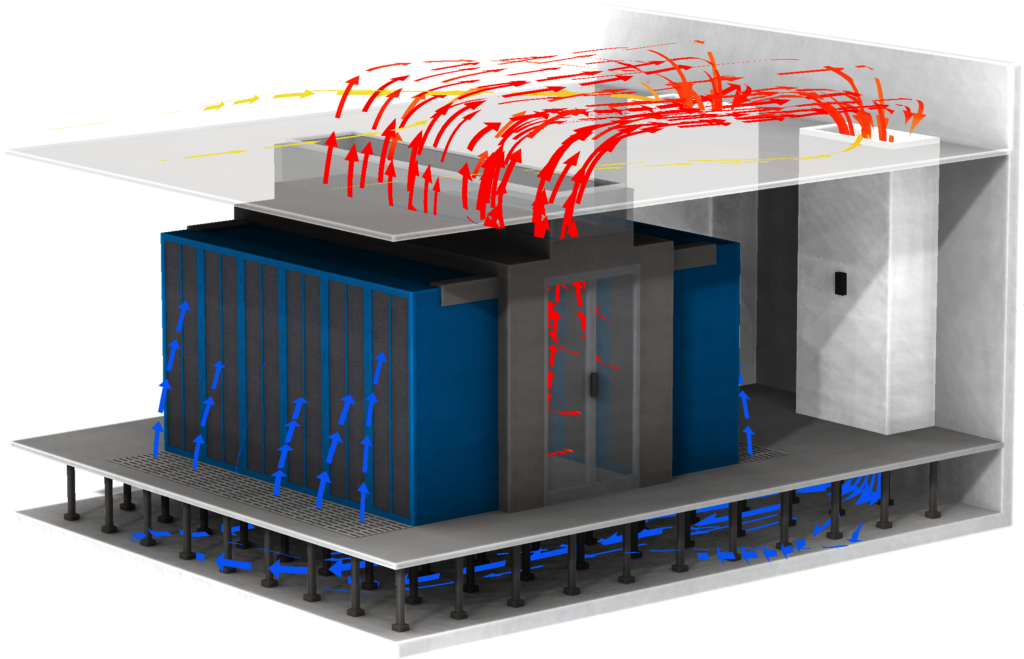

Aisle Containment

The first is the addition of aisle containment to a typical traditional data center design, in which cooling air is supplied under a raised floor.

Segregating the cool supply air from the hot exhaust air can deliver significant improvements. Supply air temperatures can be raised, delivering significant energy savings from the cooling plant. However, there is a hidden danger: while containment added to a cold aisle will prevent hot exhaust air being re-entrained into the racks in that aisle as intended, it will also prevent cool air being drawn in from the rest of the room. Why is this a problem?

In a traditional open data center, there is no guarantee that every cold aisle gets a perfect balance of cool air, with the volume of airflow delivered through the floor tiles matching the volume of airflow demanded by the racks in that aisle. When the aisles are open, this goes unnoticed and the equipment survives by scavenging cool air from the rest of the room. Once this airflow path is cut off, the equipment in any aisle with a flow rate deficit will be starved of cool air.
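The supply-versus-demand balance described above can be checked per aisle with a few lines of arithmetic. The following is a minimal sketch, not a substitute for a CFD model: it estimates rack airflow demand from the standard sensible heat relation (airflow = power / (density × specific heat × temperature rise)) and compares it with the tile supply. The class and function names, the air property values, and all numbers are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

# Approximate air properties near room conditions (assumed values).
AIR_DENSITY = 1.2          # kg/m^3
AIR_SPECIFIC_HEAT = 1005   # J/(kg*K)


@dataclass
class Aisle:
    """Hypothetical description of one cold aisle."""
    name: str
    tile_supply_m3s: float                 # airflow delivered through the floor tiles
    rack_power_kw: list = field(default_factory=list)  # IT load per rack
    delta_t_k: float = 12.0                # assumed air temperature rise across the racks


def rack_demand_m3s(power_kw: float, delta_t_k: float) -> float:
    """Airflow needed to remove power_kw of heat at the given temperature rise."""
    return power_kw * 1000.0 / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_k)


def airflow_deficit_m3s(aisle: Aisle) -> float:
    """Positive result: the racks demand more air than the tiles deliver."""
    demand = sum(rack_demand_m3s(p, aisle.delta_t_k) for p in aisle.rack_power_kw)
    return demand - aisle.tile_supply_m3s


if __name__ == "__main__":
    aisle = Aisle("A1", tile_supply_m3s=1.5, rack_power_kw=[12.06, 12.06], delta_t_k=10.0)
    deficit = airflow_deficit_m3s(aisle)
    status = "at risk once contained" if deficit > 0 else "balanced or oversupplied"
    print(f"{aisle.name}: deficit {deficit:.2f} m^3/s ({status})")
```

An aisle that shows a positive deficit here is one that relies on scavenged room air today, which is exactly the path containment removes. A bulk balance like this says nothing about where the air goes within the aisle, which is what the simulation is for.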

There are two potential consequences, both of which will seem counter-intuitive to a data center manager expecting to see energy consumption go down:

1) The servers overheat, causing the computer room air conditioning (CRAC) unit supply temperature to be lowered so the cooling system works harder, increasing energy consumption.

2) The fans in the servers increase their speed, to draw in the required air from under the floor, again increasing energy consumption.
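The second consequence can be costly because of how fan power scales with speed. As a rough illustration, the fan affinity laws state that, for a given fan on a fixed system, airflow scales linearly with speed while power scales with the cube of speed. The sketch below applies that idealized relation; the function name is an assumption, and real server fans will deviate from it because motor and drive efficiency vary with speed.

```python
def fan_power_ratio(speed_ratio: float) -> float:
    """Idealized fan affinity law: power scales with the cube of fan speed.

    speed_ratio is new speed divided by original speed. This ignores changes
    in motor efficiency and in the system resistance curve.
    """
    if speed_ratio < 0:
        raise ValueError("speed_ratio must be non-negative")
    return speed_ratio ** 3


if __name__ == "__main__":
    for ratio in (0.8, 1.0, 1.2):
        print(f"speed x{ratio:.1f} -> power x{fan_power_ratio(ratio):.3f}")
```

Under this idealization, a 20% increase in server fan speed raises fan power by roughly 73%, so even a modest airflow shortfall can erase a meaningful share of the expected saving.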

Fitting Electronically Commutated (EC) Fans

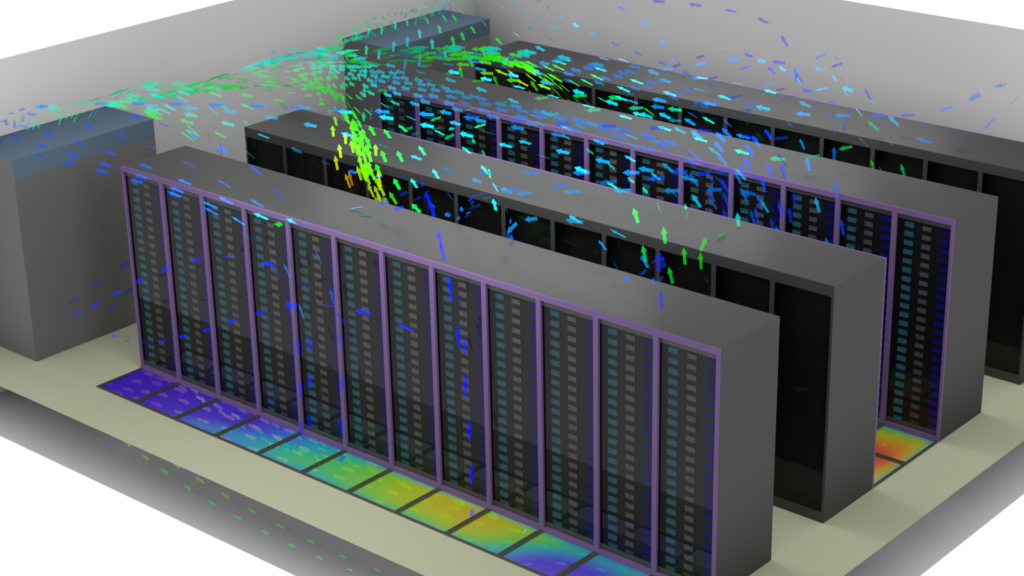

A second common example is to retrofit existing CRACs with more energy-efficient fans.

Typically, these CRACs are the responsibility of the facility manager rather than the IT team. They are situated around the perimeter of a data hall or in a service corridor outside the data hall. Traditionally, CRACs would have been installed with centrifugal blowers, but they can be retrofitted with more energy-efficient electronically commutated (EC) fans.

This can offer significant energy efficiency benefits, especially when coupled with variable speed control. If the purpose of the fan were simply to draw air over the heat exchanger inside the CRAC, there would seem to be no risk involved and so no need to simulate. However, the fans also deliver the cool air to the IT equipment in the data hall itself, and the airflow in the data center can be very complex.

Changing the type of fan can significantly change where the cool air is delivered within the data hall. This seemingly innocent change can starve racks that were previously well supplied of cool air, causing a thermal shutdown of the equipment in those racks. The new airflow situation might not be noticed until after all the CRAC fans have been retrofitted and the data center is stressed in a higher power mode at a later date, by which time it is too late.

To counter the overheating, the CRAC supply temperatures could end up lower than before the retrofit, increasing the energy consumed by the external chiller plant by more than the energy saved by replacing the fans!
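The trade-off can be sketched as a simple energy balance. This is only an illustration under stated assumptions: the function name is hypothetical, and the chiller penalty per kelvin of supply temperature reduction is a placeholder parameter rather than a measured figure. The real value depends on the chiller plant and should come from plant data or simulation.

```python
def net_saving_kw(
    fan_saving_kw: float,
    chiller_power_kw: float,
    supply_temp_drop_k: float,
    chiller_penalty_per_k: float = 0.02,  # placeholder assumption, not a measured value
) -> float:
    """Net power saved by a fan retrofit after any chiller penalty.

    A negative result means the retrofit increased total power draw.
    """
    chiller_increase_kw = chiller_power_kw * chiller_penalty_per_k * supply_temp_drop_k
    return fan_saving_kw - chiller_increase_kw


if __name__ == "__main__":
    # Hypothetical numbers chosen only to show the sign flip.
    net = net_saving_kw(fan_saving_kw=10.0, chiller_power_kw=200.0, supply_temp_drop_k=3.0)
    print(f"net saving: {net:.1f} kW")
```

With these illustrative inputs the chiller increase outweighs the fan saving, so the net result is negative. The point is that the fan saving has to be judged against the whole cooling chain, not in isolation.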

Lessons Learned

These are just two examples of HVAC changes to an operational data center. It is not unheard of for both retrofitting initiatives to be implemented at the same time, compounding the changes to the airflow within the data center and therefore to the cooling delivered to the racks.

In both situations, there was an understandable desire to take advances in data center HVAC design and apply them to an operational data center. The lesson learned, however, is that airflow is complex and the consequences can be counter-intuitive.

A digital twin can simulate, highlight and visualize potential problems to avoid unexpected consequences. Just as importantly, it provides a safe environment for engineers to modify, test and optimize the proposed changes prior to implementation.

Now is the time for a digital twin of the data center to enable HVAC engineers to turn hope into reality, optimize cooling, maximize the potential energy savings, and avoid very costly mistakes. To learn more, download our latest data center HVAC design guide.