Are You Wasting Your SRAM Memory Redundancy?

By Simon Favre, Mentor Graphics

Want to know if your SRAM redundant memory elements are actually useful, or a waste of time and money? Simon Favre explains how Calibre YieldAnalyzer critical area analysis can tell you…

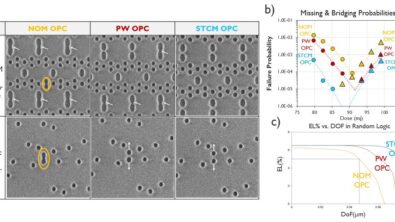

In modern SOC designs, embedded SRAM can account for a significant percentage of the chip area. The regularity of tiled structures (like an SRAM core) enhances their printability, but the high density of these structures makes them more vulnerable to random defects. Adding redundant structures helps protect against yield loss caused by these random defects during manufacturing. However, it’s a balancing act. If no redundancy is used on large embedded SRAMs, the resulting chip yield will be poor. If too much redundancy is applied, or if redundancy is applied to memories that are too small for redundancy to matter, then both silicon area and test time are wasted. In memory redundancy, both under-design and over-design can make a chip less profitable.
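To see why memory size matters, here is a minimal sketch using the classic Poisson yield model, Y = exp(-A_c * D0), where A_c is critical area and D0 is defect density. The choice of model and every number below are assumptions for illustration; the article does not say which yield model the tool uses.

```python
import math


def poisson_yield(critical_area_cm2: float, defect_density_per_cm2: float) -> float:
    """Probability of zero killer defects in a region, under the Poisson yield model
    Y = exp(-A_c * D0). This is an assumed model, used here only for illustration."""
    return math.exp(-critical_area_cm2 * defect_density_per_cm2)


# Hypothetical values: D0 = 0.1 defects/cm^2, and two memories whose critical
# areas differ by a factor of 50.
D0 = 0.1
for name, area in [("small SRAM", 0.01), ("large SRAM", 0.5)]:
    y = poisson_yield(area, D0)
    print(f"{name}: critical area {area} cm^2 -> unrepaired yield {y:.4f}")
```

With these made-up inputs, the small memory loses about 0.1% of yield while the large one loses about 5%, which is why redundancy pays off on large memories and may not on small ones.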

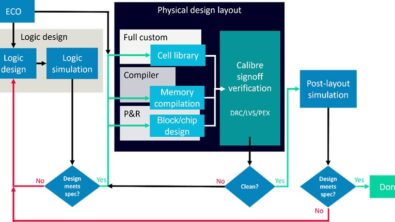

The Calibre® YieldAnalyzer tool includes functionality that uses foundry defect densities to determine the optimal memory redundancy for a given memory configuration. This analysis can be done before the chip layout is finalized: by evaluating a few different scenarios on the largest memories planned for the design, designers can determine the optimal configuration. The tool calculates the unrepaired and repaired yields for a memory, according to redundancy parameters given in a configuration file. To calculate repaired yield, the tool must know what repair resources are available for a particular memory configuration or instance. The Calibre® YieldAnalyzer tool supports calculation of both column and row redundancy, although row redundancy is used far less often because of its impact on access times, which are usually of paramount importance in SRAM design.
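The scenario comparison described above can be sketched as a sweep over spare-column counts. This is a hypothetical illustration, not the tool's method or configuration format: the per-unit failure probability, the unit count, and the "yield per unit area" figure of merit (which ignores test time and repair circuitry) are all assumptions. Spare units are treated as defect-free for simplicity.

```python
import math


def repaired_yield(units: int, spares: int, p_unit_fail: float) -> float:
    """P(at most `spares` of `units` independent units fail), a binomial tail sum.
    Assumes spare units themselves are defect-free (a simplification)."""
    q = 1.0 - p_unit_fail
    return sum(
        math.comb(units, k) * p_unit_fail**k * q ** (units - k)
        for k in range(min(spares, units) + 1)
    )


def sweep_spares(units, p_unit_fail, spare_area_fraction, max_spares):
    """Return (spares, repaired_yield, yield_per_relative_area) for each scenario.
    `spare_area_fraction` is a hypothetical area cost per spare, relative to the core."""
    results = []
    for s in range(max_spares + 1):
        y = repaired_yield(units, s, p_unit_fail)
        relative_area = 1.0 + s * spare_area_fraction
        results.append((s, y, y / relative_area))
    return results


# Hypothetical scenario: 64 column units, 0.2% failure probability per unit,
# and each spare column unit adds 1/64 of the core area.
results = sweep_spares(units=64, p_unit_fail=0.002, spare_area_fraction=1 / 64, max_spares=4)
for s, y, fom in results:
    print(f"spares={s}: repaired yield={y:.4f}, yield per relative area={fom:.4f}")

best = max(results, key=lambda r: r[2])
print("best spare count under this metric:", best[0])
```

Under these assumed inputs, one spare recovers most of the lost yield, while additional spares add area faster than they add yield, which mirrors the under-design/over-design trade-off described earlier.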

How does the Calibre YieldAnalyzer tool do this? Using its critical area analysis (CAA) capability, it evaluates potential faults by layer and defect type (e.g., M1.OPEN, M2.SHORT, single.VIA1). To calculate the repaired yield of an SRAM, the tool must know which of these layer and defect types apply to the memory core, and how many repair resources are available to effect the repairs. The tool identifies the memory core using the name of the bitcell, as supplied in the configuration file. It then divides the core into row and column “units,” based on the corresponding parameters in the configuration file. Given the redundancy resource specifications and the specific layer and defect types, the Calibre YieldAnalyzer tool calculates the repaired yield using the mathematical technique known as Bernoulli trials. In essence, it calculates the probability that all core units are good, then adds the probability that exactly one unit is bad, exactly two units are bad, and so on, up to the number of available repair resources. That sum is the repaired yield for the memory. For a memory that is instantiated several times in an SOC design, that repaired yield is raised to the power of the instance count to get the repaired yield for all instances.
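The Bernoulli-trials calculation above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the tool's implementation: the `DefectMechanism` record and its fields are hypothetical, the core's critical area is assumed to split evenly across column units, per-unit faults follow a Poisson model, only column redundancy is modeled, and mechanisms flagged as not applying to the core are simply ignored here.

```python
import math
from dataclasses import dataclass


@dataclass
class DefectMechanism:
    """One layer/defect type from a CAA run (hypothetical record, not a tool format)."""
    name: str                      # layer/defect label, e.g. "M1.OPEN"
    critical_area_cm2: float       # critical area over the whole memory core
    defect_density_per_cm2: float  # foundry defect density for this mechanism
    applies_to_core: bool          # True if counted against the memory core units


def unit_fail_probability(mechanisms, column_units):
    """Probability that one column unit holds at least one fault, assuming the core's
    critical area is split evenly across units and a Poisson defect model."""
    faults_per_unit = sum(
        m.critical_area_cm2 * m.defect_density_per_cm2
        for m in mechanisms
        if m.applies_to_core
    ) / column_units
    return -math.expm1(-faults_per_unit)  # equals 1 - exp(-faults_per_unit)


def repaired_yield(mechanisms, column_units, spare_columns, instances=1):
    """Bernoulli-trials repaired yield for one memory, raised to the instance count."""
    p = unit_fail_probability(mechanisms, column_units)
    q = 1.0 - p
    # P(all units good) + P(exactly 1 bad) + ... + P(exactly spare_columns bad)
    per_instance = sum(
        math.comb(column_units, k) * p**k * q ** (column_units - k)
        for k in range(min(spare_columns, column_units) + 1)
    )
    return per_instance**instances


# Hypothetical CAA results; the values and the non-core flag on VIA1 are illustrative only.
core_mechanisms = [
    DefectMechanism("M1.OPEN", 0.20, 0.05, applies_to_core=True),
    DefectMechanism("M2.SHORT", 0.10, 0.04, applies_to_core=True),
    DefectMechanism("single.VIA1", 0.05, 0.02, applies_to_core=False),
]
for spares in range(3):
    y = repaired_yield(core_mechanisms, column_units=64, spare_columns=spares, instances=8)
    print(f"spare columns={spares}: repaired yield for 8 instances={y:.4f}")
```

With zero spares the sum collapses to the unrepaired core yield, so comparing the `spare_columns=0` result with larger values shows directly how much each repair resource buys back.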

Want to learn more about the details? Check out the full explanation in our white paper, “Evaluating SRAM Memory Redundancy with Calibre Critical Area Analysis.”