It Don’t Mean a Thing … Without Methodology

Okay, so not nearly as catchy a title as the inspiration, but it’s something I’ve been thinking about as I prepare to attend DVCon next week. At the end of the day, it’s really methodology that allows us to effectively deploy (sometimes complex) technology. It’s methodology like UVM that allows us to speak a common language when it comes to creating testbench environments in SystemVerilog. It’s methodology that allows us to apply patterns to solve simple problems, so that we can focus our efforts on architecting unique solutions for unique problems. This will be my 10th year attending DVCon, and I’ve really come to consider DVCon “methodology central” when it comes to learning about the design and verification methodologies being developed and deployed by industry practitioners.

This year, Portable Stimulus continues to draw a great deal of attention when it comes to methodology development. At DVCon, my colleague Tom Fitzpatrick and I will each present a paper on Wednesday morning about methodology for adopting and productively deploying the new Accellera Portable Stimulus Standard.

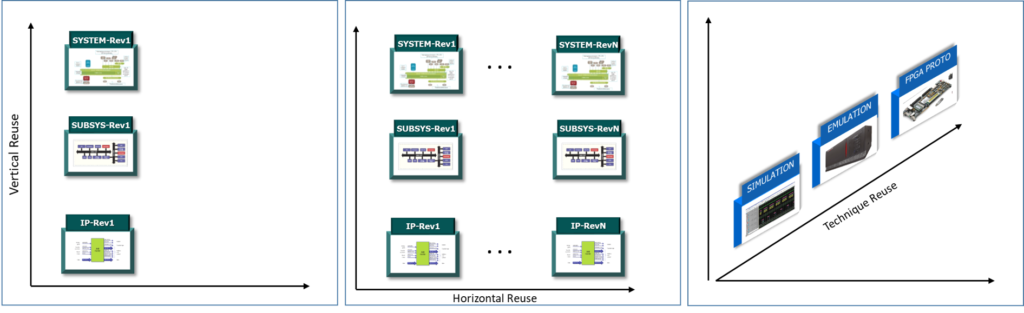

Portable stimulus is a very flexible technique with many possible applications. That flexibility is usually a good thing, but a very flexible technology can also be harder to deploy precisely because it has so many possible applications. My paper, “Unleashing Portable Stimulus Productivity with a PSS Reuse Strategy,” identifies and categorizes the various ways in which portable stimulus is deployed, and describes the costs and benefits of applying it for vertical, horizontal, and technique reuse, as illustrated by the diagram above. Each of these has unique cost/benefit tradeoffs that merit consideration as an organization plans to deploy portable stimulus. The goal, of course, is to form a portable stimulus deployment roadmap that fits your organization, moving from moderate-value/moderate-cost applications to high-value/high-cost applications.

Tom’s paper tackles the question of how to do results checking in PSS. The strategies that we use for results checking vary significantly across verification environments. In our block-level UVM verification environments, checking is typically very detailed and relies on a high degree of visibility and access to the design details. For SoC-integration tests, visibility into the design is typically severely limited from within the test, and tests tend to perform end-to-end results checks. When applying Portable Stimulus, a key question becomes how to architect a result-checking strategy that scales and is portable along with the test intent. Tom’s paper offers methodology to help portable stimulus adopters architect a portable result-checking strategy that is efficient, reusable, and fits nicely with more-detailed checking that is environment specific.

If you’re attending DVCon to get an update on the latest methodology developments for design and verification, I hope to see you there! Stop by the Mentor booth on Monday evening at 5pm to hear Tom Fitzpatrick share what Mentor is doing with portable stimulus. Attend the “Applications of the new Portable Stimulus Standard” session on Wednesday morning at 10am in the Monterey/Carmel room to learn about portable stimulus deployment methodology. And come find me at the Mentor booth. I’d love to talk with you about the design and verification methodologies that have piqued your interest!