AV technology repurposed to help the visually impaired

Major companies and startups alike are racing to design the autonomous vehicles (AVs) of tomorrow. At the heart of their designs are systems that combine sensors that connect to the real world with electronics and software, all under the control of AI. Siemens EDA products are front and center, allowing these companies to design ICs and systems faster, more cheaply, and in some cases with smaller footprints. PAVE360 provides the means to verify these systems virtually through millions of simulated driving conditions.

Because there is so much interest in this market, vendors iterate through designs to drive down component prices. For example, just a few years ago LiDAR sensors were large and cost thousands of dollars. LiDAR uses pulsed lasers to measure distances to objects, a key capability for autonomous vehicles. I found a 70 mm x 96 mm LiDAR sensor online for $17 that can be used for small robot projects, and a small-footprint LiDAR sensor that can be used in vehicles for less than $500. This drive for small, low-cost sensors is great news for autonomous vehicle companies, because cutting dollars out of the bill of materials is important when you want to address the consumer market in volume. But this trend is also a boon to makers and hobbyists. It allows them to repurpose the technology for applications vastly different from autonomous vehicle design. That is the case for some folks at Stanford University who want to reinvent the walking cane used by the visually impaired.
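Pulsed (time-of-flight) LiDAR works out distance from how long a laser pulse takes to reach an object and bounce back. Here is a minimal sketch of that arithmetic, assuming an idealized time-of-flight sensor (real units also deal with calibration, multiple returns, and noise); the function name is hypothetical. The one-way distance is half the speed of light times the round-trip time:

```python
# Speed of light in a vacuum, in meters per second (close enough for air at these ranges).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target from the round-trip time of a laser pulse.

    The pulse travels out and back, so the one-way distance is half of
    speed * time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0

# A pulse returning after 20 nanoseconds corresponds to an object about 3 m away.
print(round(tof_distance_m(20e-9), 3))  # prints 2.998
```

The factor of two is the step that is easiest to forget. The same arithmetic shows why LiDAR needs fast timing electronics: one nanosecond of timing error is roughly 15 cm of range error.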

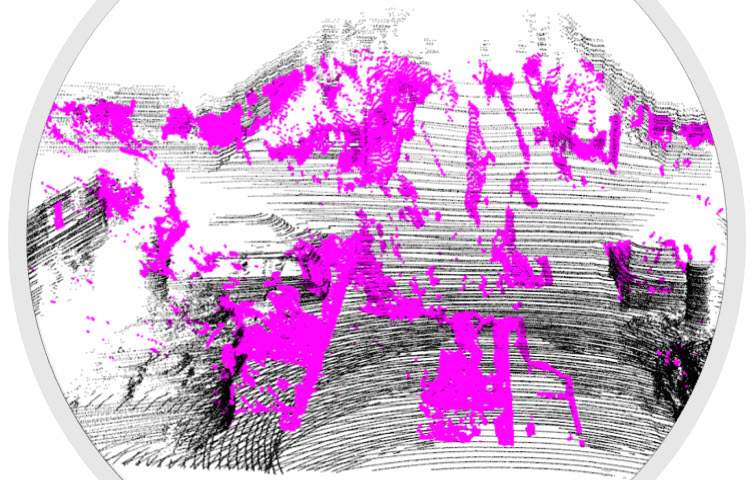

The team set out to create a cane that detects objects in the walking path and also helps the user navigate around them. At the center of the design is a LiDAR sensor that detects objects in the way and calculates their distance and location. The team also uses sensors such as accelerometers, gyroscopes, and GPS to monitor the position of the cane, the direction it is moving, and how fast it is moving toward an object.
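To illustrate how a range reading and the motion sensors might be combined, here is a minimal sketch, assuming the LiDAR reports a range and a bearing relative to the direction the cane is pointing, that the user walks in that same direction, and that walking speed comes from the motion sensors. The function names and the 2-second warning threshold are hypothetical and not taken from the Stanford design:

```python
import math

def obstacle_position(range_m: float, bearing_deg: float) -> tuple[float, float]:
    """Convert a LiDAR (range, bearing) reading to cane-relative x/y coordinates.

    x points straight ahead of the cane; y points to the user's left.
    A bearing of 0 degrees is straight ahead; positive bearings are to the left.
    """
    theta = math.radians(bearing_deg)
    return range_m * math.cos(theta), range_m * math.sin(theta)

def time_to_reach_s(range_m: float, bearing_deg: float, speed_m_per_s: float) -> float:
    """Estimate seconds until the user draws level with the obstacle.

    Only the forward component of the distance counts. Returns infinity if
    the user is stationary or the obstacle is not ahead.
    """
    forward_m, _ = obstacle_position(range_m, bearing_deg)
    if speed_m_per_s <= 0 or forward_m <= 0:
        return math.inf
    return forward_m / speed_m_per_s

# Hypothetical threshold: warn if the obstacle will be reached within 2 seconds.
WARN_THRESHOLD_S = 2.0

t = time_to_reach_s(range_m=2.0, bearing_deg=10.0, speed_m_per_s=1.2)
print(f"{t:.2f} s", "warn" if t < WARN_THRESHOLD_S else "ok")  # prints 1.64 s warn
```

Working in time rather than distance lets the same threshold behave sensibly whether the user is strolling or walking briskly.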

The cane steers itself to show the user how to avoid the object, using an AI technique called simultaneous localization and mapping (SLAM). SLAM seems to perform the impossible: it computationally constructs a map of an area it has never encountered before, in real time, while simultaneously keeping track of the cane's location within that map. SLAM feeds the sensor inputs to its algorithms and uses computational geometry to build up a topological map of the current environment.
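SLAM is too involved to show in full here, but its mapping half can be sketched. The snippet below is a minimal occupancy-grid update, assuming the cane's pose is already known; a real SLAM system estimates that pose at the same time, for example with a particle filter or graph optimization. It swaps in a standard log-odds metric grid rather than the topological map described above, and the grid resolution and log-odds weights are hypothetical values, not taken from the Stanford code:

```python
import math

CELL_SIZE_M = 0.1  # hypothetical grid resolution
L_OCC = 0.85       # hypothetical log-odds increment where the beam hit something
L_FREE = -0.4      # hypothetical log-odds decrement for cells the beam passed through

def update_grid(grid: dict[tuple[int, int], float],
                pose: tuple[float, float, float],
                range_m: float, bearing_rad: float) -> None:
    """Fold one LiDAR return into a log-odds occupancy grid, given a known pose.

    pose is (x, y, heading) in meters and radians in the map frame.
    """
    x0, y0, heading = pose
    angle = heading + bearing_rad
    hit_x = x0 + range_m * math.cos(angle)
    hit_y = y0 + range_m * math.sin(angle)
    hit_cell = (math.floor(hit_x / CELL_SIZE_M), math.floor(hit_y / CELL_SIZE_M))

    # Walk along the beam in half-cell steps, marking each traversed cell as free once.
    steps = int(range_m / (CELL_SIZE_M / 2))
    seen = set()
    for i in range(steps):
        d = i * CELL_SIZE_M / 2
        cell = (math.floor((x0 + d * math.cos(angle)) / CELL_SIZE_M),
                math.floor((y0 + d * math.sin(angle)) / CELL_SIZE_M))
        if cell != hit_cell and cell not in seen:
            seen.add(cell)
            grid[cell] = grid.get(cell, 0.0) + L_FREE

    # The cell containing the return is evidence of an obstacle.
    grid[hit_cell] = grid.get(hit_cell, 0.0) + L_OCC

def occupancy_probability(log_odds: float) -> float:
    """Convert a cell's log-odds value back to a probability of being occupied."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))

# One reading: the cane at the origin facing +x sees something 1 m straight ahead.
grid: dict[tuple[int, int], float] = {}
update_grid(grid, (0.0, 0.0, 0.0), 1.0, 0.0)
```

Storing log-odds rather than probabilities means each new reading is a simple addition, and cells nobody has observed stay at zero, which corresponds to a 50 percent "unknown" probability.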

The team worked with many visually impaired people to hone the performance and operation of the cane. These users stressed that they always want to be in control of the motorized wheel on the cane. The navigation system nudges the user in the correct direction, but the user can ignore this guidance and move in a different direction. One cool side benefit of all the onboard sensors is that the cane can also guide the user to a destination, just like the navigation app on your phone.

The best part of this story is that the Stanford team has released the design as open source, including the software, a list of materials, and build instructions. Makers and hobbyists can build the cane for around $400, far less than other high-tech canes on the market. The team wants these builders to tweak the design and experiment with improvements, then share those ideas with the Stanford team.

What improvements to this smart cane would be useful? How about adding an audio system that tells the user what obstacles the system “sees” and what it plans to do to avoid them? I imagine that the scariest part of walking while visually impaired is the crosswalk. The system could be specially trained to recognize crosswalks and provide audio feedback on the status of the Walk sign and, more importantly, on the location of cars relative to the walking path, including alerts about cars making illegal turns. This technology is already in play in autonomous vehicles, just from a different perspective.

The relentless drive to make faster, cheaper, and smaller electronic components means that Siemens EDA tools are in great demand. Autonomous vehicle and smartphone requirements have driven this trend, so anyone with the desire and background to repurpose the technology can create new products that don’t require big dollars or the backing of huge companies. This always leads to unexpected solutions, which I enjoy reporting back to you.