AI Foundations: Mapping neurons to a model

Understanding the building blocks of artificial intelligence.

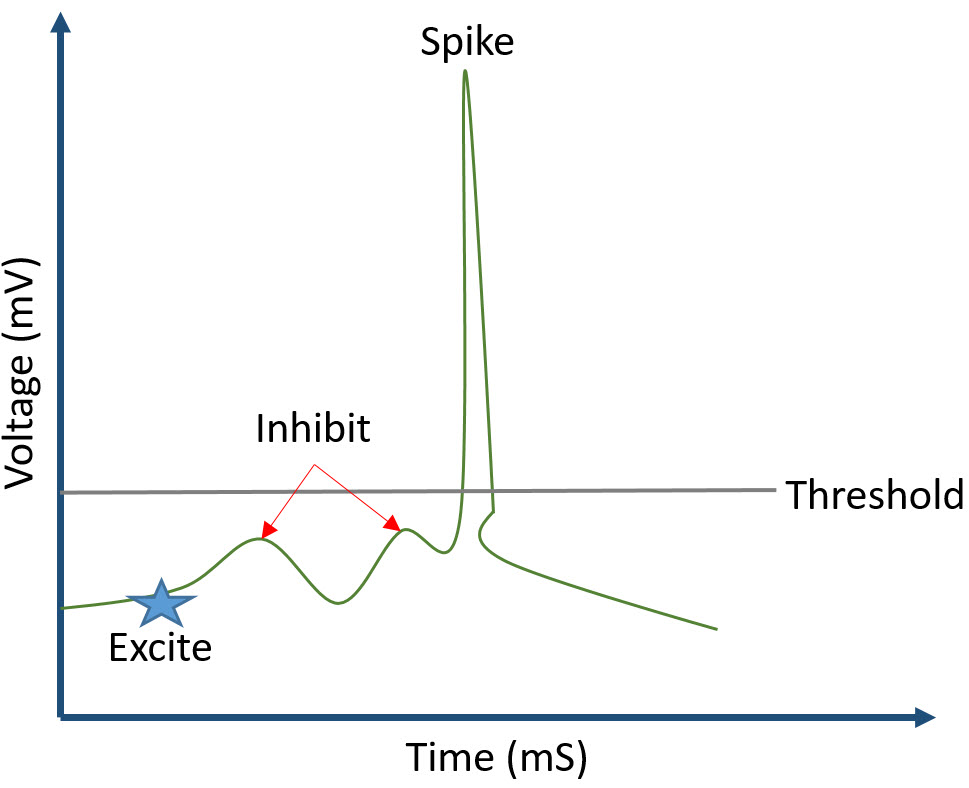

A basic construct that enables communication within the brain is the neuron. When the neuron is excited it produces an electrical spike that releases a neurotransmitter chemical that travels across a synapse to another neuron that can be connected to hundreds of other neurons.

A neuron receives inputs that constantly add (excite) and subtract (inhibit) based on what the brain is thinking about. When the total input reaches a threshold voltage, where the excitation outweighs the inhibition, an electrical spike occurs, triggering a synaptic release which allows communication to another set of neurons. Roughly speaking, a synapse is the junction between two neurons.

A particular neuron will favor inputs that predict its own output. Over time, this neuron specializes in finding a statistically frequent and unique input pattern. The neuron learns by moving along an adaption gradient defined by its synaptic learning rules. When neurons are connected in a network, that network is optimized for a particular learned item. Of course, this is a simplification, as neurons receive feedback within the network through chemicals that reinforce learning. Experiments have also shown that learning reinforcement also happens due to the phase relationship between the neuron spike and the local spiking oscillation of connected neurons.

Voltage, time, threshold voltage, oscillation, gradients, statistics, and phase relationships are all terms within the lexicon of engineering. A fact that bodes well for creating a neural model that can be implemented in hardware. But typically, a neural model is created using software first.

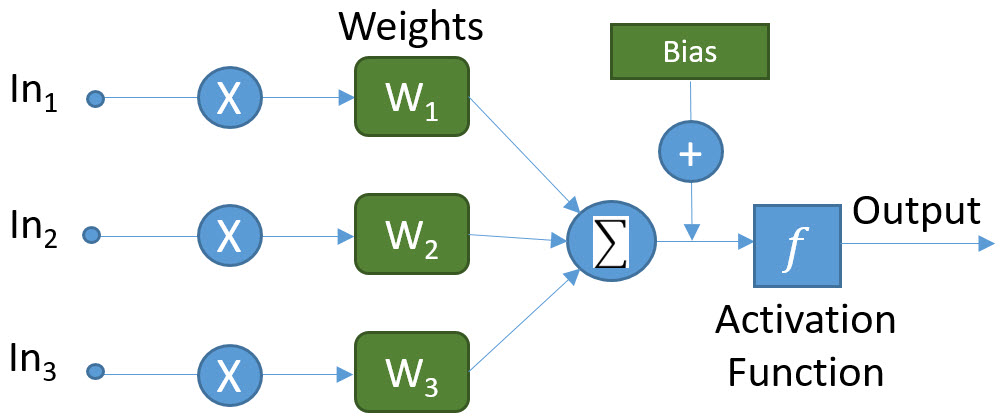

Here is a very simple model of a neuron. It consists of three inputs and mathematical functions. The weights (W) and the bias are the values that change in this model:

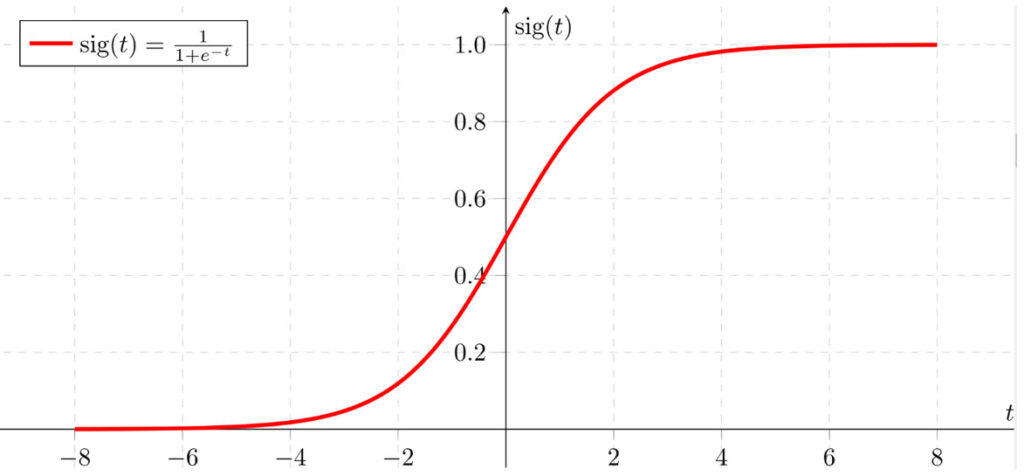

Inputs In1 – In3 are the inputs coming into the neuron from other neurons. These inputs are multiplied by their associated weights (W1 – W3). Each input has its own weight, which in the model is a constrained random number. Then, the inputs are summed and fed into an activation function. The bias is typically only used in small neural networks. It is typically set to 1 in order to shift the activation function, as it is added to the sum. For large networks, the bias makes a negligible difference and is typically eliminated. The summed value is an input to the activation function, which models the spike of the neuron if it reaches the threshold value. The implementation of the activation function varies, but typically some variation of a sigmoid function is used. The function converts the input to a number between 0 and 1. That output is fed to the input of the next connected neuron.

The sigmoid function suffers from the “vanishing gradient” problem; when the gradient at each end of the curve is almost a flat line. This means that when connected to a network, this model might get stuck because the comparison values are too close together. Over time, researchers have explored other activation functions, such as the rectified linear unit (ReLU), which is popular. This function creates a positive linear curve. Sophisticated models try different activation functions based on the types of data, the problems that they are trying to solve, or the impact on training time. Also, some functions are more compute intensive than others, depending on the input data.

Putting it all together, a simple software model of a neuron is:

- Set the bias value (typically to 1).

- Call a random function to set the values of the weights.

- Calculate the output value using the activation function.

A software model of a single neuron can be used a binary classifier because the neuron can “excite” with a value near 1 or “inhibit” with a value near 0. So, mapping the concept of a single brain neuron into a mathematical software model has limited value. Developing a model that can be used for an AI application, like object recognition, requires connecting together many neurons in a network and attending to details about handling errors and adjusting weights accordingly.