Episode 2: Understanding training vs inferencing and AI in industry

In Episode 2 of our AI podcast series (Episode 1 overview here), Ellie Burns and Mike Fingeroff got together to discuss the training and inferencing process for neural networks, how AI systems are deployed, and some of the industries that are benefiting from AI.

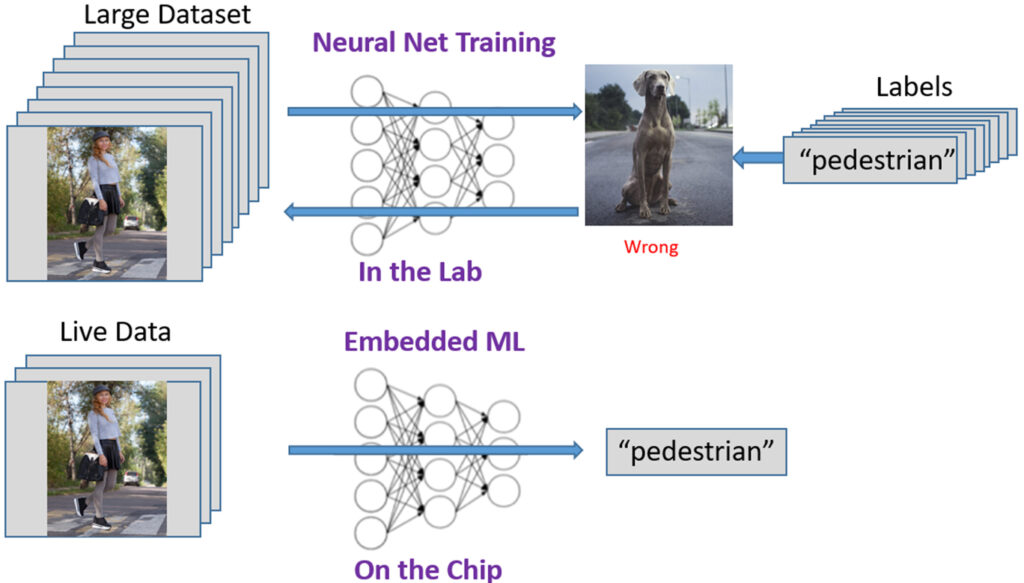

A very common AI application is computer vision. These systems must understand what they are “seeing” in order to make correct decisions. This understanding comes from deep learning, which is commonly implemented using convolutional neural networks (CNNs), loosely inspired by the way neurons in the brain interact to recognize images. CNNs are used in two distinct phases:

- Training: the network is typically trained on farms of floating-point graphics processing units (GPUs) that can spend weeks processing massive amounts of training data to tune the network's weights. This iterative process feeds the network many images of the same types of objects with different attributes, such as orientation, aspect ratio, and clarity, so that it can “learn” to detect and classify those objects.

- Inferencing: the CNN runs convolutions using the weights produced by training to detect and classify objects in any image. Inferencing can be implemented in fixed-point hardware deployed in computer vision systems.
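To make the two phases concrete, here is a toy Python sketch. It is not how production CNNs are built: it assumes a single 1-D, three-tap kernel (instead of stacks of 2-D filters), hypothetical training data generated from a known edge-detecting kernel, and plain gradient descent. The names `conv1d` and `train` are illustrative only. Training repeatedly adjusts the floating-point weights to reduce the error; inference then just runs the convolution with the frozen weights.

```python
def conv1d(signal, kernel):
    """'Valid' 1-D convolution, computed as cross-correlation like CNN layers do."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def train(samples, k=3, lr=0.01, epochs=500):
    """Training phase: iteratively tune the kernel weights in floating point
    with plain gradient descent on the summed squared error."""
    kernel = [0.0] * k
    for _ in range(epochs):
        grad = [0.0] * k
        for signal, target in samples:
            for i, (out, want) in enumerate(zip(conv1d(signal, kernel), target)):
                err = out - want
                for j in range(k):
                    grad[j] += 2 * err * signal[i + j]
        kernel = [w - lr * g for w, g in zip(kernel, grad)]
    return kernel


# Hypothetical training data: signals labeled by a "true" edge-detecting kernel.
true_kernel = [1.0, 0.0, -1.0]
signals = [[0, 1, 0, 0, 1, 1, 0, 1], [1, 0, 0, 1, 0, 1, 1, 0]]
samples = [(s, conv1d(s, true_kernel)) for s in signals]

kernel = train(samples)
print([round(w, 3) for w in kernel])  # → [1.0, 0.0, -1.0]

# Inference phase: the weights are now frozen and simply applied to new data.
print([round(y) for y in conv1d([0, 0, 1, 1, 1, 0, 0], kernel)])  # → [-1, -1, 0, 1, 1]
```

The expensive part is the loop inside `train`, which revisits every sample many times; inference is a single pass of multiply-accumulates per input, which is why it can be moved onto small, dedicated hardware.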

Because many computer vision systems are installed in cars, equipment, or consumer products that cannot depend on a lab full of computers, inferencing solutions are the focus of hardware designers.

The training process is computationally complex, requiring millions of computations as it runs over a set of images. After training completes, a set of weights is available for the network. Those weights then need to go onto a chip (for inferencing) so that the AI system can be deployed in a smartphone or some other small device. During this chip design process, engineers reduce the numerical precision of the neural network as far as possible while ensuring that it still gets the correct answer. This reduces the amount of computation and cuts the chip's power consumption. That matters because users can't be expected to keep their phone on a charger every time they want to use an AI-enabled feature.
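A minimal sketch of what reducing precision can look like, assuming a simple symmetric per-tensor quantization scheme. This is one common approach, not necessarily the one used in the chip design flows discussed in the episode, and the function names and numbers are hypothetical. Floating-point weights and inputs are mapped to small signed integers with one scale factor each, the multiply-accumulate runs entirely in integer arithmetic (as fixed-point hardware would), and the result is rescaled once at the end.

```python
def quantize(values, bits=8):
    """Symmetric per-tensor quantization: one shared scale maps floats to
    signed integers in [-(2**(bits-1) - 1), 2**(bits-1) - 1]."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    # Fall back to a scale of 1.0 for an all-zero tensor to avoid dividing by zero.
    scale = (max(abs(v) for v in values) / qmax) or 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def fixed_point_dot(weights, inputs, bits=8):
    """Multiply-accumulate in integers only, then rescale once at the end."""
    qw, sw = quantize(weights, bits)
    qx, sx = quantize(inputs, bits)
    acc = sum(a * b for a, b in zip(qw, qx))  # integer accumulator
    return acc * sw * sx


# Hypothetical trained weights and input activations.
weights = [0.42, -1.37, 0.05, 0.88]
inputs = [0.9, 0.1, -0.4, 0.6]

reference = sum(w * x for w, x in zip(weights, inputs))  # full floating point
for bits in (8, 6, 4, 3):
    approx = fixed_point_dot(weights, inputs, bits)
    print(f"{bits}-bit: {approx:.4f} (float: {reference:.4f})")
```

The loop shows the result drifting as the bit width shrinks. In a real design the check would be the whole network's accuracy on test data rather than a single dot product, and the goal is the narrowest width that still gives the correct answers.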

Today’s state of the art for vision systems is the CNN that operates on static images, processing one image at a time. For example, a vision system in a car looks for potential hazards to avoid. It can skip processing the parts of the image it does not care about, such as the sky, and may process only enough of the image frame to quickly decide whether there is a hazard in the road, so that it can sound an alarm or deploy the automatic braking system.
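A rough sketch of why skipping irrelevant parts of the frame pays off: the cost of a convolution layer scales with the number of pixels it processes. The per-layer count used here is height × width × input channels × output channels × k² multiply-accumulates (MACs) for a stride-1 layer whose output matches its input size. The frame size, layer shape, and the fixed 40% "sky" band are all hypothetical assumptions; a real system would decide which regions to skip in a more sophisticated way.

```python
def conv_macs(height, width, in_ch, out_ch, k):
    """Multiply-accumulates for one stride-1, 'same'-padded k x k conv layer."""
    return height * width * in_ch * out_ch * k * k


def crop_rows(frame, top, bottom):
    """Keep only rows [top, bottom) of a frame stored as a list of pixel rows."""
    return frame[top:bottom]


# Hypothetical 1280x720 frame and a first layer with 16 3x3 filters over RGB.
H, W, C_IN, C_OUT, K = 720, 1280, 3, 16, 3
SKY_ROWS = 288  # assumption: the top 40% of the frame is sky

frame = [[0] * W for _ in range(H)]  # placeholder pixel rows
road_frame = crop_rows(frame, SKY_ROWS, H)

full = conv_macs(H, W, C_IN, C_OUT, K)
road = conv_macs(len(road_frame), W, C_IN, C_OUT, K)
print(f"full frame: {full:,} MACs, road region only: {road:,} MACs "
      f"({road / full:.0%} of the work)")
```

The saving is proportional to the rows dropped, and it compounds across every layer that would otherwise have processed the discarded region.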

AI enables autonomous vehicles, but AI systems are being deployed in new industries every day. For example, the medical industry is doing exciting work on AI-enabled systems that comb through MRI data to detect disease quickly, often before any symptoms are reported. Voice recognition systems are finding their way into many products as well, helping people with language translation. In fact, companies with access to huge data sets are finding ways to use AI to make that data useful, which is why it is not surprising that a new AI application makes the news almost every day.

Listen to Episode 2 here.