It’s an exciting time—the rise of failure analysis for safety and yield

The last decade has been marked by a few significant changes in the semiconductor business…

First, the slowing of traditional device scaling associated with Moore’s law, which has ushered in a flurry of investment and technology innovation. Second, the growth of new markets for ICs that demand very high quality and new ways of ensuring device safety. Add to these the continued push for higher yield and faster time-to-market, and you start to see why it’s an exciting time in IC design and manufacturing.

In this changing environment, we see both the traditional fabless chip suppliers and the larger systems companies taking a more holistic view of their ICs. End customers have detailed system-level requirements and are concerned about reliability and safety through the life-cycle of the device, especially for ICs used in automotive applications.

Many of these functional safety requirements are new to the IC design houses, and they’ve been relying on their ecosystem partners, including software providers like Mentor, to help address quality, reliability, and safety issues. This shift has caused a change in how companies like Mentor are organized internally; for example, the Tessent group formed an entire team focused on safety, and we are connecting the dots between our DFT and yield tools and other functional safety groups both within Mentor and outside.

One technology whose role is changing in this environment is failure analysis. When manufacturing test detects faults, or when a defective device has been returned from the field, failure analysis engineers get to work. They use scan diagnosis, a technique that analyzes fail logs to locate the root cause of a failure. Given the size and complexity of today’s chips, this fail data can be “noisy.” Tessent addressed this problem by applying machine learning to the data (so-called “root-cause deconvolution”), which greatly improves the ability to locate and describe the cause of the fault.

The way companies use scan diagnosis is also changing. Scan diagnosis used to be applied in an ad hoc manner, either to field returns or only after a yield problem needed to be fixed. Today, it is applied as a systematic step during yield ramp and ongoing yield improvement, and even as a guide for modifying designs so that the new defects seen with advanced process technologies are easier to diagnose. Chipmakers can track defect paretos over time, anticipate future excursions, and plan their response to them.

Focus on faster diagnosis

Much of the Tessent technology for volume scan diagnosis focuses on time-to-market. Volume scan diagnosis is scan diagnosis run across wafer lots and batches, which helps identify even subtle yield excursions. On today’s large, advanced-node designs it was simply taking too long to run, because of the large amounts of data from failing test cycles.

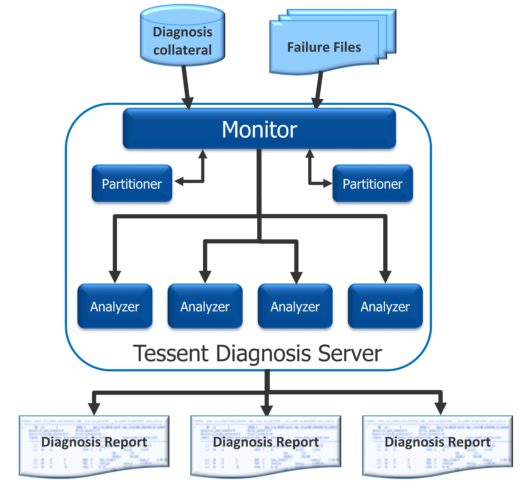

Diagnosis is performed on input failure log files from the ATE (automatic test equipment), along with a design netlist and scan patterns. The memory required to perform diagnosis is proportional to the size of the design netlist. Imagine trying to run diagnosis on a design that requires 100GB of RAM and about an hour for each diagnosis result. If there are 11 processors available on the grid but only one has enough RAM to handle that job, then all the diagnosis runs will happen sequentially on that one machine while the other 10 sit idle.

We recently released a new technology to maximize diagnosis throughput while performing ever more demanding scan diagnosis. The technology, built into Tessent Diagnosis, is called dynamic partitioning. It works by first analyzing a fail log and then creating a partition that contains only the parts of the design that are relevant to that fail log. This partition serves as a new, smaller netlist that is used to perform the diagnosis. Because each job works from a smaller netlist and only the relevant fail log data, more processes (an unlimited number, actually) can be run in parallel on a wider range of CPUs, delivering results faster and more efficiently than before.
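To make the idea of a fail-log-specific partition concrete, here is a minimal sketch. It does not show how Tessent Diagnosis builds its partitions, which the article does not describe. It assumes a simple stand-in: keep only the fan-in cone of the failing observation points, meaning every node whose value can reach a failing scan cell or output. The netlist representation (`FANIN`), the node names, and both functions are hypothetical.

```python
from collections import deque

def fanin_partition(fanin, failing_points):
    """Collect every node that can drive one of the failing observation points.

    fanin maps a node name to the list of nodes that drive it (assumed format).
    """
    keep = set()
    queue = deque(failing_points)
    while queue:
        node = queue.popleft()
        if node in keep:
            continue
        keep.add(node)
        # Walk backward through the drivers; primary inputs have no entry.
        queue.extend(fanin.get(node, ()))
    return keep

def sub_netlist(fanin, keep):
    """Build the smaller netlist that contains only the kept nodes."""
    return {node: list(drivers) for node, drivers in fanin.items() if node in keep}

# Toy netlist (hypothetical): two observation points, so1 and so2.
FANIN = {
    "so1": ["g1"],
    "so2": ["g3"],
    "g1": ["a", "g2"],
    "g2": ["b", "c"],
    "g3": ["c", "d"],
}

# Suppose the fail log reports failures only at so1.
keep = fanin_partition(FANIN, ["so1"])
small = sub_netlist(FANIN, keep)
print(sorted(small))  # gates and observation points left in the partition
```

In this toy case the logic that feeds only `so2` drops out of the partition, which is the effect that lets each diagnosis job fit on a smaller machine.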

The analysis and partitioning of fail logs, and the distribution of diagnosis processes, are performed by the Tessent Diagnosis Server, which works on your compute grid.

Dynamic partitioning addresses hardware resource limitations by greatly reducing the memory footprint of the diagnosis process performed by the analyzers. Typically, dynamic partitioning leads to a 5X reduction in memory and a runtime reduction of 50% per diagnosis report. The higher the volume of diagnosis you can complete, the higher the value of diagnosis.
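As a rough illustration, the typical figures can be applied to the earlier example of a 100GB, one-hour diagnosis job on an 11-machine grid. The sketch below is back-of-the-envelope arithmetic, not a measured result. The RAM sizes in `GRID_RAM_GB` are hypothetical, each machine is assumed to run one diagnosis at a time, and the cost of the partitioning step itself is ignored.

```python
def reports_per_hour(machine_ram_gb, job_ram_gb, hours_per_report):
    """Grid throughput if every machine with enough RAM runs one job at a time."""
    usable = [ram for ram in machine_ram_gb if ram >= job_ram_gb]
    return len(usable) / hours_per_report

# Hypothetical grid: one large-memory machine plus ten smaller ones.
GRID_RAM_GB = [128] + [32] * 10

# Without partitioning: 100GB per job, one hour per report.
baseline = reports_per_hour(GRID_RAM_GB, job_ram_gb=100, hours_per_report=1.0)

# With the typical gains quoted above: 5X less memory, 50% less runtime.
partitioned = reports_per_hour(
    GRID_RAM_GB, job_ram_gb=100 / 5, hours_per_report=1.0 * 0.5
)

print(baseline, partitioned)  # 1.0 22.0
```

Under these assumptions the grid goes from one report per hour to 22, because the smaller jobs fit on every machine and each one finishes sooner. Actual gains depend on the design, the fail logs, and the grid.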

Learn more from the following resources:

• Whitepaper: Improve volume scan diagnosis throughput 10x with dynamic partitioning

• On-demand seminar: Improve throughput by 10x using dynamic partitioning

• Live on-line seminar July 14 and July 15, 2020: Tessent DDYA—Improving the throughput of volume scan diagnosis by 10x using dynamic partitioning