From Punched Cards to ChatGPT: a brief history of Computer Aided Engineering

When we think about the history of Computer Aided Engineering, the focus is usually on the evolution of computer hardware and the development of the simulation tools themselves. We talk much less about the considerable changes in the way that we communicate with those tools.

In this blog, I will explore the history of how humans interact with computers by splitting the last 60 or so years of CAE into 3 eras, each of which has represented a step change in engineering productivity.

I do this because I think we’re at the dawn of a new era, one which will make the current era of computer communication feel as clunky as the first era. Let’s start with punched cards.

Epoch 1: Punched Cards (1890 to 1980)

In the beginning, data was physical. You could hold it in your hands. To change that data you needed to manipulate it by physically punching holes in it.

For about 90 years, punched cards were the principal mechanism by which engineers communicated with machines, even before those machines were actually computers.

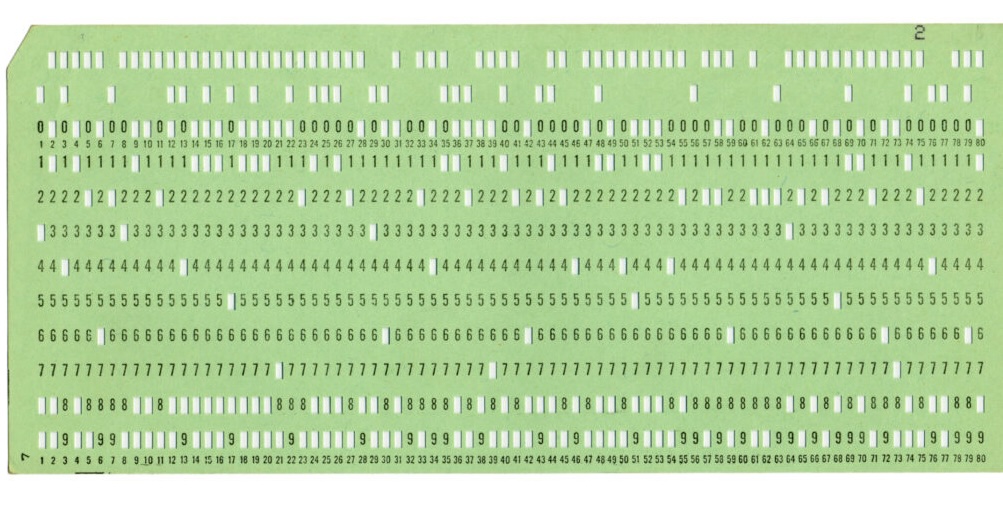

For the 1890 US Census, Herman Hollerith developed a “tabulating machine” that used punched cards to count the statistics, processing data at the incredible rate of 7000 cards a day. Hollerith’s Tabulating Machine Company eventually became International Business Machines (IBM), the company that standardised the punched card format as 80 columns and 12 rows with rectangular holes.
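As a rough illustration of what “80 columns, 12 rows” meant in practice, the Python sketch below encodes a line of text the way a keypunch would, one character per column. It assumes the later IBM 029 convention and covers only blanks, digits and upper-case letters (each letter is a “zone” punch in row 12, 11 or 0 plus a digit punch); punctuation, and the earlier codes on Hollerith’s own census cards, are left out. The function names are purely illustrative.

```python
# Row labels on an IBM 80-column card, top to bottom.
ROWS = ["12", "11", "0", "1", "2", "3", "4", "5", "6", "7", "8", "9"]
COLUMNS = 80
DIGITS = "0123456789"


def column_punches(ch: str) -> set:
    """Rows punched in one column for a blank, digit or letter (IBM 029 style)."""
    ch = ch.upper()
    if len(ch) != 1:
        raise ValueError("one column holds exactly one character")
    if ch == " ":
        return set()                                 # blank column: no holes
    if ch in DIGITS:
        return {ch}                                  # digit: one punch in its own row
    if "A" <= ch <= "I":
        return {"12", str(ord(ch) - ord("A") + 1)}   # zone 12 + rows 1 to 9
    if "J" <= ch <= "R":
        return {"11", str(ord(ch) - ord("J") + 1)}   # zone 11 + rows 1 to 9
    if "S" <= ch <= "Z":
        return {"0", str(ord(ch) - ord("S") + 2)}    # zone 0 + rows 2 to 9
    raise ValueError(f"{ch!r} is outside this sketch's character set")


def punch_card(line: str) -> list:
    """Encode one line of text as 80 columns, padding the rest with blanks."""
    if len(line) > COLUMNS:
        raise ValueError("a card holds at most 80 characters")
    return [column_punches(c) for c in line.ljust(COLUMNS)]


def render(card: list, width: int = 16) -> str:
    """Draw the first `width` columns: '#' is a hole, '.' is uncut card."""
    lines = []
    for row in ROWS:
        cells = "".join("#" if row in col else "." for col in card[:width])
        lines.append(f"{row:>2} {cells}")
    return "\n".join(lines)


print(render(punch_card("      CALL SOLVE")))
```

The six leading blanks in the example mimic fixed-form FORTRAN, where statements started in column 7. One card held one line of a program, which is why editing code meant re-punching and reshuffling cards.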

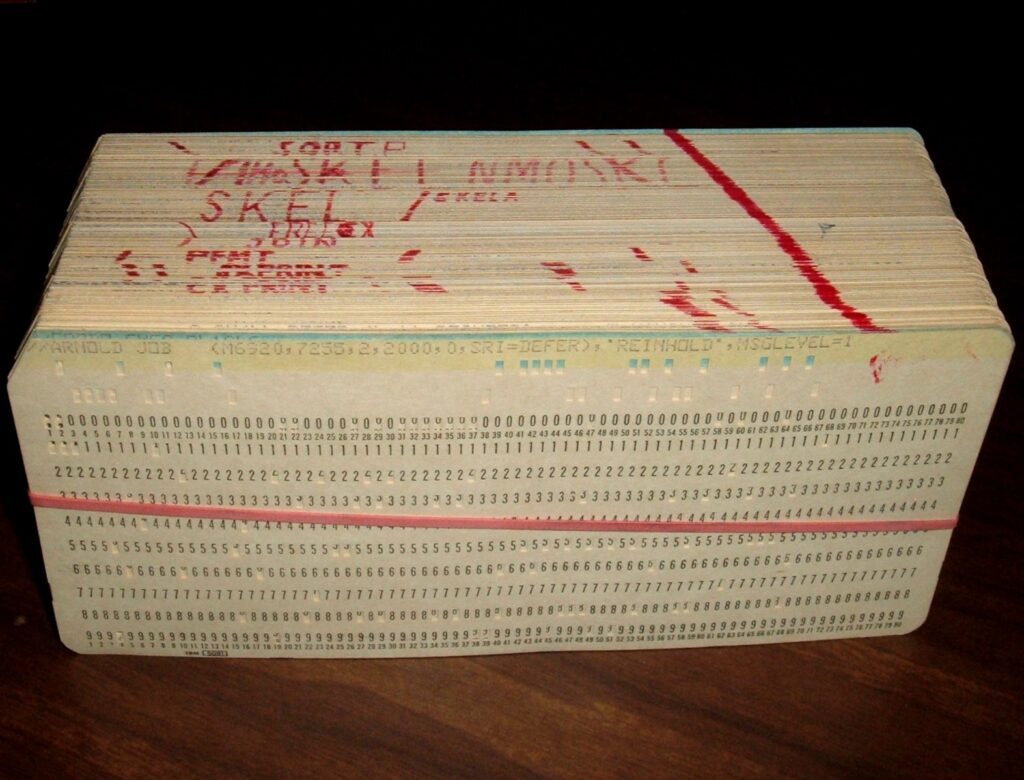

By 1937, IBM was printing about 5 to 10 million punch cards a day. Each punched card could encode 80 characters of alphanumeric text.

During the Second World War, the Enigma Research Section at Bletchley Park was getting through 2 million punch cards a week: enough that, stacked in a single pile, they would reach the height of the Eiffel Tower.

The whole arduous process is explained by Eduardo Grosclaude, who eloquently describes his experience programming computers at university in 1982:

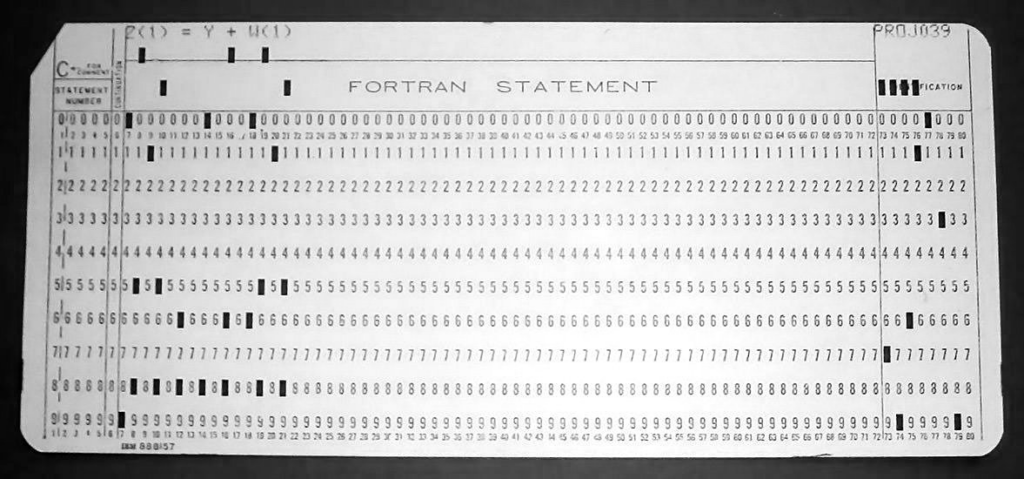

1. Write your Fortran program in a coding sheet.

2. Purchase an adequate amount of new punch cards.

3. Fold your coding sheets, join them to the pack of cards, wrap the whole with a rubber band and deposit them in a card rack.

4. At a secret moment, a secret little truck would take our work to a secret place in Facultad de Ingeniería where our cards would be punched by secret little workers.

5. At a secret later time, our code and punched pack of cards would be returned along with a very long list of compiler errors. Guaranteed.

6. You would interpret said list of compiler errors to the best of your knowledge, rewrite your program on a new coding sheet, rinse and repeat from step 2.

Coding (or typing) errors had to be corrected by re-punching the card that represented that line of text. Editing programs required reordering the cards, and removing or adding cards to represent new lines of code.

(Courtesy of ArnoldReinhold, Own work, CC BY-SA 3.0)

Until the advent of dot-matrix printers in the late 1960s, output data would also be on punched cards, introducing another layer of decoding. Even then, the results were usually entirely numerical, with no actual visualisation capability for the model.

The commercial CAE software industry was born in the punched card era. The first commercial releases of Nastran and ANSYS both occurred in 1971.

Epoch 2: The Keyboard (1980 to 1990)

From the mid-1980s onward, computer terminals with screens and keyboards quickly made punched cards obsolete, as engineers were able to type their FORTRAN (or NASTRAN or whatever) commands directly into the computer’s memory. This is my generation: I started university in 1990, and I’ve never seen a punch card in real life.

The keyboard era saw the birth of commercial CFD, with Phoenics released in 1981, Fluent in 1983 and STAR-CD in 1988, all of which were command-line driven.

For engineers of that era, communicating with a simulation tool typically meant learning a new language. In my case that language was proSTAR (“CSET NEWS VSET ANY”), the pre- and post-processor for the STAR-CD CFD code. At the same time, ANSYS introduced its “Parametric Design Language” (which still exists today) to replace punched card operation.

For my generation of engineers (in the early 1990s), a computer mouse was mainly a way to navigate between windows, not a direct input device for the CAE tool.

Computer operating systems began moving from command-line (DOS-like) interfaces to windows-based graphical interfaces in the mid-1980s: the Apple Macintosh was released in 1984, and Microsoft released Windows 1.0 a year later. However, CAE tools were slow to follow, and command lines persisted into the mid-1990s and beyond.

Epoch 3: The Mouse (1990 to now)

It shouldn’t take too long to describe the mouse era, because we are now living at the end of it. Most engineers today spend more time with their fingers on their mouse than on a keyboard.

Most of the early point-and-click CAE tools simply placed a graphical user interface on top of the existing command line structure, with each click of the mouse generating a stream of commands in the background.

Freed from having to remember complicated command syntax, engineers would instead have to learn to navigate a (sometimes equally complex) menu system. These systems were easier to pick up for a new user of software, but not necessarily any more efficient to use for experienced users who had “learned the language”.

I managed to style out my own career as a keyboard man in the mouse era until 2006 and the release of STAR-CCM+, a new-generation CFD code built from scratch. Tools of this type were designed to be driven with a mouse, and were built around a workflow intended to guide the engineer smoothly through the simulation process. Realising that the game was up, I reluctantly moved my right hand from the keyboard to the mouse.

Although each of these tools is individually marketed as being “easy to use”, almost every CAE tool has a completely different interface. Being an expert in one tool doesn’t really equip you to use any of the others, and the whole experience of trying to learn a new CAE interface can be soul-destroying. Many of us build entire careers on becoming “jockeys” of a given tool (in my case STAR-CD and Simcenter STAR-CCM+) while ignoring all of the others.

When it comes to automation, it’s worth noting that most of us still revert to the keyboard, recording, writing or editing macros that can perform tasks in batch.

Epoch 4: The Large Language Model (starting soon)

Although the mouse era didn’t end on November 30th 2022, the day OpenAI released ChatGPT, something happened that I think will permanently change the way humans (and in particular engineers) interact with computers.

I’m not suggesting that the computer mouse (or indeed the keyboard) is going to disappear from the engineering world like the punch card did, but I am very confident that Large Language Models will change the way that engineers interact with simulation tools forever.

Instead of having to interact with our tools by learning their language (as in the keyboard era) or learning to navigate their user interfaces (the mouse era), in the near future we’ll simply be able to ask a Large Language Model to set up some, or all, of the simulation for us.

And, unlike in the mouse, keyboard and punched card eras, we won’t simply be issuing commands. Instead, we’ll be having a two-way conversation with the simulation tool, building understanding and solving problems together.

I’ve already experienced this in my own engineering career. As a FORTRAN-era programmer, I’ve generally struggled to learn more modern languages like Python. Since the advent of ChatGPT, I don’t have to worry about that anymore. I simply have a conversation with the LLM about what I want to achieve: it writes the code for me, we debug it together, and it helps me process whatever data the code pumps out.

There are obstacles ahead, of course. At the moment, LLMs tend to work best when their output has a slightly random element to it: repeating the same prompt several times will produce different, but still intelligent, answers. That variability sits uneasily with the repeatability that engineering simulation demands. There are also unresolved questions about intellectual property and data privacy.
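The “slightly random element” largely comes from how an LLM chooses each next word (token): the model scores every candidate, the scores are turned into probabilities, and one candidate is sampled. A “temperature” setting controls how adventurous that sampling is, and a temperature of zero (greedy decoding) always takes the top-scoring candidate, which is one way a tool integration might trade variety for repeatability. The Python sketch below is a toy illustration of that idea only; the tokens and scores are invented, and real LLM services implement sampling internally with more refinements.

```python
import math
import random

# Invented candidate next tokens and model scores (logits), for illustration only.
TOKENS = ["mesh", "solver", "boundary", "material"]
LOGITS = [2.0, 1.5, 1.0, 0.2]


def softmax(logits, temperature):
    """Turn scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)                        # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def next_token(temperature, rng):
    """Pick the next token: greedy at temperature 0, otherwise sample."""
    if temperature == 0:
        best = max(range(len(LOGITS)), key=lambda i: LOGITS[i])
        return TOKENS[best]                   # always the same answer
    probs = softmax(LOGITS, temperature)
    return rng.choices(TOKENS, weights=probs, k=1)[0]


rng = random.Random()                         # unseeded: varies from run to run
print("T=0.8:", [next_token(0.8, rng) for _ in range(5)])
print("T=0  :", [next_token(0, rng) for _ in range(5)])
```

Run it a few times: the T=0.8 line will usually differ between runs, while the T=0 line is always five copies of “mesh”.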

It will take some time for the major CAE vendors to resolve these problems and write interfaces between their tools and the most prominent LLM system(s) (which might or might not include ChatGPT), but that work is already underway.

The “Hi Simcenter” proof of concept demonstrates how you can set up a complicated Simcenter Amesim simulation using a simple ChatGPT prompt.

For what it’s worth, I think that LLMs will change the way we interact with everything, not just CAE tools. We’ve seen the start of this with Alexa and Siri, where it’s generally quicker and easier to ask a virtual assistant to perform a simple command (“set an alarm”, “play a podcast”) than to do it yourself. The difference is that LLMs are better able to understand more complicated commands in context, and the conversation can be two-way, which reduces the chance of misunderstanding.

What I want to be able to do is ask my phone “can you book me a taxi at 11pm to take me home from the pub, and let me know if surge pricing pushes the cost of that journey above £40 please” rather than have to click through multiple options on an app.

If you enjoyed reading this blog, then you’ll love the latest episode of the Engineer Innovation Podcast, in which I interview Kai Liu, the man who is responsible for implementing LLM technology in future generations of Simcenter products.