Reasons and Use Cases for Integrating Large Language Models into Polarion

Integrating large language models (LLMs) opens up many ways to improve workflows within Polarion. This blog post explains why integrating an LLM into Polarion makes sense and explores the applications it could enable.

Why Integrate an LLM into Polarion?

There are at least three good reasons to integrate an LLM into Polarion:

- Database: Polarion serves as a data backbone, which gives it a crucial role in Retrieval-Augmented Generation (RAG). RAG retrieves relevant entries from an existing knowledge base and adds them to the prompt, so that the LLM can generate more accurate answers. When integrating an LLM, much of the effort goes into engineering the RAG pipeline effectively.

- Control: The integration provides transparency by clearly identifying AI-generated content and enforcing review workflows to ensure proper oversight.

- Usability: By enabling AI usage with a simple button inside Polarion, users can generate and link content instantly. This streamlines the process, eliminating the need to copy and paste from external applications like ChatGPT.
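To make the RAG idea from the first point more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Polarion's API or the actual integration: `WorkItem`, `retrieve`, and `build_prompt` are hypothetical names, the sample work items are invented, and simple keyword overlap stands in for the embedding-based search a real pipeline would more likely use.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class WorkItem:
    """Hypothetical stand-in for a work item stored in the data backbone."""
    item_id: str
    title: str
    description: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, items: list[WorkItem], top_k: int = 2) -> list[WorkItem]:
    """Return up to top_k items that share the most tokens with the query."""
    query_tokens = tokenize(query)
    scored = []
    for item in items:
        overlap = len(query_tokens & tokenize(f"{item.title} {item.description}"))
        if overlap > 0:
            scored.append((overlap, item))
    # Sort by overlap only, highest first; items with no overlap were skipped.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in scored[:top_k]]


def build_prompt(query: str, context: list[WorkItem]) -> str:
    """Put the retrieved items in front of the user request."""
    lines = ["Use only the following project data as context:"]
    for item in context:
        lines.append(f"- [{item.item_id}] {item.title}: {item.description}")
    lines.append("")
    lines.append(f"Request: {query}")
    return "\n".join(lines)


# Invented sample data, for illustration only.
items = [
    WorkItem("REQ-1", "Brake response time",
             "The brake system shall respond within 100 ms."),
    WorkItem("REQ-2", "Door lock",
             "The doors shall lock automatically above 10 km/h."),
    WorkItem("TC-7", "Brake latency test",
             "Measure the brake response latency on the test bench."),
]

query = "Generate a test case for brake response time"
hits = retrieve(query, items)
prompt = build_prompt(query, hits)
print(prompt)  # this text would then be sent to the LLM
```

The design point is that the LLM only sees the work items selected by the retrieval step, which is why the quality of that step accounts for so much of the engineering effort.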

Use Cases for Integrating an LLM into Polarion

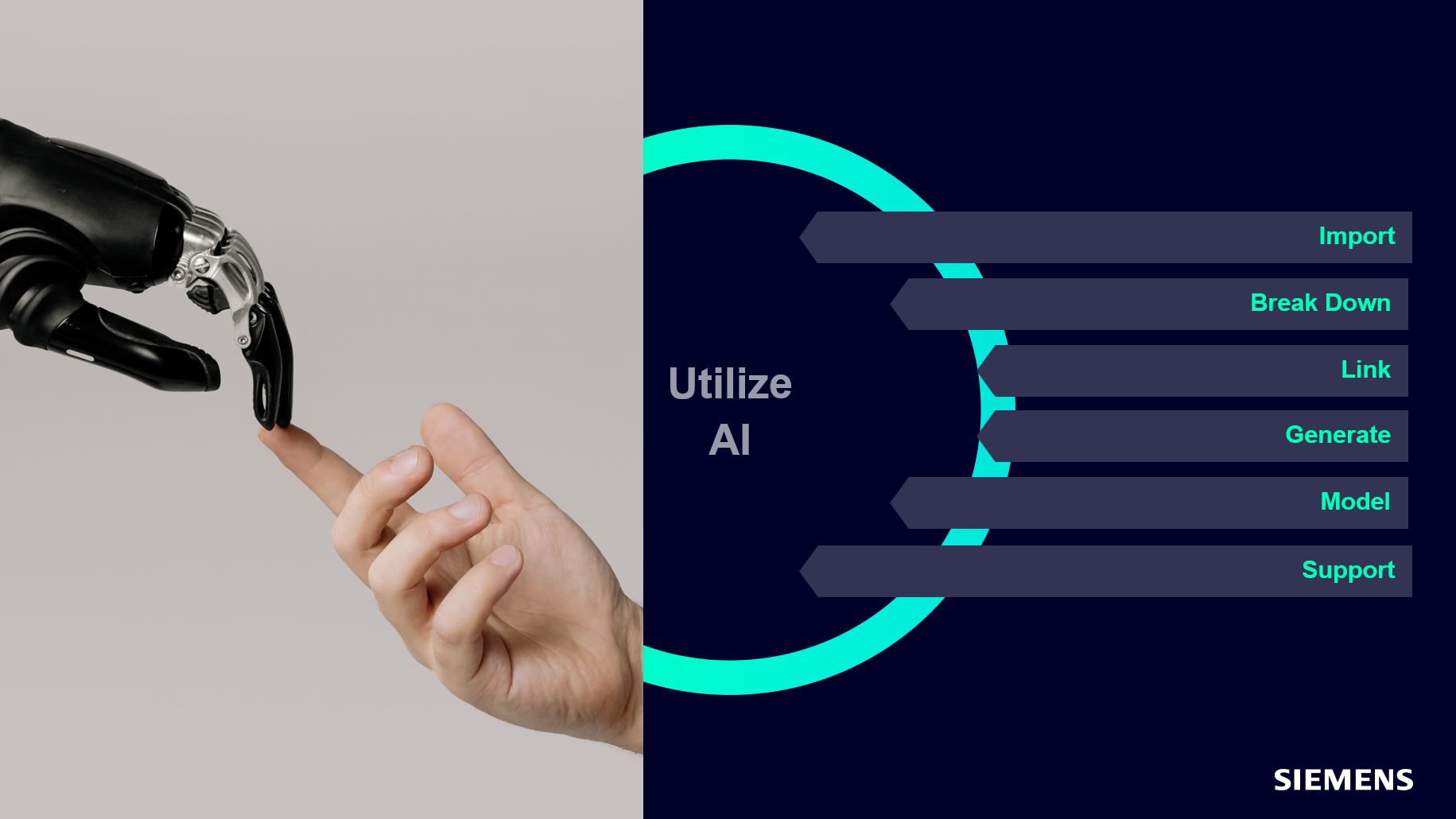

When discussing use cases for LLMs, the possibilities are almost limitless. Each customer has a unique way of working, so the potential applications vary greatly from user to user. Through discussions with our customers, we have identified over 100 use cases and grouped them into these six general categories:

- Import / Classification: Unstructured data (e.g., PDFs) can be parsed by AI and imported as structured data into Polarion. Additionally, classifications such as severity levels or requirement types can be handled by AI.

- Break-Down: Existing data, like requirements, can be refined or broken down into user stories and tasks with the help of AI.

- Link: AI can assist in finding existing or regulatory requirements, test cases, risks, etc., from past projects and linking them to newly created or imported requirements.

- Generate: AI can create new data, such as test cases or risks, providing a starting point for further refinement.

- Model: AI can model requirements visually, making it easier to communicate them to stakeholders.

- Support: AI can check the quality of user input, for example through requirements quality checks, or assist users through a chatbot that explains how business processes work within Polarion.

Find out more on our website about Polarion and AI: