Achieving ethical AI for industrial applications

Embedding ethics into artificial intelligence (AI) models is a difficult task requiring teamwork and thought. To explain the difficulty, I am going to use a variation of the “trolley problem” posed by philosopher Philippa Foot in 1967: what should an autonomous car do if the only way it can avoid hitting a pedestrian is to swerve in a way that puts its passenger at risk? Should it prioritize the life of the passenger, or the life of the pedestrian? What if there are two pedestrians? What if the passenger is elderly, meaning she/he has fewer life-years to potentially lose, and the pedestrian is young? What if the situation is reversed? What is the ethically correct decision in every situation? Ethicists have been debating the trolley problem for decades with no clear answer. So, should we expect individual experts who work in the field of AI, be they software developers, data scientists or automation engineers, to figure it out on their own? The problem is made more difficult because unethical outcomes could result from malicious goals, lack of control/oversight, or unintended harms such as bias. No matter how complicated it is to deal with ethics in AI, we at Siemens, together with our partners, are committed to doing our best to find solutions because that is what we do!

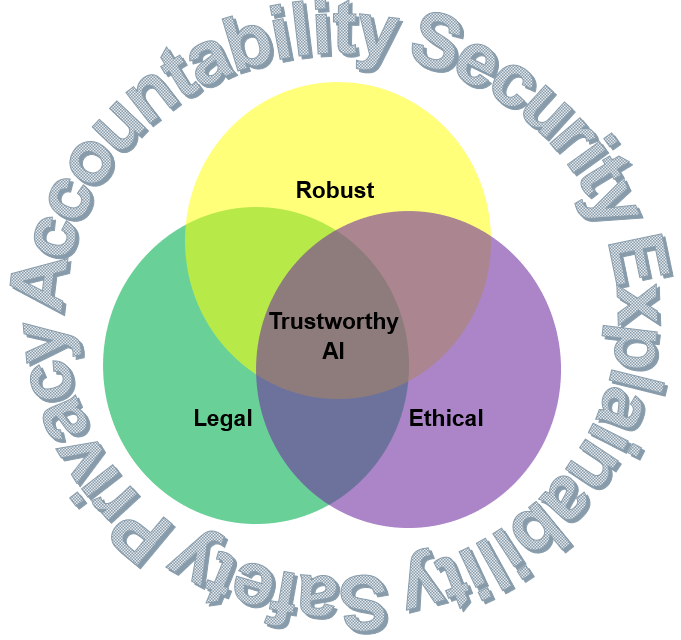

In a recent blog post titled “Trust, the basis of everything in AI,” I explained that trustworthy algorithms must be robust, legal, and ethical, as illustrated in Figure 1. This post focuses on the ethical component of AI models.

AI has the potential to help us address critical issues such as safety, industrial efficiency, disease, hunger, climate change, and poverty, and to improve overall quality of life. This potential can be fully realized only if we take action now to address the issue of trust and its most complex component: ethics. The AI-based application scenarios Siemens has been working on already cover a wide range: smart assistants, document classification, physics simulation, design and validation of autonomous systems, generative design, human-robot interaction, test effort reduction, quality prediction and inspection, and predictive maintenance. However, as our customers move from application-centric to data-centric environments, there is a greater demand for broader use of AI across all Siemens products, which requires more attention to ethics and to avoiding reputational damage.

With upcoming data-centric ecosystems, we must be extremely careful to place ethical boundaries on AI, provide oversight, and realize that more data is not necessarily better. Bias from poor-quality data, or from correlating data sets (which is easy to do when a smart system has lots of data at its disposal), could result in illegal and unethical behavior. In addition, conflicts of interest could arise between competing departments within an organization that uses AI. Currently, we have regulations such as the General Data Protection Regulation (GDPR) in Europe and ethical guidelines such as the ones at Google that separate the advertisement department from the search organization. Siemens will draft and commit to guidelines that match our own principles and products, and enhance our reputation.

From a technology development point of view, we must design AI systems that preserve both human life and human rights. For example, any AI model we develop should provide strong privacy assurances; otherwise, people won’t trust the system, we won’t receive accurate data, and the resulting model won’t be as effective. For privacy-preserving AI, we are researching methods such as Federated Learning (bringing the model to the data rather than the data to the model), Secure Multiparty Computation (several parties jointly computing a result without any of them revealing its private inputs), and Differential Privacy (adding calibrated random noise to data or query results), but more work is needed to ensure appropriate rights are being preserved.
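To make the "random noise" idea behind Differential Privacy concrete, here is a minimal sketch of the standard Laplace mechanism applied to an average. It is an illustration only, not a description of any Siemens implementation: the function names `laplace_noise` and `private_mean`, the clipping bounds, and the choice of a mean query are all assumptions made for this example.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential draws with
    rate 1/scale is Laplace-distributed with that scale.
    """
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_mean(values, lower, upper, epsilon, rng=None):
    """Return a noisy mean of `values` (hypothetical helper).

    Assumptions for this sketch: the number of records is public,
    each record is clipped to [lower, upper], and two data sets are
    "neighbors" if they differ in one record. Under those assumptions
    the mean changes by at most (upper - lower) / n, so Laplace noise
    with scale sensitivity / epsilon is added.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if upper <= lower:
        raise ValueError("upper must be greater than lower")
    if not values:
        raise ValueError("values must not be empty")

    rng = rng or random.Random()
    clipped = [min(max(v, lower), upper) for v in values]
    true_mean = sum(clipped) / len(clipped)
    sensitivity = (upper - lower) / len(clipped)
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    readings = [4.2, 5.1, 3.9, 6.0, 4.8]
    # Smaller epsilon means more noise and a stronger privacy guarantee.
    print(private_mean(readings, lower=0.0, upper=10.0, epsilon=0.5))
```

The trade-off is visible in the single `epsilon` parameter: lowering it protects individual records better but makes the reported average less accurate, which is one reason more work is needed before such methods fit every industrial use case.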

AI will be a critical building block for long-term societal prosperity if malicious as well as potential unintentional “harms” are mitigated. In an industrial environment, embedding ethical constraints in an AI system’s reward function, as well as ensuring the system is technically robust and complies with all laws, is the most promising approach for mitigating risks and creating trustworthy AI. Siemens recognizes that ethical considerations need to be addressed at every stage of product development. We are investing in research to enhance robustness and security, explainability, safety, privacy, and accountability (including guarding against harmful bias and unintended consequences) to make AI solutions ethical and trustworthy in a business-to-business (B2B) context (see Figure 1).
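One way to picture "embedding ethical constraints in the reward function" is a penalty on soft violations combined with a hard veto on forbidden actions. The sketch below is a hypothetical illustration under that assumption; the `Outcome` fields, the `penalty_weight` value, and the rule that the system defers to a human when no action is permitted are invented for this example and are not taken from a specific Siemens product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Predicted result of one candidate action (hypothetical fields)."""
    task_reward: float        # e.g. throughput or energy savings
    safety_violation: float   # 0.0 means no soft-constraint violation
    violates_hard_rule: bool  # True if the action is never acceptable


def shaped_reward(outcome, penalty_weight=10.0):
    """Task reward minus a penalty proportional to the violation.

    Hard-rule violations get negative infinity so that no amount of
    task reward can outweigh them.
    """
    if outcome.violates_hard_rule:
        return float("-inf")
    violation = max(0.0, outcome.safety_violation)
    return outcome.task_reward - penalty_weight * violation


def choose_action(candidates, penalty_weight=10.0):
    """Pick the permitted action with the highest shaped reward.

    Returns None when every candidate breaks a hard rule, which this
    sketch treats as "hand the decision to a human operator".
    """
    allowed = {
        name: outcome
        for name, outcome in candidates.items()
        if not outcome.violates_hard_rule
    }
    if not allowed:
        return None
    return max(
        allowed,
        key=lambda name: shaped_reward(allowed[name], penalty_weight),
    )


if __name__ == "__main__":
    options = {
        "fast": Outcome(task_reward=10.0, safety_violation=0.5,
                        violates_hard_rule=False),
        "safe": Outcome(task_reward=6.0, safety_violation=0.0,
                        violates_hard_rule=False),
        "forbidden": Outcome(task_reward=100.0, safety_violation=0.0,
                             violates_hard_rule=True),
    }
    print(choose_action(options))
```

In this toy setup the higher-performing "fast" option loses to "safe" once the penalty is applied, and the "forbidden" option is never considered, which mirrors the idea that ethical limits may reduce performance or autonomy. Choosing the penalty weight and the list of hard rules is the genuinely difficult, non-technical part.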

Let me conclude by repeating that any AI model we develop should be robust, legal, and ethical, so that it can be applied as effectively as possible. For that, we need innovative approaches and collaboration within a broader ecosystem of IT and engineering companies, research institutions and partners. Ethical issues such as the trolley problem, and its variations that are more relevant to the field of Industrial AI, require our collective effort to create practical solutions and ensure that AI’s full potential can be realized. Human life and human rights must remain top priorities in this arena. The bottom line is that whenever an autonomous decision or recommendation of an AI system conflicts with ethical expectations or human values, these priorities must take precedence, even if doing so reduces the AI system’s degree of autonomy and/or performance.