Episode 2: Understanding Training vs. Inferencing and AI in Industry

Ellie Burns and Michael Fingeroff, members of our high-level synthesis team, got together to discuss AI and machine learning (ML). In episode 2 of their four-part podcast, they discuss how to train a neural network to perform a particular task efficiently. They then discuss mapping the trained neural network into hardware so that it can run on new data, which is called inferencing. People often confuse these two concepts. After clearing that up, they move on to how particular industries employ AI and ML within their products.

Ellie Burns

Ms. Burns has over 30 years of experience in the chip design and EDA industries, in roles spanning engineering, applications engineering, technical marketing and product management. She is currently the Director of Marketing for the Digital Design and Implementation Solutions Division at Siemens EDA, responsible for RTL low-power with PowerPro, high-level synthesis with Catapult, and RTL synthesis with Precision and Oasys. Prior to Siemens EDA, Ms. Burns held engineering and marketing positions at Mentor, CoWare, Cadence, Synopsys, Viewlogic, Computervision and Intel. She holds a BSCpE from Oregon State University.

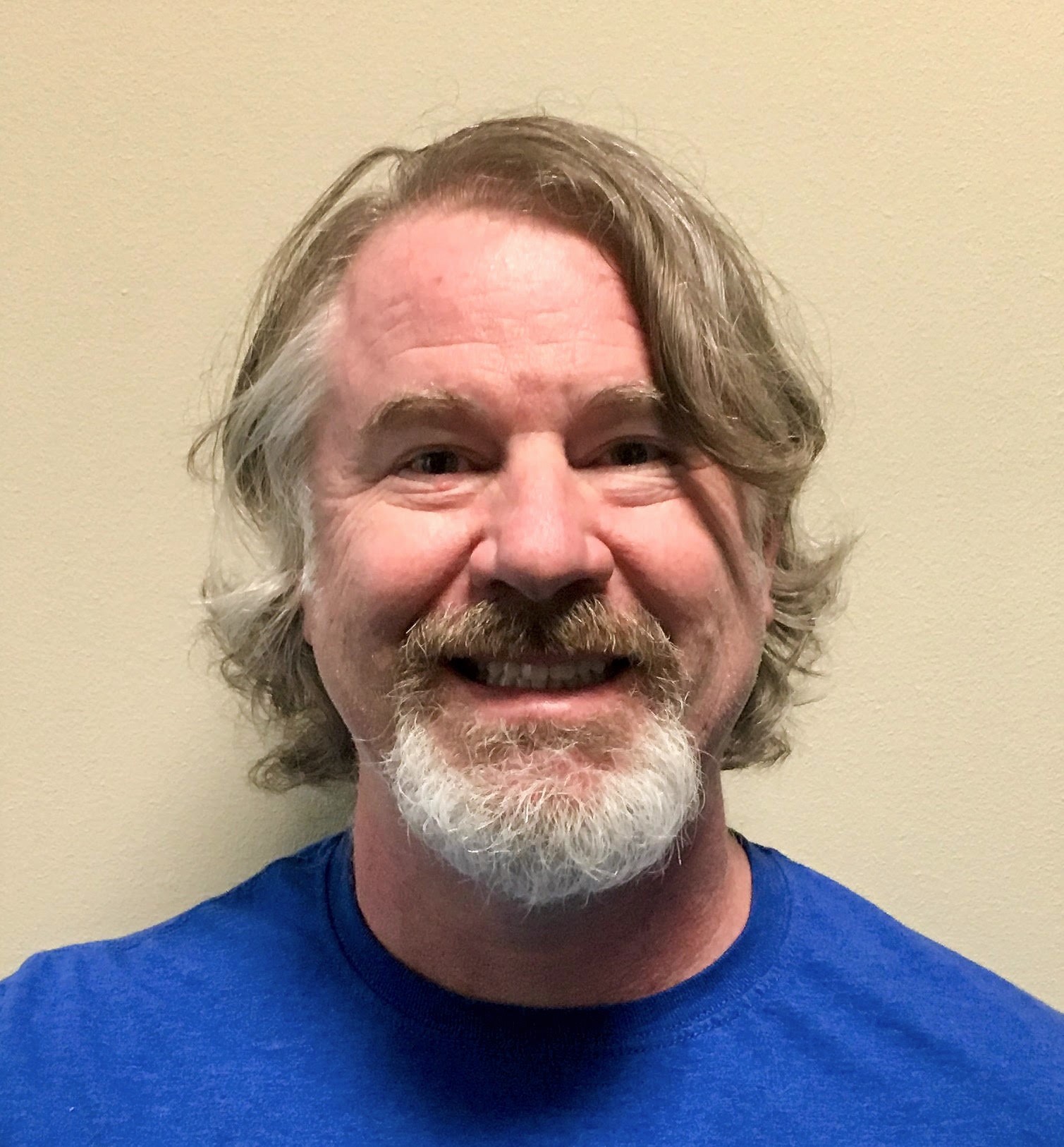

Michael Fingeroff – Host

Michael Fingeroff has worked as an HLS Technologist for the Catapult High-Level Synthesis Platform at Siemens Digital Industries Software since 2002. His areas of interest include machine learning, DSP, and high-performance video hardware. Prior to joining Siemens Digital Industries Software, he worked as a hardware design engineer developing real-time broadband video systems. He received his bachelor’s and master’s degrees in electrical engineering from Temple University in 1990 and 1995, respectively.

AI Spectrum

This podcast features discussions around the importance of AI and ML in today’s industrial world.