Microcontrollers: On A Slippery Slope … Uphill?

I always welcome contributions – guest blog posts – from my colleagues and associates. After all, that reduces the amount of work that I need to do – how could I refuse? But seriously, I think that a different “voice” from time to time is refreshing. My colleague Richard Vlamynck has appeared here before. He has an interesting perspective, sitting right on the cusp of hardware and software – definitely an embedded “Renaissance” man. Today Richard is musing on what actually constitutes a microcontroller …

This blog post started out on another blog, when someone offered the premise that anything that has an MMU (Memory Management Unit) cannot be a microcontroller. That made me ask: why? Can’t I still call a device a microcontroller if it has an MMU? Can I still call a device a microcontroller if it has an embedded DSP block? For example, “everyone” knows that the Nest home thermostat has an ARM(R) Cortex(R)-A7 and it does have an MMU. The same Cortex-A7, in a similar chip, is used in Google Glass.

So what is it that distinguishes a microcontroller from a microprocessor or a CPU (Central Processing Unit)?

To answer that question, let’s set the way-back-machine to sometime near the beginning of digital computing for embedded systems. (Note that I specified “digital” embedded systems, because analog systems have a longer history, which is a separate topic that touches as far back as ancient Greece…)

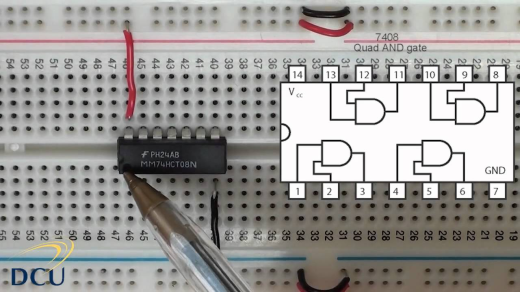

In the good old days of embedded systems digital engineering, life was simple. Whenever you needed to create a new product, you could build a rudimentary control system using nothing more than a handful of 7400 series NAND gates and NOR gates, sometimes a 555 timer, maybe an op-amp, and a selection of resistors and capacitors.

That is to say that you don’t need a software program with an RTOS and device driver and middleware stacks to make simple decisions such as, “Turn on the red alarm lamp, if the motor is running and the cooling water temperature is too high, or the oil pressure is too low.” (For extra credit, you can try to assign engine sensor inputs to the schematic below.)
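For comparison, here is how the same decision might look once it moves into software. This is only a minimal C sketch: the function and parameter names are hypothetical, and it assumes the sentence is read as “motor running AND (water too hot OR oil pressure too low)”, so the lamp stays off while the engine is stopped. If you group the conditions differently, the expression changes accordingly.

```c
#include <stdbool.h>

/* Hypothetical alarm logic for the lamp described above.
 * Assumed grouping: running AND (too hot OR pressure too low).
 * In 7400-series terms this is one OR gate feeding one AND gate. */
bool alarm_lamp(bool motor_running, bool water_temp_high, bool oil_pressure_low)
{
    return motor_running && (water_temp_high || oil_pressure_low);
}
```

Calling `alarm_lamp(true, false, true)` returns `true` (running with low oil pressure), while `alarm_lamp(false, true, true)` returns `false` under this reading, because the motor is not running.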

In the good old days, there was no need for LCD displays or mice or keyboards. Some switches and lamps would be adequate for your HMI (Human-Machine Interface) input/output, i.e., green light good, red light bad. You could easily breadboard and debug this type of embedded system before going into production.

For a limited run “production”, you might get some copper clad PCB material, perhaps 6 or 8 or 10 square inches or so for a small system. You would use some lithographic masking tape to lay out the traces on your “motherboard”. The next step would be the judicious dunking of the copper clad board into a bucket of acid (being careful not to burn a hole in the living room rug) and there you have it, your “motherboard” for your embedded system. (In this case it was an oil pressure monitor/alarm for an ancient logging engine, that still ran but needed more careful watching.) You did all this to increase the reliability of the system by eliminating the breadboard wires, swapping changeability for reliability.

As time went by, embedded control algorithms became more sophisticated. We saw the introduction of the first microcontrollers. These first microcontrollers started life as 4-bit and 8-bit designs, and your mostly fixed logic embedded control system could now use a relatively inexpensive microcontroller. The microcontroller hardware and software would be “embedded” somewhere inside the larger product, so the term “embedded system” was coined.

We were already on the slippery slope uphill.

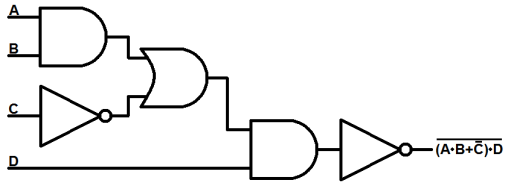

The microprocessor based embedded control system pictured below is 6502 based, and it could still be breadboarded before production. Although we had now gained some flexibility, because functionality could be changed in software after the final PCB was made, the increase in system size didn’t help reliability.

Note the wooden case and high quality screen door hinges holding the “Zero” together. One issue was the number of support chips needed to build the system. Wouldn’t it be nice to have a single chip system? A single chip system could reduce cost and eliminate the backplanes for reliability.

This brings us to my favorite definition of “microcontroller”. It is a chip that does not need any supporting chips to perform its function. That is to say, you can build something useful using only your microcontroller chip.

The single chip system that first came to the (reliability) rescue was the 8051. Arguably, the 8051 could be Intel’s first and still the best microcontroller ever, especially for the sharp end of the IoT. The 8051 is the “Mother of all SoCs”.

There were 4-bit and 8-bit microprocessors that pre-dated the 8051. So what is it that makes the 8051 so special (to me, at least)? The 8051 was the first device that did not need any supporting ROM, RAM or I/O chips to build a complete system. The 8-bit microprocessor core in the 8051 was accompanied on the same chip by ROM, RAM, Timer, UART and GPIO. The 8051 was a complete microcontroller, arguably the first commercially available System on Chip – SoC.

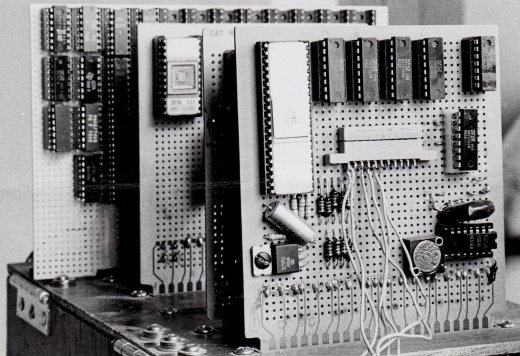

The embedded systems that first ran on the 8051 did not use an RTOS (Real Time Operating System). In the same sense that the microcontroller reduced the complexity of parts while adding flexibility, the RTOS concept later added scheduling flexibility while maintaining or improving responsiveness. The figure below shows the Site Player, a complete IoT sensor node and webserver all running on an 8051.

Is today’s TI OMAP3 a microcontroller? Can you really use it without any other components? It looks like the answer is a vaguely plausible “yes”: it can be used as a microcontroller. For example, the OMAP3 series AM/DM37x has 32K bytes of ROM boot code and 64K bytes of RAM. That is not a lot of onboard memory for that specific microprocessor unit (MPU), which is why I say it is only “plausible” to call it a microcontroller. If your application fits in 64K, there are more cost effective choices than an OMAP3. If you allow external RAM, that particular device is a great choice for higher end embedded systems, though certainly overkill for low end, low cost systems.

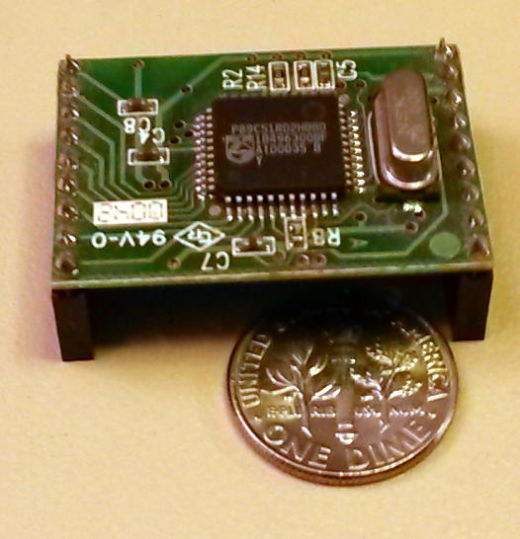

There are 8-bit SoCs that include Zigbee and/or 802.15.4 wireless transmitters and receivers. That is to say that you can easily build a wireless sensor node based on an 8-bit microcontroller as shown below.

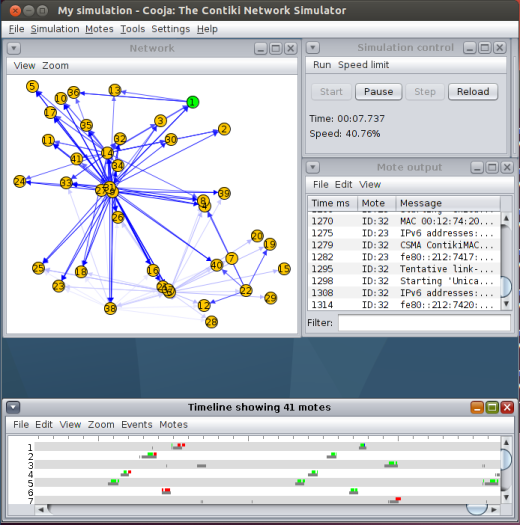

Another issue that makes for a good microcontroller has nothing to do with its architectural superiority or its transistor count or its embedded features and I/O. A good microcontroller has a good “ecosystem”. The ecosystem for the 8051 has been around since 1981 and today it includes free compilers, Eclipse based IDEs, and RTOSs, as with any other microcontroller. The 8051 enjoys support from the Contiki-OS. In fact, Contiki was specifically designed to run on 8-bit micros exactly like the 8051 based chips from TI, Infineon, NXP and others. You can build mesh networks with Contiki and simulate their behaviour in the Cooja network simulator. This is a complete topic in its own right, so we’ll leave it at that for now.

Besides being a single chip solution, there are other factors that might influence whether a device is suited to an embedded system. How about interrupt latency and interrupt jitter? What should a microcontroller offer in that arena? This is left as an open question. If you would care to comment, your thoughts would be appreciated.
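As a starting point for that discussion, it may help to pin the two terms down. Interrupt latency is commonly taken as the delay between an interrupt request and the start of its service routine, and jitter as how much that delay varies from one interrupt to the next. The C sketch below is one simple way to summarize a set of measurements. The type and function names are hypothetical, it assumes you have already captured latency samples in timer cycles (for example with a free-running timer or a logic analyzer), and it reports jitter as the plain peak-to-peak spread.

```c
#include <stddef.h>
#include <stdint.h>

/* Summary of a set of measured interrupt latencies, in timer cycles. */
typedef struct {
    uint32_t min_cycles;     /* best-case latency observed        */
    uint32_t max_cycles;     /* worst-case latency observed       */
    uint32_t jitter_cycles;  /* peak-to-peak spread: max minus min */
} latency_stats;

/* Scan the samples once and record the extremes.
 * Returns all zeros if there are no samples. */
latency_stats summarize_latency(const uint32_t *samples, size_t count)
{
    latency_stats s = {0, 0, 0};
    if (samples == NULL || count == 0) {
        return s;
    }
    s.min_cycles = samples[0];
    s.max_cycles = samples[0];
    for (size_t i = 1; i < count; i++) {
        if (samples[i] < s.min_cycles) s.min_cycles = samples[i];
        if (samples[i] > s.max_cycles) s.max_cycles = samples[i];
    }
    s.jitter_cycles = s.max_cycles - s.min_cycles;
    return s;
}
```

For made-up samples of 12, 15, 12, 19 and 13 cycles, this gives a minimum of 12, a maximum of 19 and a jitter of 7 cycles. For a hard real-time design, the worst case and the spread usually matter more than the average.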

Gentle readers, you must understand that this is just a topic for discussion; nothing is set in stone. I’m sure that others will have quite excellent input on what might be the “first” microcontroller by their definition, or what makes the “best” microcontroller for their application. For anything that I put in quotes, you may feel free to supply your own definition. That is to say that we do not need to debate or argue (argue in the good sense of the word) because you should use and enjoy your own definition of microcontroller for the type of systems that you build. Your comments and opinions are always quite welcome.

We’ve talked a lot about software here. How about the hardware? One of the first things hardware engineers will ask about is not software, but gate count: how many NAND gates or NOR gates (see above) will it take to build my microcontroller? Specifically, I’ll be asked how one microcontroller compares with another. This is difficult to answer in a truly objective fashion, as each device includes a different amount of functionality. The gate count for the Mentor Graphics M8051W is 5400 gates for the bare CPU with interrupt controller. A middle of the road M8051W with I/O and timers is 9120 gates. A fully featured M8051W is 11890 gates.

In conclusion, a microcontroller is a standalone chip that can be used to build an embedded system, and even though embedded systems designers may find themselves on a slippery slope upward to 32 bits, there is still an entire universe of devices that can be built with 8-bit microcontrollers.

[ARM and Cortex are registered trademarks of ARM Limited (or its subsidiaries) in the EU and/or elsewhere. All rights reserved.]