How to optimize performance during physical verification

By John Ferguson – Mentor, A Siemens Business

Are you getting the most from your processes, tools, and hardware? Or are you giving up time and money in your IC design schedule? Here are some strategies that can help you improve your bottom line.

In his novel, Through the Looking-Glass, Lewis Carroll wrote, “Now, here, you see, it takes all the running you can do, to keep in the same place. If you want to get somewhere else, you must run at least twice as fast as that!”

The rapid engineering cycles of the IC industry have, no doubt, left many feeling that way, especially in the last few years. The demand to continuously deliver designs that can satisfy the enormous growth of the electronics markets has driven a constant and unrelenting shift to smaller and smaller process nodes. The materials, manufacturing, and design improvements of these node shifts create a demand for design flows and verification tools that can scale with the enormous growth in computing needed to handle exponentially increasing design complexity. At the same time, those tools must provide an increasing range of functionality to manage the new and emerging layout effects and dependencies that can affect manufacturability, performance, and reliability.

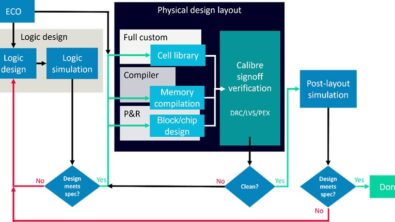

Managing physical verification runtimes has become a multi-dimensional challenge. Three primary strategies are used to keep design rule checking (DRC) runtimes within industry expectations for each new process node:

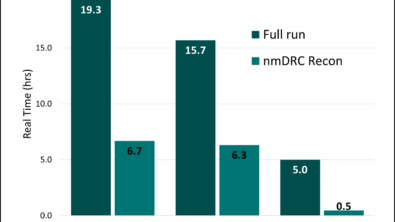

- reducing the computational workload by optimizing the rules and checking operations

- handling design data more efficiently by adding hierarchy

- parallelizing as much of the computation as possible

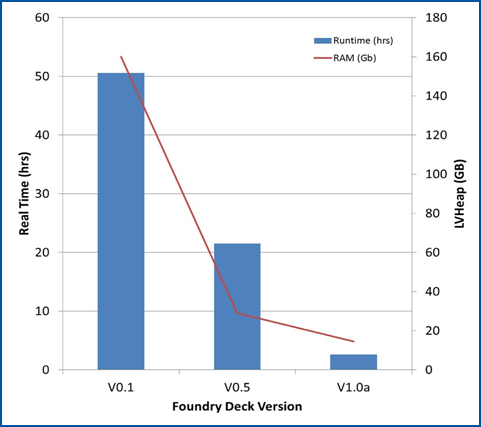

Within each of those strategies are multiple techniques and technologies. For example, rule optimization that minimizes the impact of the explosion in checks and operations can take many forms. DRC tool suppliers work closely with the foundry to help optimize rule performance from the beginning of a new node development cycle right through to the release of a full design rule manual.

Engine optimization is another approach to reducing computational workload. EDA vendors constantly strive to ensure tools run faster, use less memory, and scale more efficiently at all nodes. At the same time, they continuously evaluate and refine tool functionalities to ensure they perform in the most efficient manner possible.

Data management and transfer are critical in all stages of IC design, from the design phase, to the handoff to manufacturing, to the manufacturing cycle. Adopting new, more efficient database formats, making use of data hierarchy to reduce runtimes, and implementing new fill strategies to minimize post-fill DRC runtimes are just some of the ways data management helps designers manage increasing design complexity while meeting their delivery schedules.
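To make the benefit of data hierarchy concrete, here is a minimal Python sketch of the general idea: check each unique cell once, then reuse that result for every placement of the cell. It is an illustration under stated assumptions, not a description of how any particular DRC tool works. The cell names, the `MIN_WIDTH` value, and the dictionary layout format are all hypothetical, and the reuse is only valid for rules that do not depend on what surrounds a placement.

```python
MIN_WIDTH = 3  # hypothetical minimum-width rule, in arbitrary layout units

# Hypothetical hierarchical layout: each cell has its own rectangles
# (x1, y1, x2, y2) in local coordinates, plus placements of child cells
# given as (child_name, x_offset, y_offset).
CELLS = {
    "inv": {"shapes": [(0, 0, 2, 10), (4, 0, 10, 10)], "children": []},
    "row": {"shapes": [], "children": [("inv", 0, 0), ("inv", 20, 0), ("inv", 40, 0)]},
    "top": {"shapes": [(0, 50, 100, 51)], "children": [("row", 0, 0), ("row", 0, 100)]},
}


def local_violations(cells, name, cache):
    """Check a cell's own shapes once and remember the result."""
    if name not in cache:
        cache[name] = [
            s for s in cells[name]["shapes"]
            if min(s[2] - s[0], s[3] - s[1]) < MIN_WIDTH
        ]
    return cache[name]


def violations(cells, name, dx=0, dy=0, cache=None):
    """Report violations in top-level coordinates by walking the hierarchy."""
    cache = {} if cache is None else cache
    # Translate the cached local results to this placement's position.
    found = [
        (x1 + dx, y1 + dy, x2 + dx, y2 + dy)
        for x1, y1, x2, y2 in local_violations(cells, name, cache)
    ]
    for child, cx, cy in cells[name]["children"]:
        found += violations(cells, child, dx + cx, dy + cy, cache)
    return found
```

In this toy layout the `inv` cell is placed six times, but its shapes are checked only once; the six reported violations are produced by translating the cached result. A flat representation of the same data would require checking every placed shape individually.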

Parallel processing effectively addresses two compounding challenges for physical verification: the volume of layout data, and the computational complexity of the rules. Running multiple CPUs on the same machine, using CPUs distributed over a symmetric multi-processing (SMP) backplane or network, or taking advantage of virtual cores are just some of the ways you can help ensure the fastest total runtimes.
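The data-volume side of parallel processing can be sketched in a few lines of Python: split the layout into tiles and check the tiles concurrently. This is a simplified illustration, not a production approach. The `Rect` type, the `MIN_WIDTH` value, and the tiling scheme are assumptions made for the example. A thread pool is used only to keep the sketch portable; in CPython, real CPU parallelism would need a process pool or distributed hosts.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import NamedTuple


class Rect(NamedTuple):
    x1: int
    y1: int
    x2: int
    y2: int


MIN_WIDTH = 3  # hypothetical minimum-width rule, in arbitrary layout units


def check_min_width(rects):
    """Return the rectangles whose narrower side is below MIN_WIDTH."""
    return [r for r in rects if min(r.x2 - r.x1, r.y2 - r.y1) < MIN_WIDTH]


def split_into_tiles(rects, tile_size):
    """Assign each rectangle to the tile containing its lower-left corner."""
    tiles = {}
    for r in rects:
        key = (r.x1 // tile_size, r.y1 // tile_size)
        tiles.setdefault(key, []).append(r)
    return list(tiles.values())


def run_parallel(rects, tile_size=100, workers=4):
    """Check every tile concurrently and merge the per-tile results."""
    tiles = split_into_tiles(rects, tile_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(check_min_width, tiles)
    return sorted(v for tile_violations in results for v in tile_violations)
```

A width check looks at one shape at a time, so each rectangle can safely belong to exactly one tile. Checks that involve neighboring shapes, such as spacing, would need overlapping tile borders so that pairs straddling a tile boundary are not missed.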

Productivity in physical verification is a multi-faceted objective that requires multiple strategies and technologies. Are you using the tools and techniques that can help you not just stay in the same place, but achieve even more while using the least amount of time and resources? For a more in-depth look at these and other optimization strategies, download a copy of our white paper, Achieving Optimal Performance During Physical Verification.