Automotive IC test technology (that will help you sleep easier)

In my last blog I looked at the product definition process for automotive ICs, using the microcontroller market to illustrate how design exploration optimizes performance, features, die size and product cost. What about the back end of the process? Once the feature set is defined and the software strategy (both tools and middleware) is settled, what about the final test of these ICs?

Pretty much every vehicle you see on the road today, likely including your own unless you drive a classic, has a microcontroller taking care of the braking system, another one running the complex motor control algorithms in the electric power steering system, and so on. These are not functions you want to mess around with, so the electronic components behind them are tested and qualified as automotive grade, which means living in the world of zero-defect performance.

Zero-defect, as any auto engineer will tell you, is acknowledged to be a journey, and automotive IC companies have to show where they are on it and what they are doing to continually improve quality and speed up failure analysis and diagnosis. That effort has driven failure rates down from parts-per-million (ppm) to parts-per-billion (ppb) levels.

All of the major automotive IC houses have genuinely impressive quality improvement and defect reduction strategies, having refined their capability over decades of design and test experience, and it’s a fascinating area of innovation. If you ever feel part of the ‘my role only gets noticed when something goes wrong’ brigade, then spending time with automotive IC test engineers will give you a new frame of reference for that.

Final test (that is, excluding wafer-level probe test) accounts for a high percentage of the overall cost of an automotive IC: depending on the chip configuration and die size, it can be anywhere up to 30% of the total product cost. Any product manager working in this area will tell you stories of optimizing some awesome new chip and building a rock-solid business plan, only to see it sunk by the struggle to get test costs under control.

Why is this? It comes down to a combination of automotive quality demands and legacy test software, complicated by the fact that it is a three-dimensional problem: how to increase quality and yield while simultaneously cutting seconds on that expensive tester.

These look like conflicting challenges, and with traditional test methodology they are. ICs get more complex with every generation and technology node. These components control what we call safety-critical functions, and test software tends to grow with every new product release, always adding tests but never taking any out. So how do you make this cost competitive?

Test elimination using statistical data and correlation was seen as a high-risk, low-reward exercise. Sure, you could remove a few legacy tests on statistical grounds, for a negligible reduction in overall test time. But the potential downside is that you become the most in-demand person in the organization when some German carmaker starts parking new cars straight off the production line on a football field until you can figure out why something is failing. Who wants to be that person? It’s easy to see why the words ‘test software’ and ‘bloated’ turn up in the same sentence far too often.

In my experience, IC test engineers are super-innovative, have lots of ideas for change and don’t like the old ways any more than you do. But given that risk, the usual approach to test cost optimization is to spread the fixed cost. Examples include increasing parallelism (testing more ICs at once, so the fixed cost overhead is spread over more units) or a yield enhancement program, where the benefits of a fractional yield improvement are realized over a period of years.
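To put rough numbers on how parallelism spreads the fixed cost, here is a minimal Python sketch. It assumes tester time is billed at a flat, hypothetical rate per second and uses the commonly quoted multi-site efficiency (MSE) definition, MSE = 1 - (T_N - T_1) / ((N - 1) * T_1), where T_1 is the single-site test time and T_N the time to test N sites together. All the numbers are made up for illustration.

```python
def multisite_time(t_single_s: float, sites: int, efficiency: float) -> float:
    """Time for one tester insertion covering `sites` devices.

    Rearranges the multi-site efficiency definition
    MSE = 1 - (T_N - T_1) / ((N - 1) * T_1) to solve for T_N.
    efficiency = 1.0 means perfect parallelism (T_N == T_1).
    """
    if sites < 1:
        raise ValueError("sites must be >= 1")
    return t_single_s * (1 + (1 - efficiency) * (sites - 1))


def cost_per_unit(cost_per_second: float, t_single_s: float,
                  sites: int, efficiency: float) -> float:
    """Tester cost attributed to each device: insertion cost shared across sites."""
    return cost_per_second * multisite_time(t_single_s, sites, efficiency) / sites


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    rate = 0.05   # tester cost per second (assumed)
    t1 = 2.0      # single-site final test time in seconds (assumed)
    for n in (1, 2, 4, 8):
        cost = cost_per_unit(rate, t1, n, efficiency=0.95)
        print(f"{n} sites: {cost:.4f} per unit")
```

Note that the tester's cost per second never changes in this model. It is only being shared over more units.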

But what about reducing that fixed cost overhead, rather than just spreading it around? In my last blog I looked at the balance between on-chip IP and what can be implemented in software. In the same way, the IP tested on-chip using in-line self-test has to be balanced against what must run on the tester, burning expensive seconds of tester time. So why can’t we apply intelligence to optimize that test software?

Well, we can, by using a fault coverage simulator. It analyzes all the tests you are running, shows what test coverage you have and, importantly, identifies the legacy test patterns that are not doing anything at all. Simulating at this abstracted level lets you identify and remove test software that is wholly redundant, giving measurable and significant reductions in test time (with reported cost savings in the tens of millions of dollars per year).
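Fault coverage simulators are commercial tools, but the core bookkeeping can be sketched. The minimal Python example below assumes the simulator has already produced the set of modeled faults each test pattern detects. The pattern and fault names are hypothetical. It computes fault coverage and then applies reverse-order fault simulation, a classic static compaction technique. Working from the last pattern to the first, it keeps a pattern only if that pattern detects a fault that no already-kept pattern detects. This is one illustrative way to spot redundant patterns, not necessarily how any particular tool does it.

```python
from typing import Dict, List, Set


def fault_coverage(detects: Dict[str, Set[str]], faults: Set[str]) -> float:
    """Fraction of the modeled fault list detected by at least one pattern."""
    detected: Set[str] = set()
    for found in detects.values():
        detected |= found
    return len(detected & faults) / len(faults)


def reverse_order_compaction(order: List[str], detects: Dict[str, Set[str]]) -> List[str]:
    """Keep only patterns that detect a fault not detected by later kept patterns."""
    covered: Set[str] = set()
    kept: List[str] = []
    for pattern in reversed(order):
        newly_detected = detects.get(pattern, set()) - covered
        if newly_detected:
            kept.append(pattern)
            covered |= newly_detected
    kept.reverse()  # restore the original application order
    return kept


if __name__ == "__main__":
    # Hypothetical fault-simulation results: pattern -> faults it detects.
    faults = {"f1", "f2", "f3", "f4", "f5", "f6"}
    detects = {
        "p1": {"f1", "f2"},
        "p2": {"f2"},
        "p3": set(),               # legacy pattern that detects nothing
        "p4": {"f1", "f3", "f4"},
        "p5": {"f5"},
    }
    order = ["p1", "p2", "p3", "p4", "p5"]
    kept = reverse_order_compaction(order, detects)
    print("coverage before:", fault_coverage(detects, faults))
    print("kept:", kept)
    print("redundant:", [p for p in order if p not in kept])
    print("coverage after:", fault_coverage({p: detects[p] for p in kept}, faults))
```

The result depends on pattern order. Reverse order is the usual choice because patterns generated later tend to target harder faults and incidentally catch easy ones, so earlier patterns are more often the redundant ones. In this example, coverage stays the same after two of the five patterns are dropped.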

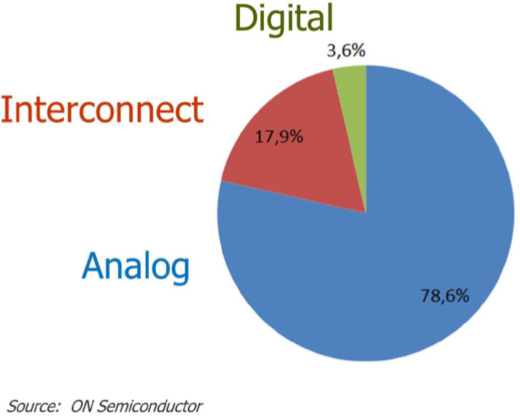

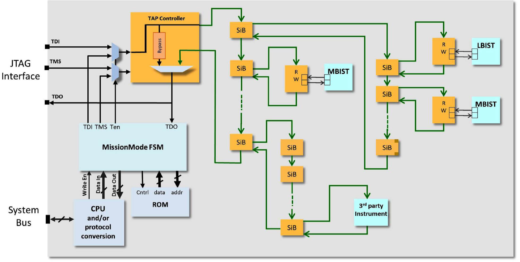

In parallel, the same fault coverage simulator can identify potential weaknesses in the test program, allowing even greater optimization of reliability metrics. The benefits of this type of fault simulation are most apparent in the mixed-signal domain (which is the automotive world), because analog is where the majority of field failures occur. Digital fault simulation has been commercially available for some time, and now we finally have the same kind of simulation for analog circuits, driving automotive reliability even higher while simultaneously optimizing the test software. We can also apply this kind of design automation at the chip level, offloading test resources onto the chip using BIST for logic and memories and further reducing the time spent on the tester.
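As a toy illustration of logic BIST, the sketch below uses a common arrangement. A linear feedback shift register (LFSR) generates pseudo-random patterns on chip. A multiple-input signature register (MISR) compacts the circuit's responses into a single signature, which is compared against a known-good value. The 4-bit width, the x^4 + x^3 + 1 feedback polynomial and the circuit function are all assumptions chosen to keep the example small. Real logic BIST uses far wider registers and additional hardware.

```python
from typing import Iterable, Iterator

WIDTH_MASK = 0xF  # 4-bit registers, chosen only to keep the example small


def lfsr_step(state: int) -> int:
    """One shift of a 4-bit Fibonacci LFSR for x^4 + x^3 + 1 (maximal length, period 15)."""
    feedback = (state ^ (state >> 1)) & 1
    return (state >> 1) | (feedback << 3)


def lfsr_patterns(seed: int, count: int) -> Iterator[int]:
    """Pseudo-random test patterns, as an on-chip pattern generator would produce."""
    state = seed & WIDTH_MASK
    for _ in range(count):
        yield state
        state = lfsr_step(state)


def misr_signature(responses: Iterable[int], seed: int = 0) -> int:
    """Compact a response stream into one signature: shift, then XOR in each response."""
    signature = seed
    for response in responses:
        signature = lfsr_step(signature) ^ (response & WIDTH_MASK)
    return signature


def good_circuit(x: int) -> int:
    """Hypothetical 4-bit combinational logic: x XOR (x rotated left by one)."""
    return (x ^ ((x << 1) | (x >> 3))) & WIDTH_MASK


def faulty_circuit(x: int) -> int:
    """Same logic with output bit 0 stuck-at-0 (injected fault)."""
    return good_circuit(x) & 0b1110


if __name__ == "__main__":
    patterns = list(lfsr_patterns(seed=1, count=15))
    golden = misr_signature(good_circuit(p) for p in patterns)
    observed = misr_signature(faulty_circuit(p) for p in patterns)
    print("pass" if observed == golden else "fail")
```

With this seed the stuck-at fault changes the signature, so the run reports a failure. A MISR is lossy, so a faulty circuit can occasionally produce the golden signature (aliasing). Wider registers make that much less likely.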

Of course, the main customer benefit is higher quality. But the new test technologies also let customers access these on-chip resources for in-system self-checking: for example, at power-up when you start your car, with memory BISTs that can run in-system checks even whilst the memory blocks are being accessed.
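Memory BIST engines commonly run March algorithms. The Python sketch below models March C-, a well-known 10N test, against a hypothetical bit-addressable memory with injected stuck-at cells. Note that this is a destructive test that overwrites memory contents. In-system checks that run while the memory is in use would need a transparent variant that preserves data, which this sketch does not attempt.

```python
from typing import Dict, List, Optional, Set, Tuple

# March C-: {any(w0); up(r0,w1); up(r1,w0); down(r0,w1); down(r1,w0); any(r0)}
# ("w", v) writes v; ("r", v) reads and expects v.
MarchElement = Tuple[str, List[Tuple[str, int]]]
MARCH_C_MINUS: List[MarchElement] = [
    ("any", [("w", 0)]),
    ("up", [("r", 0), ("w", 1)]),
    ("up", [("r", 1), ("w", 0)]),
    ("down", [("r", 0), ("w", 1)]),
    ("down", [("r", 1), ("w", 0)]),
    ("any", [("r", 0)]),
]


class FaultyMemory:
    """Hypothetical bit-per-address memory model with optional stuck-at cells."""

    def __init__(self, size: int, stuck_at: Optional[Dict[int, int]] = None):
        self.size = size
        self.stuck_at = dict(stuck_at or {})
        self.cells = [0] * size
        for addr, value in self.stuck_at.items():
            self.cells[addr] = value

    def write(self, addr: int, value: int) -> None:
        if addr not in self.stuck_at:  # stuck cells ignore writes
            self.cells[addr] = value

    def read(self, addr: int) -> int:
        return self.cells[addr]


def run_march(memory: FaultyMemory,
              algorithm: List[MarchElement] = MARCH_C_MINUS) -> Set[int]:
    """Apply each march element over all addresses; return addresses that miscompared."""
    failing: Set[int] = set()
    for direction, operations in algorithm:
        if direction == "down":
            addresses = range(memory.size - 1, -1, -1)
        else:  # "up", and "any" (ascending order chosen here)
            addresses = range(memory.size)
        for addr in addresses:
            for op, value in operations:
                if op == "w":
                    memory.write(addr, value)
                elif memory.read(addr) != value:
                    failing.add(addr)
    return failing


if __name__ == "__main__":
    print(run_march(FaultyMemory(16)))
    print(run_march(FaultyMemory(16, stuck_at={5: 1, 11: 0})))
```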

So we can reduce the size of the test software through fault coverage simulation, and we can reduce the time the chip needs to spend on the tester. (Oh, and once the test software is optimized for coverage, it can be digitally compressed for a further test time reduction, for example 10x or 100x compression for logic tests.) What can we do to increase yield?
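A back-of-the-envelope model shows why scan compression cuts logic test time roughly in proportion to the compression ratio. The sketch below rests on several assumptions. The same number of scan pins drives more, shorter internal chains through an on-chip decompressor. The pattern count stays the same, although in practice compressed patterns may need somewhat more. Each pattern costs one chain-length shift plus one capture cycle, with loads overlapping unloads. Every number is hypothetical.

```python
import math


def scan_test_time_s(patterns: int, scan_cells: int, scan_pins: int,
                     shift_hz: float, compression: int = 1) -> float:
    """Approximate scan test time in seconds.

    Internal chains = scan_pins * compression (assumed); chain length is the
    number of shift cycles per load. Loading pattern i+1 overlaps unloading
    pattern i, so total cycles = patterns * (chain_length + 1 capture cycle)
    plus one final unload.
    """
    chains = scan_pins * compression
    chain_length = math.ceil(scan_cells / chains)
    cycles = patterns * (chain_length + 1) + chain_length
    return cycles / shift_hz


if __name__ == "__main__":
    # Hypothetical design: 1M scan cells, 8 scan pins, 2,000 patterns, 50 MHz shift.
    base = scan_test_time_s(2_000, 1_000_000, 8, 50e6)
    compressed = scan_test_time_s(2_000, 1_000_000, 8, 50e6, compression=50)
    print(f"uncompressed: {base:.3f} s, 50x compression: {compressed:.4f} s, "
          f"speed-up: {base / compressed:.1f}x")
```

Because shift cycles dominate, shortening the internal chains by the compression ratio shortens test time by almost the same factor.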

Well, we can get deeper into the chip circuitry and use technology that tests ICs structurally at the cell level, instead of testing only cell inputs and outputs. This matters more as we move to smaller geometries, where it is estimated that up to half of all circuit defects occur within cells. Memories can be repaired, and test fail data analyzed to determine which defects are systematic and what needs to go back to the design team for design rule analysis. The result is a faster ramp to volume on new processes and better yields on existing ones. This type of diagnosis-driven yield enhancement uses diagnosis software to find yield-limiting defects that would traditionally take a long time to find (if they were found at all) using typical scan diagnosis.
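The statistical step behind separating systematic from random defects can be hinted at with a simple frequency count. The sketch below assumes per-die diagnosis results are already available as lists of suspect locations. The cell and instance names are hypothetical. It flags suspects that recur on a large fraction of failing dies, since a defect that keeps appearing in the same place is more likely systematic than random. Production diagnosis-driven yield analysis is considerably more sophisticated than this.

```python
from collections import Counter
from typing import Dict, List, Tuple


def systematic_suspects(diagnoses: Dict[str, List[str]],
                        min_fraction: float = 0.3) -> List[Tuple[str, float]]:
    """Suspects reported on at least `min_fraction` of failing dies, most frequent first."""
    counts: Counter = Counter()
    for suspects in diagnoses.values():
        counts.update(set(suspects))  # count each suspect at most once per die
    total = len(diagnoses)
    flagged = [(s, c / total) for s, c in counts.items() if c / total >= min_fraction]
    return sorted(flagged, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Hypothetical scan diagnosis callouts per failing die.
    diagnoses = {
        "die01": ["NAND2_X1/U17", "DFF_X2/U88"],
        "die02": ["NAND2_X1/U17"],
        "die03": ["NAND2_X1/U17", "INV_X1/U3"],
        "die04": ["AOI21_X1/U40"],
        "die05": ["DFF_X2/U88", "NAND2_X1/U17"],
    }
    for suspect, fraction in systematic_suspects(diagnoses):
        print(f"{suspect}: {fraction:.0%} of failing dies")
```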

So who benefits from all this? Everyone. The product manager and the business unit’s executive management can show cost competitiveness that helps their market position as well as their P&L. More importantly, their customers benefit from higher test coverage and more robust test methodologies that support increased reliability, in-system checking and faster fault diagnosis. That lets the IC house show its customers (the tier 1s and carmakers, who are taking an increasing interest in IC design and test methodologies) that its zero-defect journey has not plateaued.

So if you are involved in developing automotive ICs and the back end of the process is giving you sleepless nights, there is a range of technologies available that can help you get more zzzs. And the test roadmap is, of course, not static. These technologies are evolving constantly to address the next generation of chips powering sensor fusion boxes for autonomous vehicles, IGBTs for inverters in electric vehicles and the ICs for every other vehicle application.

For more information, please see the whitepaper “IC Test Solutions for the Automotive Market,” written by my Mentor colleague and fellow blogger, Steve Pateras, and from which the images in this post are borrowed.