From Enterprise AI to DIY: My Journey with Teamcenter AI Chat and Creating a Custom Serverless Version

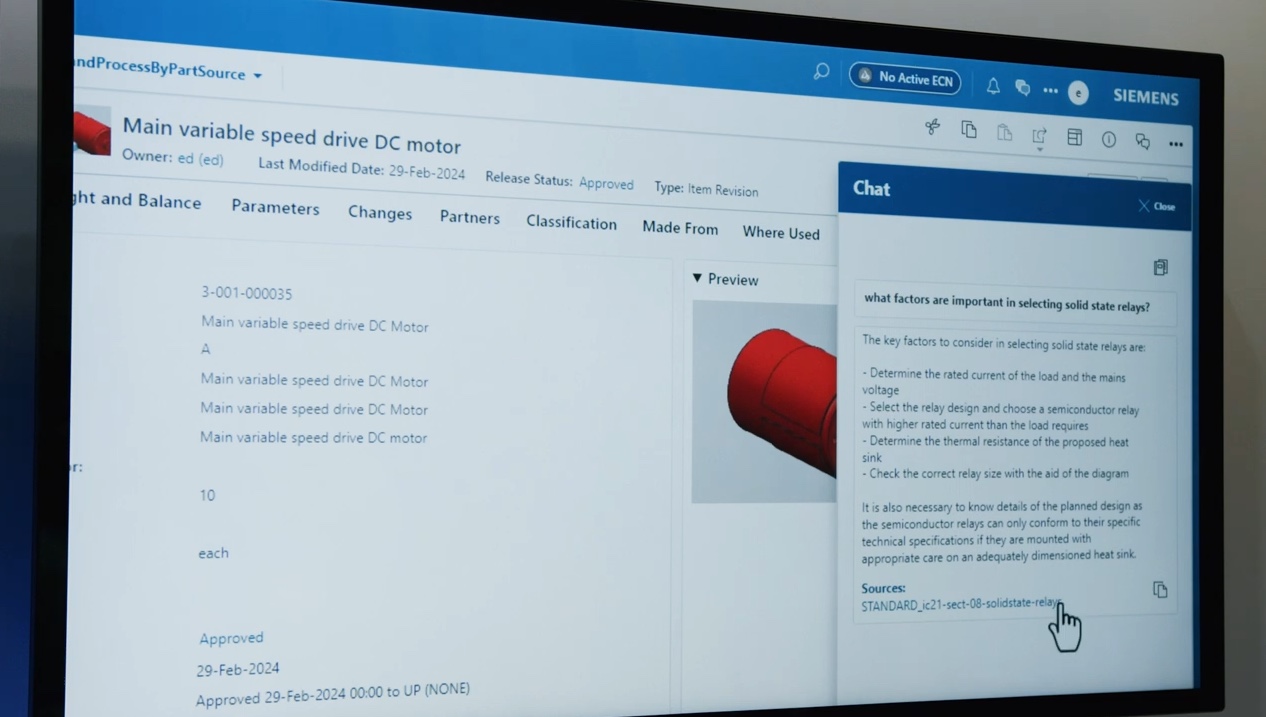

Teamcenter AI Chat

In today’s digital enterprise, information isn’t just scattered—it’s buried. Engineers, product managers, and analysts often spend 20–30% of their time digging through files, systems, and databases to answer what should be simple questions. I’ve seen it firsthand while working on solutions to streamline product lifecycle management (PLM) workflows.

That challenge sparked the idea of an LLM-powered chatbot tailored to PLM systems such as Teamcenter. Inspired by the growing ability of large language models to interpret natural-language queries, my organization set out to design a chatbot that surfaces product knowledge from complex, document-heavy environments. The goal was to let engineers and decision-makers get fast, accurate answers from within their PLM system, without manually sifting through files, navigating folder hierarchies, or relying on rigid search tools.

Building a DIY Teamcenter AI Chat–Like Experience Using Serverless Cloud (AWS/Azure)

Note: This is a DIY project inspired by Teamcenter AI Chat; it is not the official Teamcenter AI Chat architecture.

This system uses Amazon Bedrock for LLM inference and embeddings, and Amazon S3 for document storage. LanceDB (in serverless mode) provides vector search, and Amazon Cognito handles user authentication. AWS Lambda runs the compute, API Gateway routes requests from the frontend to the Lambda functions, and Amazon CloudFront delivers the frontend globally.
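Taken together, these components suggest a retrieval-augmented generation (RAG) request path: embed the user's question, find the most similar document chunks, and pass them to the LLM as context. The following is a minimal, offline sketch of that flow as a Lambda-style handler behind an API Gateway proxy integration. It rests on several assumptions. `embed` and `generate` are injected callables that, in a deployed version, would wrap Bedrock embedding and text-generation calls. `top_k` is a brute-force cosine search standing in for a LanceDB query. `make_handler`, `toy_embed`, `VOCAB`, and the `question` request field are all hypothetical names chosen for illustration.

```python
import json
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical type aliases: in a real deployment these would wrap Bedrock calls.
Embedder = Callable[[str], List[float]]
Generator = Callable[[str], str]
Chunk = Tuple[str, List[float]]  # (chunk text, embedding vector)

# Hypothetical toy vocabulary so the sketch runs without AWS credentials.
VOCAB = ["bracket", "torque", "material", "revision"]


def toy_embed(text: str) -> List[float]:
    """Bag-of-words stand-in for a real embedding model (illustration only)."""
    words = [w.strip("?.,!").lower() for w in text.split()]
    return [float(words.count(term)) for term in VOCAB]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def top_k(query_vec: Sequence[float], chunks: Sequence[Chunk], k: int = 3) -> List[str]:
    """Brute-force nearest-neighbour search (a stand-in for a LanceDB query)."""
    scored = [(cosine(query_vec, vec), text) for text, vec in chunks]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [text for _, text in scored[:k]]


def build_prompt(question: str, context: Sequence[str]) -> str:
    """Number the retrieved chunks and ask the LLM to answer from them only."""
    joined = "\n\n".join(f"[{i + 1}] {c}" for i, c in enumerate(context))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{joined}\n\nQuestion: {question}\nAnswer:"
    )


def make_handler(embed: Embedder, generate: Generator, chunks: Sequence[Chunk], k: int = 3):
    """Build a handler that accepts an API Gateway proxy event (JSON string body)."""

    def handler(event: dict, context: object = None) -> dict:
        body = json.loads(event.get("body") or "{}")
        question = str(body.get("question", "")).strip()
        if not question:
            return {"statusCode": 400, "body": json.dumps({"error": "missing question"})}
        hits = top_k(embed(question), chunks, k)       # retrieval step
        answer = generate(build_prompt(question, hits))  # generation step
        return {"statusCode": 200, "body": json.dumps({"answer": answer, "sources": hits})}

    return handler
```

Injecting `embed` and `generate` keeps the retrieval logic testable offline; swapping in real Bedrock and LanceDB clients would change only those callables and the search function, not the handler's shape. Returning the retrieved chunks as `sources` lets the frontend show users where an answer came from.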

Disclaimer

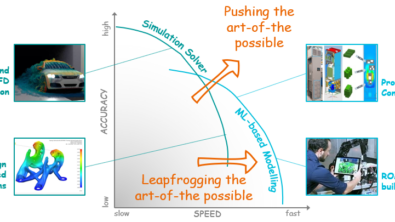

This is a research exploration by the Simcenter Technology Innovation team. Our mission: to explore new technologies, to seek out new applications for simulation, and boldly demonstrate the art of the possible where no one has gone before. Therefore, this blog represents only potential product innovations and does not constitute a commitment for delivery. Questions? Contact us at Simcenter_ti.sisw@siemens.com.