Engineering simulation part 1 – All models are wrong

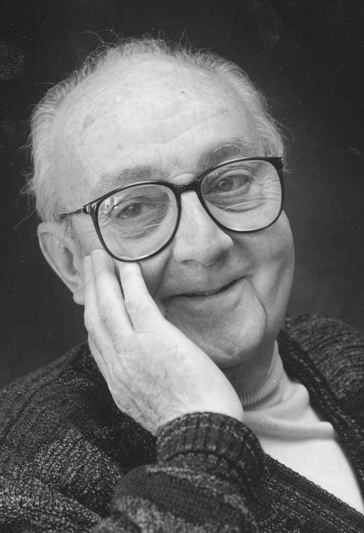

George Box (18 October 1919 – 28 March 2013) was a British statistician who worked in the fields of design-of-experiments, time series analysis, quality control and Bayesian interference. Without him we wouldn’t have Response Surface models, or this classic aphorism:

“Remember that all models are wrong; the practical question is how wrong do they have to be to not be useful.” [1]

The most pragmatic of attitudes that confronts an academic approach to simulation accuracy with the realities of industrial engineering timescales.

The challenges of engineering simulation, the application of physics based predictive models to virtually prototype proposed product or process designs, might well be better addressed by taking Box’s comments to heart.

What is Meant by ‘Models’?

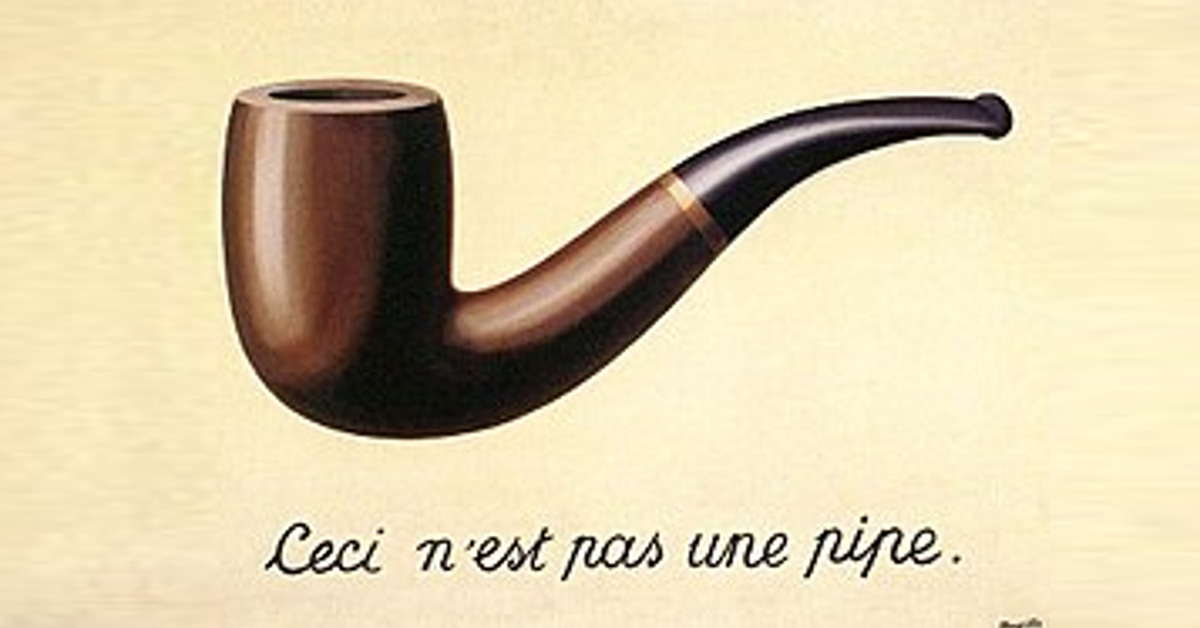

A model is an abstraction, from the Latin modulus, a measure. It’s a representation of reality that isn’t that exact reality, but purports to be so.

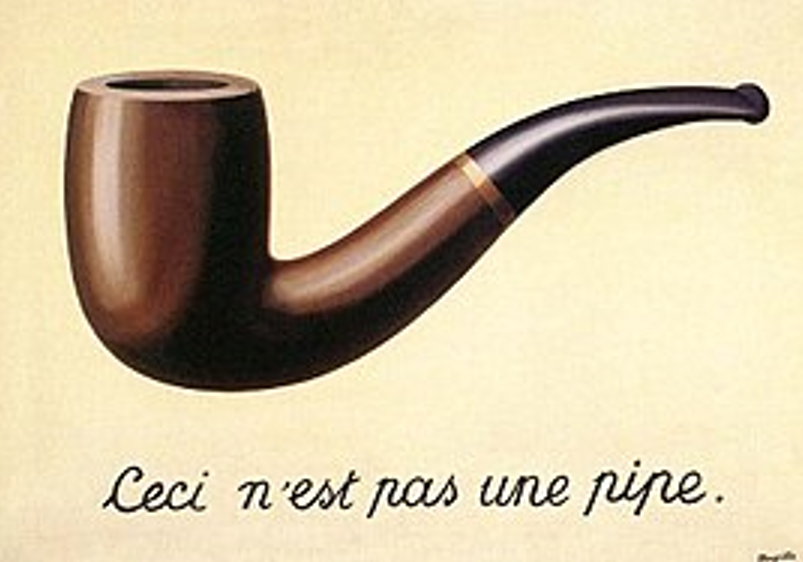

René Magritte’s ‘The Treachery of Images’ painting of a pipe is just that, a painting, but not the pipe itself. It indeed isn’t a pipe, despite outward appearances. But it still has utility in that it’s obvious to anyone looking at it that it represents a pipe, even if it doesn’t explain its purpose or function.

Engineering simulation models are just the same. They intend to represent reality to the extent where some useful insight might be garnered, even though the models themselves are not ‘real’. Why take such a modelling approach? It’s much cheaper, quicker and easier to create an engineering simulation representation of, for example, a Gen IV Sodium fast reactor to determine its functional compliance than it would be to build and test a physical prototype. Same holds true for any product, or process, that is being designed.

Could you design something without employing simulation? Of course, but likely you’d still use your intuition, which is just your brain doing some simulation based on your experience of physical reality.

A Digital Twin engineering simulation model is the counterpart to its Physical Twin. The former may be conceived of even before the latter is born, co-exist with it during its manufacture and operation and even monitor its health all the way to its retirement. The ongoing digitalisation of our reality will entail the creation of Digital Twins of all worldly physical assets, be it our cars, phones, houses, cities or even our own bodies. Such Digital Twins would allow us to understand, predict, monitor and inform the behaviour of their physical analogues in a way that we haven’t fully exploited to date.

And it’s not as if we haven’t been prosecuting a modelling approach throughout human history. The first Mesolithic human to name his friend ‘Bob’ (and thus model him via an abstract linguistic association) could well have been the first modeller/artist by abstracting his friend to a name. We’ve just become much better at doing that in the subsequent 10000 years.

Still ‘Bob’ wasn’t Bob, it was just a way of describing Bob. And although it is said a picture paints a thousand words, so can a phrase: ‘Induction motor’ is easy to say, but would require reams of words to explain.

What is Meant by ‘Wrong’?

‘Bob’ as a name was wrong because it wasn’t Bob himself. But it was still useful, it conjured up the image and sense of Bob as a person. It had utility. But what if someone called him ‘Bobby’? Not exactly true, but still kind of relatable. What if someone called him ‘Prometheus’? Still a name of a human, but the wrong human.

Considering that all models are wrong, by definition, the question then is ‘how wrong can the model be and still be useful?’. The real challenge of Engineering Simulation is in determining the level of error and from that, determining its utility. As an aside, being wrong, knowing why it’s wrong, is itself the best form of learning:

“Failure is instructive. The person who really thinks learns quite as much from his failures as from his successes.” John Dewey

How Might an Engineering Simulation Model be Wrong?

Actually, that’s a badly formed question. As if by understanding the ‘how’, we might assume that those errors could be resolved, wrong! Point being, that regardless of effort, the model will always be wrong to a varying degree. But still, what are the possible sources of error that could be addressed (Epistemic uncertainty) and ones that will always be unknowable (Aleatoric uncertainty)?

Here the authors rely on their 3D discretised CAE background to propose the following, admittedly incomplete, list:

- Epistemic (that which you could control, but might not):

- Mesh. An art to many, but a science nevertheless. Too coarse in regions of high spatial gradient, then some level of numerical diffusion will be imposed, especially for convective CFD. Same is true in the temporal domain.

- Material properties. Be they related to thermal conductivity, Young’s modulus, Poissons’ ratio, temperature dependent coefficient of viscosity, magnetisation etc., the material properties assumed will likely not be those that are used in product.

- Boundary Conditions. Classically those conditions that are set at the periphery of your computational domain linking the space that you model with the surrounding space that you do not. Whether it’s an assumed constant Pressure BC, a constant Heat Transfer Coefficient, a constant Temperature, chances are that your decisions as to where to impose those BCs do not match their applicability in reality.

- Input Conditions. All the other model definition parameters: Powers, Flow Rates, Temperatures, Currents… You’ll be informed of these values by the other engineering disciplines within your organisation or you’ll have to assume them from datasheet values, but that’s no guarantee that they’ll be ‘correct’.

- Numerical Solver Parameters. Energy Conservation is a concept that spans all physics. How conserved should a solution be before it’s considered ‘complete’? Might one just judge the convergence of KPIs to determine acceptable completion of a solution? You could solve on for a few 100 more iterations, but what return would you get?

- Aleatoric (Unknowns and little to nothing you can do about it):

- Production Processes. You can document your design to the Nth degree, but when it’s manufactured there’s no guarantee of retention of the original design intent. More true for non-functional aspects of the product that might not be specifically tested, but might have a bearing on long term reliability.

- Degradation. Throughout the lifetime of your product or process, its functional and non-functional capabilities will degrade. How and by how much is a function of its operating environment which itself may be unknowable or, if presumed, be taken as an assumed probability distribution which will likely be wrong.

- Material properties. Despite the Epistemic uncertainties you think you can address, as soon as you divest responsibility to a CEM there’s less guarantee that they, or rather their material suppliers, will conform to, know or even indicate the exact material properties that they are using.

As indicated, not a complete list by far, but hopefully enough to dispel any misconceptions that an engineering simulation model might ever be ‘perfect’, ‘real’, ‘accurate’ or ‘exact’. But still, it might be Useful…

What is Meant by ‘Useful’?

There are various definitions of ‘Utility’, one of the better ones is: ‘functional rather than attractive’. For something to be Useful it should demonstrate Utility. It should answer a question that has been posed of it. For Engineering Simulation this most often falls into 2 camps:

- Given design architectures/topologies A, B, C, D which one best satisfies its requirements and constraints?

- Given a proposed design, does it pass validation against its design criteria KPIs?

For 1), a comparative approach to simulation enabled design, what are the accuracy requirements to be able to answer that question? The accuracy needs only to be sufficient to indicate which design is better than the others. For shift-left conceptual design, where radically different proposals are considered before such time as any detailed design commitment is made, the potentially large deltas in their performance are such that the requirement for accuracy is reduced (the error bars simply need to be << than the variations in the performance of the proposed designs). Pareto front approaches can also be used to determine the relative trade-off between competing design parameters.

For 2), a go/no-go gate-based workflow validation stage for a particular design KPI requires a corresponding refinement in accuracy, or at least a defendable confidence in that accuracy. This is when you have to sacrifice solution time for assumed accuracy. It’s arguably the most common application of simulation as well as being the most expensive in terms of time and effort.

Although much industrial effort is being invested in 2), more utility is available in 1), and for less effort. If you’re using engineering simulation to validate an already detailed design, you’re missing the point opportunity. Engineering simulation / virtual prototyping is a risk minimisation approach, best applied early in the design process when changes are cheap and easy to accommodate.

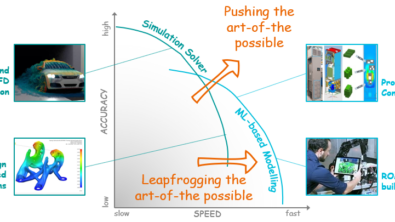

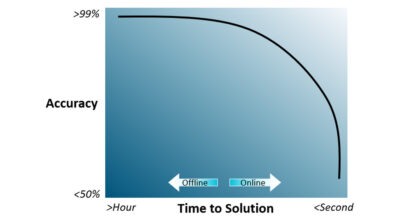

Solution Time vs. Solution Accuracy

None of the above would be relevant were it not for the fact that ‘accurate’ (3D discretised CAE) engineering simulation solutions are slow, often measured in hours and days. Although there is much celebration of Computer Aided Engineering (CAE) tools and their support of HPC compute infrastructures enabling the solution of models with now billions of mesh cells, as well as much admiration of the pretty pictures that are generated, again there is an assumption of absolute accuracy being solely correlated to utility.

Sure, there are certain industrial applications where shaving of 0.01% off of a KPI via refined simulations will have $Ms benefit (cf. F1, aerospace etc.) but these account only for a small % of the total available market for Engineering Simulation solutions and associated workflows.

For decades there has been a demonstrable CAE vendor roadmap of accelerated 3D CAE solutions without compromising ‘accuracy’ (rather ‘fidelity’). Recent advances in real-time GPU enabled physics solvers have attacked the accuracy vs. simulation speed balance from a hardware perspective. But still, there’s a swathe of use-cases that could benefit from ‘some simulation being better than none’ and that simulation need not be 99%+ accurate. To conform to engineering timescales, it might be better to pose the questions of your CAE tool ‘I need results within 8 hours, provide them and indicate how accurate the results are’. Both time and accuracy are precious commodities and their often exclusive relationship is something that needs to be better curated by CAE vendors.

Regardless of this two-pronged approach, too little attention has been applied to George Box’s pragmatic philosophy:

“Remember that all models are wrong; the practical question is how wrong do they have to be to not be useful.”

As and when CAE tools might provide an automatic indication of error (as difficult or impossible as that might be), support a user selected trade-off between accuracy and simulation speed, provide guidance as to how to minimize those errors and enable consideration of those error requirements in conjunction with the accuracy requirements of the intention of the simulation, then we’ll have got that much closer to satisfying the true needs of Engineering Simulation.

[1] Box, G. E. P.; Draper, N. R. (1987), Empirical Model-Building and Response Surfaces, John Wiley & Sons.

Further Reading

- Engineering Simulation Part 2 – Productivity, Personas and Processes – “Make mistakes early and often, just don’t make the same mistake twice”

- Engineering Simulation Part 3 – Modelling – “‘Everything should be made as simple as possible, but not simple“