Building a time-series foundation model – Transcript

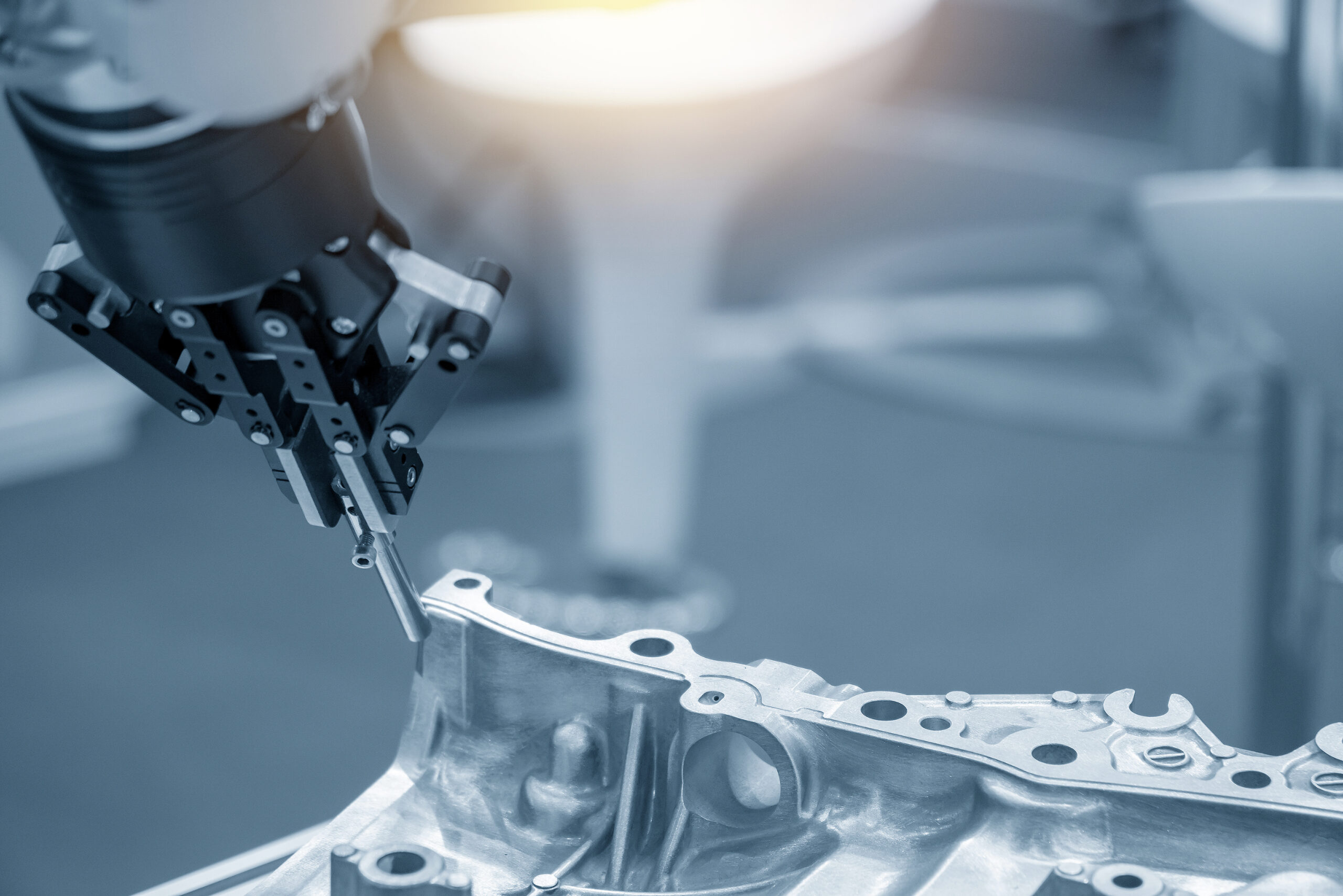

Artificial intelligence is rapidly becoming a core technology for both consumers and businesses, but bringing AI into the design process or onto the shop floor presents a unique set of challenges. With a wide variety of tasks and data types, creating AI models to handle industrial tasks is far more difficult than creating simple chat interfaces. This is where foundation models come in.

To learn more, check out the full episode here, or keep reading for a transcript of that conversation.

Spencer Acain: Hello and welcome to the AI Spectrum podcast.

I’m your host, Spencer Acain. In this series, we explore a wide range of AI topics from all across Siemens and how they’re applied to different technologies. Today, I am joined by Dr. James Loach, head of research at Senseye Predictive Maintenance. Welcome, James.

James Loach: Yeah, hi, Spencer. Very happy to be here again.

Spencer Acain: Yeah, it’s great to have you back here with us again. So before we go any deeper, could you just give us a quick rundown on who you are and what foundation models are? And why are they important?

James Loach: Yes, just a very brief background. My name is Dr. James Loach. I’m head of research at Senseye Predictive Maintenance, and I have been since the early days of the organization, so about eight years now, from its pre‑Siemens days through the last three years as part of Siemens. Before that, my background was in particle physics. As for foundation models, you can think of them as a certain kind of machine learning model that has come to prominence in recent years. You typically train them once on large amounts of data, and then you’re able to apply them in different situations without any extra training, or with only a small amount of specialization. That contrasts with more traditional machine learning models, which tended to be trained for particular tasks: every time you have a new task, you train a new model for that particular thing, and this is slow and expensive. So that’s fundamentally what they are, and they’ve come to prominence in recent years through foundation language models, which underlie ChatGPT and all of the tools we’re familiar with. So that’s one particular type of foundation model, one that handles text.

Spencer Acain: Yeah, I can really see how that would be useful. Not needing to retrain for every single task must be a huge benefit. And I guess that leads into the next question: at Senseye, you’ve developed a time series foundation model, is that correct? Could you tell us what that is and how it’s different from the language models you were just talking about?

James Loach: Yeah, so we do have such a model. It’s called Chronicle, and it’s a time series foundation model for forecasting time series. At Senseye, it has its origins in the very early days after the ChatGPT moment, when we first became aware of the power of that kind of model. We noticed, as did quite a number of people at that time, that there’s a very strong analogy between what language models do and what a time series forecasting model would do. That’s not completely obvious on the surface, because most people’s experience of a language model is talking to a chatbot, a back‑and‑forth conversation. But the models underlying those chatbots are fundamentally models for extrapolating text. They’re given some text, and they continue it in a plausible way based on everything they’ve read on the internet and all of their training. That shows up as a chatbot because one application of text continuation is completing a script: the user writes one part, the question they’re asking, and the language model completes the kind of answer a helpful assistant would give to such a question. So the underlying model is basically a text extrapolation model, and we experience it as a model writing a script that presents itself as a chat interaction. These models work by breaking text down into tokens, which are words or small pieces of words, and then using a transformer architecture that, given a sequence of tokens, predicts what the next tokens will be.
So this is effectively a kind of text forecasting process, and you can immediately see the analogy with time series forecasting: a time series, like text, is a sequence, not of words but of data points, and continuing that sequence is equivalent to forecasting the time series forward. So we noticed that analogy, looked around at what people were doing at the time, and found it not good enough for our particular use case. That started a long process: initially we tried to train our own foundation models, and over time we ended up adopting a base model developed by somebody else, the AWS Chronos model, and specializing it to Senseye use cases in very particular and interesting ways. Over about 18 months we built a forecasting system based on AWS Chronos that performs spectacularly well. It’s a huge improvement on our previous time series forecasting, and it has been in the hands of customers, doing useful things, for about six months now. So it’s a nice story.
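To make the text/time series analogy concrete, here is a toy sketch of how a real-valued series can be turned into a sequence of integer tokens that a transformer could then be trained to continue, exactly as a language model continues text. It follows the general idea described for Chronos (scale the series by its mean absolute value, then quantize into uniform bins), but the bin count, value range, and function names below are illustrative assumptions, not the settings of Chronos or of Senseye’s model.

```python
import numpy as np

# Illustrative settings (assumptions): far coarser than a real model's vocabulary.
N_BINS = 100
LOW, HIGH = -5.0, 5.0


def tokenize(context, n_bins=N_BINS, low=LOW, high=HIGH):
    """Turn a real-valued series into integer tokens plus the scale needed to undo it."""
    context = np.asarray(context, dtype=float)
    # Mean scaling: divide by the mean absolute value so that series with very
    # different magnitudes (amps, degrees, rpm) share one token vocabulary.
    scale = float(np.mean(np.abs(context)))
    if scale == 0.0:
        scale = 1.0
    scaled = context / scale
    # Uniform quantization: n_bins equal-width bins on [low, high]; values
    # outside that range are clipped into the first or last bin.
    edges = np.linspace(low, high, n_bins + 1)
    tokens = np.clip(np.digitize(scaled, edges) - 1, 0, n_bins - 1)
    return tokens, scale


def detokenize(tokens, scale, n_bins=N_BINS, low=LOW, high=HIGH):
    """Map tokens back to values using bin centers, then undo the scaling."""
    edges = np.linspace(low, high, n_bins + 1)
    centers = (edges[:-1] + edges[1:]) / 2
    return centers[np.asarray(tokens)] * scale


# Usage: a motor-current-like series becomes a short "sentence" of tokens.
series = [10.0, 12.0, 11.0, 13.0]
tokens, scale = tokenize(series)
approx = detokenize(tokens, scale)  # within half a bin width (times scale) of the original
```

Because of the mean scaling, the same shape at a different magnitude maps to the same tokens, which is part of what lets one model serve many kinds of sensor signals. A forecast is then produced by predicting future tokens and mapping them back with `detokenize`.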

Spencer Acain: I mean, that’s an interesting analogy, comparing forecasting words with forecasting data points in a time series. You mentioned that this is already in the hands of customers. What does the time series foundation model bring to Senseye? Why is it such a leap, and why did you take those 18 months to develop it?

James Loach: First of all, let me describe the use case it’s addressing. It’s a kind of prognostic functionality where we forecast against thresholds. The application does a whole lot of things that don’t involve users setting thresholds, and the aim is to have as little of that as possible, but it’s a very common use case that people know: particular quantities you record from machines, like the current on your motor, need to stay between certain limits. If that current goes above reasonable limits, this indicates a problem. So one part of our prognostic functionality is that users can tell the system what values constitute problems, and then we forecast the time series routinely and give people advance warning if it looks like the time series is about to hit those dangerous values.
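The threshold use case can be illustrated with a small sketch. It assumes the forecaster returns several sampled future paths (probabilistic forecasters such as Chronos can produce sample paths), and it reports the first forecast step at which enough of those paths fall outside the user’s limits. The function name and the `min_prob` parameter are hypothetical, chosen for illustration; this is not Senseye’s implementation.

```python
import numpy as np


def threshold_warning(forecast_samples, lower=None, upper=None, min_prob=0.5):
    """Return (first_step, probability) where the forecast is likely outside limits, else None.

    forecast_samples: array of shape (n_samples, horizon), one sampled future path per row.
    Steps are counted from 0 (the first forecast step). min_prob is a hypothetical
    alerting parameter: the fraction of paths that must be outside the limits.
    """
    samples = np.asarray(forecast_samples, dtype=float)
    outside = np.zeros_like(samples, dtype=bool)
    if upper is not None:
        outside |= samples > upper
    if lower is not None:
        outside |= samples < lower
    # Per step, the fraction of sampled paths that violate a limit.
    prob = outside.mean(axis=0)
    hits = np.flatnonzero(prob >= min_prob)
    if hits.size == 0:
        return None
    step = int(hits[0])
    return step, float(prob[step])


# Usage: three sampled paths of motor current over a four-step horizon, upper limit 4.5.
paths = np.array([[1, 2, 3, 4], [1, 2, 5, 6], [1, 1, 2, 7]])
warning = threshold_warning(paths, upper=4.5)
```

Working with probabilities over sample paths, rather than a single point forecast, lets the warning reflect how confident the model is that the limit will be crossed.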

It’s a very clear, clean forecasting use case that in practice is quite useful to a lot of our users. But the technology itself can be applied quite widely, and there are two additional examples I can mention. One is anomaly detection. Anomalies are unusual behavior, something you didn’t expect. To capture what you expected, you forecast what you should have seen over the past period, compare that to what you actually saw, and then flag to the user whether there’s a discrepancy. That’s another potential use case, but it involves some changes from what we’ve done already. One property of foundation models is that they tend to be rather large, with many parameters, and expensive to run. The model we have was chosen as a base model, then trained and distilled to suit Senseye so that we could run it cheaply enough for our particular use case. We do more anomaly detection than forecasting, so if we wanted to use the model for anomaly detection, we’d look into shrinking the model size or using different architectures so that it’s lighter and cheaper.
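The forecast-then-compare idea for anomaly detection can be sketched as follows, assuming the forecast for a past window was made only from data before that window and is available as sampled paths. Observed points that fall outside a central quantile band of the forecast are flagged. The function name and the 5%/95% band are illustrative assumptions, not a description of Senseye’s method.

```python
import numpy as np


def flag_anomalies(forecast_samples, observed, q_low=0.05, q_high=0.95):
    """Flag observed points that fall outside the forecast's central quantile band.

    forecast_samples: shape (n_samples, window), forecast of the window made from
    data *before* it. observed: shape (window,), what was actually recorded.
    q_low / q_high: illustrative band edges (assumptions); tune for the alert rate you want.
    """
    samples = np.asarray(forecast_samples, dtype=float)
    observed = np.asarray(observed, dtype=float)
    # Per time step, the lower and upper edges of what the model expected.
    lo = np.quantile(samples, q_low, axis=0)
    hi = np.quantile(samples, q_high, axis=0)
    return (observed < lo) | (observed > hi)


# Usage: 21 sampled paths spanning -1..1 at every step, compared with four observations.
paths = np.repeat(np.linspace(-1.0, 1.0, 21)[:, None], 4, axis=1)
flags = flag_anomalies(paths, [0.0, 0.5, 2.0, -3.0])
```

Here the forecast itself does the work of defining "normal", which is why a cheaper, smaller forecaster matters: anomaly detection would run this comparison far more often than threshold forecasting does.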

Another use case is time series matching. One reason models built on the transformer architecture work so well is that they form very high‑quality internal representations of the data. A language model learns a rich internal model of the world from everything it reads, and a time series foundation model does something similar: it is exposed to huge amounts of data and builds a deep, intuitive representation of time series in its internal weights. We use the term embeddings for this: you give the model a piece of time series data and look at how it’s represented internally at a particular stage. That group of numbers represents, in a deep way, the structure of that time series, and time series that are structurally similar have similar embeddings. So we can use these internal representations to tell which time series are similar to one another. Very similar technology is used with language models for text retrieval in RAG (retrieval-augmented generation) systems, which turn input text into numerical representations and spot passages with the same meaning even if the words differ.

Using embeddings from time series foundation models lets us automatically tell which pieces of time series are similar in important ways, and we can use that for many practical tasks. One key task at Senseye is failure matching. We record data from different machines connected to our maintenance management system, and we know when problems happened. We’ve collected the time series data leading up to those events, and as new data comes in, we want to compare it with that previous data and recognize what’s similar and what’s not, to tell whether the same kind of thing is happening again. Embeddings let us do this very well, so that’s another main use case we’re interested in: matching time series over time to add a slightly more sophisticated prognostic functionality that complements forecasting against thresholds.
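Failure matching with embeddings can be sketched as a nearest-neighbour search. This assumes you can already extract per-token hidden states from some layer of the model (how to do that depends on the model and is not shown). Here they are averaged into one vector per window, and new windows are compared with a library of windows recorded before past failures using cosine similarity. The function names and failure labels are hypothetical, and mean pooling with cosine similarity is one common choice, not necessarily the one Senseye uses.

```python
import numpy as np


def mean_pool(hidden_states):
    """Average per-token vectors, shape (n_tokens, d), into one embedding, shape (d,)."""
    return np.asarray(hidden_states, dtype=float).mean(axis=0)


def cosine_similarity(query, library):
    """Cosine similarity between one query vector (d,) and each row of library (n, d)."""
    q = query / np.linalg.norm(query)
    lib = library / np.linalg.norm(library, axis=1, keepdims=True)
    return lib @ q


def match_failures(query_embedding, library_embeddings, labels, k=3):
    """Return the k most similar past failure windows as (label, similarity) pairs."""
    sims = cosine_similarity(
        np.asarray(query_embedding, dtype=float),
        np.asarray(library_embeddings, dtype=float),
    )
    order = np.argsort(-sims)[:k]  # highest similarity first
    return [(labels[i], float(sims[i])) for i in order]


# Usage with toy 2-D embeddings; the failure labels are hypothetical examples.
library = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
labels = ["bearing wear", "misalignment", "mixed"]
top = match_failures([2.0, 0.1], library, labels, k=2)
```

This mirrors the retrieval step in RAG systems: similarity is measured between learned representations rather than raw values, so windows can match even when their levels or exact wiggles differ.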

So we have forecasting against thresholds, anomaly detection, and time series matching and indexing as our key use cases at the moment.

Spencer Acain: Well, James, thanks for that quick rundown of everything Senseye is applying these foundation models to. It sounds like you’re really getting a lot of mileage out of them, and it was a great move to bring them together. Unfortunately, that’s all the time we have for this episode. So once again, I’ve been your host, Spencer Acain, on the AI Spectrum podcast. Tune in again next time as we explore the exciting world of AI. Thank you.

Siemens Digital Industries Software helps organizations of all sizes digitally transform using software, hardware and services from the Siemens Xcelerator business platform. Siemens’ software and the comprehensive digital twin enable companies to optimize their design, engineering and manufacturing processes to turn today’s ideas into the sustainable products of the future. From chips to entire systems, from product to process, across all industries. Siemens Digital Industries Software – Accelerating transformation.