How automakers can keep autonomous vehicles safe in bad weather

Today meets tomorrow – simulating the impact of harsh conditions on ADAS sensors

Recently, an OEM set out to modify its side-view mirror. The team was free to make any changes it deemed necessary, provided it preserved the design and position of the camera and its mount on the mirror. The challenge was to prevent a film of dirt or water from building up on the camera.

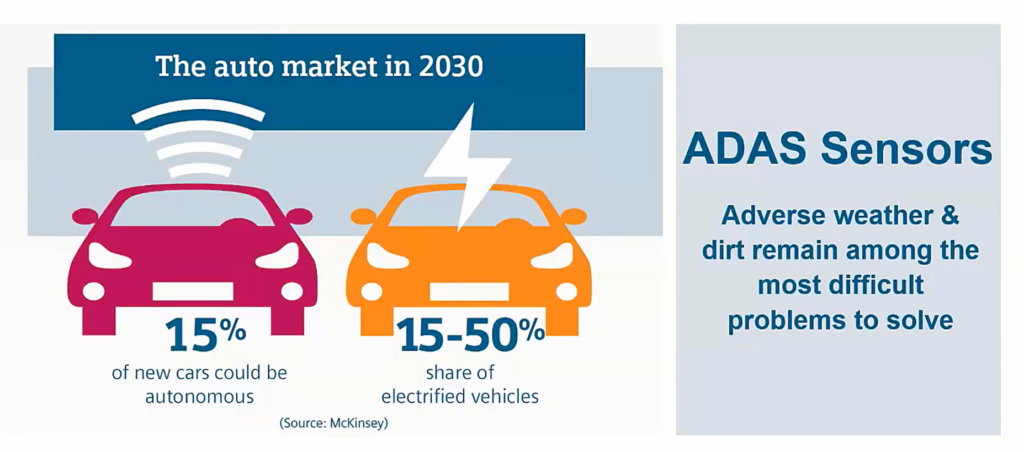

According to a McKinsey & Company report, by 2030, 15 percent of the new cars entering the auto market may be autonomous and 15-50 percent will likely be electrified vehicles (Figure 1). Although that is only a decade away, automakers still face monumental challenges. One in particular involves advanced driver assistance system (ADAS) sensors and their ability to function in adverse weather conditions or when dirt soils them.

Anyone who drives in harsh weather knows the all-too-familiar sight of rain, snow, road dirt and wintertime salt soiling the windshield. The safety of the vehicle depends on your ability to see, and the sensors in an autonomous vehicle must likewise be able to "see" at all times.

The last thing you want while driving is for your sensors to suddenly report a malfunction because of too much snow, rain or mud.

In the webinar Massive Verification and Validation of ADAS and Autonomous Driving Vehicles, one intriguing point concerned autonomous capability not only on dry days or in daylight but in harsh weather as well.

One simulation example showed a backup camera in which water affected what both a human driver and an ADAS sensor could see (Figure 2). In this example, it was difficult to see a person stepping into the parking spot behind the vehicle as it was backing in, putting that person's safety at risk. Such situations may sound familiar to anyone who has tried backing out of a parking spot on a rainy night, but they are unacceptable if we are to fully adopt autonomous vehicles.

Improving visibility for both the human eye and the software is a major challenge that must be addressed. Entering the requirements into a simulation and a comprehensive digital twin lets engineers investigate where the design needs improvement and ensure that whoever or whatever relies on the camera and sensors can make decisions with near-zero risk of harming anyone or anything.

By finding ways to limit water and dirt on the camera or sensor, engineers and design teams can improve the performance of the autonomous system itself. To check whether you have met the requirements, feed the simulation results into the software and verify that the design satisfies them.

Managing water and dirt

The first priority in aerodynamic design is the styling and performance of the vehicle itself, where the goal is to minimize energy use. This entails reducing drag, extending the driving range of electric vehicles and improving energy management, which includes enhancing cooling performance.

The second priority is managing the vehicle's acoustics, which can involve side mirror noise, open side window throb, sunroof buffeting, underbody noise or HVAC noise.

The final area of aerodynamic performance, and the one we will take a closer look at, is water management. This covers how water affects wiper and sensor performance as well as its effects on side view mirrors and cameras.

Water and dirt management involves so many factors that it is a complex problem, though not an impossible one. Multiphysics simulation is being used to analyze everything from a vehicle hitting a puddle to wipers clearing water. With increasing autonomous features, managing how sensors react to adverse conditions is a critical step on the path to full autonomy. To be useful, multiphysics simulation must capture the correct physics by accurately modeling air and water interactions, turn results around quickly enough to influence the design before the first prototype is built, and enable design exploration.

One of the most complex sensor problems involves the sensor behind the windshield, which is more likely to face head-on rain, snow and dirt as well as the sweeping windshield wipers. To simulate it and build its digital twin, you have to recognize that this sensor is affected not only by adverse conditions, such as multiple layers of precipitation forming a film, but also by the layers of glass in its way. The first simulation should establish a baseline of its performance under optimal conditions; design changes can then be made to see how each one affects that performance.

From there, the next challenge is creating a realistic simulation of the interaction between all of these aspects: the dispersed phase of the water, the external flow field, the fluid film modeled as 2D on the surface, complex three-dimensional motion and random influences such as wind.

With a comprehensive suite of multiphase models, you can run this simulation and build an accurate digital twin.

Simulating the windshield wiper

The physics of water on the windshield is complex and must be captured in the simulation. For instance, water appears not only as particles but also as rivulets, continuous films and pools, and it moves on from where it first lands. This means the sensors behind the windshield are not necessarily located where the primary impingement of droplets takes place. Instead, the secondary film and rivulets, and how they roll past the sensor as the wind or wipers push them off the windshield, are critical factors for both safety and the risk of sensor failure.

Simulating the wiper blade's back-and-forth motion seems straightforward, but in reality you have to make sure the blade slides along the curved windshield surface. This is accomplished with an overset mesh approach: a stationary background mesh around the car and moving overset regions around the wipers.

The simulation then uses mesh morphing, set up as follows:

- A small fillet close to the windshield and all wiper surfaces are defined as floating, meaning they move freely while remaining attached to the other surfaces.

- The windshield boundary in the overset region is defined as 'slide on guide surface', meaning the wiper slides on the guide surface, which is the windshield.

- The outer surface is set to slide 'tangential to surface', in this case the windshield.
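To make the 'slide on guide surface' idea more concrete, here is a toy Python sketch of the wiper kinematics only, with no flow, water or mesh involved. It assumes a hypothetical windshield modeled as a cylinder of radius R = 2 m, plus a hypothetical pivot position, blade length and sweep angles; the function names are illustrative and are not part of any NX or Xcelerator API. Each blade point is first laid out in a flat plane, then projected onto the nearest point of the guide surface, so the blade stays on the glass throughout the sweep.

```python
import math

# Hypothetical guide surface: a cylinder of radius R (meters) with its axis along y,
# standing in for a curved windshield. Real windshields are freeform CAD surfaces.
R = 2.0


def project_to_guide(x, y, z, radius=R):
    """Closest-point projection of (x, y, z) onto the cylinder x**2 + z**2 == radius**2."""
    r = math.hypot(x, z)
    if r == 0.0:
        raise ValueError("point lies on the cylinder axis; projection undefined")
    scale = radius / r
    return (x * scale, y, z * scale)


def wiper_blade(theta, pivot=(0.0, -0.5), length=0.6, n=7, radius=R):
    """Points along a straight blade rotated by theta (radians) about a hypothetical pivot.

    The blade is laid out in the plane z = radius (tangent to the cylinder at x = 0),
    then every point is snapped onto the guide surface so the blade stays in contact.
    """
    px, py = pivot
    points = []
    for i in range(n):
        s = length * i / (n - 1)  # distance from the pivot along the blade
        x = px + s * math.cos(theta)
        y = py + s * math.sin(theta)
        points.append(project_to_guide(x, y, radius, radius))
    return points


def sweep(theta_start, theta_end, frames):
    """Blade positions at evenly spaced angles: a crude stand-in for one wiper stroke."""
    step = (theta_end - theta_start) / (frames - 1)
    return [wiper_blade(theta_start + k * step) for k in range(frames)]


if __name__ == "__main__":
    for blade in sweep(0.2, 2.9, frames=4):
        tip = blade[-1]
        # The distance from the cylinder axis equals R, i.e. the tip lies on the glass.
        print(f"tip=({tip[0]:.3f}, {tip[1]:.3f}, {tip[2]:.3f})  "
              f"radius={math.hypot(tip[0], tip[2]):.6f}")
```

Radial projection is the simplest closest-point constraint for this shape, but it does not preserve the blade's length along the curved surface exactly; a production setup would need to account for that.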

Conclusion

This was a small example of the impact a comprehensive digital twin and simulation can have on meeting the most complex challenges of designing ADAS sensors for autonomous vehicles and managing the effects of rainwater and dirt.

A comprehensive digital twin and innovative software from the Xcelerator portfolio can pave the way toward advanced autonomous capabilities, greater reliability and applications in other industries such as aerospace.

The OEM mentioned at the beginning of this blog demonstrated this when it used tools such as NX, along with multiphysics simulation, to develop a design concept that accommodated the camera and mount while altering the side view mirror geometry.

Watch the full webinar: Reducing impact of rainwater on ADAS sensors by optimizing vehicle aerodynamics.