Autonomous Driving – Effective utilisation of machine learning

My last blog post introduced some of the key technology challenges in sensing and perception for autonomous driving. Now I would like to consider a few potential obstacles to realizing effective machine learning in highly assisted and autonomous vehicles. Specifically, the impressive rise in popularity of artificial neural networks has been accompanied by increased latency and power consumption. And despite what the academics and big chip vendors say, this problem is unlikely to be solved simply by applying more compute resources and larger data sets.

The idea of an artificial, intelligent automaton has inspired scientists for centuries. However, it was only with the convergence of mathematics, information theory and electronics that the idea of an artificial brain could emerge from medieval alchemy to engineering reality. Interest in the topic has waxed and waned ever since, as have cultural attitudes. In 1968, an AI-powered talking HAL was an ominous enough boogeyman in 2001: A Space Odyssey. Today, Amazon’s Alexa, powered by AI that Stanley Kubrick and Marvin Minsky likely couldn’t have imagined, is a cute Super Bowl ad celebrity (along with Jeff Bezos).

Presently, there is more data and more processing power than ever before. This raw material, combined with fundamental improvements in algorithmic methodologies, is helping to drive the multi-billion dollar race for autonomous driving.

However, as is always the case in technology development, advances in the field create new challenges, and academic enthusiasm invariably far outpaces engineering reality. In the case of autonomous driving, one of the most challenging issues, particularly with the current trend towards deep convolutional neural networks (CNNs), is increased latency and power consumption. The convolutional layers in CNNs are an effective way of decomposing image data into machine-readable features, which makes the learning process more effective, but at considerable computational expense. This matters especially in autonomous driving, where large GPU-based processing solutions may prove to be commercially problematic.
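To give a feel for that computational expense, here is a minimal sketch that counts the learnable parameters and multiply-accumulate operations (MACs) of a single standard 2D convolutional layer. The function name `conv2d_cost` and the example layer dimensions are illustrative assumptions, not figures from any particular automotive network.

```python
def conv2d_cost(in_h, in_w, in_ch, out_ch, kernel, stride=1, padding=0):
    """Return (out_h, out_w, params, macs) for one square-kernel conv layer.

    Hypothetical helper for back-of-envelope sizing only.
    """
    # Standard output-size formula for a strided, zero-padded convolution.
    out_h = (in_h + 2 * padding - kernel) // stride + 1
    out_w = (in_w + 2 * padding - kernel) // stride + 1

    # Each output channel has one kernel per input channel, plus one bias.
    params = out_ch * (in_ch * kernel * kernel + 1)

    # Every output pixel of every output channel needs one MAC per weight.
    macs = out_h * out_w * out_ch * in_ch * kernel * kernel
    return out_h, out_w, params, macs


if __name__ == "__main__":
    # Assumed example: a 224x224 RGB frame through 64 filters of size 3x3.
    out_h, out_w, params, macs = conv2d_cost(224, 224, 3, 64, 3, padding=1)
    print(f"output {out_h}x{out_w}, {params} parameters, {macs} MACs")
```

The point of the sketch is the ratio: a layer with fewer than two thousand weights still needs tens of millions of MACs per frame, because the same small kernel is applied at every pixel position. Stack many such layers at camera frame rates and the processing budget grows quickly.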

The technical operation of neural networks is simple in conception, but complex in realization and too detailed to describe here. (The free online ebook by Y Combinator research fellow Michael Nielsen provides an excellent introduction.) However, there are two related features that drive the latency and power issues:

- The size of the architecture, which represents the numerical model relating the data inputs with labelled outputs.

- The labelled training data used to form that model, providing the context of what is learnt.

In the past, neural networks were typically limited to very specific pattern recognition problems. Such networks, created for specific cases and using expert-derived domain knowledge, tend to degrade in performance when test cases differ from the small set of training data (for example, images collected in different lighting and weather conditions, and with different sensors, or sensors with different noise models). That is, such networks lack the generality to remain robust under different sensing conditions.

However, in recent years, with the availability of big data and massively parallel processing hardware such as GPUs, it has become feasible to train networks consisting of many millions of neurons (with the largest consisting of hundreds of billions) organized into many layers. Expert knowledge is being replaced with large sets of training data and so-called ‘deep’ neural networks.

The concept of experts ‘tuning’ a network has evolved into one of using more data and, as an academic at the Intelligent Vehicle Symposium in 2017 suggested, “throwing a bigger network at the problem.” Essentially, the trend is to replace expert user input with brute-force processing. However, more processing and more data generally introduce higher latency and greater power consumption, two factors that are incompatible with the concept of commercially viable autonomous driving.
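A rough way to see why a bigger network costs more is to treat inference as a fixed amount of arithmetic executed on hardware with a fixed throughput and power draw. The sketch below does exactly that; the function `inference_budget` and all of the numbers passed to it are hypothetical, and real systems are further limited by memory bandwidth and utilisation, which this ignores.

```python
def inference_budget(macs_per_frame, macs_per_second, power_watts):
    """Estimate per-frame latency (s) and energy (J) for one inference.

    Simplifying assumption: the processor sustains `macs_per_second`
    at a constant `power_watts` for the whole frame.
    """
    latency_s = macs_per_frame / macs_per_second
    energy_j = power_watts * latency_s
    return latency_s, energy_j


if __name__ == "__main__":
    # Assumed figures, for illustration only.
    throughput = 1e12   # MACs per second the processor sustains
    power = 50.0        # watts drawn while processing

    for macs in (10e9, 20e9, 40e9):  # progressively "bigger" networks
        latency, energy = inference_budget(macs, throughput, power)
        print(f"{macs:.0e} MACs -> {latency * 1e3:.1f} ms, {energy:.2f} J per frame")
```

Under these assumptions, doubling the network doubles both the latency and the energy spent on every frame, which is the trade-off that brute-force scaling runs into in a vehicle.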

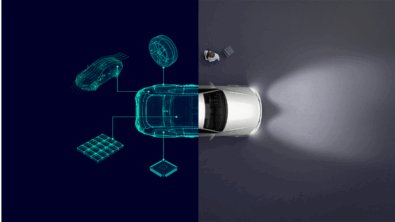

At Mentor Graphics, we have taken inspiration from the feature extraction process for convolutional neural networks. Within our automotive applications, we use multiple sensors with disparate physical and spectral capabilities to provide multi-modal input vectors. Using such a multi-sensor system, we are able to infer physical states which are otherwise unobservable.
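As a generic illustration of what a multi-modal input vector can look like (not a description of Mentor's implementation), the sketch below scales raw readings from several sensors into comparable ranges and concatenates them in a fixed order. The sensor names, scale factors and the function `build_input_vector` are all assumptions made for the example.

```python
def build_input_vector(readings, scales):
    """Concatenate per-sensor readings into one normalised feature vector.

    readings: dict mapping sensor name -> list of raw measurements
    scales:   dict mapping sensor name -> full-scale value for that sensor
    Sensors are visited in sorted name order so the layout is deterministic.
    """
    vector = []
    for sensor in sorted(readings):
        scale = scales[sensor]
        # Divide by the sensor's full-scale value so modalities are comparable.
        vector.extend(value / scale for value in readings[sensor])
    return vector


if __name__ == "__main__":
    # Hypothetical readings: pixel intensities, ranges in metres, speed in m/s.
    readings = {
        "camera": [128.0, 255.0],
        "lidar": [12.5, 40.0],
        "radar": [10.0],
    }
    scales = {"camera": 255.0, "lidar": 100.0, "radar": 100.0}
    print(build_input_vector(readings, scales))
```

A single vector like this lets a downstream model weigh evidence from sensors with different physical principles together, rather than processing each modality in isolation.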

The next blog post will expand on this process and outline our strategy for incorporating effective AI into DRS360, our autonomous driving sensor fusion platform.
