Developments in sensing and information fusion for achieving full level 5 autonomy

Even though autonomous driving has been a topic of interest for over half a century, there is currently no consensus on the exact technology that will form the basis of a driverless vehicle. Will future driverless vehicles be automatons which simply follow a set of pre-programmed instructions? Or will such vehicles be autonomous in the sense of deriving their own instructions based on a rule set? Sensor system requirements will differ depending on which option prevails. The first uses sensors to monitor the precision with which a vehicle follows instructions, while the second uses sensors to determine which actions to undertake.

In a series of blog posts to come, I plan to explore one of the key challenges that’s common to both of these schemes: how to precisely and confidently perceive the environment around the vehicle. This basic act is simple in its objective but remarkably difficult to achieve.

Fully autonomous driving is generally regarded as one of the key technological challenges of the first decades of the 21st century. It is widely expected that realizing fully autonomous cars will disrupt the way transportation is integrated into modern society. Yet autonomy is not a new topic; projects ranging from autonomous aircraft to robotics and even road vehicles have existed for decades. My first position after my undergraduate degree, at QinetiQ, was on the test and evaluation of integrated navigation systems for autonomous guidance, and that was back in 2004. In fact, autonomous guidance in road vehicles has been an active field of research in both industry and academia since at least the 1950s (and possibly before).

So why is there now such an interest in autonomous vehicles? Society is increasingly seeing transportation as a service (TaaS) that we rent rather than a function that we own. Already a large portion of new cars are purchased on a finance scheme, which effectively sees the customer ‘renting’ the vehicle for a period of years. As autonomy will play an increasing role in TaaS, it is likely that this societal change is pushing for the development of technology which will enable fully autonomous driving.

There are many proposals for the technology configurations that will enable effective perception for autonomous vehicles, and inevitably many different configurations will lead to success. Most systems will almost certainly include multiple disparate sensors with different operating characteristics (both physical and spectral) to observe different aspects of the environment. Observation and perception are made all the more difficult by the fact that present-day automotive infrastructure, within the vehicle and beyond, has been developed around human drivers. Automotive sensors are in general quite limited and coarse, recording a highly imperfect analogue of the real world in a discrete form. This is why fully autonomous driving is unlikely to be achieved simply by combining existing technology. Further research, in collaboration with development, is not only desirable but essential.

Sensor signal processing will estimate the physical state of objects in the environment; machine learning will help to enable semantic classification of an object; sensor and information fusion will allow us to integrate the data/observations/information from different sources in order to understand what the object is and how we expect it to behave in the future. Further aspects such as collaboration with other agents (infrastructure and other vehicles) will also play a significant role, as will susceptibility and vulnerability to security issues.

The primary aim of this series of blog posts will be to explore some of these technologies in more detail, including:

- Sensors – challenges and opportunities: Sensors provide discrete representations of the analog physical world. Autonomous vehicles must then use those sensor signals to pick out and represent objects within torrents of digital data. Here I will discuss different sensing modalities, the requirements for sensors, high-resolution surround-view radar technology and the role of sensors in the infrastructure.

- Machine learning: In the context of autonomous driving, machine learning offers the potential to characterize recorded information according to some statistical property, usually by optimizing the mapping between labeled inputs and outputs. For most autonomous vehicle sensing applications, machine learning is used to detect and classify objects in the environment. Here I will discuss the challenges of running computationally demanding algorithms on low-power embedded hardware.

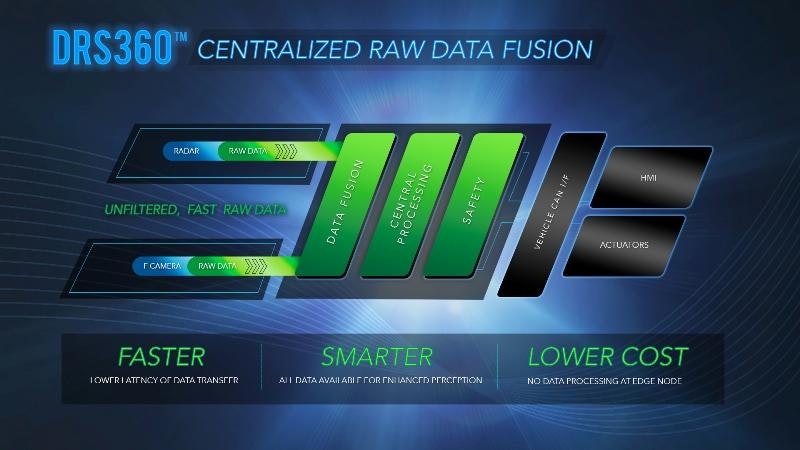

- Sensor fusion: In the most general sense, multi-sensor data fusion allows us to reduce the uncertainty of physical state estimates (e.g. object location or orientation) and to estimate physical properties which might otherwise be unobservable (e.g. using stereo cameras to estimate range). I will consider the challenges of fusing raw sensor data, discuss the role of information-theoretic gain and loss, and attempt to understand the role of both traditional and sub-optimal filtering algorithms in autonomous vehicles.
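To make the sensor fusion point a little more concrete, here is a minimal Python sketch of my own, not a description of any production system. It assumes a rectified stereo pair, for which range follows from `Z = f * B / d`, and it assumes two independent range estimates with Gaussian errors, which it combines by inverse-variance weighting (the one-dimensional, static special case of a Kalman update). The names `Estimate`, `stereo_range` and `fuse`, and all of the numbers, are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float      # estimated range in metres
    variance: float  # uncertainty of the estimate in metres squared


def stereo_range(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Range from a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Inverse-variance weighted fusion of two independent estimates."""
    w_a = 1.0 / a.variance  # more certain estimates get larger weights
    w_b = 1.0 / b.variance
    variance = 1.0 / (w_a + w_b)
    mean = variance * (w_a * a.mean + w_b * b.mean)
    return Estimate(mean, variance)


# Hypothetical numbers: a coarse stereo-camera range and a sharper radar range.
camera = Estimate(
    mean=stereo_range(focal_px=1000.0, baseline_m=0.5, disparity_px=10.0),
    variance=4.0,
)
radar = Estimate(mean=49.0, variance=1.0)
fused = fuse(camera, radar)
print(fused)
```

With these made-up inputs the stereo pair reports 50 m, and the fused estimate sits at roughly 49.2 m with a variance of about 0.8 m², which is lower than that of either sensor alone. Real systems have to cope with correlated errors, biases and time alignment, none of which this sketch attempts.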

I invite you to follow me on LinkedIn.