Redefining Verification Performance (Part 2)

In my last blog, I gave a few examples of different ways to think about getting more work done: finding solutions that increase the amount of work accomplished per cycle, instead of just taking a brute-force approach to the problem. Before I talk about advanced verification solutions, I want to talk about why performance even matters.

First, we all intuitively know that the sooner we find a bug, the cheaper it is to fix. Doug Josephson and Bob Gottlieb attempt to quantify this notion in their chapter “Silicon Debug,” from the book Advances in Electronic Testing: Challenges and Methodologies (Springer, 2006). Figure 1 summarizes their findings in terms of the relative cost of finding bugs within a typical design cycle. Notice that a functional bug that prevents us from achieving first silicon success can cost 10,000X or more to fix compared with the same bug found during the initial design phase.

Obviously, speed, accomplishment, efficiency, and quality of results all matter for getting more work done, finding bugs sooner, and thus reducing cost.

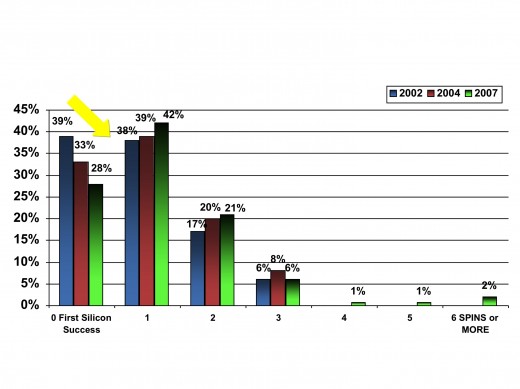

Let’s see how the industry as a whole is doing in achieving first silicon success. Figure 2 shows the 2002 and 2004 Ron Collett International functional verification studies and the late 2007 FarWest Research functional verification study. You can see a continual downward trend in achieving first silicon success.

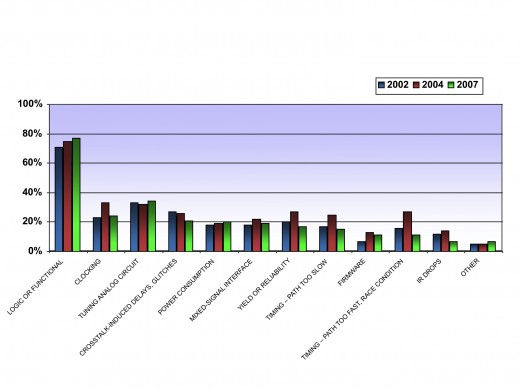

Figure 3 lists the types of bugs that caused a respin; functional bugs are the largest contributor.

So this is the state of the industry today. But what about tomorrow’s designs? What additional performance will be required to meet tomorrow’s challenges? Will brute-force approaches to achieving verification performance really enable us to get more work done?

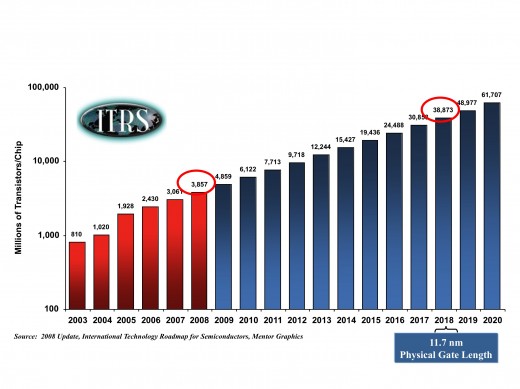

Figure 4 shows the International Technology Roadmap for Semiconductors’ projected growth of transistors on a chip. Let’s focus on the ten-year span from 2008 to 2018.

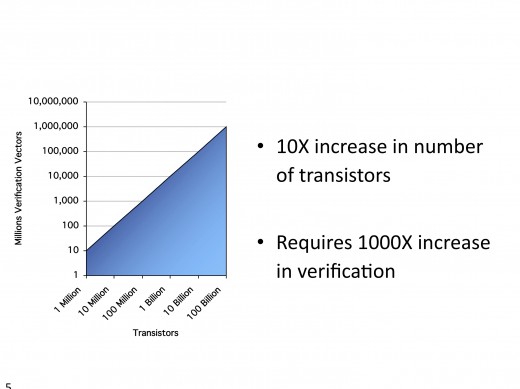

You can see that, within ten years, there is about a 10X increase in the number of transistors on a chip. Now obviously, not everyone will be creating behemoth designs that take advantage of all the available transistors in 2018. Yet, for the sake of argument, it is interesting to calculate the theoretical maximum increase in verification effort that would be required to verify a large design in 2018 compared to 2008, as shown in Figure 5. The verification effort grows at a double-exponential rate with respect to the Moore’s Law curve. Hence, if the number of transistors per chip increases 10X between 2008 and 2018, then the verification effort would increase 1024X.
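The post does not spell out the formula behind the 1024X figure. One reading that reproduces it, and this is an assumption on my part, is that the effort multiplier is 2 raised to the transistor growth factor, so a 10X growth gives 2^10. The sketch below uses a hypothetical helper, `effort_multiplier`, to make that arithmetic explicit and to contrast it with linear scaling. Treat it as a rough upper-bound illustration, not a calibrated model.

```python
def effort_multiplier(transistor_growth: int) -> int:
    """Hypothetical model (an assumption, not stated in the post):
    verification effort scales as 2 ** (transistor growth factor)."""
    return 2 ** transistor_growth


if __name__ == "__main__":
    growth = 10  # roughly 10X more transistors from 2008 to 2018, per Figure 4
    print(f"Linear scaling would need {growth}X the effort")
    print(f"Assumed exponential scaling needs {effort_multiplier(growth)}X the effort")
```

Under this assumed model, even modest growth in design size quickly outruns anything that adding people or machines can cover.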

Obviously, verification performance matters! Certainly, we as an industry can’t afford a 1000X increase in the size of verification teams. Nor will brute-force approaches to verification scale.

In my next blog, I’ll discuss ideas for improving verification performance.
