How AI helps bridge the gap between the real and virtual world

In today’s world, the presence of artificial intelligence in products, services and software is starting to seem more like a foregone conclusion than a next-generation revolution. However, there are still areas where AI can drive truly groundbreaking innovation, and human-computer interaction is one of them. The way we interact with computers has remained largely the same over the last 70 years or more, even though none of the most common input methods, such as the keyboard, mouse or touchscreen, capture the full range of ways humans interact with their environment. Now, thanks to advances in AI-driven natural language processing, virtual reality (VR) and augmented reality (AR), the possibility of operating a computer as naturally as we interact with another person is starting to take shape.

One of the first areas where AI has risen to prominence for end users is natural language processing, the underlying technology that enables voice recognition and digital assistants. While this use case has become relatively common in the consumer space, intuitive voice control of professional applications, such as mechanical engineering and design software, has remained largely out of reach. The difficulty lies in the sheer number of potential actions, which goes far beyond single button presses or easily defined behaviors. For a user to drive software of this caliber with voice alone, the computer would need to understand not just the commands, but also the part being worked on. By training the AI on user actions as they work, the system learns complete command sequences that can later be recalled with a simple voice command, eliminating the need to exhaustively train every possible command before deployment. To learn more about advanced voice command for mechanical design software, check out episode 1 and episode 2 of the AI Spectrum podcast on AI-driven smart human-computer interactions.

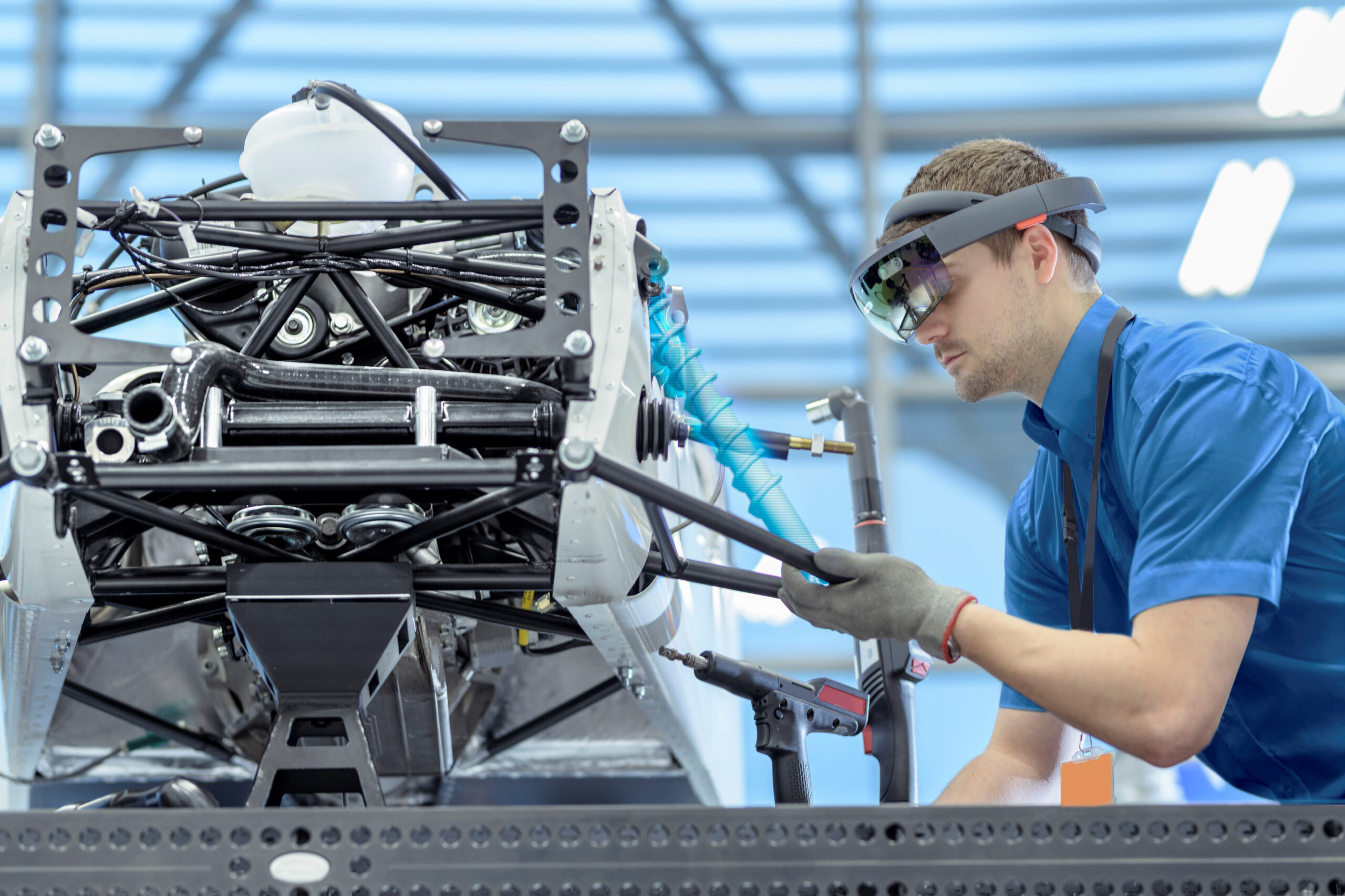

While impressive, voice command is only part of the puzzle when it comes to shifting the paradigm of human-computer interaction. Mechanical design is an inherently three-dimensional process, but neither a computer screen nor a mouse is well suited to working on 3D objects. This is where VR and AR come to the fore, since they allow users to interact with virtual parts in true 3D. To be of real use rather than a passing fad, however, VR must offer all the tools and functionality that traditional input methods provide. While voice command enables a wide range of capabilities in the virtual world, interacting with a part in full 3D is fundamentally different from interacting with a representation of that part on a flat screen. Simply grabbing and moving a part is one thing, but allowing users to cut, mold, shape, and draw naturally in 3D space requires a far greater degree of comprehension from the software than has traditionally been possible. Of course, breaking from tradition is exactly what AI excels at.

With its capacity to process data and learn, AI now allows computers to comprehend human actions to a degree never seen before. In VR and AR, this manifests as the ability to translate gestures and movements into actionable commands with enough precision for detailed professional work. As a result, it will soon be possible to merge the benefits of physical and computer models, allowing designers to work with virtual parts as intuitively as if they were handling a physical prototype, while retaining the computer model’s ability to be instantly manipulated, changed and analyzed without building a new prototype from scratch.

There is no doubt that the future of mechanical design, and of professional software as a whole, is starting to change. Engineers and designers are no longer tied to desks, sitting in front of screens and using a keyboard and mouse; they are now free to move around and interact with their creations in tangible and intuitive ways, using the full range of human capabilities. While adoption of such revolutionary technology will take time, the benefits it enables are sure to be worth the wait. Just as AI has reshaped so many other fields, it will also reshape the very way we interact with our software.

Siemens Digital Industries Software helps organizations of all sizes digitally transform using software, hardware and services from the Siemens Xcelerator business platform. Siemens’ software and the comprehensive digital twin enable companies to optimize their design, engineering and manufacturing processes to turn today’s ideas into the sustainable products of the future. From chips to entire systems, from product to process, across all industries. Siemens Digital Industries Software – Accelerating transformation.