Machine Learning and Artificial Intelligence … how does it work for simulation?

In this edition of our Engineer Innovation podcast, we hear from Chad Jackson at Lifecycle Insights in discussion with Siemens Digital Industries Software AI expert Justin Hodges, as they explore the role of machine learning for simulation engineers.

Justin breaks down what can seem a daunting area into its key benefits, real-life application examples and, most importantly, how you can adopt the methodology to see rewards in your simulation projects.

Whilst the clearest benefit is time saved, not only in the simulation process but potentially across the entire design cycle, that time can then be used to find even better outcomes and improvements for future product configurations.

Chad and Justin explore some real-life examples before diving into how to roll out this methodology in your organization.

- The role of machine learning in simulation

- How machine learning is offering time (and therefore cost) savings for engineers

- The possible applications for machine learning

- How machine learning can help optimize any physical testing

- The next steps for deploying machine learning in your organisation

Ginni Saraswati: Welcome to the Engineer Innovation podcast. In this episode, Chad Jackson from Lifecycle Insights is joined by Justin Hodges, an AI expert from Siemens Digital Industries Software. They discuss finding the right role for machine learning in simulation. Can we use machine learning to change the way simulation engineers work? Tune in to discover the possibilities for the future of simulation.

Chad Jackson: Welcome, everybody, to our podcast. Today, we’re going to be talking about machine learning being applied to simulation. And joining us is Justin Hodges, Simcenter Machine Learning Tech Specialist. Thanks for joining, Justin.

Justin Hodges: Thanks for having me. It’s always a pleasure to talk about AI.

Chad Jackson: There’s a lot to cover here. To get started, let’s talk a little bit about how these technologies have been used in this space recently. AI and machine learning have mainly gotten a lot of attention around IoT applications: getting streaming data off a product, collecting it, analyzing it, looking for anomalies, that type of thing. But increasingly, companies and solution providers are applying these technologies to software. I know, for example, that NX uses AI algorithms to predict what you want to use next. Today, we’re going to be talking about simulation and how AI and machine learning can be used there. So, Justin, can you give us a little bit of orientation on what it looks like when you use machine learning in simulation?

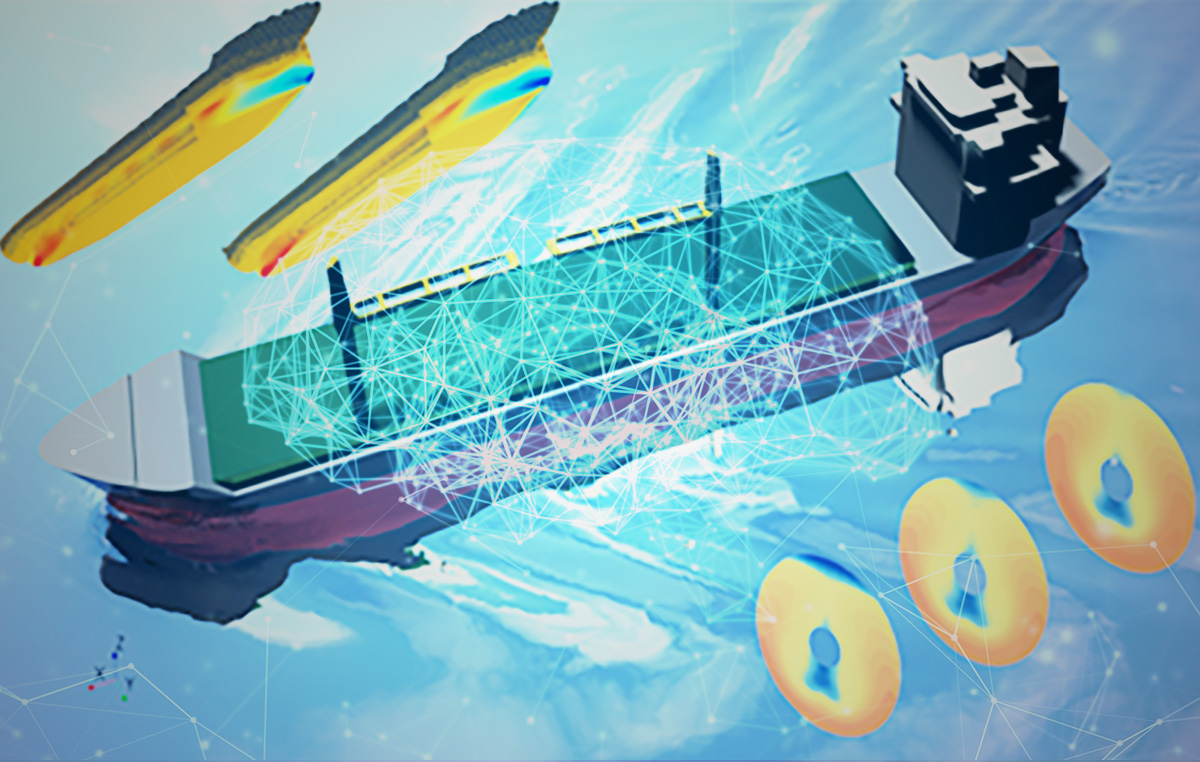

Justin Hodges: You brought up a few good examples, and there are certainly plenty of others, but I can speak briefly to some of the really common themes and use cases. You mentioned recommender systems, where NX workflows are partly automated by AI-based suggestions of what the user would probably do next. That can be applied pretty broadly to simulation, irrespective of what you’re doing. Simulation work usually requires some prep work, the actual setup, and then some post-processing after the simulation is complete. Any of those frequent, repetitive tasks that stay the same from design to design can be automated. And ultimately, a huge source of value for our customers is how fast their pipelines run when they do design work. Automating those sorts of things frees people up to focus on other work and, in some cases, makes the processes go faster. So that’s one category of how AI is being used today, and we’re improving what’s available there. Another is along the same lines as far as time savings. I see a lot of simulations being done where a simpler approach would give relatively good answers: a simple correlation, or a reduced set of equations built on some assumptions. So, some people are using Reduced-Order Models as a surrogate for their actual simulation. And one class of Reduced-Order Models is machine learning-based ROMs. People take some of their existing data and design work and compile it to create a surrogate, or ROM.
In that case, it saves the user quite a bit of time, because they don’t have to run the expensive simulation every time; some of those cases can be predicted easily with these machine learning-based surrogates. So, those are some pretty common applications. And you touched on another one, too, which comes from synthesizing different types of data. You may have real-time data or test data fed back to the engineer to monitor the performance of a given device. Keep in mind that this could be quite a lot of disparate data from a really complex system, like a jet engine, where you may have hundreds of experimental measurements of temperature and pressure. Relating all of that information in real time to the safety of individual parts or the whole system, to performance, and so on, involves complex patterns. So, there’s certainly anomaly detection, and you could say applications of machine learning to field-based problems as well. That can be tied back to simulation, depending on the problem, and that’s where you get into the fun problems where you synthesize different types of data into a common machine learning framework. So, there are a lot of options, but those are some popular ones.
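To make the surrogate idea concrete, here is a minimal sketch in Python using only the standard library. Everything in it is an illustrative assumption: `expensive_simulation` is a hypothetical one-variable stand-in for a real CFD run, and the surrogate is a Gaussian radial-basis-function (RBF) interpolant, one common ROM choice rather than the method used in any particular Siemens product. It runs the "simulation" 11 times, answers 201 design queries from the surrogate alone, and then checks the error against three held-out runs.

```python
import math

# Hypothetical counter so we can see how often the "expensive" model actually runs.
CALLS = {"n": 0}


def expensive_simulation(x: float) -> float:
    """Hypothetical stand-in for a CFD run: one design variable in, one scalar result out."""
    CALLS["n"] += 1
    return math.sin(3.0 * x) + 0.5 * x


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy, inputs untouched
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (m[r][n] - tail) / m[r][r]
    return w


class RBFSurrogate:
    """Gaussian radial-basis-function interpolant fitted to simulation samples."""

    def __init__(self, xs: list[float], ys: list[float], epsilon: float = 10.0):
        self.xs = list(xs)
        self.epsilon = epsilon  # assumed kernel width, picked by hand for this example
        gram = [[self._kernel(xi, xj) for xj in self.xs] for xi in self.xs]
        self.weights = solve(gram, list(ys))

    def _kernel(self, a: float, b: float) -> float:
        return math.exp(-((self.epsilon * (a - b)) ** 2))

    def predict(self, x: float) -> float:
        return sum(w * self._kernel(x, xi) for w, xi in zip(self.weights, self.xs))


# Offline: run the expensive simulation a handful of times to build the surrogate.
train_x = [i / 10 for i in range(11)]
train_y = [expensive_simulation(x) for x in train_x]
surrogate = RBFSurrogate(train_x, train_y)

# Online: sweep 201 design variants using the surrogate only (no new simulations).
sweep = [i / 200 for i in range(201)]
predictions = [surrogate.predict(x) for x in sweep]

# Check the surrogate against a few held-out simulations before trusting its tolerance.
holdout = [0.25, 0.55, 0.85]
max_error = max(abs(surrogate.predict(x) - expensive_simulation(x)) for x in holdout)
print(f"simulations run: {CALLS['n']}, max hold-out error: {max_error:.4f}")
```

The kernel width `epsilon` is hand-picked here; in practice you would tune it, or choose a different model family entirely, by checking the hold-out error against whatever tolerance your application accepts.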

Chad Jackson: So, those are three really good applications: helping you figure out what you want to do next, simplified Reduced-Order Models, and IoT data. Let’s talk about that second one real quick, because it’s the one I hear the most about in the industry, especially in simulation: you can take Reduced-Order Models, apply these algorithms, and then do a lot more with them. So, what would you say is the biggest advantage or benefit of applying that kind of approach with machine learning?

Justin Hodges: It’s a good question. There are a few, and it depends on the scale and the lens you’re looking through. But if you go pretty granular, to the technical side: if you’re doing design work for, say, an aerodynamic component, you may have a pretty vast space of possible designs to explore, in terms of both the operating conditions you would be in and the geometries you would use. What ends up happening is that you have a finite timeline to arrive at the best design, and then also to explore that best design under a number of different circumstances to ensure it’s reliable. So, one thing that’s super useful to have is a Reduced-Order Model that’s within an acceptable tolerance, accurate to within some percentage. Then, instead of running simulations for every variation of the geometry and every variation of the operating condition, you can just query that machine learning-based ROM, or surrogate, in place of the simulation, and it can normally give you the answer in fractions of a second. That way, you can focus on coming up with good designs and, like I said, ensuring that reliability. But it’s also not just about time savings; I’d pitch it as two sides of the same coin. You save time, so what do you do with that time? The other side could be efficient design exploration. You can run a first batch of designs or simulations, build some Reduced-Order Models, and then use them to explore what you should do next. If you keep doing this throughout your design and simulation work, you should be able to arrive more efficiently at the region of the design space that should give you the best performance.
And then you can apply whatever methods you like, maybe legacy methods, optimization, and things like that, whatever tools you’re comfortable with, and spend more time exploring better designs.
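The two-stage workflow described here (a first batch of simulations plus a cheap surrogate to locate the promising region, then a conventional optimizer inside that region) can be sketched as follows. It is a minimal illustration under stated assumptions: the objective `expensive_simulation` is a hypothetical multimodal function of one design variable on [0, 1] where lower is better, the surrogate is a Gaussian RBF interpolant, and golden-section search stands in for whatever local optimizer a team already trusts.

```python
import math

# Hypothetical counter for how many "expensive" runs the workflow spends.
CALLS = {"n": 0}


def expensive_simulation(x: float) -> float:
    """Hypothetical multimodal objective on [0, 1]; lower is better."""
    CALLS["n"] += 1
    return math.sin(10.0 * x) + x


def solve(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def fit_rbf(xs, ys, epsilon):
    """Return a Gaussian-RBF surrogate that interpolates the samples."""
    def kernel(a, b):
        return math.exp(-((epsilon * (a - b)) ** 2))

    weights = solve([[kernel(xi, xj) for xj in xs] for xi in xs], list(ys))

    def predict(x):
        return sum(w * kernel(x, xi) for w, xi in zip(weights, xs))

    return predict


def golden_section_min(f, lo, hi, tol=1e-4):
    """Classic local 1-D minimizer; assumes f is unimodal on [lo, hi]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    c, d = hi - inv_phi * (hi - lo), lo + inv_phi * (hi - lo)
    fc, fd = f(c), f(d)
    while hi - lo > tol:
        if fc < fd:  # minimum lies in [lo, d]; old c becomes the new d
            hi, d, fd = d, c, fc
            c = hi - inv_phi * (hi - lo)
            fc = f(c)
        else:  # minimum lies in [c, hi]; old d becomes the new c
            lo, c, fc = c, d, fd
            d = lo + inv_phi * (hi - lo)
            fd = f(d)
    return (lo + hi) / 2.0


# Stage 1: a first batch of simulations spread over the whole design space.
n_batch = 8
h = 1.0 / (n_batch - 1)
batch_x = [i * h for i in range(n_batch)]
batch_y = [expensive_simulation(x) for x in batch_x]
surrogate = fit_rbf(batch_x, batch_y, epsilon=1.0 / h)

# Cheap global scan: 1001 surrogate evaluations cost no simulations at all.
grid = [i / 1000 for i in range(1001)]
x_promising = min(grid, key=surrogate)

# Stage 2: conventional local optimization on the real model, inside that region only.
lo, hi = max(0.0, x_promising - h), min(1.0, x_promising + h)
x_best = golden_section_min(expensive_simulation, lo, hi)
print(f"best design x = {x_best:.4f} after {CALLS['n']} simulations")
```

For this made-up objective, a purely local search started anywhere between about x = 0.8 and x = 1 would settle at the boundary x = 1 (objective about 0.46), while the global minimum sits near x ≈ 0.461 (objective about -0.53). The surrogate scan is what steers the local search into the right basin.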

Chad Jackson: That’s really interesting. I hadn’t quite thought about it that way before. I know that with really big design spaces, you certainly could use gradient-based optimization routines, but you’re very likely to get stuck in a local minimum: a design that’s really good up in one corner but might not be the global best design. So, it sounds like with this approach, you assess a larger portion of the space, and when you get into a certain area, you can use gradient-based optimization or some other routines. Is that fair?

Justin Hodges: Yeah, that’s a fair statement. Let’s say that in your team, the expertise and best practice is optimization with one or two of your computational fluid dynamics codes, or whatever it is that team does. Well, if I’m designing a component, oftentimes, maybe in automotive, there’s an end stage of the design where it’s run in an experimental facility, such as a very expensive, large-scale wind tunnel. People have a very short window of time to take advantage of that sort of environment and get real data to tie back to their simulations. So, for the car example, it’s just not possible to explore all the best designs at all the different RPMs and all the different fuel-economy targets you’re looking for. In essence, you have to be very, very decisive and specific about what you simulate and how you populate that greater map. So, having a tool that is reliable and accurate enough, and that can explore that space for you in real time, is really invaluable.

Chad Jackson: Absolutely, especially if you’re dealing with highly engineered products. It feels like this would be a really good fit where you have competing or conflicting constraints or requirements. You’re really going to find the sweet spot where it all fits.

Justin Hodges: And basically, some of the best designs, exactly as you put it, may be ruled out by some of those constraints. If I operate at this high-pressure state, then that best design now fails, or it causes some other engineering team to have non-negotiable failures in, say, the thermal performance of the part, even though your team is focused on aerodynamics. That’s what I was alluding to. You throw out the designs that just don’t work under a wide enough breadth of conditions. Then you can take, for example, your bread-and-butter optimization work and focus on the ones that are plausible. And that’s where you see the improvement in your design’s performance, by more quickly selecting and identifying the region of the space you should be designing in.
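The screening step described above (discard every design that fails a constraint anywhere in the operating envelope, then optimize only the survivors) can be sketched in a few lines. All names and numbers are hypothetical: `peak_temperature` stands in for a thermal surrogate, the `drag` values for an aerodynamic one, and the 110 °C limit and pressure envelope are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Design:
    name: str
    drag: float              # aero objective, e.g. from an aero surrogate (lower is better)
    heat_sensitivity: float  # hypothetical: deg C of temperature rise per bar of pressure


def peak_temperature(design: Design, pressure_bar: float) -> float:
    """Hypothetical thermal surrogate: peak part temperature in deg C."""
    return 60.0 + design.heat_sensitivity * pressure_bar


def survives_envelope(design: Design, pressures: list[float], limit_c: float) -> bool:
    """A design is kept only if it meets the thermal limit at every operating point."""
    return all(peak_temperature(design, p) <= limit_c for p in pressures)


def screen_then_rank(designs: list[Design], pressures: list[float], limit_c: float) -> list[Design]:
    """Drop designs that fail anywhere in the envelope, then rank survivors by drag."""
    feasible = [d for d in designs if survives_envelope(d, pressures, limit_c)]
    return sorted(feasible, key=lambda d: d.drag)


candidates = [
    Design("concept_A", drag=0.28, heat_sensitivity=9.0),  # best aero, runs hot at high pressure
    Design("concept_B", drag=0.30, heat_sensitivity=5.0),
    Design("concept_C", drag=0.31, heat_sensitivity=4.0),
    Design("concept_D", drag=0.35, heat_sensitivity=3.0),
]
envelope_bar = [1.0, 2.0, 4.0, 6.0, 8.0]
shortlist = screen_then_rank(candidates, envelope_bar, limit_c=110.0)
print([d.name for d in shortlist])  # ['concept_B', 'concept_C', 'concept_D']
```

Here `concept_A` has the lowest drag but exceeds the limit at 6 and 8 bar, so it never reaches the optimizer; only the plausible designs are handed on.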

Chad Jackson: That’s really great, Justin. So, as a next step, can you give me an example or two where this has been applied and what a company has realized as a result?

Justin Hodges: Absolutely. One recent example came through some of our consulting teams, working with automotive customers. One area that is particularly expensive to simulate, and that slows down the whole design pipeline (we’re always trying to go faster and faster), is the simulation and design of thermal comfort for all the passengers inside the cabin of a car. These are very large models: you can get very intricate and model things outside the cabin, near the hood and so on, to capture how the temperatures would shift. Essentially, we applied machine learning models that could learn from as few as, I think, 50 simulations what the temperature would be at a grid of numerous discrete points throughout the cabin. That way, instead of running simulations, you could have an AI model that was quite accurate at providing the temperature distribution in the cabin, which is basically what’s needed to compute some sort of index of how comfortable the passengers would be. And in this case, the time savings are pretty incredible. The error was under 2% for 75% of the cases, and the training time was on the order of two hours from just 50 simulations. If you think about all the variables, such as the flow rate of the air conditioning and its positions and designs, the ambient temperatures, and the temperatures under the hood, you could easily reach 50 combinations very quickly. So, that’s one application that’s pretty useful: cabin comfort modeling with machine learning-based surrogates.
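Two pieces of the cabin example lend themselves to a small sketch: how quickly a full-factorial design of experiments reaches 50 runs, and how a "share of cases under 2% error" figure can be computed once a surrogate has predicted temperatures at the cabin probe points. The episode does not specify the variables, their levels, or how the error was defined, so everything here is an assumption: the factor levels are invented, and the error is taken as the mean absolute percentage error over probe points, computed on temperatures in kelvin. The test cases are made-up numbers that only exercise the functions; they are not results from the project described.

```python
from itertools import product

# Hypothetical factor levels; the real study's variables and levels are not given.
ac_flow_rates_kg_s = [0.05, 0.07, 0.09, 0.11, 0.13]
ambient_temps_c = [15.0, 20.0, 25.0, 30.0, 35.0]
vent_layouts = ["layout_1", "layout_2"]

# Full-factorial design of experiments: every combination becomes one simulation.
doe = list(product(ac_flow_rates_kg_s, ambient_temps_c, vent_layouts))
print(len(doe))  # 50


def case_error_pct(predicted: list[float], reference: list[float]) -> float:
    """Assumed metric: mean absolute percentage error over one case's probe points (kelvin)."""
    total = sum(abs(p - r) / abs(r) for p, r in zip(predicted, reference))
    return 100.0 * total / len(reference)


def share_within(cases: list[tuple[list[float], list[float]]], threshold_pct: float = 2.0) -> float:
    """Fraction of test cases whose error falls below the threshold."""
    errors = [case_error_pct(pred, ref) for pred, ref in cases]
    return sum(e < threshold_pct for e in errors) / len(errors)


# Made-up (predicted, reference) probe temperatures in kelvin, purely to exercise the code.
reference = [293.0, 295.0, 298.0]
test_cases = [
    ([293.5, 295.0, 298.2], reference),  # about 0.08% error
    ([292.0, 296.0, 298.0], reference),  # about 0.23% error
    ([300.0, 302.0, 305.0], reference),  # about 2.37% error
    ([310.0, 312.0, 315.0], reference),  # about 5.8% error
]
print(share_within(test_cases))  # 0.5
```

Note that a percentage error on temperature depends on the scale it is computed in (kelvin versus degrees Celsius), so any reported figure of this kind is only comparable when the definition is stated.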

Chad Jackson: That was a really good example. I think, as a concept, this is appealing. If a company is interested in taking the next step, maybe trying to figure out if this is the right kind of tool for them, what should they do? What’s the next step there?

Justin Hodges: A fair but less glamorous thing to point out up front, before truly answering the question, is that a lot of this is a practical balance between how quickly a company wants to ramp up such a methodology and how much time and resources it has to accomplish it. We’ve seen some customers have the best success by simply reaching out to a consulting group with expertise in this at the commercial provider. You get walked through it hand in hand and have an environment set up for you, which is obviously the fastest route. But whether it’s from an educational point of view or a research point of view, I would start by saying: first get a basic grasp of some of the textbook theory. You’re not going to crack open a textbook on machine learning and see examples in simulation. So, you’ll basically have to take what you learn in a theoretical context and first get your bearings there: what is a neural network? How does it work? And some basic concepts in statistics. There’s a huge evangelization effort for this knowledge and these fundamentals everywhere on the internet, whether it’s free courses on platforms like Coursera or open resources and lectures from universities; there’s actually an overwhelming amount of good information on this. So, that’s a great starting point. Once you have your bearings in some of the tools, processes, and terms, I think a good thing to do is look at open canonical data. Whatever simulation environment you’re in, there are probably some validation cases that are really common and have been reused over and over again in the literature.
So, maybe get your hands on those, and then just have a shot at applying some of the fundamental machine learning approaches, or the simple models you learn about first, just to see what AI could look like when applied in your industry. Then, as far as how to set up your environment: one of my favorite resources, just because of how quickly you can share information and get going with it, within seconds, is Google Colab. The limitation is that you have to be very careful, because it’s not appropriate for anything other than public data and public information. We’re talking about tutorials and completely open material that you wouldn’t mind other people coming across. It’s a great environment because everything is set up for you; you have the libraries, and you don’t have to worry about installing TensorFlow or any other packages. You simply create an account like you would for Gmail, log in, and there are plenty of examples online that walk you through tutorials in Google Colab. So, I would say the final step is just to plug some completely public data into a tool like that and work through some tutorials.
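As an example of the kind of first exercise described here, the sketch below fits the simplest possible model, a linear regression in log-log space, to a small canonical dataset. As a stand-in for published validation data (an assumption made to keep the block self-contained), the points are generated from the well-known Blasius friction-factor correlation for smooth pipes, f = 0.316 Re^-0.25, which applies roughly for 4,000 < Re < 100,000. It uses only the Python standard library, so it runs as-is in a notebook environment such as Colab.

```python
import math

# Stand-in for open canonical data: points generated from the Blasius correlation
# for the Darcy friction factor in smooth pipes, f = 0.316 * Re**-0.25.
reynolds = [4e3, 1e4, 2e4, 5e4, 1e5]
friction = [0.316 * r ** -0.25 for r in reynolds]


def fit_power_law(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Least-squares fit of y = a * x**b via linear regression in log-log space."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    mx, my = sum(lx) / len(lx), sum(ly) / len(ly)
    b = sum((u - mx) * (v - my) for u, v in zip(lx, ly)) / sum((u - mx) ** 2 for u in lx)
    a = math.exp(my - b * mx)
    return a, b


a, b = fit_power_law(reynolds, friction)
print(f"f ~ {a:.3f} * Re^{b:.3f}")  # f ~ 0.316 * Re^-0.250
```

Because the points come straight from the correlation, the fit recovers it exactly; with real measured or simulated data the points scatter, and comparing the fitted law against the published one is a gentle first check before moving on to neural networks.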

Chad Jackson: It definitely sounds like you need to ramp up your skills and knowledge, especially with these technologies. Really good advice.

Justin Hodges: And it’s such a great time to be able to do that. I would say that from 2017 to 2019, and still now, but really in those first few years, there were major advances in the core subject matter: what algorithms are available, how accurate they are, and how they scale. To this day, there are advances coming out weekly. But for a while, there was a huge push on that front. Then, as that gained momentum, in the last two or three years you’ve seen a huge cross-pollination effort, where the experts are making those techniques, algorithms, and methods more available to other industries and to people who don’t have a PhD in machine learning. That’s the really exciting time we’re in: open products and open tools are made to be approachable by non-experts, and are thus used in industries beyond, say, Natural Language Processing or Computer Vision, so that data such as simulation results can realistically be used with those platforms.

Chad Jackson: That’s excellent. Well, Justin, thanks so much for your time. I really appreciate it. This is a great discussion. Maybe we can have you on again in the future.

Justin Hodges: Yeah, I’d like that. Thanks.

Justin Hodges, our guest

Justin Hodges is a Senior AI/ML specialist and Product Manager at Siemens DISW. He holds a bachelor’s, a master’s and a Ph.D. in Mechanical Engineering, specialising in thermofluids.

Stuff to watch:

- Podcast: Exploring the impact of AI in CFD

- Podcast: The birth of Simcenter

- Ride the digital wave with CFD simulation

Good reads:

- 4 Myths about AI in CFD

- Monolith AI and Simcenter STAR-CCM+ bring machine learning to CFD simulations

Engineer Innovation

A podcast series for engineers by engineers, Engineer Innovation focuses on how simulation and testing can help you drive innovation into your products and deliver the products of tomorrow, today.
